Lecture 6 - Multi-Layer Feedforward Neural Networks Using Matlab Part 2

Uploaded by

Ammar AlkindyOriginal Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

Lecture 6 - Multi-Layer Feedforward Neural Networks Using Matlab Part 2

Uploaded by

Ammar AlkindyCopyright:

Available Formats

Dr. Qadri Hamarsheh

Multi-Layer Feedforward Neural Networks Using MATLAB, Part 2

Examples:

Example 1 (fitting data): Consider the humps function in MATLAB. It is given by

y = 1/((x - 0.3)^2 + 0.01) + 1/((x - 0.9)^2 + 0.04) - 6

Solution (MATLAB code):

%Obtain the data:

x = 0:.05:2; y=humps(x);

P=x; T=y;

%Plot the data

plot (P, T, 'x')

grid; xlabel('time (s)'); ylabel('output'); title('humps function')

%DESIGN THE NETWORK

net = newff([0 2], [5,1], {'tansig','purelin'},'traingd');

% The first argument, [0 2], gives the range of the input and is used to initialize the network.

% The second argument defines the structure of the network: there are two layers.

% 5 is the number of nodes in the hidden layer,

% 1 is the number of nodes in the output layer.

% Next, the activation functions of the layers are defined:

% the hidden layer uses 5 tansig functions,

% the output layer uses 1 linear (purelin) function.

% 'traingd' selects the basic training scheme: gradient descent

% Define learning parameters

net.trainParam.show = 50; % The result is shown at every 50th iteration (epoch)

net.trainParam.lr = 0.05; % Learning rate used in some gradient schemes

net.trainParam.epochs =1000; % Max number of iterations

net.trainParam.goal = 1e-3; % Error tolerance; stopping criterion

%Train network

net1 = train(net, P, T); % Runs the iterative gradient-descent training loop

% The resulting network is stored in net1

% The convergence curve (error versus epoch) is plotted during training.

% Simulate how good a result is achieved: Input is the same input vector P.

% Output is the output of the neural network, which should be compared with output data

a= sim(net1,P);

% Plot result and compare

plot (P, a-T, P,T); grid;
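The roles of lr, epochs and goal set through net.trainParam can be seen in a much smaller setting. The sketch below runs batch gradient descent on a single linear neuron without the toolbox. The data, the model a = w*p + b and the update scaling are assumptions made for illustration; this is not the internal implementation of traingd.

```matlab
% Toolbox-free sketch of batch gradient descent on one linear neuron.
% Data and model are hypothetical; only the roles of lr, epochs and goal
% mirror the net.trainParam fields used in the listing.
p = 0:0.1:1;            % inputs
t = 2*p + 1;            % targets taken from a known line
w = 0; b = 0;           % initial weight and bias
lr = 0.05;              % learning rate
epochs = 1000;          % maximum number of iterations
goal = 1e-3;            % stop once the mean squared error is this small
for k = 1:epochs
    e = t - (w*p + b);              % batch error for the current parameters
    perf = mean(e.^2);              % mean squared error
    if perf <= goal
        break;                      % goal reached before the epoch limit
    end
    w = w + lr * 2 * mean(e .* p);  % step against the MSE gradient w.r.t. w
    b = b + lr * 2 * mean(e);       % step against the MSE gradient w.r.t. b
end
fprintf('stopped at epoch %d, mse = %.4g, w = %.3f, b = %.3f\n', k, perf, w, b);
```

A larger lr shortens the run until the steps overshoot and the error grows; a smaller goal or a lower epoch limit changes which stopping criterion fires first.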

The fit is quite poor. To improve it:

Increase the size of the network (more hidden neurons):

net=newff([0 2], [20,1], {'tansig','purelin'},'traingd');

Improve the performance of the training algorithm, or change the algorithm altogether.


Try Levenberg-Marquardt – trainlm (more efficient training algorithm)

net=newff([0 2], [10,1], {'tansig','purelin'},'trainlm');

On this problem the Levenberg-Marquardt (L-M) algorithm converges significantly faster than basic gradient-descent back-propagation (traingd), which makes it the preferable method here.

Try simulating with independent data.

x1=0:0.01:2; P1=x1;y1=humps(x1); T1=y1;

a1= sim (net1, P1);

plot (P1,a1, 'r', P1,T1, 'g', P,T, 'b', P1, T1-a1, 'y');

legend ('a1','T1','T','Error');
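The comparison above judges the network on a grid five times finer than the training grid. The sketch below shows only that evaluation step and runs without the toolbox. It assumes the closed form of humps given in the MATLAB documentation, and it uses linear interpolation of the training data as a hypothetical stand-in for sim(net1, P1), so the printed numbers say nothing about the real network.

```matlab
% Closed form of humps, written out so the sketch does not call humps() itself
humps_fn = @(x) 1 ./ ((x - 0.3).^2 + 0.01) + 1 ./ ((x - 0.9).^2 + 0.04) - 6;

x = 0:0.05:2;   P = x;   T = humps_fn(x);     % training grid, 41 points
x1 = 0:0.01:2;  P1 = x1; T1 = humps_fn(x1);   % finer test grid, 201 points

% Stand-in for a1 = sim(net1, P1): linear interpolation of the training data
% (hypothetical model, used only to have something to evaluate)
a1 = interp1(P, T, P1, 'linear', 'extrap');

err = T1 - a1;                 % error on the independent data
maxErr = max(abs(err));        % worst-case error magnitude
rmsErr = sqrt(mean(err.^2));   % root-mean-square error
fprintf('max |error| = %.4g, rms error = %.4g\n', maxErr, rmsErr);
```

Reporting both the maximum and the RMS error helps separate a fit that is poor everywhere from one that only misses the sharp peak near x = 0.3.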

Example 2: Consider a surface described by z = cos(x) sin(y), defined on the square -2 ≤ x ≤ 2, -2 ≤ y ≤ 2. Plot the surface z as a function of x and y, and design a neural network that fits the data. Study different alternatives and test the final result by examining the fitting error.

Solution

%Generate data

x = -2:0.25:2; y = -2:0.25:2;

z = cos(x)'*sin(y);

%Draw the surface

mesh (x, y, z)

xlabel ('x axis'); ylabel ('y axis'); zlabel ('z axis');

title('surface z = cos(x)sin(y)');

gi=input('Strike any key ...');

%Store data in input matrix P and output vector T

P = [x; y]; T = z;

%Create and initialize the network

net=newff ([-2 2; -2 2], [25 17], {'tansig' 'purelin'},'trainlm');

%Apply Levenberg-Marquardt algorithm

%Define parameters

net.trainParam.show = 50;

net.trainParam.lr = 0.05;

net.trainParam.epochs = 300;

net.trainParam.goal = 1e-3;

%Train network

net1 = train (net, P, T);

gi=input('Strike any key ...');

%Plot how the error develops

%Simulate the response of the neural network and draw the surface

a= sim(net1,P);

mesh (x, y, a)

% Error surface

mesh (x, y, a-z)

xlabel ('x axis'); ylabel ('y axis'); zlabel ('Error'); title ('Error surface')

gi=input('Strike any key to continue......');

% Maximum fitting error

Maxfiterror = max(max(abs(z - a))); % largest absolute error (the lecture reports 0.1116 for max(max(z-a)))
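In the listing above, P holds 17 columns of the form (x(i), y(i)) and each target column is a whole slice of the surface, which is why the output layer has 17 neurons. A different data layout, sketched here under the assumption that a single-output network evaluated at arbitrary (x, y) is wanted, uses one column per grid point. The sketch only builds the data and needs no toolbox; the variable names X, Y and Z are introduced here and do not appear in the lecture.

```matlab
% Sketch: one training sample per grid point (an alternative layout,
% not the one used in the lecture listing)
x = -2:0.25:2; y = -2:0.25:2;
[X, Y] = meshgrid(x, y);     % X(i,j) = x(j), Y(i,j) = y(i)
Z = cos(X) .* sin(Y);        % Z(i,j) = cos(x(j)) * sin(y(i))
P = [X(:)'; Y(:)'];          % 2 x 289 inputs, one column per grid point
T = Z(:)';                   % 1 x 289 targets, same column order as P
disp(size(P)); disp(size(T));
```

With this layout the network would have two inputs and one output neuron, and a simulated output row can be turned back into a surface with reshape(a, size(Z)).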


Example 3

Here a perceptron is created with a 1-element input ranging from -10 to 10,

and one neuron.

net = newp ([-10 10],1);

Here the network is given a batch of inputs P. The error is calculated by

subtracting the output Y from target T. Then the mean absolute error is

calculated.

P = [-10 -5 0 5 10];

T = [0 0 1 1 1];

Y = sim (net, P)

E = T-Y;

perf = mae (E) ;
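The same computation can be followed by hand. The sketch below assumes that the freshly created perceptron has zero weight and zero bias and uses the hardlim transfer function (output 1 when the net input is at least 0, otherwise 0); it runs without the toolbox.

```matlab
% Toolbox-free sketch of the perceptron example, assuming zero initial
% weight and bias and a hardlim transfer function
w = 0; b = 0;
P = [-10 -5 0 5 10];
T = [0 0 1 1 1];
Y = double(w*P + b >= 0);   % hardlim applied to the net input
E = T - Y;                  % error: target minus output
perf = mean(abs(E));        % mean absolute error, the quantity mae(E) reports
disp(Y); disp(perf);
```

Under these assumptions every net input is 0, so Y is all ones, the first two samples are misclassified, and the mean absolute error is 2/5 = 0.4.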

Example 4

Here a two-layer feed-forward network is created with a 1-element input

ranging from -10 to 10, four hidden tansig neurons, and one purelin

output neuron.

net = newff ([-10 10],[4 1],{'tansig','purelin'});

Here the network is given a batch of inputs P. The error is calculated by

subtracting the output Y from target T. Then the mean squared error is

calculated.

P = [-10 -5 0 5 10];

T= [0 0 1 1 1];

Y = sim(net,P)

E = T-Y

perf = mse(E)
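What sim and mse compute for this 1-4-1 network can be written out directly. In the sketch below the weights and biases W1, b1, W2 and b2 are hypothetical values picked for illustration (newff chooses its own initial values), and tansig_fn is a local name for the tansig formula 2/(1 + exp(-2n)) - 1.

```matlab
% Hand-computed forward pass of a 1-4-1 network (tansig hidden layer,
% purelin output). All weights and biases below are hypothetical.
tansig_fn = @(n) 2 ./ (1 + exp(-2*n)) - 1;   % same curve as tanh(n)
W1 = [0.5; -0.3; 0.8; 0.1];   % 4x1 hidden-layer weights
b1 = [0; 0.2; -0.5; 1];       % 4x1 hidden-layer biases
W2 = [1 -1 0.5 0.25];         % 1x4 output-layer weights
b2 = 0.1;                     % output-layer bias
P = [-10 -5 0 5 10];
T = [0 0 1 1 1];
A1 = tansig_fn(W1*P + repmat(b1, 1, numel(P)));   % 4x5 hidden activations
Y = W2*A1 + b2;               % purelin passes the net input through unchanged
E = T - Y;                    % error: target minus output
perf = mean(E(:).^2);         % mean squared error, the quantity mse(E) reports
disp(Y); disp(perf);
```

Because the output layer is linear, Y is not confined to the range of tansig, which is what lets such a network fit targets of any magnitude.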

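Example 5: Here a network with 20 hidden neurons is created for the house_dataset sample data, trained, and then evaluated on the same data.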

load house_dataset;

inputs = houseInputs;

targets = houseTargets;

net = newff (inputs, targets, 20);

net = train(net, inputs, targets);

outputs = sim(net, inputs)

% Equivalent call in newer toolbox versions: outputs1 = net(inputs);

errors = outputs - targets;

perf = perform(net, targets, outputs) % perform expects targets first, then outputs

You might also like

- NN Examples MatlabDocument91 pagesNN Examples MatlabAnonymous 1dVLJSVhtrNo ratings yet

- Inglés Instrumental. Actividad Unidad 4.Document3 pagesInglés Instrumental. Actividad Unidad 4.Carlos Castro100% (1)

- Summary - Five Dysfunctions of A TeamDocument2 pagesSummary - Five Dysfunctions of A TeamPoch BajamundeNo ratings yet

- Neural Lab 1Document5 pagesNeural Lab 1Bashar AsaadNo ratings yet

- Lecture 7 - Perceptrons and Multi-Layer Feedforward Neural Networks Using Matlab Part 3Document6 pagesLecture 7 - Perceptrons and Multi-Layer Feedforward Neural Networks Using Matlab Part 3Ammar AlkindyNo ratings yet

- Neural Network Tool in MatlabDocument10 pagesNeural Network Tool in MatlabGitanjali ChalakNo ratings yet

- Introduction To Matlab Neural Network ToolboxDocument10 pagesIntroduction To Matlab Neural Network ToolboxLastri WidyaNo ratings yet

- NEURAL NETWORKS: Basics using MATLAB Neural Network ToolboxDocument54 pagesNEURAL NETWORKS: Basics using MATLAB Neural Network ToolboxBob AssanNo ratings yet

- Lab Manual Soft ComputingDocument44 pagesLab Manual Soft ComputingAnuragGupta100% (1)

- NN ExamplesDocument91 pagesNN Examplesnguyenhoangan.13dt2No ratings yet

- Tutorial Questions On Fuzzy Logic 1. A Triangular Member Function Shown in Figure Is To Be Made in MATLAB. Write A Function. Answer: CodeDocument13 pagesTutorial Questions On Fuzzy Logic 1. A Triangular Member Function Shown in Figure Is To Be Made in MATLAB. Write A Function. Answer: Codekiran preethiNo ratings yet

- Mix Achar Numerical MatlabDocument4 pagesMix Achar Numerical MatlabMuhammad MaazNo ratings yet

- Neural Networks: MATLABDocument91 pagesNeural Networks: MATLABlaerciomosNo ratings yet

- Neural Networks - Basics Matlab PDFDocument59 pagesNeural Networks - Basics Matlab PDFWesley DoorsamyNo ratings yet

- Matlab Instruction RBFDocument22 pagesMatlab Instruction RBFmechernene_aek9037No ratings yet

- Rosenbrock Function Optimization Using Gradient DescentDocument27 pagesRosenbrock Function Optimization Using Gradient DescentlarasmoyoNo ratings yet

- Classification Problem: Feedforwardnet Patternnet FitnetDocument16 pagesClassification Problem: Feedforwardnet Patternnet FitnetAmartya KeshriNo ratings yet

- Lab PerceptDocument2 pagesLab PerceptAnibal Raynol Medrano ValeraNo ratings yet

- Matlab Practical FileDocument19 pagesMatlab Practical FileAmit WaliaNo ratings yet

- Artificial Neural Networks For BeginnersDocument15 pagesArtificial Neural Networks For BeginnersAnonymous Ph4xtYLmKNo ratings yet

- Daracomm Lab1 06Document10 pagesDaracomm Lab1 06Abi ShresthaNo ratings yet

- Matlab Newton-Raphson Root Finding CodeDocument2 pagesMatlab Newton-Raphson Root Finding CodeDeema AbuzaidNo ratings yet

- Digital Signal ProcessingDocument20 pagesDigital Signal Processingusamadar707No ratings yet

- Recursive Least Squares (RLS) AlgorithmDocument6 pagesRecursive Least Squares (RLS) AlgorithmRobi KhanNo ratings yet

- Secant and Modify Secant Method: Prum Sophearith E20170706Document11 pagesSecant and Modify Secant Method: Prum Sophearith E20170706rithNo ratings yet

- Lecture 14 - Associative Neural Networks Using MatlabDocument6 pagesLecture 14 - Associative Neural Networks Using MatlabAmmar AlkindyNo ratings yet

- Exercise 1 Instruction PcaDocument9 pagesExercise 1 Instruction PcaHanif IshakNo ratings yet

- COMPARING NUMERICAL METHODSDocument8 pagesCOMPARING NUMERICAL METHODSSohaib Raja RajaNo ratings yet

- Metode NumerikDocument48 pagesMetode NumerikKang MasNo ratings yet

- Neural Network Digit RecognitionDocument15 pagesNeural Network Digit RecognitionPaolo Del MundoNo ratings yet

- MATLAB Functions for DSPDocument21 pagesMATLAB Functions for DSPRobyNo ratings yet

- Digital Signal ProcessingDocument23 pagesDigital Signal ProcessingSanjay PalNo ratings yet

- 8.integration by Numerical MethodDocument7 pages8.integration by Numerical Methodمحمد اسماعيل يوسفNo ratings yet

- 13 - Chapter 5 PDFDocument40 pages13 - Chapter 5 PDFBouchouicha KadaNo ratings yet

- ASNM Program ExplainDocument4 pagesASNM Program ExplainKesehoNo ratings yet

- Narx Model NN 0 MatlabDocument8 pagesNarx Model NN 0 MatlabSn ProfNo ratings yet

- Introduction to NETLAB Neural Network ToolboxDocument10 pagesIntroduction to NETLAB Neural Network ToolboxBhaumik BhuvaNo ratings yet

- Index: S.No Title RemarksDocument25 pagesIndex: S.No Title RemarksVishvajeet YadavNo ratings yet

- Tutoriales Control NeurodifusoDocument47 pagesTutoriales Control NeurodifusoGaby ZúñigaNo ratings yet

- Lab # 1 Introduction To Communications Principles Using MatlabDocument22 pagesLab # 1 Introduction To Communications Principles Using Matlabzain islamNo ratings yet

- Lab 01 Bisection Regula-Falsi and Newton-RaphsonDocument12 pagesLab 01 Bisection Regula-Falsi and Newton-RaphsonUmair Ali ShahNo ratings yet

- Tutorial NN ToolboxDocument7 pagesTutorial NN Toolboxsaurabhguptaengg1540No ratings yet

- Matlab TutorialDocument18 pagesMatlab TutorialUmair ShahidNo ratings yet

- Matlab CodesDocument92 pagesMatlab Codesonlymag4u75% (8)

- A Brief Introduction to MATLAB: Taken From the Book "MATLAB for Beginners: A Gentle Approach"From EverandA Brief Introduction to MATLAB: Taken From the Book "MATLAB for Beginners: A Gentle Approach"Rating: 2.5 out of 5 stars2.5/5 (2)

- Integer Optimization and its Computation in Emergency ManagementFrom EverandInteger Optimization and its Computation in Emergency ManagementNo ratings yet

- Advanced C Concepts and Programming: First EditionFrom EverandAdvanced C Concepts and Programming: First EditionRating: 3 out of 5 stars3/5 (1)

- Nonlinear Control Feedback Linearization Sliding Mode ControlFrom EverandNonlinear Control Feedback Linearization Sliding Mode ControlNo ratings yet

- Waste Incinerator Operation ManualDocument8 pagesWaste Incinerator Operation ManualAmmar AlkindyNo ratings yet

- H - T100t-P Manual Hadware PDFDocument29 pagesH - T100t-P Manual Hadware PDFjarpeasvNo ratings yet

- Elco 290320Document32 pagesElco 290320Ammar AlkindyNo ratings yet

- System Overview: Burnertronic Bt300 Bt320 ... Bt341Document8 pagesSystem Overview: Burnertronic Bt300 Bt320 ... Bt341cristiandresNo ratings yet

- HFY3-3690-00-ELE-ITP-0001 - 0 ITP For Electrical Inspection and Test PlanDocument97 pagesHFY3-3690-00-ELE-ITP-0001 - 0 ITP For Electrical Inspection and Test PlanAmmar AlkindyNo ratings yet

- Nise106 N3160 PDFDocument2 pagesNise106 N3160 PDFAmmar AlkindyNo ratings yet

- Transformer Installation MethodDocument12 pagesTransformer Installation MethodAmmar Alkindy100% (1)

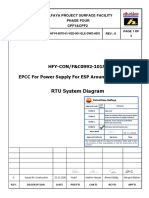

- HFY4-5070-01-VED-001-ELE-DWG-6001 - 0-RTU System Diagram - Code-D PDFDocument3 pagesHFY4-5070-01-VED-001-ELE-DWG-6001 - 0-RTU System Diagram - Code-D PDFAmmar AlkindyNo ratings yet

- Pi SPHW E4 220624 1Document1 pagePi SPHW E4 220624 1Ammar AlkindyNo ratings yet

- SPRT BrochureDocument23 pagesSPRT BrochureAmmar AlkindyNo ratings yet

- Digital I/O (Part 2): Keypads, LCDs and EEPROMDocument4 pagesDigital I/O (Part 2): Keypads, LCDs and EEPROMAmmar AlkindyNo ratings yet

- SP Rmdiiid Divd Users Manual101Document61 pagesSP Rmdiiid Divd Users Manual101Ammar AlkindyNo ratings yet

- ELCO Heating Catalogue ENGDocument208 pagesELCO Heating Catalogue ENGAmmar AlkindyNo ratings yet

- Nise106 N3160 PDFDocument2 pagesNise106 N3160 PDFAmmar AlkindyNo ratings yet

- HW2Document1 pageHW2Ammar AlkindyNo ratings yet

- Digital I/O (Part 2): Keypads, LCDs and EEPROMDocument4 pagesDigital I/O (Part 2): Keypads, LCDs and EEPROMAmmar AlkindyNo ratings yet

- HFY4-5070-01-VED-001-ELE-DWG-6002 - E-RTU Panel Electrical Diagram-Code D PDFDocument14 pagesHFY4-5070-01-VED-001-ELE-DWG-6002 - E-RTU Panel Electrical Diagram-Code D PDFAmmar AlkindyNo ratings yet

- Modifications: Before Modification After Modification Set - Tris - B (0x7F) Pin - b7Document7 pagesModifications: Before Modification After Modification Set - Tris - B (0x7F) Pin - b7Ammar AlkindyNo ratings yet

- Proteus Simulation Program: FIG 1: PIC C Start Screen. FIG 2: The Body of Any Program. FIG 3: Compile ConfirmationDocument5 pagesProteus Simulation Program: FIG 1: PIC C Start Screen. FIG 2: The Body of Any Program. FIG 3: Compile ConfirmationAmmar Alkindy100% (1)

- ADC Module: VoltageDocument5 pagesADC Module: VoltageAmmar AlkindyNo ratings yet

- 3 Class Micrcontroller Dr. Mohanad & Dr. Tariq Homework (1) : IntroductionDocument1 page3 Class Micrcontroller Dr. Mohanad & Dr. Tariq Homework (1) : IntroductionAmmar AlkindyNo ratings yet

- Ctive Ilters: Chapter Outline Application Activity PreviewDocument6 pagesCtive Ilters: Chapter Outline Application Activity PreviewAmmar AlkindyNo ratings yet

- ch1 Introduction To IoTDocument27 pagesch1 Introduction To IoTAmmar AlkindyNo ratings yet

- Chapter 3. Smart Objects: The "Things" in IotDocument18 pagesChapter 3. Smart Objects: The "Things" in IotAmmar AlkindyNo ratings yet

- Ch4 Connecting Smart ObjectsDocument31 pagesCh4 Connecting Smart ObjectsAmmar AlkindyNo ratings yet

- Ch5. IOT Routing ProtocolsDocument52 pagesCh5. IOT Routing ProtocolsAmmar AlkindyNo ratings yet

- Microcontroller vs Microprocessor: Key DifferencesDocument4 pagesMicrocontroller vs Microprocessor: Key DifferencesAmmar AlkindyNo ratings yet

- COOJA Network Simulator: Exploring The Infinite Possible Ways To Compute The Performance Metrics of IOT Based Smart Devices To Understand The Working of IOT Based Compression & Routing ProtocolsDocument7 pagesCOOJA Network Simulator: Exploring The Infinite Possible Ways To Compute The Performance Metrics of IOT Based Smart Devices To Understand The Working of IOT Based Compression & Routing ProtocolsMohamed Hechmi JERIDINo ratings yet

- Fuzzy Membership Functions Fuzzy Operations: Fuzzy Union Fuzzy Intersection Fuzzy ComplementDocument21 pagesFuzzy Membership Functions Fuzzy Operations: Fuzzy Union Fuzzy Intersection Fuzzy ComplementAmmar AlkindyNo ratings yet

- Neural NetworksDocument109 pagesNeural NetworksDerrick mac GavinNo ratings yet

- MBTI IranDocument8 pagesMBTI IranEliad BeckerNo ratings yet

- Compiler Design Unit 2Document117 pagesCompiler Design Unit 2Arunkumar PanneerselvamNo ratings yet

- Tutorial Stress and IntonationDocument5 pagesTutorial Stress and IntonationTSL10621 NUR ADLEEN HUSNA BINTI AZMINo ratings yet

- English Debate Script: "The Impact of Social Networking For Students"Document5 pagesEnglish Debate Script: "The Impact of Social Networking For Students"Arlan TogononNo ratings yet

- When I Teach What Should I DoDocument2 pagesWhen I Teach What Should I Dokamille gadinNo ratings yet

- Characteristics of Artificial Neural NetworksDocument38 pagesCharacteristics of Artificial Neural NetworkswowoNo ratings yet

- Stepping Out of Social Anxiety - Module 2 - Overcoming Negative ThinkingDocument8 pagesStepping Out of Social Anxiety - Module 2 - Overcoming Negative Thinkingaphra.agabaNo ratings yet

- Brand Management: Assignment - 2Document8 pagesBrand Management: Assignment - 2POOJA AGARWAL BMS2019No ratings yet

- 2022 - Knowledge Management and Digital Transformation For Industry 4.0 A Structured Literature ReviewDocument20 pages2022 - Knowledge Management and Digital Transformation For Industry 4.0 A Structured Literature ReviewmonimawadNo ratings yet

- Ielts Tips WritingDocument14 pagesIelts Tips Writingsanjeevk6No ratings yet

- University of West Alabama 5E Lesson Plan TemplateDocument7 pagesUniversity of West Alabama 5E Lesson Plan Templateapi-431101433No ratings yet

- Cip Lesson Plan TemplateDocument2 pagesCip Lesson Plan Templateapi-245889774No ratings yet

- Kiribati InternshipDocument17 pagesKiribati Internshipapi-376421690No ratings yet

- COMSATS Internship Evaluation FormDocument3 pagesCOMSATS Internship Evaluation FormMuhammad Muavia KhanNo ratings yet

- Nature and ProcessDocument10 pagesNature and ProcessShashankNo ratings yet

- Chisholm Human Freedom and The SelfDocument25 pagesChisholm Human Freedom and The SelfJean BenoitNo ratings yet

- Understanding contemporary Philippine artsDocument7 pagesUnderstanding contemporary Philippine artsMary Joy GeraldoNo ratings yet

- How to use prepositions in English grammar (on, at, in, of, forDocument3 pagesHow to use prepositions in English grammar (on, at, in, of, formahamnadirminhasNo ratings yet

- AdosDocument11 pagesAdoseducacionchileNo ratings yet

- Approaches To Classroom ManagementDocument10 pagesApproaches To Classroom ManagementIris De Leon0% (1)

- Field Study 1: Hannah NovioDocument11 pagesField Study 1: Hannah NovioJeffrey MendozaNo ratings yet

- Debt of GratitudeDocument26 pagesDebt of GratitudeMelinda YunJae CassiopeiaBigeastNo ratings yet

- Kutztown University Elementary Education Department Professional Semester Program Lesson Plan FormatDocument4 pagesKutztown University Elementary Education Department Professional Semester Program Lesson Plan Formatapi-271173063No ratings yet

- Parts of Speech-ADJECTIVESDocument14 pagesParts of Speech-ADJECTIVESAlfian IskandarNo ratings yet

- Emilys Formal Observation 4 WorksheetDocument2 pagesEmilys Formal Observation 4 Worksheetapi-347062025No ratings yet

- Teacher Note Answer Key: Vocabulary LaddersDocument6 pagesTeacher Note Answer Key: Vocabulary LaddersfairfurNo ratings yet

- PbisDocument36 pagesPbisapi-257903405No ratings yet

- PTK RecountDocument115 pagesPTK RecountT. Wisnu Warnia WrNo ratings yet