SEWPZG628T – Dissertation Finance Transformation – TARDIS

FINANCE TRANSFORMATION

TARDIS (Transparent Actuarial Reporting Database Insight – UNISURE)

SEWP ZG628T DISSERTATION

Final Semester Dissertation Report

By:

Sagar Agrawal

(2013HW70753)

Dissertation work carried out at

Wipro Technologies, PUNE

BIRLA INSTITUTE OF TECHNOLOGY AND SCIENCE

PILANI, (RAJASTHAN) INDIA – 333031

November, 2017

Bits ID: 2013HW70753 Page 1 of 56


SEWP ZG628T DISSERTATION

FINANCE TRANSFORMATION

Submitted in partial fulfillment of the requirements of

M. Tech Software Engineering Degree Program

By:

Sagar Agrawal

(2013HW70753)

Under the Supervision of

Priyanka Katare, Technical Lead

Wipro Technologies, Pune

BIRLA INSTITUTE OF TECHNOLOGY AND SCIENCE

PILANI, (RAJASTHAN) – 333031

November, 2017


BIRLA INSTITUTE OF TECHNOLOGY AND SCIENCE

Pilani (Rajasthan) India

SEWP ZG628T DISSERTATION

First Semester 2017-2018

Dissertation Title : Finance Transformation

Name of Supervisor : Priyanka Katare

Name of Student : Sagar Agrawal

BITS ID of Student : 2013HW70753

Abstract:

This document is a Finance Transformation deliverable and represents the interface macro design for UNISURE, covering the movement and transformation of data from the UNISURE source system extract through to the Persistent Actuarial Database (PAD) for the Product Families Unitized NP Pensions, Unitized WP Pensions and Term Assurance. It will serve as the major source input into the development of the off-shore technical specification for the same functional scope.


Acknowledgements

I am highly indebted to Priyanka Katare for all the guidance and constant

supervision as well as for providing necessary information regarding the project &

also for the support in completing the project.

I would like to express my gratitude to my examiners, Miss Sona Jain and Mr. Naman Pandey, for their kind co-operation and encouragement, which helped me complete this project.

I would like to thank Wipro Technologies for providing me with the necessary infrastructure to undertake and complete the project, and BITS Pilani for providing me with the platform to undertake the project and carry forward the vision.

My thanks and appreciation also go to my peers for helping me develop the project and for giving me their attention and time.


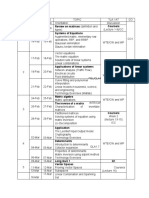

Version History:

| Version | Status | Date | Author | Summary of Changes |
| --- | --- | --- | --- | --- |
| 1.0 | Draft | 10/8/2017 | Sagar Agrawal | Initial Version |
| 1.1 | Draft | 10/9/2017 | Sagar Agrawal | Layout changes and more description added as per reviewer comments. |
| 1.2 | Draft | 19/11/2017 | Sagar Agrawal | Final term context added, review comments added. |

Reviewer(s):

| Name | Role | Organization |
| --- | --- | --- |
| Sona Jain | Senior Project Engineer | Wipro Technologies |
| Naman Pandey | Senior Project Engineer | Teradata |


Contents

1 Introduction
1.1 Purpose
1.2 Business Background
1.3 Approach
1.4 Scope
2 High Level Macro Design
2.1 Assumptions
2.2 Design Decisions
2.1 High Level System Architecture
2.2 ETL Architecture
2.3 List of Figures
2.4 Open Issues at Submission of This Draft
2.5 Environment Definition
3 Tools Used
4 Version Control Process
5 Archival Strategy
6 Source System Dependency
7 Non-Functional Requirements
8 Source Specific macro design
8.1 Data Flow Diagram
8.2 Pre and Post Processing Requirements
9 Source Data
9.1 Source Data Specification
9.2 Extract Process
9.3 Staging Source Data
9.4 Source Data Dictionary
9.5 Identify the Movement Logic
10 PAD TO MPF generation for Unisure
10.1 MPF generation Business Overview
10.2 PAD TO MPF END TO END Architecture
10.3 Detail Architecture and flow for PAD TO MPF
10.4 Inforce criterion for Unisure Source System
10.5 Types of MPF file generated
10.6 ETL Architecture Closure look
11 Error Handling
11.1 Source to Staging
11.2 Staging to PAD
11.3 PAD TO MPF Error Handling
11.4 Error Notification Approach
12 Database Overview
13 Data mapping and business rules
14 References
15 Appendix
16 Plan of Work
17 Check List of Items


1 Introduction

1.1 Purpose

This document is a Finance Transformation deliverable and represents the interface macro design for UNISURE, covering the movement and transformation of data from the UNISURE source system extract through to the Persistent Actuarial Database (PAD) for the Product Families Unitized NP Pensions, Unitized WP Pensions and Term Assurance. It will serve as the major source input into the development of the off-shore technical specification for the same functional scope.

This macro design is one of a number of Finance Transformation designs ultimately concerned

with the production of in-force and movement Model Point Files (MPFs) for consumption by

the Prophet application. For simplicity, the scope of this particular design is highlighted in

yellow in the diagram below:

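[Figure 1: Holistic view of UNISURE in the Finance Transformation solution. Source systems shown: P1L70 (Product Families 1 to 3), P1L70 (Product Families 4 to 13), UNISURE, AR, Paymaster (Refresh), Alpha, Administrator and other sources (manual policy feeds). These feed the PAD, which produces the In-Force and Movement Model Point Files for Prophet.]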

This design therefore covers the extraction, transformation and loading of data items from the

UNISURE extracts into the target PAD database. The data transformed and stored is that

required to fulfil the stated requirements of the Finance Transformation Prophet Enhancement

team for the valuation of Product Families Unitized NP Pensions, Unitized WP Pensions and

Term Assurance.


The onward transformation of UNISURE data into Model Point Files (MPFs) for consumption by

Prophet will be covered within a separate macro design.

1.2 Business Background

CUSTOMER has outlined a strategic intent to significantly grow and develop its business. This in

turn means that CUSTOMER Finance must change, if it is to provide the right level of service

and support along the agreed dimensions.

To meet this challenge, CUSTOMER is planning to transform its Finance function and the way it

serves its customers. The Finance Transformation programme has been established to address

this need through delivering the following outcomes.

Deliver a significant reduction in reporting cycle times;

Develop deep capability in business partnering and strategic business insight;

Re-engineer processes to create clearer accountability, reduce duplication and drive

efficiency and effectiveness improvements;

Significantly simplify very complex data feeds, and so reduce complexity in Prophet modelling and reduce reconciliation effort; and

Restructure the organization to facilitate service quality improvement and cost

reductions and create an inspirational finance leadership team that actively engages

with its people, prioritizes people development and customer service.

The PAD forms a key foundation of the Solution Architecture developed to support these programme outcomes, providing:

A single source of data for in-scope actuarial valuation and reporting

Data transformations which are documented, agreed and visible to the business via Informatica's Metadata Manager tool

A logical and physical data model as a representation of business requirements as

opposed to disparate source data structures

The product families for which Unisure data is delivered as Model Point Files are shown below.


Figure 2

1.3 Approach

This macro design has been generated from the following primary inputs:

1 – The UNISURE source extracts specification.

2 – The specification of required signed off business logic based on stakeholder engagement

with the Finance Data Team and consequent validation with the reporting teams

3 – The representation of that business logic in ETL mappings, applied using the following

design principles to the physical and logical models of the PAD and its data transformations:

| Principle | Explanation | Where Applied |
| --- | --- | --- |
| The PAD is a model of business requirements | Only required data will be maintained in the PAD, not all available source data. The PAD represents a model of expressed business requirements. | PAD PDM |
| Transformed source data will be stored in the PAD | If policy level data is computed (e.g. aggregations, selection, etc.) from the transactional records (cash flows, movements, history, etc.), the transformation will be performed in the inbound ETL and the computed value will be stored in the PAD. | PAD PDM, Source to PAD ETL |
| Historic source data will not be preserved in the PAD | Source extract data will be held in the inbound staging area for the minimum period based on business requirements. Typically this will be a maximum of 2 months (where month to month deltas are required in order to infer movements). The PAD will build history and will preserve historic positions. This is not the same as storing historic source extracts. | Source extract staging |
| All specific Prophet-related transformations/adjustments will be performed between PAD and outbound MPF staging | All the Prophet-related transformations/adjustments will be performed between PAD and outbound staging. On occasions where a DataMart and Prophet wish to receive the same computed value, we should store the data both before and after transformation (increasing storage, but improving data lineage etc.). | PAD to Prophet ETL |
| Frequently changing mappings should be soft-coded using reference data lookup tables where possible | The use of lookup tables allowing soft-coded business rules will make the system easier to maintain. Exceptions to this rule may be made where there is a performance consideration. | PAD PDM (reference data), Source to PAD ETL, PAD to Prophet ETL |

4 – Specific design activities in accordance with the principles listed above to determine a) the physical PAD model, and b) whether a particular piece of logic should be applied between source extract and PAD or between PAD and MPF. The scope of this design excludes PAD to MPF data transformations for now; these will be included in the coming weeks.
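The soft-coding principle listed among the design principles above can be illustrated with a minimal Python sketch. The source codes, the lookup contents and the function name `map_product_code` are hypothetical and chosen only for illustration; in the actual solution the lookups would be reference data tables in the database, read by the ETL mappings.

```python
# Hypothetical reference data: in practice this would be a lookup table
# in the database, maintained without a code release.
PRODUCT_FAMILY_LOOKUP = {
    "UNP": "Unitized NP Pensions",
    "UWP": "Unitized WP Pensions",
    "TA": "Term Assurance",
}


def map_product_code(source_code, lookup=PRODUCT_FAMILY_LOOKUP):
    """Return the target value for a source code, or None if unmapped.

    The mapping lives in data rather than in if/else branches, so a
    changed or new mapping only needs a new lookup row.
    """
    return lookup.get(source_code.strip().upper())
```

Because the rule is held as data, an unmapped code returns `None` and can be routed to error handling instead of failing inside hard-coded logic.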


1.4 Scope

1.4.1 In-Scope

Consume the UNISURE source extract and populate the PAD according to stated

requirements and agreed business rules. The stated requirements are explicitly taken

from Prophet IFAD for Unitized NP Pensions, Unitized WP Pensions and Term Assurance.

Application of scheduling in line with the Generic OMD macro design

Application of errors and controls processing in line with the Errors and Controls macro

design

Movements capture for Non New business and Non Incremental business.

1.4.2 Out of Scope

The following items are within the scope of separate Finance Transformation Technology Delivery designs and are out of scope of this document for now. They will be covered in the next few weeks.

Any Model Point File data production (In force and Movement MPF, GMPF, XMPF).

The production of any Data marts or the consumption of any Prophet results

The supply of Assumptions data to the Prophet Application

Any interaction with Prophet Automation (the processes which control the interface

between the PAD and Prophet)

Code Release and Version Control mechanisms and processes

Any storing/archiving of source extracts once these files have been read into the PAD

staging tables

Reporting of controls data, trend analysis and reconciliation. This design supports the

storage of that data and the subsequent macro design will incorporate the reporting

functionality.

Movements capture for new business and incremental business.


2 High Level Macro Design

2.1 Assumptions

| Assumption | Justification |
| --- | --- |
| The UNISURE source extract, ICMS Commissions and Asset Share Factors extract files will be produced on a monthly basis and pushed to a location on the Unix file system from where they can be read by Informatica and the GOMD scheduling tool. | Consistent with Solution Architecture expectations. |
| The UNISURE extracts will be in Fixed Width ASCII format. | Specified in the UNISURE source extract specifications. |
| Multiple extracts will be provided. | Specified in the UNISURE extract specifications. |
| Business rules for MPF variables which are not present in Unisure will be defaulted during MPF generation, so they are not covered as part of loading into the PAD. | These variables are defaulted to maintain the consistency of MPF variables across Product Families. |
| The movements provided on the contract engine extract are understood to represent a super-set of Finance Transformation requirements. These will be grouped and filtered as required between PAD and MPF to derive the movements in which Prophet is interested. | Confirmed with FT BD team (Harj Cheema). |
| As part of the DFR requirements, headers and footers will be included in the Unisure Inforce and Movement extracts from September 2009 onwards, and accordingly the Pre and Post DFR files shall be loaded into STG. | Based on the communication from the CUSTOMER DFR team. |
| Duplicate records may occur in the inforce files due to the structure of the inforce IFAD. These shall be considered genuine duplicates and shall be processed to the stage tables. | Based on the communication from the CUSTOMER team. |


2.2 Design Decisions

Design Decision: All the data sets created from the UNISURE source extracts will be staged in intermediate tables between Inbound and PAD.

Justification: Reusability of the data sets created; simplifies the data flow.

Design Decision: Due to the various migrations onto the Unisure systems, though the input file structure is mostly confirmed, the level of transformations and the grain of processing the data and generating the model points differ across the various product references.

To accommodate these differences within the Unisure source and conform it across the different contract engines with the PAD database and Outbound design, the inbound design will store data within the PAD at two grains: one at the grain of the Policy Number, Increment Reference and Coverage Reference combination, and another at the grain of policy level data. All the measures/dimensions will be populated across the PAD at both these grains; for the policy level grain these are aggregates. The outbound design will perform a discriminatory selection depending on the product reference and transform the agreement data into the model points.

The grain where a particular policy can be invested using multiple premium types will be accommodated on the policy-fund relationship, which indicates the current position of investments depending on the premium type.

Cases where the input files behave differently across the sub tranches (for example Indemnity Proportion, % LAUTRO Initial Commission Rate, etc., which are selected from different columns of the source file depending on the product reference) will be conformed within the inbound.

Justification: Consistent with the business requirement.

Design Decision: Movements will be captured only from the weekly movement extracts, and no specific derivations will be made to populate the movements in the activity agreement relationship table. The target activity code and activity effective date will be populated solely from the source movement type and movement effective date coming from the weekly movement extract.

Justification: Driven by the discussions with the Unisure Stack team.

Design Decision: The initial load to the PAD table Agreement Activity Relationship will be done from the Inforce snapshot, and the load to this table in subsequent months will happen from the weekly movement extracts.

Justification: To make sure that all the policies are available in the table before capturing the changes for all the policies.

Design Decision: SCD Logical Deletes month-on-month.

We have a requirement for month-on-month logical deletes while loading data into the PAD. A few such scenarios are:

- Unexpected offs, such as a policy or a component/element of a policy disappearing from the extracts
- Benefit/Life/Reassurance details on the policy not populated in the subsequent extracts
- Changes to the fund investments of a policy; investments removed or switched between the funds, etc.

As these are logical deletes from the source system, they are not communicated through to the PAD. Therefore, our ETL would have no information regarding them.

All the inbound data from the source month-on-month is a complete snapshot of data as compared to the previous month. Therefore, identifying new records and updated records is easier than identifying the deletes per PAD table/entity.

The following 2 approaches were considered for implementation:

Approach 1: Updating all the records from the source for the current month/load while loading the target PAD tables, identifying the residual records and concluding that they are deletes.

Approach 2: A slim, long temporary table will be created to store all the surrogate keys for the data corresponding to the current run/month, and later a table-minus-table is computed between the PAD table and this temporary table to infer the logical deletes from the source. This temporary table will be partitioned on the mapping identifier and source to facilitate a partition truncate once the logical deletes have been made.

A decision was taken to implement Approach 2.

Justification: Approach 2 was preferred because it requires fewer updates on the PAD table, where the data volumes will be huge. Also, for inserts into the temporary table we have the flexibility to use the Bulk Load option of Informatica.

Design Decision: Change Data Capture (CDC) will not be used across the work streams (P1L70, Unisure, Paymaster and Administrator).

Justification: If CDC were implemented, we would not be in a position to identify records which need to be logically closed in the PAD tables. The approach for closing the records in the PAD tables is explained in the SCD logical deletes design decision.
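Approach 2 of the SCD logical deletes decision can be sketched as follows. This is a minimal illustration under stated assumptions, not the project's implementation: SQLite stands in for Oracle, the table names `pad_policy` and `tmp_run_keys`, the columns `sk` and `end_date`, and the function name are all hypothetical, and a plain `DELETE` stands in for the partition truncate.

```python
import sqlite3


def infer_logical_deletes(conn, current_keys, run_date):
    """Close PAD rows whose surrogate key is absent from the current run.

    conn         -- sqlite3 connection holding the hypothetical pad_policy table
    current_keys -- surrogate keys seen in the current month's load
    run_date     -- date written to the closed rows (ISO string)
    Returns the sorted list of surrogate keys that were logically deleted.
    """
    cur = conn.cursor()
    # Slim temporary table holding only the surrogate keys of this run.
    cur.execute(
        "CREATE TEMP TABLE IF NOT EXISTS tmp_run_keys (sk INTEGER PRIMARY KEY)"
    )
    cur.execute("DELETE FROM tmp_run_keys")
    cur.executemany(
        "INSERT INTO tmp_run_keys (sk) VALUES (?)", [(k,) for k in current_keys]
    )

    # Table-minus-table: open PAD keys that did not arrive in this run.
    # SQLite spells the set difference EXCEPT; Oracle spells it MINUS.
    cur.execute(
        "SELECT sk FROM pad_policy WHERE end_date IS NULL "
        "EXCEPT SELECT sk FROM tmp_run_keys"
    )
    deleted = sorted(row[0] for row in cur.fetchall())

    # Only the residual keys are updated, not every PAD row.
    cur.executemany(
        "UPDATE pad_policy SET end_date = ? WHERE sk = ?",
        [(run_date, sk) for sk in deleted],
    )
    # Stand-in for the partition truncate once the deletes have been made.
    cur.execute("DELETE FROM tmp_run_keys")
    conn.commit()
    return deleted
```

The sketch shows why the approach keeps updates low: the large table is only read for the set difference, and writes are limited to the rows that actually disappeared from the snapshot.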


1.1 High Level System Architecture

2.3 ETL Architecture

The ETL architecture covering the scope of this design is shown below. For clarity and simplicity, only the key elements are represented:

[Figure 3: ETL Architecture Flow. Main flow, driven by the GOMD PERL script: UNISURE source files (fixed width ASCII, on the Informatica UNIX server) -> ETL2 -> Inbound Staging -> ETL3 -> Intermediate Tables (to store UNISURE data sets) -> ETL4 -> PAD -> ETL5 -> Outbound Staging -> ETL6 -> Target Files (139 MPFs). Reference data files are loaded into the lookup data by ETL1. Legend: ETL uses Informatica 8.6; DB is an Oracle 10g database. The diagram distinguishes the normal, unprocessed, operational batch (GOMD) and lookup data flows, and marks the stages that are out of scope for this document.]

The description of the above components and process flow is given below.

GENERIC OMD

Generic OMD (GOMD) is a CUSTOMER scheduling tool. As part of GOMD, a PERL script is the basic driver that invokes and executes each ETL job throughout the process, as explained in the OMD Macro Design document.


The OMD Operational Meta Data table will contain data about batch identifiers, for instance: source system name, received date of the source file, processed date and the status of each processing state (such as Pre-PAD Data Stage Successful, Loaded to PAD, Post-PAD Data Stage Successful, and MPF Created). Data in this table is inserted/updated by the PERL script only. There will not be any direct interface to this table from Informatica mappings.

For example, data in the PAD is associated with a Batch Identifier, enabling roll back to be handled by Batch Identifier when a session fails abruptly. It is expected that a particular ETL job will be re-started on failure. More details can be found in the OMD Macro Design Document.
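Rolling back by Batch Identifier can be sketched as below. This is only an illustration under stated assumptions: in the design the driver is the GOMD PERL script, whereas Python and SQLite are used here for brevity, and the table names `pad_agreement` and `omd_batch`, the column names and the status value `FAILED` are hypothetical.

```python
import sqlite3


def rollback_batch(conn, batch_id):
    """Remove every PAD row loaded under batch_id and flag the batch as failed.

    Table and column names are hypothetical stand-ins for the PAD and
    OMD structures. Returns the number of PAD rows removed.
    """
    cur = conn.cursor()
    # Every PAD row carries the batch that loaded it, so a failed load
    # can be undone without touching rows from earlier batches.
    cur.execute("DELETE FROM pad_agreement WHERE batch_id = ?", (batch_id,))
    removed = cur.rowcount
    # Record the outcome so that the job can be re-started later.
    cur.execute(
        "UPDATE omd_batch SET status = 'FAILED' WHERE batch_id = ?", (batch_id,)
    )
    conn.commit()
    return removed
```

After the rollback the PAD holds only rows from completed batches, so re-running the failed job does not produce duplicates.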

UNPROCESSED DATA:

Error handling and controls data structures and processes are represented in the Errors and

Controls macro design. The source specific implementation of that generic design is contained

in Section 11 of this document.

REFERENCE DATA FILES:

These files will be loaded into respective lookup database tables using ETL-1.

LOOKUP DATA:

Reference data is stored in multiple database tables. These tables will be looked up and the required reference values retrieved during the processes ETL-3 and ETL-4, which load data into the PAD and outbound staging tables respectively.

ETL-1:

It consists of one or more Informatica mappings which read the data from the reference files/tables and load it into the respective lookup database tables.

SOURCE:

The CUSTOMER Valuation system will generate the UNISURE extract files on a monthly basis. The ETL source will be the UNISURE source extract files generated by this valuation system. The files will be copied onto the Informatica UNIX server at a specified location, from where the Informatica service reads and processes the source data.

The ICMS Commission system will generate its extract on a monthly basis. The extract will be copied onto the Informatica server in the Unix environment at a specified location and then picked up by the Informatica service for processing.


Asset Share Factors data will be used as reference data for the Fund, Asset Share and Unit Price calculations.

ETL-2:

ETL-2 consists of Informatica mappings which read the data from the source UNISURE files and load it into the inbound staging table. If any source record violates a business or database constraint rule during this process, that record will be written to the unprocessed data table using the process described in the Error Handling Macro Design.
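The ETL-2 behaviour described above (read fixed width records, stage the clean ones, divert rule violations to the unprocessed data) can be sketched in Python. The record layout, field names and the two validation rules are hypothetical; the real layout comes from the UNISURE source extract specification and the real rules from the Error Handling Macro Design.

```python
# Hypothetical layout: (field name, start offset, end offset) per field.
LAYOUT = [("policy_no", 0, 10), ("product_ref", 10, 14), ("premium", 14, 24)]


def parse_record(line):
    """Slice one fixed-width line into a dict of stripped field values."""
    return {name: line[start:end].strip() for name, start, end in LAYOUT}


def validate(record):
    """Return a list of rule violations (empty when the record is clean)."""
    errors = []
    if not record["policy_no"]:
        errors.append("policy_no is mandatory")
    try:
        float(record["premium"])
    except ValueError:
        errors.append("premium is not numeric")
    return errors


def load_to_staging(lines, batch_id):
    """Split source lines into staged rows and unprocessed (rejected) rows."""
    staged, unprocessed = [], []
    for line_no, line in enumerate(lines, start=1):
        record = parse_record(line)
        errors = validate(record)
        if errors:
            # Keep the raw line and the reasons so the reject can be analysed.
            unprocessed.append({"line_no": line_no, "raw": line, "errors": errors})
        else:
            record["batch_id"] = batch_id  # metadata field added in staging
            staged.append(record)
    return staged, unprocessed
```

Rejected records keep their raw text and line number, so the load of the remaining records is not blocked and the rejects can be corrected and reprocessed separately.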

INBOUND STAGING:

The inbound staging table has a similar structure to the source data, with the addition of metadata fields (e.g. Extract Date, Source File Name, Batch Identifier).

Where required, Inbound Staging will contain the data from the current and prior extract (i.e. two consecutive months of data), allowing movements to be detected via the delta between two extracts where movement events or transactions are not provided on the source extract.

The stage table will be partitioned so that historical data can be easily deleted (by truncating the partition) when no longer required.
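Detecting movements from the delta between two consecutive extracts can be sketched as follows. This is a minimal illustration, assuming each extract has already been reduced to a dictionary keyed by policy number; the function name and the attribute names used in any example data are hypothetical.

```python
def detect_movements(prior, current):
    """Compare two extracts keyed by policy number.

    prior, current -- dicts mapping policy number to a dict of attributes
    Returns (new, off, changed) as sorted lists of policy numbers.
    """
    prior_keys, current_keys = set(prior), set(current)
    # Present this month but not last month: new business.
    new = sorted(current_keys - prior_keys)
    # Present last month but not this month: policy has gone off.
    off = sorted(prior_keys - current_keys)
    # Present in both months with different attribute values.
    changed = sorted(
        key for key in prior_keys & current_keys if prior[key] != current[key]
    )
    return new, off, changed
```

Only two months of staged data are needed for this comparison, which is consistent with holding source extracts in staging for the minimum period.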

ETL-3:

ETL-3 consists of Informatica mappings which read the data from the inbound staging table and load it into intermediate tables specific to UNISURE. These intermediate tables will contain the data sets derived from the UNISURE inbound staging data.

ETL-4:

ETL-4 consists of Informatica mappings which read the data from the inbound staging table and load it into the PAD tables. If any record violates a business or database constraint rule during this process, that record will be written to the unprocessed data table according to the Error Handling Macro Design.

PAD:

PAD (Persistent Actuarial Database) consists of multiple database tables with predefined

relationships.


The following stages are explicitly out of scope for this design:

ETL-5:

ETL-5 consists of Informatica mappings which read the data from the PAD and load it into the outbound staging table. More details are provided as a part of PAD-MPF Macro.

OUTBOUND STAGING:

Data will be staged in the outbound staging before creating the MPF files. More details are

provided as a part of PAD-MPF Macro.

ETL-6:

ETL-6 consists of mappings that will create the MPF files. More details are provided as a part of

PAD-MPF Macro.

TARGET:

The target is Model Point ASCII CSV files with column and business header information. More

details are provided as a part of PAD-MPF Macro.

OTHER PROCESS STAGES:

Post-Prophet, a series of ETL processes will consume Prophet Results, PAD data and other data

sources to fulfil the ultimate business reporting requirements via the Data Marts. These

processes will be specified within the Data Mart ETL macro designs.


2.4 List of Figures

Figure Description

Figure 1 Holistic view of UNISURE in Finance Transformation Solution

Figure 2 ETL Architecture flow

Figure 3 Data flow from Source to PAD

Figure 4 Data flow from Source to PAD with usage of tools at every stage

Figure 5 Model Point File generation for Unisure Source System

Figure 6 PAD to MPF E2E Architecture

Figure 7 Detail Architecture & flow of PAD to MPF

Figure 8 Types of generated MPFs

Figure 9 Closer look at the ETL Architecture for PAD to Pre POL stage

Figure 10 Data Flow from POL to Grain stage

Figure 11 Data Flow from Grain to Outbound tables on split logic

Figure 12 MPF generation from Outbound Staging tables

2.5 Open Issues at Submission of This Draft

Issue: The full volume Post DFR files for the Inforce and Movement extracts have not yet been received, and this might impact the testing of the POST DFR code.

Planned Resolution: A communication has already been sent to the CUSTOMER DFR teams requesting the files.

2.6 Environment Definition

Tool           Version      Function

AIX            5.3          Operating System

Oracle         10g          Database

Informatica    9.1, 10.1    ETL tool used to perform all the Extract, Transform and Load functions

GOMD                        CUSTOMER tool used for scheduling in the Finance Transformation Programme

3 Tools Used

Informatica PowerCenter: ETL tool

Perl script

Shell script

Toad for Oracle: database client application

GOMD: job scheduler

PVCS: version control, used for documentation

4 Version Control Process

The control of specific code releases, and the management of the Informatica repository, is out

of scope of this design. As Release Management activities, they will be specified elsewhere.

5 Archival Strategy

Archiving breakdown will include:

File storage (Source file staging, MPF staging)

Database staging areas

PAD.

6 Source System Dependency

Not applicable for UNISURE system.

7 Non-Functional Requirements

All Non-functional requirements are captured in Requisite Pro. They include response times,

data storage, tracking of data, data lineage, access levels and security etc.

In addition to the documents referenced above, the following ‘Issue Resolution’ requirement

was captured during the design review:

"In the event that reference data used to load a given source system data feed into the PAD is

found to be corrupt, incorrect or incomplete then it must be possible to re-supply or amend the

reference data and re-run the staging to PAD mapping to resolve the errors. In practice, this

would mean:

• Policies that had previously been written to the Unprocessed Data table being successfully loaded to the PAD

• Policies that had previously been loaded to the PAD being written to the Unprocessed Data table

• Policies that had previously been written to the PAD being again written to the PAD but with changed data values

The re-running of Staging to PAD processes will first require the manual deletion of inserted

records on the basis of batch identifier, and the re-setting of record end dates and current flags

for those records which have been updated. This is expected to be a manual BAU process,

though the necessary SQL statements are expected to be provided to aid testing.

Any PAD batch activities which are dependent on the successful load of this source system feed

(e.g. MPF production) should not commence until users confirm that the data loaded to the

PAD for the given source system is of sufficient quality (i.e. that the Unprocessed Data has been

examined and any reprocessing of the kind described above has taken place). This requirement

should be handled by the creation of GOMD meta data and the configuration of GOMD for

Finance Transformation."
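The manual re-run preparation described in this requirement (delete the records inserted by a batch, then reset the end dates and current flags of the records that batch updated) can be sketched as follows. This is only an illustration under stated assumptions: the table name `pad_agrmnt`, the columns `batch_id`, `end_date`, `current_flag` and `closed_by_batch_id`, and the open-ended date `9999-12-31` are all hypothetical and are not taken from the PAD physical model. SQLite is used purely so that the sketch is runnable; the project database is Oracle.

```python
import sqlite3

HIGH_DATE = "9999-12-31"  # assumed marker for an open-ended (current) record


def undo_batch(conn: sqlite3.Connection, batch_id: int) -> None:
    """Remove a batch's inserts and reopen the records that batch had closed."""
    with conn:  # commits on success, rolls back if a statement fails
        # Step 1: delete the records inserted by the batch being re-run.
        conn.execute("DELETE FROM pad_agrmnt WHERE batch_id = ?", (batch_id,))
        # Step 2: reset end date and current flag on records the batch updated.
        conn.execute(
            "UPDATE pad_agrmnt "
            "SET end_date = ?, current_flag = 'Y', closed_by_batch_id = NULL "
            "WHERE closed_by_batch_id = ?",
            (HIGH_DATE, batch_id),
        )
```

Running both statements in one transaction means a failure part-way through leaves the PAD unchanged, which seems desirable for a manual BAU step.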

8 Source Specific macro design

8.1 Data Flow Diagram

The diagram represented below gives the flow of data from the Source to PAD.

Figure 4

8.2 Pre and Post Processing Requirements

Not applicable for UNISURE

9 Source Data

9.1 Source Data Specification

The UNISURE source data is transmitted in fixed width ASCII files. Data is split across 3 extracts,

each containing multiple files.

The 3 extracts are listed below:

Interface Name: ICMS commission shapes (3 files)

Description: The extract will transmit the 3 files below on a monthly basis:

NUCBL.DESIGNER.DATA.TCBDLEL.UNLOAD
NUCBL.DESIGNER.DATA.TCBCNDL.UNLOAD
NUCBL.DESIGNER.DATA.TCBDEAL.UNLOAD

Note: ICMS is descoped because Unisure deals with Existing Business whereas ICMS deals with New Business. To allow for future enhancement, this data is loaded only as far as Stage.

Interface Name: Unisure to valuation inforce interface (20 files)

Description: The extract will transmit the 20 files below on a monthly basis:

NUWJL.FTP.UN.MHLY.FILE01.CRExxxxx.DEL500
NUWJL.FTP.UN.MHLY.FILE02.CRExxxxx.DEL500
NUWJL.FTP.UN.MHLY.FILE03.CRExxxxx.DEL500
NUWJL.FTP.UN.MHLY.FILE04.CRExxxxx.DEL500
NUWJL.FTP.UN.MHLY.FILE05.CRExxxxx.DEL500
NUWJL.FTP.UN.MHLY.FILE06.CRExxxxx.DEL500
NUWJL.FTP.UN.MHLY.FILE07.CRExxxxx.DEL500
NUWJL.FTP.UN.MHLY.FILE08.CRExxxxx.DEL500
NUWJL.FTP.UN.MHLY.FILE09.CRExxxxx.DEL500
NUWJL.FTP.UN.MHLY.FILE10.CRExxxxx.DEL500
NUWJL.FTP.UN.MHLY.FILE11.CRExxxxx.DEL500
NUWJL.FTP.UN.MHLY.FILE12.CRExxxxx.DEL500
NUWJL.FTP.UN.MHLY.FILE13.CRExxxxx.DEL500
NUWJL.FTP.UN.MHLY.FILE14.CRExxxxx.DEL500
NUWJL.FTP.UN.MHLY.FILE15.CRExxxxx.DEL500
NUWJL.FTP.UN.MHLY.FILE16.CRExxxxx.DEL500
NUWJL.FTP.UN.MHLY.FILE17.CRExxxxx.DEL500
NUWJL.FTP.UN.MHLY.FILE18.CRExxxxx.DEL500
NUWJL.FTP.UN.MHLY.FILE19.CRExxxxx.DEL500
NUWJL.FTP.UN.MHLY.FILE20.CRExxxxx.DEL500

Weekly file:

NUWJL.FTP.UN.WKLY.CRExxxxx.DEL500

Interface Name: Asset share factors and W/P fund IDs (5 files)

Description: The extract will transmit the 5 files below on a monthly basis:

NUVRP.PRODUS.P.PRICES.CREYYMM.INP.G0001V00
NUVRP.PRODUS.P.SPRICE.CREYYMM.INP.G0001V00
NUVRP.PRODUS.P.FTGIPP.CREYYMM.INP.G0001V00
NUVRP.PRODUS.P.FTGNG.CREYYMM.INP.G0001V00
NUVRP.PRODUS.P.FTGNGG.CREYYMM.INP.G0001V00

The Inforce and Movement files come in two sets. Files received up to August 2009 have no headers or footers and are termed Pre-DFR files. Files that arrive after August 2009 have headers and footers and are termed Post-DFR files. The two sets are processed by two different ETL processes.
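As an illustration of how a fixed-width record and the Pre-DFR / Post-DFR difference might be handled, the sketch below slices each line by offset and skips the first and last line for Post-DFR files. The field offsets and widths are hypothetical placeholders (the real values come from the UNISURE source layouts), and treating the header and footer as exactly one line each is an assumption. In the actual solution this work is done by Informatica source definitions, not Python.

```python
from typing import Dict, List, Tuple

# Hypothetical layout: (field name, start offset, width).
# The real offsets are defined in the UNISURE source layouts.
LAYOUT: List[Tuple[str, int, int]] = [
    ("polref", 0, 10),
    ("inrref", 10, 3),
    ("covref", 13, 3),
    ("premium", 16, 9),
]


def parse_record(line: str) -> Dict[str, str]:
    """Slice one fixed-width line into named, whitespace-trimmed fields."""
    return {name: line[start:start + width].strip() for name, start, width in LAYOUT}


def parse_extract(lines: List[str], post_dfr: bool) -> List[Dict[str, str]]:
    """Parse an extract; Post-DFR files are assumed to carry one header and one footer line."""
    body = lines[1:-1] if post_dfr else lines
    return [parse_record(line) for line in body if line.strip()]
```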

9.2 Extract Process

Once all the data files are available on the ETL server, the files will be connected to the

Informatica source components and then processed further.

9.3 Staging Source Data

Input data will be staged within a dedicated set of database inbound staging tables within the

Persistent Actuarial Data store staging schema. The staging tables will need to be able to store

at least two entire versions of the source data in order to allow change data capture and

movement detection.

The metadata stored in the staging tables facilitates the identification of the source file and

batch identifier from which the policy got loaded.

Using transformation logic (based on assumptions derived from the available UNISURE SAS code and validated by CUSTOMER), the 3 UNISURE source extracts listed in section 9.1 of this document will be merged and 10 data sets will be created. These data sets will be stored in intermediate tables for further processing to PAD.

The inbound staging tables are

WH_STG_UNISURE

WH_STG_UNISURE_ICMS_DLEL

WH_STG_UNISURE_ICMS_DEAL

WH_STG_UNISURE_ICMS_CNDL

WH_STG_UNISURE_MOV

WH_STG_UNISURE_ASF_GNGG

WH_STG_UNISURE_ASF_GIPP

WH_STG_UNISURE_ASF_GNG

The Intermediate tables are

WH_STG_UNISURE_POL

WH_STG_UNISURE_INCCOV

WH_STG_UNISURE_BENCOMDA

WH_STG_UNISURE_ASSNG

WH_STG_UNISURE_UNTS

WH_STG_UNISURE_ICMS

WH_STG_UNISURE_ASH_FCTR

WH_STG_UNISURE_FPHA_AGR_PRMM

WH_STG_UNISURE_AGR_BNFT

While loading the data from the inbound staging tables to the intermediate tables, the data is split and grouped. This is discussed in detail in the Data Mapping sheet in section 13.

9.4 Source Data Dictionary

The table below provides the source data dictionary, which is created from the UNISURE source layouts.

Code Variable    Source Name

polref Policy number *

inrref Increment reference *

covref Coverage reference *

prdref Product ref

prdref Product ref

prdvr_no Product version no.

schemeno Scheme number

prefix Prefix

territ Territory

p_lcon Life contingency

currdta Policy currency date

currdta Policy currency date

currdta Policy currency date

surname1 Surname

dob1a DoB

sex1 Sex

smkr1 Smoker

distchan Distribution channel

agt_type Agent type

agid Agent code

element Element Type

serps_t SERPS Type

bencomda Benefit Start Date

bencomda Benefit Start Date

benamt1 Benefit amount

matdta Maturity Date

alloc Allocation Type

premtype Premium Type

premstat Premium Status

freq Premium Frequency

premium Premium Amount

premium Premium Amount

prmcsdta Premium Ceasing Date

prmindex Premium Indexation

indemnyt Indemnity Indicator

i_enhanc Initial Commission Enhancement Rate

incomrte Initial Commission Enhancement Rate

r_enhanc Renewal Commission Enhancement Rate

eiloc EILOC code

fund_bc Fund Based Commission Rate

purch_da Purchase date

cal_yr Calendar year of unit purchase

polfeew #N/A

commrate Commission Rate

comforpc Commission Foregone Percent

polfee Policy Fee *

wvrprem Policy Fee Waiver *

al_rate1 Allocation Rate 1

al_rate2 Allocation Rate 2

al_rate3 Allocation Rate 3

al_rate4 Allocation Rate 4

al_per1 Allocation Period 1

al_per2 Allocation Period 2

al_per3 Allocation Period 3

al_per4 Allocation Period 4

basetafc Base TAFC

pclautro % of Lautro

commtype Commission Type

afcsign Total AFC Sign

age_adj Age Adjustment Rating

eefmc Weighted Explicit External Fund Manager Charge (EEFMC)

sunits #N/A

fbcend_da FBC End date

fbcfreq FBC Frequency

fbcstrt_da FBC Start date

occlass Occupation class

termfact Term Factor Rate

indemi Indemnify Indicator

comshape Commission Shape

chgdur Charge Duration

agepay Age / Payment Factor

prntschm Parent Scheme Reference

baseterm Secondary Base TAFC

secondaf Secondary Charge Start Date

elec_result Wagner Voting Status

elec_number Wagner election id

agtyp Original Agreement Type Code

agtyp Original Agreement Type Code

apwfreq #N/A

apwamnt #N/A

apwdate #N/A

9.5 Identify the Movement Logic

Movements will be captured by comparing the current extract against the previous extract, rather than from source-supplied transactions. At any point in time, staging holds the previous month's extract alongside the current one, so that two sequential extracts can be compared.

The Activity table (WH_PAD_ACTVTY) will hold all the data related to movements for a

particular policy. The data mapping sheet for the target WH_PAD_ACTVTY table contains details

of how to populate this table.
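The comparison of two sequential extracts can be sketched as below. This is a minimal illustration, not the Informatica mapping itself: keying records on (polref, inrref, covref) and the movement labels `NEW`, `CHANGED` and `TERMINATED` are assumptions made for the example, and the actual rules for populating WH_PAD_ACTVTY are those in the data mapping sheet.

```python
from typing import Dict, Tuple

Key = Tuple[str, str, str]            # assumed key: (polref, inrref, covref)
Snapshot = Dict[Key, Dict[str, str]]  # one extract, keyed for comparison


def detect_movements(previous: Snapshot, current: Snapshot) -> Dict[Key, str]:
    """Classify each key as NEW, CHANGED or TERMINATED; unchanged keys are omitted."""
    movements: Dict[Key, str] = {}
    for key, row in current.items():
        if key not in previous:
            movements[key] = "NEW"          # present now, absent last month
        elif row != previous[key]:
            movements[key] = "CHANGED"      # present in both, attributes differ
    for key in previous:
        if key not in current:
            movements[key] = "TERMINATED"   # present last month, absent now
    return movements
```

Because only the delta is derived, a record that changes and then reverts within the same month would not be seen as a movement; that is a property of extract comparison in general.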

10 PAD TO MPF generation for Unisure

PAD to MPF processing is common for all the Source Systems in TARDIS. The organization uses an actuarial engine, Prophet, which consumes Model Point Files as its input.

PAD to MPF deals with the generation of these Prophet–ready Model Point Files (MPFs) from

PAD data.

Two kinds of MPFs are generated every month: Inforce and Movement.

The inforce and movement MPFs differ only in terms of the PAD extraction criterion. The

transformation logic applied is the same.

Figure 5

10.1 MPF generation Business Overview

Figure 5 shows the different types of policies, which hold data for all the Source Systems in common.

For Unisure, data is held in Unitized NP Pensions, Unitized WP Pensions and Term Assurance.

10.2 PAD TO MPF END TO END Architecture

Figure 6

10.3 Detail Architecture and flow for PAD TO MPF

Figure 7

10.4 Inforce criterion for Unisure Source System

Based upon source-specific business conditions, agreements in PAD are evaluated for “Inforce status” and processed for actuarial valuation. An agreement is treated as inforce when:

• It has an active status (no termination reason code).

• If it is a Unitized agreement (invested in a Unitized product), it has at least one fund investment; if it is a conventional agreement, it has an annual premium greater than zero.

• It has at least one life attached.
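One reading of these inforce conditions is sketched below, assuming that the fund-investment rule applies to unitized agreements and the premium rule to conventional ones. The `Agreement` class and its field names are hypothetical and do not correspond to PAD column names.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Agreement:
    """Hypothetical, flattened view of one PAD agreement."""
    termination_reason_code: Optional[str]
    is_unitized: bool
    fund_investment_count: int
    annual_premium: float
    life_count: int


def is_inforce(a: Agreement) -> bool:
    """Apply the three inforce conditions in turn."""
    if a.termination_reason_code:   # any termination reason means not active
        return False
    if a.life_count < 1:            # at least one life must be attached
        return False
    if a.is_unitized:
        return a.fund_investment_count >= 1
    return a.annual_premium > 0     # conventional agreement
```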

10.5 Types of MPF file generated

There are two types of MPFs generated for Unisure Source System i.e. Inforce and Movement.

Figure 8

As shown in Figure 8:

• Policy (1) appears in the Inforce MPF because it started in December 2009, the standard policy start period, and has had no transactions to date, so it holds a snapshot of the policy data from inception to date.

• Policy (2) appears in the Movement MPF because it lapsed partway through, before the end date.

• Policy (3) appears in both the Inforce and Movement MPFs because it started after December 2009, in which case it is considered in the next Financial Year, i.e. 2010. In the Movement MPF it is marked as “New Business”.

• Policy (4) is treated in the same way as policy (3).

• Policy (5) appears only in the Movement MPF because it started after financial year 2009 and also terminated before the end date.

10.6 ETL Architecture: A Closer Look

Figure 9

Figure 9 shows the flow for loading data from PAD to the Pre POL stage. The Pre POL stage consists of junction tables, each of which is a join of a few PAD tables based on specific conditions.

Figure 10

Figure 10 shows the data load from the POL stages to the GRAIN ATTR table, depending upon business conditions.

Figure 11

Figure 11 shows the loading of data into the Outbound tables using split logic.

Figure 12

Figure 12 shows the last step, in which MPFs are generated from the Outbound staging tables.

11 Error Handling

Generic Error Handling for all the source systems is described in the Component Macro Design

for Process Optimization and Governance (Controls-Error Handling) Macro Design Document.

The tables below provide information about the specific checks that should be performed within the Unisure feed into the PAD. For checks which may result in a complete stop to the data load, the “Error Code” column provides the specific error code which should be returned to indicate the issue that occurred.

11.1 Source to Staging

Error Code: E1001
Control / Error Check: Has extract arrived?
Design Decision: All of the source files should be received according to the schedule defined in section 11 of this document. The SLA table should be updated to reflect these requirements. If any of the files have not been received according to the defined schedule then an e-mail should be sent to the support team (the e-mail address and message content should be parameterised to provide flexibility for future change).

Error Code: E1002
Control / Error Check: Has file been received before?
Design Decision: As defined by the control framework, upon receipt of the source files a binary comparison of each file against those processed in the previous month should be performed. In the event that a file identically matches the previous file, the batch should terminate, returning the error code, and an e-mail should be sent to the support team (the e-mail address and message content should be parameterised to provide flexibility for future change). The Unisure Inforce source extract consists of 20 different files split by product type, all of the same format, plus combined weekly movement extracts. This comparison is done on the Inforce extracts on a monthly basis and on the weekly extracts on a weekly basis.

Error Code: E1003
Control / Error Check: File names are as expected?
Design Decision: The file names for the 20 Unisure extracts will be in the following format:
NUUNL.UNISURE.IFVF.xxxxxxxxx.CRE08366.DEL2600
where xxxxxxxxx specifies the actual name of the extract file. The file names for the 3 Commission extracts will be in the following format:
NUCBL.DESIGNER.DATA.xxxxxxxx.UNLOAD
where xxxxxxxx specifies the actual name of the extract file. In the event that any of the files do not match the defined format then the batch should terminate, returning the error code, and an e-mail should be sent to the support team (the e-mail address and message content should be parameterised to provide flexibility for future change).

Error Code: E1004
Control / Error Check: Is file structure as expected?
Design Decision: In the event that any of the data does not conform to the structure defined by the Valuation Inforce extract specification and Valuation Movement extract specification then the batch should terminate, returning the error code, and an e-mail should be sent to the support team (the e-mail address and message content should be parameterised to provide flexibility for future change).

Error Code: E1007
Control / Error Check: Is header and footer found?
Design Decision: As of now the Unisure extracts do not contain a header and footer, but if headers and footers are included at a later point in time, this control should be handled in the following manner. Each collection of files should be checked to ensure that it includes both a header and a footer record conforming to the Unisure layout structures. In the event that either the header or footer cannot be found then the batch should terminate, returning the error code, and an e-mail should be sent to the support team (the e-mail address and message content should be parameterised to provide flexibility for future change).

Error Code: E1008
Control / Error Check: Number of records
Design Decision: As of now the Unisure extracts do not contain a footer, but if footers are included at a later point in time, this control should be handled in the following manner. The trailer record contains the number of records that should be present for each record type. These record counts should be compared against the number of records found in the file. If any of the record counts do not match then the file is corrupt and therefore the batch should terminate, returning the error code, and an e-mail should be sent to the support team (the e-mail address and message content should be parameterised to provide flexibility for future change).

Error Code: E1009
Control / Error Check: Timestamp
Design Decision: The Unisure POST DFR extracts contain a header, so this control should be handled in the following manner. The header record contains timestamp information within the “Date Of Extract” field. This should be checked to ensure that it is as expected (after the last working day of the previous month, such as 29/9/2007). If the timestamp is not correct then the file is corrupt and therefore the batch should terminate, returning the error code, and an e-mail should be sent to the support team (the e-mail address and message content should be parameterised to provide flexibility for future change).

Error Code: E1010
Control / Error Check: Other unhandled error
Design Decision: In the event that any unhandled error occurs then the batch should terminate, returning the error code, and an e-mail should be sent to the PAD support team (the e-mail address and message content should be parameterised to provide flexibility for future change).

Error Code: (none)
Control / Error Check: Load success
Design Decision: If the load process is successful then the batch should complete successfully and the staging to PAD batch should be initiated.
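Three of the file-level controls above (E1002, E1003 and E1008) can be sketched together as follows. This is an illustration under stated assumptions, not the control framework's implementation: a SHA-256 digest stands in for the binary comparison, the character classes and lengths used for the `x` placeholders in the file name formats are guesses, and the expected record count is passed in directly because the trailer layout is not given here.

```python
import hashlib
import re
from typing import Iterable, Optional

# Patterns follow the formats quoted for E1003; what the "x" placeholders
# may contain (upper-case letters and digits here) is an assumption.
INFORCE_NAME = re.compile(r"^NUUNL\.UNISURE\.IFVF\.[A-Z0-9]{1,9}\.CRE\d{5}\.DEL\d+$")
COMMISSION_NAME = re.compile(r"^NUCBL\.DESIGNER\.DATA\.[A-Z0-9]{1,8}\.UNLOAD$")


def file_digest(data: bytes) -> str:
    """SHA-256 of the file content, used here in place of a byte-by-byte compare."""
    return hashlib.sha256(data).hexdigest()


def check_file(name: str, data: bytes, previous_digests: Iterable[str],
               expected_count: Optional[int] = None) -> Optional[str]:
    """Return the first failing error code, or None when all checks pass."""
    # E1002: identical content to a previously processed file.
    if file_digest(data) in set(previous_digests):
        return "E1002"
    # E1003: file name does not match either expected format.
    if not (INFORCE_NAME.match(name) or COMMISSION_NAME.match(name)):
        return "E1003"
    # E1008: record count differs from the count declared for the file.
    if expected_count is not None:
        actual = sum(1 for line in data.splitlines() if line.strip())
        if actual != expected_count:
            return "E1008"
    return None
```

A caller would terminate the batch and send the parameterised e-mail whenever a code is returned; that notification step is left out of the sketch.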

11.2 Staging to PAD

Control / Error Check: Exceptions
Design Decision: A number of key fields are required in order to load the Unisure data into the PAD; without them the PAD could be corrupted or the business could not be valued. The following fields must be populated with valid data in order for the data to be loaded into the PAD:
Policy Number
Increment Reference
Coverage Reference
Premium Type
If any of these fields is found to contain invalid data then the record should be excluded from the load and should instead be added to the unprocessed data table with a record status of excluded, and an Error Description which indicates the field and value that caused the exclusion. In addition, all associated records for the same policy should also be added to the unprocessed data table. Processing should then continue for subsequent records.

Control / Error Check: Data item validation
Design Decision: Any fields which contain invalid data, but which are not mandatory (see exceptions above), should be defaulted within the PAD, and a record should be added to the non-fatal error table containing type, record identifier, invalid field and the invalid value. For each field, the details of how to detect invalid data are included within the “Data mapping and Business Rules” section of this document. It also provides details of the default value, or the reference table from which the default value can be obtained.

Control / Error Check: Load success
Design Decision: If the load process is successful then the batch should complete successfully, allowing batches which are dependent on this load to commence (e.g. MPF production).

Control / Error Check: Lookup Failure
Design Decision: If there is a lookup failure on dimension master or key lookup then populate with the key for unknown and populate the record in the non-fatal errors table.
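The exception and lookup-failure rules above can be sketched for the records of a single policy as below. Several details are assumptions made for illustration: "invalid" is simplified to "empty", the mandatory fields are referred to by their source codes from the data dictionary, `distchan` is used as the example lookup field, and `-1` is a hypothetical key for unknown.

```python
from typing import Any, Dict, List, Tuple

Row = Dict[str, Any]

# Source codes assumed for Policy Number, Increment Reference,
# Coverage Reference and Premium Type.
MANDATORY = ("polref", "inrref", "covref", "premtype")
UNKNOWN_KEY = -1  # hypothetical surrogate key meaning "unknown"


def route_policy(rows: List[Row], channel_keys: Dict[str, int]
                 ) -> Tuple[List[Row], List[Row], List[str]]:
    """Return (rows to load, rows for the unprocessed table, non-fatal messages)."""
    for row in rows:
        for field in MANDATORY:
            if not str(row.get(field, "")).strip():
                # One invalid record excludes every record of the same policy.
                reason = f"{field}={row.get(field, '')!r}"
                return [], [dict(r, error=reason) for r in rows], []
    loaded: List[Row] = []
    non_fatal: List[str] = []
    for row in rows:
        out = dict(row)
        key = channel_keys.get(row.get("distchan", ""))
        if key is None:
            # Lookup failure: load with the unknown key and log a non-fatal error.
            key = UNKNOWN_KEY
            non_fatal.append(f"lookup failure: distchan={row.get('distchan', '')!r}")
        out["distchan_key"] = key
        loaded.append(out)
    return loaded, [], non_fatal
```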

11.3 PAD TO MPF Error Handling

Error Code: E5001
Control / Error Check: Exceptions
Design Decision: Unknown product family or MPF name after logic

Error Code: E5003
Control / Error Check: Exceptions
Design Decision: Null check on MPF variable at various stages of transformation

11.4 Error Notification Approach

As defined by the Generic Error Handling framework the following notification approaches will

be used:

• Fatal errors should result in termination of the process with an error code returned to indicate the type of error, and an e-mail transmitted to alert the support teams.

• Non-fatal errors where the data should not be processed should result in the data being logged to the unprocessed data table and a message logged to the non-fatal errors table.

• Non-fatal errors where the data should be processed should result in a message logged to the non-fatal errors table.

12 Database Overview

A comprehensive overview of the database is given in the Physical Design Model (PDM) document. The following tables are applicable to the source to PAD macro design of UNISURE.

Table Name Description

WH_PAD_AGRMNT_ACTVTY_RLTNSHP Activity Agreement Relationship

WH_PAD_AGRMNT Agreement

WH_PAD_DIMENSION_MSTR Dimension Master

WH_PAD_FNCL_PRDCT_HLDG_AGRMNT Financial Product Holding Agreement

WH_PAD_FPHA_SUPP FPHA Measures

WH_PAD_KY_LKP Key Lookup

WH_PAD_PARTY Party

WH_PAD_PSN Person

WH_PAD_RLTNSHP_MSTR Relationship Master

WH_PAD_FND_AGRMNT_RLTNSHP Fund Agreement Relationship

WH_PAD_UNT_PRICE Unit Price

WH_DATE Generic date dimension

WH_PAD_LDG Load details

WH_PAD_AST_SHR_FCTR Asset Share Factors

WH_PAD_AST_SHR_MVMNT Asset Share Factors for movements

13 Data mapping and business rules

This section will have a mapping sheet which will provide the following mappings

Staging Table Mapping

Agreement Table Mapping

Party Table Mapping

Person Table Mapping

Financial Product Holding Agreement Table Mapping

Financial Product Holding Agreement Supplementary Table Mapping

Agreement Activity Table Mapping

Relationship Master Table Mappings

Asset Share Factors Table Mapping

Loading Table Mapping

In the mappings, Extract Date is the last day of the month for which the extract is provided. For example, the extract date for January 2017 is 31/01/2017 and for February 2017 it is 28/02/2017.
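The Extract Date rule can be expressed as a short calculation. The function name `extract_date` is illustrative only; the sketch uses the standard library's `calendar.monthrange`, which returns the number of days in a month and so handles leap years.

```python
import calendar
from datetime import date


def extract_date(year: int, month: int) -> date:
    """Return the last calendar day of the month the extract covers."""
    last_day = calendar.monthrange(year, month)[1]  # (weekday of 1st, days in month)
    return date(year, month, last_day)
```

For instance, `extract_date(2017, 1)` gives 31/01/2017 and `extract_date(2017, 2)` gives 28/02/2017, matching the examples above.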

15 Appendix

Term: Description

UNISURE: Data related to Pensions.

PAD: Persistent Actuarial Data Store. Synonymous with “ODS”.

MPF: Model Point File. A data file with a defined structure that is used to load required data into the Prophet tool.

ETL: Extract Transform Load. The process of extracting data from external sources, transforming it to fit business needs and ultimately loading it into the end target.

Contract Engine: A contract engine is a system that is used to generate and store contracts, i.e. a policy, pension scheme, pension scheme category etc.

Prophet: Prophet is a modelling tool used by the Actuarial Community. Inputs include Model Point Files containing policy information taken from the contract engines/databases. Prophet calculates liabilities. It analyses all cash flows on each policy, for each month, up to 40 years in the future. Prophet comprises two elements:
• An automation tool which runs Prophet Models as soon as all required data is available.
• An Actuarial tool which performs deterministic valuations of individual policies / benefits (individual model points) or summarised (grouped) models. This creates two types of results: a record for each input model point for a fixed time period, and a summary for multiple time periods.

Staging: A staging area is used as a place to store temporary data for import.

GOMD: Generic OMD is a CUSTOMER scheduling tool.

Database: A computer application whose sole purpose is to store, retrieve and modify data in a highly structured way.

Data Mart: A logical and physical subset of the data warehouse’s presentation area.

16 Plan of Work

No. of task    Tasks to be done                  Planned Duration (Weeks)    Specific Deliverable in terms of project           Status

1              Requirement Gathering             2.5                         IFAD’s, Solution design                            Completed

2              Analysis & Design                 2.5                         Design Doc & Mapping sheets                        Completed

3              Test case and plan preparation    1                           Test cases                                         Completed

3              Build & Unit testing              3                           Unit tested code & UTC                             Completed

4              System Testing                    4                           Acceptance test results and Quality test results   Completed

5              Deployment & Implementation       1                           Implementation plan & Deliverable code             Completed

6              Documentation                     2                           SOP’s & SHS, User manuals                          Completed

17 Check List of Items

S. No.    Need to Check    Y/N

1 Is the Cover page in proper format? Y

2 Is the Title page in proper format? Y

3 Is the Certificate from the Supervisor in proper format? Has it been signed? Y

4 Is Abstract included in the Report? Is it properly written? Y

5 Does the Table of Contents’ page include chapter page numbers? Y

6 Is Introduction included in the report? Is it properly written? Y

7 Are the Pages numbered properly? Y

8 Are the Figures numbered properly? Y

9 Are the Tables numbered properly? Y

10 Are the Captions for the Figures and Tables proper? Y

11 Are the Appendices numbered? Y

12 Does the Report have Conclusions/ Recommendations of the work? Y

13 Are References/ Bibliography given in the Report? Y

14 Have the References been cited in the Report? Y

15 Is the citation of References/ Bibliography in proper format? Y


- MTech Thesis - END SEM REPORT (FINAL)Document62 pagesMTech Thesis - END SEM REPORT (FINAL)RACHIT SAXENANo ratings yet

- Report Final (2)Document50 pagesReport Final (2)adarshbirhade007No ratings yet

- Mini Project Report on Real-Time Object Detection Using YOLODocument15 pagesMini Project Report on Real-Time Object Detection Using YOLOSwapnil AryaNo ratings yet

- 1RG17CS032-Seminar ReportDocument34 pages1RG17CS032-Seminar Report1RN20IS411No ratings yet

- Mad Report Color PrintDocument5 pagesMad Report Color PrintjobimNo ratings yet

- Mini Project ReportDocument21 pagesMini Project ReportGOURAV MAKURNo ratings yet

- A Smart System For Donation Handling of Charitable Trusts and NgosDocument75 pagesA Smart System For Donation Handling of Charitable Trusts and NgosApoorva MehetreNo ratings yet

- Visvesvaraya Technological University: Personality PredictionDocument33 pagesVisvesvaraya Technological University: Personality PredictionHac Ker3456No ratings yet

- ICMS Report PDFDocument103 pagesICMS Report PDFSai Shyam S.NNo ratings yet

- BTP Report FinalDocument40 pagesBTP Report FinalvikNo ratings yet

- 2nd Year (1)Document22 pages2nd Year (1)Mahendra Kumar PrajapatNo ratings yet

- 01 IoT Based Smart AgricultureDocument45 pages01 IoT Based Smart AgricultureGautam DemattiNo ratings yet

- Submitted by Submitted byDocument77 pagesSubmitted by Submitted byAbhishek MathurNo ratings yet

- G63 ReportDocument76 pagesG63 ReportRAJDEEP srivatavaNo ratings yet

- "Routing Algorithm": Chhattisgarh Swami Vivekanand Technical University Bhilai (India)Document37 pages"Routing Algorithm": Chhattisgarh Swami Vivekanand Technical University Bhilai (India)Akshay AgrawalNo ratings yet

- Bluetooth Car Using ArduinoDocument50 pagesBluetooth Car Using ArduinoKondwaniNo ratings yet

- ReportDocument91 pagesReportArunNo ratings yet

- Journal App ReportDocument37 pagesJournal App ReportAditya SNo ratings yet

- Dbms Mini Project Report FINALDocument24 pagesDbms Mini Project Report FINALimadityaim777No ratings yet

- Rpkdtech PDFDocument56 pagesRpkdtech PDFKãrãñ K DâhiwãlêNo ratings yet

- Report ITS 7 SEM BharatDocument62 pagesReport ITS 7 SEM Bharatbakoliyapremchand892No ratings yet

- Thesis RajaKumar 19MCA005Document50 pagesThesis RajaKumar 19MCA005Mrinal SaurajNo ratings yet

- Remote Monitoring System ReportDocument33 pagesRemote Monitoring System ReportAkankhya BeheraNo ratings yet

- Update Weather App Project ReportDocument35 pagesUpdate Weather App Project Reportbhanu.singhcs22No ratings yet

- Blockchain-Based Admission ProcessDocument41 pagesBlockchain-Based Admission ProcessTry Try0% (1)

- 8-1Document137 pages8-1Prasad SalviNo ratings yet

- Cse-f Batch8 FinaldocDocument81 pagesCse-f Batch8 FinaldocpolamarasettibhuwaneshNo ratings yet

- Special Topics - II Report Team No - 56Document33 pagesSpecial Topics - II Report Team No - 56Don WaltonNo ratings yet

- Khasim Complete Main ProjectDocument98 pagesKhasim Complete Main Projectshaikashik1437No ratings yet

- tranning project reportDocument25 pagestranning project reportMahendra Kumar PrajapatNo ratings yet

- Vehicle Trackingusing Io TDocument22 pagesVehicle Trackingusing Io TMD. Akif RahmanNo ratings yet

- Project NewDocument41 pagesProject NewProdigy PvtNo ratings yet

- DBMSProject ReportDocument27 pagesDBMSProject ReportYashwanth MalviyaNo ratings yet

- Phase REPORT FORMAT1 CDocument8 pagesPhase REPORT FORMAT1 CAkshathNo ratings yet

- Constraining Designs for Synthesis and Timing Analysis: A Practical Guide to Synopsys Design Constraints (SDC)From EverandConstraining Designs for Synthesis and Timing Analysis: A Practical Guide to Synopsys Design Constraints (SDC)No ratings yet

- 05 38 Counts Exercise & TextDocument5 pages05 38 Counts Exercise & TextANUP MUNDENo ratings yet

- N210 - Computer Practice N4 QP Nov 2019Document21 pagesN210 - Computer Practice N4 QP Nov 2019Pollen SilindaNo ratings yet

- Avaya IP Office ContactCenter BrochureDocument87 pagesAvaya IP Office ContactCenter BrochureVicky NicNo ratings yet

- Paytm StatementDocument3 pagesPaytm Statementayushi tripathiNo ratings yet

- Chap 06Document46 pagesChap 06M. Zainal AbidinNo ratings yet

- Computer Science and TranslationDocument21 pagesComputer Science and TranslationMarina ShmakovaNo ratings yet

- Computer Terms GlossaryDocument28 pagesComputer Terms GlossaryAngélica AlzateNo ratings yet

- Article - AVEVA Predictive Analytics Metals and MiningDocument3 pagesArticle - AVEVA Predictive Analytics Metals and MiningJuan Manuel PardalNo ratings yet

- Hacksaw TypeDocument6 pagesHacksaw TypeZool HilmiNo ratings yet

- Review On Matrices (Definition and Coursera Systems of EquationsDocument2 pagesReview On Matrices (Definition and Coursera Systems of EquationsAlwin Palma jrNo ratings yet

- Foxit Reader Quick Start GuideDocument41 pagesFoxit Reader Quick Start GuideMedina BekticNo ratings yet

- ME453 CAD: Design ProjectDocument33 pagesME453 CAD: Design ProjectKaori MiyazonoNo ratings yet

- 4 Job Interviews and Career Part 2Document2 pages4 Job Interviews and Career Part 2lala inriyaniNo ratings yet

- User ManualDocument60 pagesUser Manuallilya mohNo ratings yet

- PD24 Sales SheetDocument2 pagesPD24 Sales SheetComunicación Visual mARTaderoNo ratings yet

- Og&C Standard Work Process Procedure Welder QualificationDocument11 pagesOg&C Standard Work Process Procedure Welder QualificationGordon LongforganNo ratings yet

- Lesson 13 StsDocument11 pagesLesson 13 StsJasmin Lloyd CarlosNo ratings yet

- Alla Priser Anges Exkl. Moms. Kontakta Din Säljare Vid FrågorDocument46 pagesAlla Priser Anges Exkl. Moms. Kontakta Din Säljare Vid FrågorSamuelNo ratings yet

- Elec Cold Test - Smdb-Roof AtkinsDocument2 pagesElec Cold Test - Smdb-Roof AtkinsNabilBouabanaNo ratings yet

- PVS980 - 5MW Pre Commissioning InstructionDocument6 pagesPVS980 - 5MW Pre Commissioning InstructionFrancisco Aguirre VarasNo ratings yet

- Manuale Stampante 3DDocument45 pagesManuale Stampante 3DMiriamNo ratings yet

- Five Traits of Technical WriitngDocument18 pagesFive Traits of Technical WriitngNINETTE TORRESNo ratings yet

- Automatic smoke detector and fire alarm systemDocument7 pagesAutomatic smoke detector and fire alarm systemSwastik JainNo ratings yet

- Justmoh Procter Test So-13Document11 pagesJustmoh Procter Test So-13Abu FalasiNo ratings yet

- Image Stitching Using Matlab PDFDocument5 pagesImage Stitching Using Matlab PDFnikil chinnaNo ratings yet

- Laporan Aplikasi Kalkulator & Intent PDFDocument15 pagesLaporan Aplikasi Kalkulator & Intent PDFchusnun nidhomNo ratings yet

- Line CodingDocument16 pagesLine CodingAitzaz HussainNo ratings yet

- Contact Google Workspace SupportDocument2 pagesContact Google Workspace Supportmhpkew6222No ratings yet