AllenNLP: A Deep Semantic Natural Language Processing Platform

Matt Gardner, Joel Grus, Mark Neumann, Oyvind Tafjord, Pradeep Dasigi,

Nelson F. Liu, Matthew Peters, Michael Schmitz, Luke Zettlemoyer

Allen Institute for Artificial Intelligence

arXiv:1803.07640v2 [cs.CL] 31 May 2018

Abstract

Modern natural language processing (NLP) research requires writing code. Ideally this code would provide a precise definition of the approach, easy repeatability of results, and a basis for extending the research. However, many research codebases bury high-level parameters under implementation details, are challenging to run and debug, and are difficult enough to extend that they are more likely to be rewritten. This paper describes AllenNLP, a library for applying deep learning methods to NLP research, which addresses these issues with easy-to-use command-line tools, declarative configuration-driven experiments, and modular NLP abstractions. AllenNLP has already increased the rate of research experimentation and the sharing of NLP components at the Allen Institute for Artificial Intelligence, and we are working to have the same impact across the field.

1 Introduction

Neural network models are now the state of the art for a wide range of tasks such as text classification (Howard and Ruder, 2018), machine translation (Vaswani et al., 2017), semantic role labeling (Zhou and Xu, 2015; He et al., 2017), coreference resolution (Lee et al., 2017a), and semantic parsing (Krishnamurthy et al., 2017). However, it can be surprisingly difficult to tune new models or replicate existing results. State-of-the-art deep learning models often take over a week to train on modern GPUs and are sensitive to initialization and hyperparameter settings. Furthermore, reference implementations often re-implement NLP components from scratch and make it difficult to reproduce results, creating a barrier to entry for research on many problems.

AllenNLP, a platform for research on deep learning methods in natural language processing, is designed to address these problems and to significantly lower barriers to high quality NLP research by

• implementing useful NLP abstractions that make it easy to write higher-level model code for a broad range of NLP tasks, swap out components, and re-use implementations,

• handling common NLP deep learning problems, such as masking and padding, and keeping these low-level details separate from the high-level model and experiment definitions,

• defining experiments using declarative configuration files, which provide a high-level summary of a model and its training, and make it easy to change the deep learning architecture and tune hyper-parameters, and

• sharing models through live demos, making complex NLP accessible and debug-able.

The AllenNLP website (http://allennlp.org/) provides tutorials, API documentation, pretrained models, and source code (http://github.com/allenai/allennlp). The AllenNLP platform has a permissive Apache 2.0 license and is easy to download and install via pip, a Docker image, or cloning the GitHub repository. It includes reference implementations for recent state-of-the-art models (see Section 3) that can be easily run (to make predictions on arbitrary new inputs) and retrained with different parameters or on new data. These pretrained models have interactive online demos (http://demo.allennlp.org/)
with visualizations to help interpret model decisions and make predictions accessible to others. The reference implementations provide examples of the framework functionality (Section 2) and also serve as baselines for future research.

AllenNLP is an ongoing open-source effort maintained by several full-time engineers and researchers at the Allen Institute for Artificial Intelligence, as well as interns from top PhD programs and contributors from the broader NLP community. It is widely used internally for research on common sense, logical reasoning, and state-of-the-art NLP components such as constituency parsers, semantic parsing, and word representations. AllenNLP is gaining traction externally and we want to invest to make it the standard for advancing NLP research using PyTorch.

2 Library Design

AllenNLP is a platform designed specifically for deep learning and NLP research. AllenNLP is built on PyTorch (Paszke et al., 2017), which provides many attractive features for NLP research. PyTorch supports dynamic networks, has a clean “Pythonic” syntax, and is easy to use.

The AllenNLP library provides (1) a flexible data API that handles intelligent batching and padding, (2) high-level abstractions for common operations in working with text, and (3) a modular and extensible experiment framework that makes doing good science easy.

AllenNLP maintains a high test coverage of over 90% (https://codecov.io/gh/allenai/allennlp) to ensure its components and models are working as intended. Library features are built with testability in mind so new components can maintain a similar test coverage.

2.1 Text Data Processing

AllenNLP’s data processing API is built around the notion of Fields. Each Field represents a single input array to a model. Fields are grouped together in Instances that represent the examples for training or prediction.

The Field API is flexible and easy to extend, allowing for a unified data API for tasks as diverse as tagging, semantic role labeling, question answering, and textual entailment. To represent the SQuAD dataset (Rajpurkar et al., 2016), for example, which has a question and a passage as inputs and a span from the passage as output, each training Instance comprises a TextField for the question, a TextField for the passage, and a SpanField representing the start and end positions of the answer in the passage.

The user need only read data into a set of Instance objects with the desired fields, and the library can automatically sort them into batches with similar sequence lengths, pad all sequences in each batch to the same length, and randomly shuffle the batches for input to a model.

2.2 NLP-Focused Abstractions

AllenNLP provides a high-level API for building models, with abstractions designed specifically for NLP research. By design, the code for a model actually specifies a class of related models. The researcher can then experiment with various architectures within this class by simply changing a configuration file, without having to change any code.

The library has many abstractions that encapsulate common decision points in NLP models. Key examples are: (1) how text is represented as vectors, (2) how vector sequences are modified to produce new vector sequences, (3) how vector sequences are merged into a single vector.

TokenEmbedder: This abstraction takes input arrays generated by e.g. a TextField and returns a sequence of vector embeddings. Through the use of polymorphism and AllenNLP’s experiment framework (see Section 2.3), researchers can easily switch between a wide variety of possible word representations. Simply by changing a configuration file, an experimenter can choose between pre-trained word embeddings, word embeddings concatenated with a character-level CNN encoding, or even pre-trained model token-in-context embeddings (Peters et al., 2017), which allows for easy controlled experimentation.

Seq2SeqEncoder: A common operation in deep NLP models is to take a sequence of word vectors and pass them through a recurrent network to encode contextual information, producing a new sequence of vectors as output. There are many ways to do this, including LSTMs (Hochreiter and Schmidhuber, 1997), GRUs (Cho et al., 2014), intra-sentence attention (Cheng et al., 2016), recurrent additive networks (Lee et al., 2017b), and many more. AllenNLP’s Seq2SeqEncoder abstracts away the
decision of which particular encoder to use, allow- plementations of any of the provided abstractions,

ing the user to build an encoder-agnostic model or even to create their own new abstractions.

and specify the encoder via configuration. In this While some entries in the configuration file are

way, a researcher can easily explore new recur- optional, many are required and if unspecified

rent architectures; for example, they can replace AllenNLP will raise a ConfigurationError when

the LSTMs in any model that uses this abstrac- reading the configuration. Additionally, when a

tion with any other encoder, measuring the impact configuration file is loaded, AllenNLP logs the

across a wide range of models and tasks. configuration values, providing a record of both

Seq2VecEncoder: Another common op- specified and default parameters for your model.

eration in NLP models is to merge a sequence

of vectors into a single vector, using either a 3 Reference Models

recurrent network with some kind of averaging

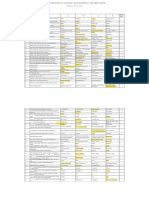

AllenNLP includes reference implementations

or pooling, or using a convolutional network.

of widely used language understanding models.

This operation is encapsulated in AllenNLP by a

These models demonstrate how to use the frame-

Seq2VecEncoder. This abstraction again al-

work functionality presented in Section 2. They

lows the model code to only describe a class of

also have verified performance levels that closely

similar models, with particular instantiations of

match the original results, and can serve as com-

that model class being determined by a configu-

parison baselines for future research.

ration file.

AllenNLP includes reference implementations

SpanExtractor: A recent trend in NLP

for several tasks, including:

is to build models that operate on spans of

text, instead of on tokens. State-of-the-art mod- • Semantic Role Labeling (SRL) models re-

els for coreference resolution (Lee et al., 2017a), cover the latent predicate argument structure

constituency parsing (Stern et al., 2017), and se- of a sentence (Palmer et al., 2005). SRL

mantic role labeling (He et al., 2017) all op- builds representations that answer basic ques-

erate in this way. Support for building this tions about sentence meaning; for example,

kind of model is built into AllenNLP, including “who” did “what” to “whom.” The Al-

a SpanExtractor abstraction that determines lenNLP SRL model is a re-implementation

how span vectors get computed from sequences of of a deep BiLSTM model (He et al., 2017).

token vectors. The implemented model closely matches the

2.3 Experimental Framework published model which was state of the art

in 2017, achieving a F1 of 78.9% on En-

The primary design goal of AllenNLP is to make glish Ontonotes 5.0 dataset using the CoNLL

it easy to do good science with controlled exper- 2011/12 shared task format.

iments. Because of the abstractions described in

Section 2.2, large parts of the model architecture • Machine Comprehension (MC) systems

and training-related hyper-parameters can be con- take an evidence text and a question as in-

figured outside of model code. This makes it easy put, and predict a span within the evidence

to clearly specify the important decisions that de- that answers the question. AllenNLP in-

fine a new model in configuration, and frees the cludes a reference implementation of the

researcher from needing to code all of the imple- BiDAF MC model (Seo et al., 2017) which

mentation details from scratch. was state of the art for the SQuAD bench-

This architecture design is accomplished in Al- mark (Rajpurkar et al., 2016) in early 2017.

lenNLP using a HOCON5 configuration file that

specifies, e.g., which text representations and en- • Textual Entailment (TE) models take a pair

coders to use in an experiment. The mapping from of sentences and predict whether the facts

strings in the configuration file to instantiated ob- in the first necessarily imply the facts in

jects in code is done through the use of a registry, the second. The AllenNLP TE model is a

which allows users of the library to add new im- re-implementation of the decomposable at-

5

tention model (Parikh et al., 2016), a widely

We use it as JSON with comments. See

https://github.com/lightbend/config/blob/master/HOCON.md used TE baseline that was state-of-the-art on

for the full spec. the SNLI dataset (Bowman et al., 2015) in

late 2016. The AllenNLP TE model achieves an accuracy of 86.4% on the SNLI 1.0 test dataset, a 2% improvement over most publicly available implementations and a score similar to that of the original paper. Rather than pre-trained GloVe vectors, this model uses ELMo embeddings (Peters et al., 2018), which are completely character based and account for the 2% improvement.

• A Constituency Parser breaks a text into sub-phrases, or constituents. Non-terminals in the tree are types of phrases and the terminals are the words in the sentence. The AllenNLP constituency parser is an implementation of a minimal neural model for constituency parsing based on an independent scoring of labels and spans (Stern et al., 2017). This model uses ELMo embeddings (Peters et al., 2018), which are completely character based and improve single model performance from 92.6 F1 to 94.11 F1 on the Penn Treebank, a 20% relative error reduction (the error falls from 7.4 to 5.89 F1 points).

AllenNLP also includes a token embedder that uses pre-trained ELMo (Peters et al., 2018) representations. ELMo is a deep contextualized word representation that models both complex characteristics of word use (e.g., syntax and semantics) and how these uses vary across linguistic contexts (in order to model polysemy). ELMo embeddings significantly improve the state of the art across a broad range of challenging NLP problems, including question answering, textual entailment, and sentiment analysis.

Additional models are currently under development and are regularly released, including semantic parsing (Krishnamurthy et al., 2017) and multi-paragraph reading comprehension (Clark and Gardner, 2017). We expect the number of tasks and reference implementations to grow steadily over time. The most up-to-date list of reference models is maintained at http://allennlp.org/models.

4 Related Work

Many existing NLP pipelines, such as Stanford CoreNLP (Manning et al., 2014) and spaCy (https://spacy.io/), focus on predicting linguistic structures rather than modeling NLP architectures. While AllenNLP supports making predictions using pre-trained models, its core focus is on enabling novel research. This emphasis on configuring parameters, training, and evaluating is similar to Weka (Witten and Frank, 1999) or Scikit-learn (Pedregosa et al., 2011), but AllenNLP focuses on cutting-edge research in deep learning and is designed around declarative configuration of model architectures in addition to model parameters.

Most existing deep-learning toolkits are designed for general machine learning (Bergstra et al., 2010; Yu et al., 2014; Chen et al., 2015; Abadi et al., 2016; Neubig et al., 2017), and can require significant effort to develop research infrastructure for particular model classes. Some, such as Keras (Chollet et al., 2015), do aim to make it easy to build deep learning models. Similar to how AllenNLP is an abstraction layer on top of PyTorch, Keras provides high-level abstractions on top of static graph frameworks such as TensorFlow. While Keras’ abstractions and functionality are useful for general machine learning, they are somewhat lacking for NLP, where input data types can be very complex and dynamic graph frameworks are more often necessary.

Finally, AllenNLP is related to toolkits for deep learning research in dialog (Miller et al., 2017) and machine translation (Klein et al., 2017). Those toolkits support learning general functions that map strings (e.g. foreign language text or user utterances) to strings (e.g. English text or system responses). AllenNLP, in contrast, is a more general library for building models for any kind of NLP task, including text classification, constituency parsing, textual entailment, question answering, and more.

5 Conclusion

The design of AllenNLP allows researchers to focus on the high-level summary of their models rather than the details, and to do careful, reproducible research. Internally at the Allen Institute for Artificial Intelligence the library is widely adopted and has improved the quality of our research code, spread knowledge about deep learning, and made it easier to share discoveries between teams. AllenNLP is gaining traction externally and is growing an open-source community
of contributors (see GitHub stars and issues on https://github.com/allenai/allennlp and mentions from publications at https://www.semanticscholar.org/search?q=allennlp). The AllenNLP team is committed to continuing work on this library in order to enable better research practices throughout the NLP community and to build a community of researchers who maintain a collection of the best models in natural language processing.

References

Martín Abadi, Ashish Agarwal, Paul Barham, Eugene Brevdo, Zhifeng Chen, Craig Citro, Greg S Corrado, Andy Davis, Jeffrey Dean, Matthieu Devin, et al. 2016. TensorFlow: Large-scale machine learning on heterogeneous distributed systems. CoRR abs/1603.04467.

James Bergstra, Olivier Breuleux, Frédéric Bastien, Pascal Lamblin, Razvan Pascanu, Guillaume Desjardins, Joseph Turian, David Warde-Farley, and Yoshua Bengio. 2010. Theano: A CPU and GPU math compiler in Python. In Proc. 9th Python in Science Conf, pages 1–7.

Samuel R. Bowman, Gabor Angeli, Christopher Potts, and Christopher D. Manning. 2015. A large annotated corpus for learning natural language inference. In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP). Association for Computational Linguistics.

Tianqi Chen, Mu Li, Yutian Li, Min Lin, Naiyan Wang, Minjie Wang, Tianjun Xiao, Bing Xu, Chiyuan Zhang, and Zheng Zhang. 2015. MXNet: A flexible and efficient machine learning library for heterogeneous distributed systems. CoRR abs/1512.01274.

Jianpeng Cheng, Li Dong, and Mirella Lapata. 2016. Long short-term memory-networks for machine reading. In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP).

Kyunghyun Cho, Bart van Merrienboer, Çaglar Gülçehre, Dzmitry Bahdanau, Fethi Bougares, Holger Schwenk, and Yoshua Bengio. 2014. Learning phrase representations using RNN encoder-decoder for statistical machine translation. In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP), pages 1724–1734.

François Chollet et al. 2015. Keras. https://keras.io.

Christopher T Clark and Matthew Gardner. 2017. Simple and effective multi-paragraph reading comprehension. CoRR, abs/1710.10723.

Luheng He, Kenton Lee, Mike Lewis, and Luke Zettlemoyer. 2017. Deep semantic role labeling: What works and what’s next. In Proceedings of the Association for Computational Linguistics (ACL).

Sepp Hochreiter and Jürgen Schmidhuber. 1997. Long short-term memory. Neural Computation, 9(8):1735–1780.

Jeremy Howard and Sebastian Ruder. 2018. Fine-tuned language models for text classification. CoRR, abs/1801.06146.

Guillaume Klein, Yoon Kim, Yuntian Deng, Jean Senellart, and Alexander M. Rush. 2017. OpenNMT: Open-source toolkit for neural machine translation. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics, ACL, pages 67–72.

Jayant Krishnamurthy, Pradeep Dasigi, and Matthew Gardner. 2017. Neural semantic parsing with type constraints for semi-structured tables. In EMNLP.

Kenton Lee, Luheng He, Mike Lewis, and Luke Zettlemoyer. 2017a. End-to-end neural coreference resolution. In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP).

Kenton Lee, Omer Levy, and Luke Zettlemoyer. 2017b. Recurrent additive networks. CoRR abs/1705.07393.

Christopher D Manning, Mihai Surdeanu, John Bauer, Jenny Rose Finkel, Steven Bethard, and David McClosky. 2014. The Stanford CoreNLP natural language processing toolkit. In Proceedings of the Association for Computational Linguistics (ACL) (System Demonstrations).

Alexander H. Miller, Will Feng, Dhruv Batra, Antoine Bordes, Adam Fisch, Jiasen Lu, Devi Parikh, and Jason Weston. 2017. ParlAI: A dialog research software platform. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, EMNLP, pages 79–84.

Graham Neubig, Chris Dyer, Yoav Goldberg, Austin Matthews, Waleed Ammar, Antonios Anastasopoulos, Miguel Ballesteros, David Chiang, Daniel Clothiaux, Trevor Cohn, et al. 2017. DyNet: The dynamic neural network toolkit. CoRR abs/1701.03980.

Martha Palmer, Daniel Gildea, and Paul Kingsbury. 2005. The proposition bank: An annotated corpus of semantic roles. Computational Linguistics, 31(1):71–106.

Ankur P Parikh, Oscar Täckström, Dipanjan Das, and Jakob Uszkoreit. 2016. A decomposable attention model for natural language inference. In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP).

Adam Paszke, Sam Gross, Soumith Chintala, Gregory Chanan, Edward Yang, Zachary DeVito, Zeming Lin, Alban Desmaison, Luca Antiga, and Adam Lerer. 2017. Automatic differentiation in PyTorch. In NIPS-W.

F. Pedregosa, G. Varoquaux, A. Gramfort, V. Michel, B. Thirion, O. Grisel, M. Blondel, P. Prettenhofer, R. Weiss, V. Dubourg, J. Vanderplas, A. Passos, D. Cournapeau, M. Brucher, M. Perrot, and E. Duchesnay. 2011. Scikit-learn: Machine learning in Python. Journal of Machine Learning Research, 12:2825–2830.

Matthew E Peters, Waleed Ammar, Chandra Bhagavatula, and Russell Power. 2017. Semi-supervised sequence tagging with bidirectional language models. In Proceedings of the Association for Computational Linguistics (ACL).

Matthew E. Peters, Mark Neumann, Mohit Iyyer, Matthew Gardner, Christopher T Clark, Kenton Lee, and Luke S. Zettlemoyer. 2018. Deep contextualized word representations. CoRR, abs/1802.05365.

Pranav Rajpurkar, Jian Zhang, Konstantin Lopyrev, and Percy Liang. 2016. SQuAD: 100,000+ questions for machine comprehension of text. In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP).

Minjoon Seo, Aniruddha Kembhavi, Ali Farhadi, and Hannaneh Hajishirzi. 2017. Bidirectional attention flow for machine comprehension.

Mitchell Stern, Jacob Andreas, and Dan Klein. 2017. A minimal span-based neural constituency parser. In ACL.

Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Lukasz Kaiser, and Illia Polosukhin. 2017. Attention is all you need. In NIPS.

Ian H. Witten and Eibe Frank. 1999. Data mining: Practical machine learning tools and techniques with Java implementations.

Dong Yu, Adam Eversole, Mike Seltzer, Kaisheng Yao, Zhiheng Huang, Brian Guenter, Oleksii Kuchaiev, Yu Zhang, Frank Seide, Huaming Wang, et al. 2014. An introduction to computational networks and the computational network toolkit. Microsoft Technical Report MSR-TR-2014–112.

Jie Zhou and Wei Xu. 2015. End-to-end learning of semantic role labeling using recurrent neural networks. In Proceedings of the Association for Computational Linguistics (ACL).
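
As a supplementary illustration of the batching behavior described in Section 2.1 (sort instances into batches of similar length, pad each batch to a common length, then shuffle the batches), the following is a minimal sketch in plain Python. It is not AllenNLP's implementation and does not use its API: the function name make_batches, the padding index PAD_ID = 0, the dictionary keys "tokens" and "mask", and the fixed shuffle seed are all assumptions made for this example.

```python
import random
from typing import Dict, List, Sequence

PAD_ID = 0  # assumed padding index; real token ids start at 1 in this sketch


def make_batches(
    sequences: Sequence[List[int]],
    batch_size: int,
    seed: int = 0,
) -> List[Dict[str, List[List[int]]]]:
    """Sort by length, chunk into batches, pad each batch, then shuffle batches."""
    # Sorting by length keeps similarly sized sequences together,
    # so little padding is wasted inside any one batch.
    ordered = sorted(sequences, key=len)
    batches = []
    for start in range(0, len(ordered), batch_size):
        chunk = ordered[start:start + batch_size]
        max_len = max(len(seq) for seq in chunk)
        # Pad every sequence to the longest sequence in this chunk only.
        tokens = [seq + [PAD_ID] * (max_len - len(seq)) for seq in chunk]
        # The mask marks real tokens with 1 and padding with 0.
        mask = [[1] * len(seq) + [0] * (max_len - len(seq)) for seq in chunk]
        batches.append({"tokens": tokens, "mask": mask})
    # Shuffle the order of the batches, not their contents.
    random.Random(seed).shuffle(batches)
    return batches


if __name__ == "__main__":
    data = [[5, 6, 7, 8, 9], [1], [2, 3], [4, 5, 6, 7]]
    for batch in make_batches(data, batch_size=2):
        print(batch)
```

Padding per batch rather than to the global maximum is what makes the length-based sorting worthwhile: in the toy data above, the two short sequences are padded to length 2 instead of length 5.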

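
The registry mechanism of Section 2.3, which maps strings in a configuration file to instantiated objects, can be sketched in a few lines of Python. This is a toy under stated assumptions, not AllenNLP's code: ToySeq2VecEncoder, ToyConfigurationError, the register and from_config methods, and the "type" key are hypothetical names chosen for the illustration, and the two pooling encoders stand in for the much richer encoders the library provides.

```python
from typing import Any, Callable, Dict, List, Type


class ToyConfigurationError(Exception):
    """Raised when a configuration lacks a required key or names an unknown type."""


class ToySeq2VecEncoder:
    """Hypothetical base class: merges a sequence of vectors into one vector."""

    _registry: Dict[str, Type["ToySeq2VecEncoder"]] = {}

    @classmethod
    def register(cls, name: str) -> Callable[[type], type]:
        # Decorator that records a subclass under a string name.
        def decorator(subclass: type) -> type:
            cls._registry[name] = subclass
            return subclass
        return decorator

    @classmethod
    def from_config(cls, config: Dict[str, Any]) -> "ToySeq2VecEncoder":
        params = dict(config)  # copy so the caller's dict is not mutated
        if "type" not in params:
            raise ToyConfigurationError("missing required key 'type'")
        name = params.pop("type")
        if name not in cls._registry:
            raise ToyConfigurationError(f"unknown encoder type {name!r}")
        # Remaining entries are passed to the constructor as keyword arguments.
        return cls._registry[name](**params)

    def __call__(self, vectors: List[List[float]]) -> List[float]:
        raise NotImplementedError


@ToySeq2VecEncoder.register("mean")
class MeanEncoder(ToySeq2VecEncoder):
    def __call__(self, vectors: List[List[float]]) -> List[float]:
        return [sum(column) / len(vectors) for column in zip(*vectors)]


@ToySeq2VecEncoder.register("max")
class MaxEncoder(ToySeq2VecEncoder):
    def __call__(self, vectors: List[List[float]]) -> List[float]:
        return [max(column) for column in zip(*vectors)]


def encode(config: Dict[str, Any], vectors: List[List[float]]) -> List[float]:
    """Model code is unchanged; only the configuration selects the encoder."""
    return ToySeq2VecEncoder.from_config(config)(vectors)


if __name__ == "__main__":
    vectors = [[1.0, 4.0], [3.0, 2.0]]
    print(encode({"type": "mean"}, vectors))
    print(encode({"type": "max"}, vectors))
```

Swapping {"type": "mean"} for {"type": "max"} changes the model without touching encode, which is the kind of controlled experiment the configuration-driven design is meant to support; a missing or unknown "type" fails early with a configuration error rather than later during training.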

- Dynamic Text ClassificationDocument16 pagesDynamic Text ClassificationNexgen TechnologyNo ratings yet

- A Multilayer Convolutional Encoder-Decoder Neural Network For Grammatical Error CorrectionDocument8 pagesA Multilayer Convolutional Encoder-Decoder Neural Network For Grammatical Error CorrectionJadir Ibna HasanNo ratings yet

- N A S R L: Eural Rchitecture Earch With Einforcement EarningDocument16 pagesN A S R L: Eural Rchitecture Earch With Einforcement Earningsrikanth madakaNo ratings yet

- CNN for Sentence ClassificationDocument6 pagesCNN for Sentence ClassificationAhmad KarlamNo ratings yet

- Deep Speech - Scaling Up End-To-End Speech RecognitionDocument12 pagesDeep Speech - Scaling Up End-To-End Speech RecognitionSubhankar ChakrabortyNo ratings yet

- Teaching Programming With The Kernel Language Approach: 1 Existing ApproachesDocument10 pagesTeaching Programming With The Kernel Language Approach: 1 Existing ApproachesGauravNo ratings yet

- Compiling ONNX Neural Network Models Using MlirDocument8 pagesCompiling ONNX Neural Network Models Using MlirGuilhermeNo ratings yet

- VNLP-an Open Source Framework For Vietnamese Natural Language Processing PDFDocument7 pagesVNLP-an Open Source Framework For Vietnamese Natural Language Processing PDFpbtaiNo ratings yet

- Deep Visual-Semantic Alignments For Generating Image DescriptionsDocument14 pagesDeep Visual-Semantic Alignments For Generating Image DescriptionsRAGURAM SHANMUGAMNo ratings yet

- A Survey On Vision TransformerDocument23 pagesA Survey On Vision TransformerLưu HảiNo ratings yet

- OpTorch Optimized Deep Learning Architectures ForDocument7 pagesOpTorch Optimized Deep Learning Architectures ForFree SyncNo ratings yet

- Lin_Deep_Frequency_Filtering_for_Domain_Generalization_CVPR_2023_paperDocument11 pagesLin_Deep_Frequency_Filtering_for_Domain_Generalization_CVPR_2023_paperperry1005No ratings yet

- 2021-Sentence Embedding Models For Similarity Detection of Software RequirementsDocument11 pages2021-Sentence Embedding Models For Similarity Detection of Software Requirementsless64014No ratings yet

- D A L N E R: EEP Ctive Earning FOR Amed Ntity EcognitionDocument15 pagesD A L N E R: EEP Ctive Earning FOR Amed Ntity EcognitionMaithiliNo ratings yet
