Building a Reduced Reference Video Quality Metric with Very Low Overhead using Multivariate Data Analysis

Tobias OELBAUM and Klaus DIEPOLD

Institute for Data Processing

Technische Universität München

Munich, Germany

Abstract: In this contribution a reduced reference video quality metric for AVC/H.264 is proposed that needs only a very low overhead (not more than two bytes per sequence). This reduced reference metric uses well established algorithms to measure objective features of the video such as 'blur' or 'blocking'. Those measurements are then combined into a single measurement for the overall video quality. The weights of the single features and the combination of those are determined using methods provided by multivariate data analysis. The proposed metric is verified using a data set of AVC/H.264 encoded videos and the corresponding results of a carefully designed and conducted subjective evaluation. Results show that the proposed reduced reference metric outperforms not only standard PSNR but also two well known full reference metrics.

Index Terms: video quality metric, reduced reference, multivariate data analysis, AVC/H.264.

1. INTRODUCTION

Knowing the visual quality of an encoded video is essential for nearly all applications dealing with digital video. This task can be accomplished either by a very time consuming (but accurate) subjective test or by an objective video quality metric. A good objective video quality metric would produce results highly correlated to those obtained by a subjective test. Four years after the first version of the upcoming video coding standard AVC/H.264 [1] was released, almost no results exist to demonstrate the prediction capabilities of video quality metrics for AVC/H.264 encoded video data. Up to now most video quality metrics have been verified using MPEG-2 encoded videos, but as AVC/H.264 encoded video has significantly different characteristics (e.g. no fixed block sizes, filtering in the decoder loop), those results do not necessarily apply to this new generation of encoded video.

Being the de-facto standard for objective video quality metrics, PSNR is still used for comparing AVC/H.264 encoded video with other video codecs or for comparing different encoder implementations or coding settings for AVC/H.264. This is in spite of the knowledge that PSNR values may be heavily misleading [2].

The rest of the contribution is organized as follows: In section 2 a short overview of related works is presented. The method used to develop the proposed reduced reference metric is described in section 3 and the model itself is described in detail in section 4. Section 5 presents the results for the proposed method and finally section 6 concludes this contribution.

2. RELATED WORKS

Full Reference (FR) Quality Metrics

The most popular video quality metric is the Peak Signal to Noise Ratio (PSNR). This simple metric just calculates the mathematical difference between every pixel of the encoded video and the original video. In fact up to now PSNR is the only video quality metric that is widely accepted and therefore PSNR is the de-facto standard for measuring video quality. In 2004 the ITU released a recommendation which included four different full reference metrics (not only the coded video but also the original video is needed for the evaluation) which outperformed PSNR in terms of correlation to results of extensive subjective tests [3]. Among those is the Edge PSNR [4] method developed by Lee et al., which was chosen as a comparison point to the metric presented in this contribution. This metric is based on the observation that human observers are especially sensitive to degradations in regions around edges. Therefore this metric evaluates the PSNR only at those pixels that have been classified as belonging to an edge region. One FR image metric which has gained high popularity since it was introduced in 2002 is the so called SSIM (Structural SIMilarity index) as presented in [5] and [6]. This metric was the second metric chosen for comparison. The SSIM performs a separate comparison on luminance, contrast and structure in the original and the coded image and uses this information to calculate one overall quality index. Among the quite extensive list of FR image and video quality metrics, four recently proposed metrics are [7], [8], [9] and [10]. Shnayderman et al. propose to use a singular value decomposition representation of the original and the coded image for the comparison [7]. In [8] Zhai et al. propose to decompose the images using a filterbank of 2D Gabor filters and then measure the correlation between the decompositions of the original and the coded image. This attempt is inspired by the fact that simple cells in the visual cortex can be modeled as 2D Gabor functions. The same authors extend the SSIM by a preceding multiscale decomposition of an image in [9]. Finally Ong et al. propose a full reference metric that combines a measurement for block fidelity and content richness with some visibility masking functions [10].

Reduced Reference and No Reference Quality Metrics

Comparably few approaches were presented for reduced reference (RR) quality evaluation and even fewer for no reference (NR) quality evaluation. Compared to full reference metrics, for a RR metric only parts of the original video or some extracted properties of the original video are needed for evaluation. In 2003 Carnec et al. presented a RR image quality metric that is based on properties of the human visual system (HVS) such as color perception and masking effects. For the RR approach only high level visual information is needed, however an additional data load of 1056 real numbers for every frame is reported [11]. Kusuma et al. presented a RR approach for still images using a

ISSN: 1690-4524 SYSTEMICS, CYBERNETICS AND INFORMATICS VOLUME 6 - NUMBER 5 81

Blur

linear combination of blocking, blur, ringing, masking and lost

blocks in 2005 [12]. Wang and Simoncelli showed that natural Blocking

images have a certain frequency distribution and therefore the Detail

frequency distribution of a coded image can be used to predict Noise

HVS Quality

the visual quality [13]. To transmit a representation of the

frequency distribution of the original image they require 162 Motion

bits.

For a NR metric no information about the original video is

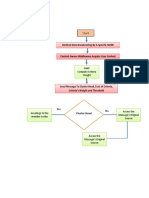

needed. One popular approach for a NR image and video quality Fig. 1. Black Box HVS Model

metric is the inclusion of watermarks in the original image and

then measuring the amount to which these watermarks can be

recovered at the receiver [14]. An approach similar to the metric video four different continuity measurements were performed:

proposed here was presented just recently by Callet et al in predictability (shows how good one frame can be predicted using

[15]. While the set of objective features used in both metrics is the previous frame only), motion continuity (measurement for

quite similar, the main differences can be found in the way this the smoothness of the motion), color continuity (shows how

objective features are then combined to an overall video quality much color changes between two successive images) and edge

metric. For this task Callet et al use a neural network approach continuity (shows how much edge regions are changing between

to simulate the human visual system (HVS) and its reaction to two successive images).

a set of features. • Blur: the blur measurement used is described in [17].

In addition to complete quality metrics there exist several The algorithm measures the width of an edge and then

measurements that concentrate on one single image property calculates the blur by assuming that blur is reflected by

or a special artifact. Prominent candidates from this field are wide edges. As blur is something natural in a fast moving

the blocking measurement by Bovik and Wang [16], or the blur sequence this measurement is adjusted if the video contains

measurement proposed by Winkler [17]. a high amount of fast motion.

• Blocking: for measuring the blockiness the algorithm in-

3. M ULTIVARIATE DATA A NALYSIS FOR O BJECTIVE troduced in [16] is used. This algorithm calculates the

V IDEO Q UALITY A SSESSMENT blockiness by applying a FFT along each line or column.

The unwanted blockiness can be easily detected by the

Video quality metrics that try to model the HVS face the

location in the spectra.

problem, that what they want to model is very complicated and

• Noise: to detect the noise present in the video a very simple

up to the moment not well understood. Measuring the strength

noise detector was designed. First a prediction of the actual

of a certain artifact (e.g. blocking, blur) and trying to predict

image is built by motion compensation using a simple block

the quality by a linear combination of the measured artifacts

matching algorithm. Second a difference image between

introduces the problem, that it is not known to which extend a

the actual image and its prediction is calculated and a

certain artifact affects the perceived video quality. In addition

low pass version of this difference image is produced by

this method ignores the possibility that there may be interference

first applying a median filter and a Gaussian low pass

between certain types of artifacts.

filter afterward. A pixel is classified to contain noise if

For these two reasons it is proposed to build new video quality

the difference value between the original difference image

models using methods provided by multivariate data analysis.

and the low pass difference image exceeds a threshold of

Multivariate data analysis is a tool that is widely used in

25 (assuming 8 bit values ranging from 0 to 255) for one

chemo metrics and food science where the aim is to find

of the three color planes.

the value of a latent variable (e.g. taste) by measuring some

• Details: to measure the amount of details that are present

fixed variables (e.g. sugar, milk, cocoa). For the field of video

in a video the percentage of turning points along each line

quality assessment this translates to measure the latent variable

and each row are calculated. This measurement is part of

video quality by measuring fixed variables such as blocking,

a BTFR metric included in [3]. As the amount of details

blur, activity, continuity or noise. The main advantages of this

that are noticed by an observer decreases with increasing

approach are twofold:

motion the activity measurement is adjusted if high motion

• The HVS is regarded as black box model and no attempt

is detected in the video.

to model any aspect of the HVS is needed. The ’black • Predictability: A predicted image is built by motion com-

box’ has some inputs (the fixed variables) and hopefully pensation using a simple block matching algorithm. The

the output (visual quality) can be somehow predicted using actual image and its prediction are then compared block

these input values. by block. A 8 × 8 block is considered to be noticeable

• The approach requires no previous assumptions about the

different if the SAD exceeds 384. To avoid that single

influence of the fixed variables. pixels dominate the SAD measurement both images are

filtered using first a Gaussian blur filter and a median

Feature Selection filtering afterward.

A set of simple no reference feature measurements was • Edge Continuity: The actual image and its motion com-

selected representing the most common kind of distortions pensated prediction are compared using the Edge-PSNR

namely blocking, blurriness and noise. One feature measurement algorithm as described in [4].

was added to measure the amount of detail present in the • Motion Continuity: Two motion vector fields are calculated:

encoded video. To take into account the time dimension of between the current and the previous frame and between


the following and the current frame. The percentage of motion vectors where the difference between the two corresponding motion vectors exceeds 5 pixels (either in x- or y-direction) determines the motion continuity.
• Color continuity: A color histogram with 51 bins for each RGB channel is calculated for the actual image and its prediction. Color continuity is then given as the linear correlation between those two histograms.

All feature measurements are done for each frame of the video separately and the mean value of all frames is then used for further processing.

The above selected measurements are just one example of a set of variables that can be used for building such a model. The presented variables were used for their simplicity. Using more complex measurements for artifacts like noise or blur may result in even more accurate models, as may adding measurements for artifacts not considered here (e.g. ringing). For this case only no reference feature measurements are considered; by including some feature measurements that require the original video, a RR or FR metric could be built. The nature of the multivariate calibration allows including an unrestricted number of fixed variables in the calibration step. If the calibration phase is done properly, fixed variables that do not contribute to the latent variable 'video quality' do not spoil the calibration process. The regression model will contain these useless fixed variables with zero (or very close to zero) weight and those variables then can be removed from the model.

The calibration step

Multivariate calibration is the method of learning to interpret a number of $k$ input sensory signals that contribute to a common output $y$. For the presented metric the input signals are the above mentioned feature measurements while the output would be the visual quality of the video. The data set used for calibrating (training) the model consisted of four different standard video test sequences (Bus, Football, Harbour, Mobile) at CIF resolution that were encoded according to AVC/H.264 at three (Bus, Harbour) and seven (Football, Mobile) different bit rates ranging from 96 kbps to 1024 kbps and with a frame rate of 15 or 30 fps. Different encoder settings concerning the number of B-Frames that were inserted (zero to two B-Frames), or the I-Frame periodicity (only one I-Frame or periodic I-Frames) were used. For each of the $l$ calibration sequences the selected feature values $f_{mi}$ ($m \in \{1 \cdots k\}$, $i \in \{1 \cdots l\}$) were computed. For reference, the $l \times k$ matrix containing the feature values is denoted as $F$.

Correcting the Features using MSC: As it is expected that the measured features are not free from multiplicative or additive effects (e.g. the measurement for noise may be correlated with and affected by the amount of details present in the video), a multiplicative signal correction (MSC) step is performed before starting the multivariate regression. MSC was originally developed to correct measurements in reflectance spectroscopy, but can also help in this context to remove multiplicative and additive effects between different objective features. The MSC corrected value of one feature $m$ for one sequence $i$ is calculated as follows:

$$f'_{mi} = c + f_{mi} \cdot d$$

The two variables $c$ and $d$ are obtained by simple linear regression of the feature values of the sequence $i$ compared to the average of the feature values of all calibration sequences. For a detailed description of MSC see chapter 7.4 in [18]. Consequently the matrix $F$ becomes $F'$ after MSC treatment.

Multivariate Regression with PLS: The obtained feature values $f'_{mi}$ are then used together with the corresponding subjective ratings $y_i$ that form the column vector $y$ to build a regression model using the method of Partial Least Squares Regression (PLSR). PLSR is an extension of the Principal Component Regression (PCR) that tries to find the principal components (PC) that are most relevant not only for the interpretation of the variation in the input values in $F$ but also for the variation in the output values $y$. So while the PCR is a bilinear regression method that consists of a Principal Component Analysis (PCA) of $F'$ into the matrix $T$ that contains the PCs of $F'$ followed by a regression of $y$ on $T$, for the PLSR the modeling of $F'$ and $y$ is done simultaneously to ensure that the PCs gained from $F'$ are relevant for $y$.

$F'$ can be modeled as:

$$F' = \bar{f} + T \cdot P^{T} + E_f$$

with $P$ being the loadings of the $k$ input features and $T$ being the scores of the $l$ input sequences. $\bar{f}$ represents the row vector of the mean values of the features and $E_f$ is the error in $F'$ that cannot be modeled.

Likewise $y$ can be modeled as:

$$y = \bar{y} + T \cdot Q^{T} + E_y$$

The prediction $\hat{y}$ can then be modeled as:

$$\hat{y}_i = b_0 + f'_i \cdot b$$

$b$ is the column vector of the single estimation weights $b_m$, $b_0$ is the model offset. A detailed description of PLSR can be found in chapter 3.5 of [18].

Prediction Correction using Additional Quality Information

The NR quality metric gained by the previous steps faces the problem that even the original video may contain a certain amount of blur or blocking, and different sequences not only have a different amount of details but also have different motion properties. For this reason the overall prediction accuracy of the model described so far is low. But plotting the predicted quality against the quality measured in subjective tests reveals that the prediction accuracy for each single sequence is very high: the data points for one single sequence lie on one straight line, only with unknown slope $s$ and unknown offset $o$. Figure 3 shows the prediction without the correction step: the dashed diagonal shows the required regression line. The regression lines for the three sequences Crew, Foreman and Husky have significantly different slopes and offsets. The overall prediction accuracy therefore can be improved by estimating the slope and the offset of these lines by calculating the predicted quality of the original video ($\hat{y}_{orig}$) and of a low quality version of the video ($\hat{y}_{low}$) using the same quality predictor.

While the original video is available and the subjective visual quality of this original is inherently given to be 1 on a 0 to 1 scale with a comparably small error only, an estimation of a low quality video can be produced by e.g. encoding the original with a low bit rate. Obviously the subjective visual quality of this low quality video can only be guessed (here set to 0.25). Including the predicted quality of the original video and the


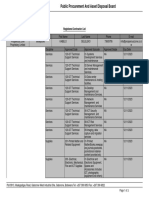

TABLE I

Encoded

Original Video

T EST S EQUENCES

Encoder Decoder

Video (unknown

quality)

Sequence Bit Rate (kbps) fps Conditions

Sequences used for calibration

Encoder 128,256 15fps IBP, only one I-Frame

Bus

Decoder

512 30fps IBBP, only one I-Frame

256, 512 15fps IBP, only one I-Frame

Football

1024 30fps IBBP, only one I-Frame

Feature Feature

Extraction

Low Quality

Extraction IBP, one I-Frame

Video Mobile 96, 192, 384, 768 15fps

every 2 seconds

IBP, one I-Frame

192 15fps

Feature every 1.2 seconds

Harbour

Extraction IBBP, one I-Frame

384, 750 30fps

every 1.2 seconds

Quality Model Quality Model Quality Model Sequences used for validation

y^orig y^low ^y City IBP, one I-Frame

192 15fps

Crew every 1.2 seconds

^y‘ ^y‘‘ Ice IBBP, one I-Frame

384, 750 30fps

every 1.2 seconds

Deadline

Fig. 2. Complete Prediction Model Paris 96, 192, 384, 768 15fps IPPP, only one I-Frame

Zoom

256, 512 15fps IBP, only one I-Frame

predicted quality of the low quality video, the NR model will Foreman

1024 30fps IBBP, only one I-Frame

become a RR model, even if the additional data that has to be IBP, one I-Frame

96, 192, 384, 768 15fps

every 2 seconds

send is very low (only two values per sequence). The corrected

Husky IBP, one I-Frame

prediction ybi0 is calculated as Tempete

96, 192, 384, 768 15fps

every 2 seconds

yi −o y −b

ylow

ybi0 = b with s = orig and o = yblow − 0.25 ∗ s .

b

s 1.0−0.25

Correcting Nonlinearities of Subjective Ratings • To minimize the contextual effect, which is known to affect

results in a single stimulus environment, every encoded

It is known, that at the extremes of the test range (very good

sequence was shown twice in the test and rated twice by

or very bad quality) subjective testing does have a nonlinear

each test subject. Like this also the ability of the viewers

quality rating and ratings do not reach the very extremes of the

to rate one video could be tested and outliers (indicated by

scale but are saturated before. For this reason it is proposed

two votes for the same sequence that differ to much) could

to slightly correct the prediction values yb0 using a sigmoid

be removed.

nonlinear correction. The general sigmoid function is given as

• Each test was preceded by an extensive training session to

0

yb00 = a/(1 + e(−(b

y −b)/c)

) train the subjects on the task of evaluating the video

• Each single test session did not last longer than 25 minutes

For the correction the following values were chosen: a = 1.0, and an adaptation phase of five sequences was set at the

b = 0.5, c = 0.2. The nonlinear sigmoid correction function is start of each test session (this was not undisclosed to the

shown in figure 4, it has to be noted, that the applied correction subjects).

function is very close to be linear over a wide quality range.

The 95% confidence intervals were below 0.04 on a 0 to 1 scale,

Figure 2 gives an overview over the presented prediction model.

which shows, that the results from the tests are very reliable.

Table I shows the sequences and bit rates used for these two

4. A R EDUCED R EFERENCE M ODEL FOR AVC/H.264

tests. Before building the model the data from those tests was

E NCODED V IDEO

split into two parts: only four out of 13 sequences were used for

Subjective Testing for Generating Ground Truth Data calibration of the metric, while the other nine sequences were

A reduced reference metric using the above described method used for the verification phase.

was built using data from two subjective tests that included

AVC/H.264 encoded video. Tests were done on video encoded The Regression Model

at CIF resolution and were performed according to the rules After applying a MSC on the calibration data, a very simple

given in ITU-R BT-500 [19]. This especially includes: regression model with only one PC can be built by applying a

• Room setup compliant to ITU-R BT-500 PLSR. The resulting weights bm of the objective features and

• To maintain a fixed viewing distance the video were the model offset b0 are given in Table II. The PLSR on the

displayed using a DLP projector, the viewing distance was matrix F0 revealed that the feature ’noise’ does not have an

set to four times the picture height influence on the model, therefore this feature was removed and

• SSIS (Single Stimulus Impairment Scale) evaluation using only the remaining seven features were taken into account.

a discrete impairment scale ranging from 0 to 10 (later

rescaled to 0 to 1) Correcting the Results of the Model

• All test sequences were evaluated by at least 20 naive view- The low quality video needed for the correction step was

ers (students who were not familiar with video coding or constructed by encoding the video using the AVC/H.264 stan-

video quality evaluation), all screened for visual accuracy dard with a high (fixed) quantization parameter (resulting in low

and color blindness quality). It has to be noted, that not only the coding parameters


for producing this low quality video differ quite significantly from those used to encode the videos under test, but also a different encoder has been used for this task.

TABLE II: WEIGHTS OF OBJECTIVE FEATURES

| Feature | Weight b |
|---|---|
| Activity | 0.036 |
| Blocking | -0.120 |
| Blur | -0.109 |
| Color Continuity | 0.054 |
| Edge Continuity | -0.072 |
| Motion Continuity | 0.090 |
| Predictability | 0.095 |
| b0 | 4.073 |

5. RESULTS

Verification was done on the sequences not used for the development of the presented RR quality metric. In total 36 data points were available spanning a quality range from 0.098 to 0.913. Besides PSNR two other FR metrics were calculated for the presented data. The Edge-PSNR metric [4] was chosen as one representative of the methods standardized in ITU-T J.144 [3]. The second FR metric chosen for comparison is the popular SSIM (Structural SIMilarity index) as presented by Wang in [5]. In addition to the classical Pearson Linear Correlation and Spearman Rank Order Correlation to express the ability of the model to correctly predict the visual quality, an outlier ratio is also given. A data point is considered to be an outlier if the difference between measured and predicted quality is more than 0.05 on a 0 to 1 scale (remember that the 95% confidence intervals from the tests were below 0.04). Note that for the outlier ratio no data fitting was applied for the proposed method while linear fitting was applied for the other three models. Linear data fitting has been chosen to fit the predicted values to the actual given data. While nonlinear fitting (logistic, sigmoid) is sometimes proposed for this purpose, higher order fitting always carries the danger of fitting the model too much to the actual data and possibly jeopardizing the ability to predict unknown data.

In addition the slope and offset of the regression line before linear data fitting are given in Table IV. This shows how much the model relies on a final fitting stage (information that is not given by the correlation measurement) and the ability to finally provide a correct and meaningful quality measurement, as without the knowledge of that line no prediction can be made. For a perfect model the slope of this regression line would be 1.0 with 0 offset. The problems of a regression line far different from the one of an optimal model can be shown easily for the SSIM metric: if the regression line that comes with the model is only a little bit different from the real data (e.g. assume the slope to be 0.16 with 0.8 offset instead of 0.173 and 0.821), the outlier ratio increases dramatically from 0.667 to 0.861. Detailed results of each metric are given in figures 5 to 8.

TABLE III: COMPARISON OF OBJECTIVE METRICS

| Model | Pearson Linear Correlation | Spearman Rank Order Correlation | Outlier Ratio |
|---|---|---|---|
| Proposed | 0.851 | 0.782 | 0.500 |
| PSNR | 0.690 | 0.623 | 0.833 |
| Edge-PSNR | 0.802 | 0.745 | 0.833 |
| SSIM | 0.763 | 0.623 | 0.667 |

TABLE IV: REGRESSION LINE BEFORE DATA FITTING

| Model | Slope | Offset |
|---|---|---|
| Proposed | 0.969 | 0.042 |
| PSNR* | 0.625 | 0.183 |
| Edge-PSNR | 0.353 | 0.348 |
| SSIM | 0.173 | 0.821 |

For easier comparison PSNR was rescaled to PSNR* = (PSNR-15)/30.

6. CONCLUSION

A reduced reference quality metric for AVC/H.264 was built using methods provided by multivariate data analysis. The metric was validated using results from carefully conducted subjective tests and no sequence used for calibration of the model was used during the verification phase. Besides providing a high prediction accuracy, the gained model allows a quality prediction by transmitting only two additional values, while most other reduced reference metrics need a much higher amount of additional data to be transmitted. Compared to FR metrics the presented RR metric also carries the advantage that no temporal or spatial alignment between the coded sequence and the original sequence has to be made. In fact the correction step delivers equally good results if the quality prediction for the original video and the low quality video is performed on a part of the video only.

REFERENCES

[1] ITU-T Rec. H.264 and ISO/IEC 14496-10 (MPEG4-AVC), Advanced Video Coding for Generic Audiovisual Services. v1, May 2003; v2, Jan. 2004; v3 (with FRExt), Sept. 2004; v4, Jul. 2005
[2] U. Benzler, M. Wien, Results of SVC CE3 (Quality Evaluation). ISO/IEC JTC1/SC29/WG11 M10931, Redmond, Jul. 2004
[3] ITU-T J.144, Objective perceptual video quality measurement techniques for digital cable television in the presence of a full reference. 2004
[4] C. Lee, S. Cho, J. Choe, T. Jeong, W. Ahn and E. Lee, Objective video quality assessment. SPIE Journal of Optical Engineering, Volume 45, Issue 1, Jan. 2006
[5] Z. Wang, L. Lu, A. C. Bovik, Video quality assessment using structural distortion measurement. ICIP 2002, International Conference on Image Processing, IEEE Proceedings on, 2002
[6] Z. Wang, A. C. Bovik, E. P. Simoncelli, Structural Approaches to Image Quality Assessment. Chapter 8.3 in Handbook of Image and Video Processing, 2nd Edition, Academic Press, 2005
[7] A. Shnayderman, A. Gusev, A. M. Eskicioglu, An SVD-based grayscale image quality measure for local and global assessment. Image Processing, IEEE Transactions on, Volume 15, Issue 2, Page(s):422 - 429, Feb. 2006
[8] G. Zhai, W. Zhang, X. Yang, S. Yao, Y. Xu, GES: a new image quality assessment metric based on energy features in Gabor transform domain. ISCAS 2006, Proceedings IEEE International Symposium on, Page(s):4 pp., 21-24 May 2006
[9] G. Zhai, W. Zhang, X. Yang, Y. Xu, Image quality assessment metrics based on multi-scale edge presentation. Signal Processing Systems Design and Implementation, 2005, IEEE Workshop on, Page(s):331 - 336, 2-4 Nov. 2005
[10] E. Ong, W. Lin, Z. Lu, S. Yao, Colour perceptual video quality metric. ICIP 2005, IEEE International Conference on Image Processing, Volume 3, Page(s):III - 1172-5, 11-14 Sept. 2005
[11] M. Carnec, P. Le Callet, D. Barba, Full reference and reduced reference metrics for image quality assessment. Seventh International Symposium on Signal Processing and Its Applications, Proceedings, Volume 1, Pages:477 - 480, 1-4 July 2003


[12] T. M. Kusuma, H.-J. Zepernick, M. Caldera, On the development of a reduced-reference perceptual image quality metric. Systems Communications, Proceedings, Pages:178 - 184, 14-17 Aug. 2005
[13] Z. Wang, E. P. Simoncelli, Reduced-Reference Image Quality Assessment using a Wavelet-Domain Natural Image Statistic Model. Human Vision and Electronic Imaging X, Proceedings, SPIE, vol. 5666, San Jose, CA, Jan. 2005
[14] Y. Fu-zheng, W. Xin-dai, C. Yi-lin, W. Shuai, A no-reference video quality assessment method based on digital watermark. Personal, Indoor and Mobile Radio Communications, 2003, 14th IEEE Proceedings on, Volume 3, Page(s):2707 - 2710, 7-10 Sept. 2003
[15] P. Le Callet, C. Viard-Gaudin, D. Barba, A Convolutional Neural Network Approach for Objective Video Quality Assessment. IEEE Transactions on Neural Networks, Volume 17, Issue 5, Page(s):1316 - 1327, Sept. 2006
[16] A. C. Bovik, S. Liu, DCT-domain blind measurement of blocking artifacts in DCT-coded images. International Conference on Acoustics, Speech, and Signal Processing, IEEE Proceedings on, Volume 3, Page(s):1725 - 1728, 07-11 May 2001
[17] S. Winkler, T. Ebrahimi, A no-reference perceptual blur metric. Proceedings of the International Conference on Image Processing, vol. 3, pp. 57–60, Rochester, NY, Sep. 22-25, 2002
[18] H. Martens, T. Naes, Multivariate Calibration. Wiley & Sons, 1992
[19] ITU-R Rec. BT.500-11, Methodology for the Subjective Assessment of Quality for Television Pictures. 2002

Fig. 3. Predicted vs. measured quality before slope and offset correction (plot title: NR quality metric; x-axis: Visual Quality; y-axis: Predicted Quality; series: Crew, Foreman, Husky)

Fig. 4. Sigmoid correction function (plot title: Sigmoid Quality Correction; x-axis: Visual Quality; y-axis: Predicted Quality)

Fig. 5. Proposed RR metric - no data fitting (x-axis: Visual Quality; y-axis: Predicted Quality)

Fig. 6. PSNR - linear data fitting (x-axis: Visual Quality; y-axis: Predicted Quality)

Fig. 7. Edge-PSNR - linear data fitting (x-axis: Visual Quality; y-axis: Predicted Quality)

Fig. 8. SSIM - linear data fitting (x-axis: Visual Quality; y-axis: Predicted Quality)

