
International Conference on Smart Data Intelligence (ICSMDI 2021)

Multi-class classification of thorax diseases using neural networks

Digvijay Desai, Shreyash Zanjal, Harish Chavan, Rushikesh Patil, Pravin Futane

Department of Information Technology, Vishwakarma Institute of Information Technology, Pune, 411048, India

E-mail address: {digvijay.21810440, shreyash.21810616, harish.21810663, rushikesh.21810558, pravin.futane}@viit.ac.in

ABSTRACT

Chest radiography (chest X-ray, CXR) is one of the most frequently performed radiology examinations, yet it is considered difficult to interpret. The increasing availability of imaging equipment has also increased the demand for highly trained staff. Doctors are generally good at reaching a diagnosis, but minor details can be overlooked. In this paper, a deep learning method is used to predict thorax disease categories from CXRs and their metadata, using a convolutional neural network (CNN) built on the MobileNets architecture. The large NIH (National Institutes of Health, Maryland) chest X-ray dataset is used for a multi-class image classification problem with 15 labels (14 thorax diseases and "No findings"). The model is intended as a sanity check, a second opinion that gives radiologists and doctors more confidence in their diagnoses. Its accuracy compares well with the current practice of diagnosis by radiologists: it classifies the different categories of thorax disease with 94.68% accuracy, which indicates that a model trained with a deep neural network has real-world application in the prediction of thorax diseases. The model's scope and accuracy could increase as more data is collected from other lab tests, clinical notes, or other scans.

Keywords: Neural networks, Multiclass classification, Chest x-ray, Medical image processing.

1. Introduction and motivation

India has one of the highest disease burdens in the world, and both the frequency of respiratory disease and its economic burden are rising [1]. Although the chest radiograph (CXR) is one of the most frequently performed radiology studies, it is also considered difficult to interpret [2].

The thorax, or chest, is the part of the body between the neck and the abdomen. It is protected and supported by the rib cage, the shoulder girdle and the spine. Diseases of this part of the body are called thorax diseases. They include pneumothorax, an abnormal collection of air in the space between the lung and the chest wall, and pneumonia, an inflammatory condition of the lungs that affects the small air sacs known as alveoli. The symptoms of pneumonia include cough, rapid or difficult breathing, fever and chest pain. These diseases can be identified from the patient's chest X-ray.

India today has nearly 10,000 practising radiologists. Because less expensive imaging equipment is now manufactured in the country, access to imaging has become easier, but the growing availability of equipment has also increased the demand for highly trained staff [3]. In some parts of India there is only one radiologist for every 100,000 people, compared with one for every 10,000 in the United States [4].

Within a medical division, specialist registrars (StRs) and consultants report CXRs with the highest accuracy and confidence, followed by core medical trainees (CMTs) and general practice specialty trainees (GPSTs) [5]. However, the error rate in radiological diagnosis is approximately 10–15%, and a 2001 review found that the rate of clinically significant errors in radiology ranged from 2% to 20% [6].

In this paper, deep learning methods are used to predict 14 thorax disease categories from CXRs and their metadata. Multi-class classification is the task of assigning each instance to one of three or more classes. Classifying the 14 diseases with softmax regression provides a sanity check, in the form of a second opinion, that can give radiologists more confidence in their diagnoses.

Electronic copy available at: https://ssrn.com/abstract=3852748

2. Related work

2.1. Detecting malaria with deep learning

Detecting malaria is an intensive manual process. It has been automated with deep learning, using a dataset of healthy and infected blood-smear images collected by the Lister Hill National Center for Biomedical Communications (LHNCBC), part of the National Library of Medicine (NLM) [7]. That work focused on a single disease, malaria, whereas this paper addresses a broader problem: the classification of 14 thorax diseases.

2.2. Predicting thorax diseases with NIH chest x-rays

Deep learning applied to X-ray images has been used to predict 14 categories of thorax disease on the same NIH dataset, with an F1 score of 71.8% [8]. That work cast the problem as a multi-class, multi-label image classification challenge, since one patient may have several diseases.

2.3. Predicting COVID-19 from chest x-ray images using deep transfer learning

Detecting COVID-19 from radiography images is one of the preferred and quickest ways to diagnose a patient. A publicly available dataset of 5,000 CXRs was used, in which a board-certified radiologist identified the X-rays showing COVID-19. ResNet50, ResNet18, DenseNet-121 and SqueezeNet were trained by transfer learning on a subset of 2,000 X-ray images.

2.4. Thoracic disease identification and localization with limited supervision

Accurate identification and localization of anomalies in X-ray images are important for treatment planning and clinical diagnosis. The model in this work achieves both tasks well using only a small amount of location annotation: it outputs both class and location information, and it significantly outperforms the reference baseline in both classification and localization. For multi-label classification (where several diseases can be identified in one chest X-ray), a binary classifier is defined for each disease type.

In this paper, the NIH dataset is cleaned and adapted for experimentation. The study focuses on presenting the probability distribution over all 14 diseases while making use of the data provided by NIH.

3. Problem statement

The objectives of our research on thorax disease are:

• Multi-class classification of 14 thorax diseases as an aid to radiologists' diagnosis.

• Visualizing patient traits together with the predicted diseases.

• Analysing the correlation between the diseases and the patients' traits.

4. Dataset

The NIH Chest X-ray dataset contains 112,120 CXRs with disease labels from 30,805 unique patients. It also records each patient's ID, gender and age, the follow-up visit number, the view position and other details, which are treated as the patient's traits in the data analysis [9].

The image labels were text-mined with natural language processing (NLP) from the radiology report associated with each CXR. The dataset authors state that the accuracy of this NLP labelling is above 90%.

There are 15 classes in the target distribution: 14 thorax diseases and one "No findings" label. Each image is therefore assigned either:

• "No findings", or

• the most probable disease.

Of the 112,120 X-ray images in the NIH dataset (label counts in Table 1), 91,312 are used after removing the multi-labelled images. This is necessary because the softmax activation produces a probability distribution over the 15 classes, which treats them as mutually exclusive with one target class per image; an X-ray carrying several labels has no single target class and so cannot be represented this way. The label counts after removing the multi-labelled images are shown in Table 2.
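The filtering step can be sketched in a few lines of Python. This is a minimal sketch, not the authors' code: it assumes the metadata layout of the public NIH release (a CSV file, assumed here to be `Data_Entry_2017.csv`, with an `Image Index` column and a `Finding Labels` column in which multiple findings are joined by `|`), and the sample rows are invented for illustration.

```python
import csv
import io

# Hypothetical excerpt in the assumed layout of the NIH metadata file
# (Data_Entry_2017.csv); these rows are illustrative, not real records.
SAMPLE_CSV = """Image Index,Finding Labels,Patient ID
00000001_000.png,Cardiomegaly,1
00000001_001.png,Cardiomegaly|Emphysema,1
00000002_000.png,No Finding,2
00000003_000.png,Hernia,3
00000003_001.png,Effusion|Infiltration|Mass,3
"""


def keep_single_label(rows):
    """Return only the rows that carry exactly one label.

    Multiple findings are assumed to be joined with '|', so a row whose
    'Finding Labels' contains no '|' has a single class (a disease or
    'No Finding') and can serve as a softmax target.
    """
    return [row for row in rows if "|" not in row["Finding Labels"]]


rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
single = keep_single_label(rows)
for row in single:
    print(row["Image Index"], row["Finding Labels"])
```

On the real metadata file the same filter would be applied to every row before the remaining images are loaded.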

Pneumonia, Fibrosis, Infiltration, Atelectasis, Edema, Consolidation, Nodule, Mass, Pneumothorax, Pleural thickening, Cardiomegaly, Effusion, Emphysema and Hernia are the 14 thorax disease categories to be predicted. Fig. 1(a) and Fig. 1(b) show examples of these diseases with their labels.


The original X-ray images in the NIH dataset are 1024×1024 pixels; they are resized to 128×128 pixels during pre-processing. Bad samples, i.e. images that are inverted, not frontal or badly rotated, are also removed from the dataset, because such samples can increase the prediction error.

Fig. 1 - (a), (b) Examples of the diseases mentioned above.

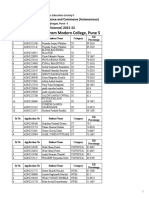

Table 1 – Data before pre-processing.

| Disease | No. of labels |
|---|---|
| Infiltration | 19894 |
| Atelectasis | 13317 |
| Effusion | 11559 |
| Nodule | 6331 |
| Pneumothorax | 5782 |
| Mass | 5302 |
| Consolidation | 4667 |
| Pleural Thickening | 3385 |
| Cardiomegaly | 2776 |
| Emphysema | 2516 |
| Fibrosis | 1686 |
| Edema | 2303 |
| Pneumonia | 1431 |
| Hernia | 227 |
| No Findings | 60353 |


Table 2 – Data after pre-processing.

| Disease | No. of labels |
|---|---|
| Infiltration | 9546 |
| Atelectasis | 4214 |
| Effusion | 3955 |
| Nodule | 2705 |
| Pneumothorax | 2193 |
| Mass | 2139 |
| Consolidation | 1310 |
| Pleural Thickening | 1126 |
| Cardiomegaly | 1093 |
| Emphysema | 892 |
| Fibrosis | 727 |
| Edema | 627 |
| Pneumonia | 322 |
| Hernia | 110 |
| No Findings | 60353 |
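The class balance after pre-processing can be read off Table 2 with a few lines of arithmetic. The counts below are copied from the table; they sum to the 91,312 images used, and "No Findings" makes up about 66% of them. That share is also roughly the accuracy a classifier that always predicted "No Findings" would reach on data with this class mix, which is a useful reference point when reading the accuracy results in Section 6.

```python
# Label counts copied from Table 2 (data after pre-processing).
TABLE_2 = {
    "Infiltration": 9546,
    "Atelectasis": 4214,
    "Effusion": 3955,
    "Nodule": 2705,
    "Pneumothorax": 2193,
    "Mass": 2139,
    "Consolidation": 1310,
    "Pleural Thickening": 1126,
    "Cardiomegaly": 1093,
    "Emphysema": 892,
    "Fibrosis": 727,
    "Edema": 627,
    "Pneumonia": 322,
    "Hernia": 110,
    "No Findings": 60353,
}

total = sum(TABLE_2.values())
# Fraction of all images that fall in each class.
shares = {label: count / total for label, count in TABLE_2.items()}

print(f"Total images: {total}")
for label, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{label:<20}{share:7.2%}")
```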

5. Method

To classify thorax diseases, a convolutional neural network (CNN) is used together with the MobileNets architecture, with softmax regression as the activation function of the final layer. Softmax regression distributes the probability of disease across the 15 categories. The MobileNets architecture forms the early layers, producing a lightweight deep CNN.

5.1. Convolutional neural network (CNN)

A CNN is a deep learning algorithm that takes images as input and learns to identify the feature sets in an image by training the weights and biases of its filters (kernels). These feature sets allow it to differentiate between images.

The CNN architecture, shown in Fig. 2, is comparable to the pattern of neurons in the human brain and is analogous to the organization of the visual cortex. There, each neuron responds to stimuli only in a limited region of the visual field, called its receptive field, and the receptive fields of different neurons overlap to cover the entire visual area.


Fig. 2 CNN architecture.

5.2. Steps involved in convolutional neural network

Step 1: Convolution

Convolution adds each pixel of the image to its local neighbours, weighted by a learnable filter or kernel. This extracts features from the image and reduces the network's dependence on individual neurons. Convolution, illustrated in Fig. 3, is a matrix operation but not matrix multiplication, even though it is similarly denoted by *.
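A minimal pure-Python sketch of the convolution step, for illustration only: it slides a 3×3 kernel over a small image with stride 1 and no padding ("valid" convolution). As in most deep learning libraries, the kernel is not flipped, so strictly speaking this computes a cross-correlation. The image and the edge-detecting kernel values are made up; in the CNN the kernel weights are learned.

```python
def conv2d_valid(image, kernel):
    """2-D 'valid' convolution as used in CNN layers (stride 1, no padding).

    Like most deep learning libraries, this computes a cross-correlation:
    the kernel is not flipped before it is slid over the image.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the pixel's local neighbourhood.
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


image = [
    [1, 2, 3, 0],
    [0, 1, 2, 3],
    [3, 0, 1, 2],
    [2, 3, 0, 1],
]
# A vertical-edge kernel (illustrative; in a CNN these weights are learned).
kernel = [
    [1, 0, -1],
    [1, 0, -1],
    [1, 0, -1],
]
print(conv2d_valid(image, kernel))  # [[-2, -2], [2, -2]]
```

A 4×4 input and a 3×3 kernel give a 2×2 feature map, which is why deeper layers see progressively smaller spatial sizes unless padding is used.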

Fig. 3 Convolution operation.

Step 2: Pooling

The pooling layer reduces the spatial size of the features extracted in the convolution step, which lowers the computational power needed for data processing. It also acts as an extra step for extracting the dominant features.

There are two types of pooling, shown in Fig. 4: i) max pooling and ii) average pooling.

Max pooling returns the maximum value in the region of the feature map covered by the pooling window.

Average pooling returns the average of all the values in the region of the feature map covered by the pooling window.
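Both pooling types can be sketched with one helper. The 2×2 window, stride 2 and feature-map values below are illustrative choices, not settings taken from the paper.

```python
def _mean(values):
    """Arithmetic mean of a non-empty list."""
    return sum(values) / len(values)


REDUCERS = {"max": max, "average": _mean}


def pool2d(feature_map, size=2, stride=2, mode="max"):
    """Slide a size x size window with the given stride and reduce each window.

    mode="max" keeps the largest value (max pooling); mode="average" keeps
    the mean (average pooling). Windows that would run past the edge are skipped.
    """
    reduce_fn = REDUCERS[mode]
    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    for i in range(0, rows - size + 1, stride):
        pooled_row = []
        for j in range(0, cols - size + 1, stride):
            window = [feature_map[i + di][j + dj]
                      for di in range(size) for dj in range(size)]
            pooled_row.append(reduce_fn(window))
        pooled.append(pooled_row)
    return pooled


fmap = [
    [1, 3, 2, 4],
    [5, 6, 1, 2],
    [7, 2, 9, 0],
    [1, 8, 3, 4],
]
print(pool2d(fmap, mode="max"))      # [[6, 4], [8, 9]]
print(pool2d(fmap, mode="average"))  # [[3.75, 2.25], [4.5, 4.0]]
```

With a 2×2 window and stride 2, each pooling layer halves both spatial dimensions, so the number of values passed on drops by a factor of four.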


Fig. 4 Types of pooling.

Step 3: Full connection

The convolution layers output high-level features. Adding a fully connected layer, shown in Fig. 5, is a cheap way to learn non-linear combinations of these features, so the fully connected layer is responsible for learning a non-linear function.

Fig. 5 Fully connected layer.

Step 4: Flattening


Flattening converts the output of the convolutional part of the network into the input of a multilayer perceptron (MLP): the feature maps are flattened into a single column vector, as shown in Fig. 5, which is then fed to a feed-forward neural network. Training runs for a number of epochs, applying the standard backpropagation algorithm at every iteration. The trained model can then identify both low-level and high-level dominant features in images and determine their influence on individual neurons.
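In a typical CNN the flattening step comes first and its output feeds the fully connected layer. The sketch below shows both in that order: two small feature maps are flattened into one column vector and passed through a dense layer. The weights, biases and the choice of ReLU as the activation are illustrative assumptions; the paper does not state the activation of its hidden dense layer.

```python
def flatten(feature_maps):
    """Concatenate a list of 2-D feature maps, row by row, into one vector."""
    return [value for fmap in feature_maps for row in fmap for value in row]


def dense(x, weights, biases, activation=None):
    """Fully connected layer: y_k = activation(sum_i W[k][i] * x[i] + b[k])."""
    outputs = [sum(w * xi for w, xi in zip(w_row, x)) + b
               for w_row, b in zip(weights, biases)]
    if activation is not None:
        outputs = [activation(v) for v in outputs]
    return outputs


def relu(v):
    """Rectified linear unit (assumed activation for this illustration)."""
    return max(0.0, v)


# Two 2x2 feature maps, e.g. the output of a pooling layer.
maps = [[[1.0, 2.0], [3.0, 4.0]], [[0.5, 0.0], [1.0, 2.0]]]
x = flatten(maps)            # 8-element column vector
W = [[0.1] * 8, [-0.2] * 8]  # illustrative weights for 2 hidden units
b = [0.0, 1.0]
hidden = dense(x, W, b, activation=relu)
print(x)
print(hidden)
```

Every input value is connected to every unit, so a dense layer on a large flattened vector holds many parameters; this is one reason the proposed model shrinks the images to 128×128 first.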

5.3. Softmax regression

Softmax regression (or multinomial logistic regression) is a generalization of logistic regression. Logistic regression can classify only binary labels, i.e. $y^{(i)} \in \{0, 1\}$, whereas softmax regression handles multiple classes [27], i.e. labels $y^{(i)} \in \{1, \ldots, K\}$, where $K$ is the number of classes [28]. A score vector $p \in \mathbb{R}^{15}$ is obtained through the matrix product $p = Wx$, where $x$ is the input data and $W \in \mathbb{R}^{15 \times 1024^2}$, and the softmax function turns these scores into class probabilities. The weight matrix $W$ is written as

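$$
W = \begin{bmatrix} - & \theta_1^T & - \\ - & \theta_2^T & - \\ - & \theta_3^T & - \\ & \vdots & \\ - & \theta_{15}^T & - \end{bmatrix} \qquad (1)
$$

In equation (1), the $\theta_j$ are the parameters.

$W$ was calculated by optimizing the following cost function:

$$
J(W) = -\frac{1}{m} \sum_{i=1}^{m} \sum_{j=1}^{15} 1\{y^{(i)} = j\} \log \frac{\exp\left(\theta_j^T x^{(i)}\right)}{\sum_{l=1}^{15} \exp\left(\theta_l^T x^{(i)}\right)} \qquad (2)
$$

Gradient descent uses the gradient with respect to each $\theta_j$:

$$
\nabla_{\theta_j} J(W) = -\frac{1}{m} \sum_{i=1}^{m} x^{(i)} \left( 1\{y^{(i)} = j\} - p\left(y^{(i)} = j \mid x^{(i)}; W\right) \right) \qquad (3)
$$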
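Equations (2) and (3) can be checked with a small pure-Python sketch. It computes the softmax probabilities from $p = Wx$, the cost $J(W)$ and the gradient for each $\theta_j$, then applies plain gradient descent. The toy data, the learning rate and the 0-based class indices are illustrative assumptions; in the proposed model the softmax is the final Keras layer and its weights are learned by backpropagation through the whole network rather than by this standalone loop.

```python
import math


def softmax(scores):
    """Turn a score vector p = W x into class probabilities that sum to 1."""
    top = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(W, x):
    """Compute p = W x for a K x n matrix W (rows are theta_j^T) and a length-n x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def cost_and_gradient(W, X, y):
    """Return J(W) from Eq. (2) and the gradient for every theta_j from Eq. (3).

    X holds m input vectors; y holds their class indices, 0-based here
    (0..K-1) rather than the 1..K used in the text.
    """
    K, n, m = len(W), len(W[0]), len(X)
    cost = 0.0
    grad = [[0.0] * n for _ in range(K)]
    for x, label in zip(X, y):
        probs = softmax(matvec(W, x))
        cost -= math.log(probs[label]) / m  # only the true class survives 1{y = j}
        for j in range(K):
            indicator = 1.0 if j == label else 0.0
            coeff = -(indicator - probs[j]) / m
            for f in range(n):
                grad[j][f] += coeff * x[f]
    return cost, grad


def gradient_step(W, grad, lr):
    """One gradient-descent update: theta_j <- theta_j - lr * grad_j."""
    return [[w - lr * g for w, g in zip(w_row, g_row)]
            for w_row, g_row in zip(W, grad)]


# Toy problem: K = 3 classes and n = 2 features (the paper uses K = 15).
X = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
y = [0, 1, 2]
W = [[0.0, 0.0] for _ in range(3)]

cost0, _ = cost_and_gradient(W, X, y)
for _ in range(200):
    _, grad = cost_and_gradient(W, X, y)
    W = gradient_step(W, grad, lr=0.5)
cost_final, _ = cost_and_gradient(W, X, y)
print(f"J(W) before: {cost0:.4f}, after: {cost_final:.4f}")
```

With all weights at zero every class gets probability 1/K, so the starting cost is $\log K$ ($\log 3$ here, $\log 15$ for the paper's 15 classes); training drives it down.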

5.4. MobileNet architecture

The MobileNet architecture is used to build a lightweight deep CNN. The publicly released MobileNet models are trained on the ImageNet dataset, which contains images from 1,000 classes. MobileNets also have two simple global hyper-parameters, the width multiplier and the resolution multiplier, which trade off latency against accuracy and allow the model builder to choose a model of the right size for the application.
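Where the lightweight design comes from: the MobileNets paper (Howard et al., 2017) builds the network from depthwise separable convolutions, a k×k depthwise convolution applied to each input channel separately followed by a 1×1 pointwise convolution, and its width multiplier α thins every layer by scaling the channel counts. The arithmetic below compares weight counts for a single layer; the 3×3 kernel and 32→64 channel sizes are illustrative, and biases are ignored.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights in a standard k x k convolution (biases ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """Depthwise k x k conv (one filter per input channel) + 1 x 1 pointwise conv."""
    return k * k * c_in + c_in * c_out


def scaled(channels, alpha):
    """Apply the MobileNet width multiplier alpha to a channel count."""
    return int(channels * alpha)


# Illustrative layer: 3 x 3 kernel, 32 input and 64 output channels.
for alpha in (1.0, 0.5):
    c_in, c_out = scaled(32, alpha), scaled(64, alpha)
    std = standard_conv_params(3, c_in, c_out)
    sep = depthwise_separable_params(3, c_in, c_out)
    print(f"alpha={alpha}: standard={std}, separable={sep}, ratio={sep / std:.3f}")
```

The separable layer needs $1/c_{out} + 1/k^2$ of the standard layer's weights, roughly an eighth here, and the width multiplier shrinks both counts further.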

Before training, the 1024×1024 greyscale X-rays were downscaled to 128×128 pixels. This reduces the computational power required and so speeds up training.

Fig. 6 shows the final architecture of the neural network.


Fig. 6 Final model architecture.

5.5. Data pre-processing

Before training, the data is pre-processed to increase the model's accuracy and to reduce the computational power required. The pre-processing steps are:

• Reading the images: the path to the image dataset is stored in a variable, and a function loads the folders containing the images into arrays.

• Resizing the images: the original images are 1024×1024 pixels, which requires a lot of computational power to process, so they are reduced to 128×128 pixels. The resized images are then denoised to improve accuracy during training.

• Data augmentation: the number of images is increased by making slight changes to them, such as slight rotation, flipping and cropping. The larger dataset helps to increase the accuracy of the final trained model.
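The resizing and flipping steps can be sketched in pure Python. The paper does not name the resizing method or library, so this sketch assumes block averaging (area downsampling), which fits here because 1024 divides evenly by 8; a real pipeline would more likely use an image library such as Pillow or OpenCV. The denoising step is not shown because the paper does not name the method, and the 16×16 gradient image is a synthetic stand-in for a radiograph.

```python
def downsample_mean(image, factor):
    """Shrink a 2-D greyscale image by averaging non-overlapping factor x factor blocks.

    1024 x 1024 -> 128 x 128 corresponds to factor = 8.
    """
    h, w = len(image), len(image[0])
    if h % factor or w % factor:
        raise ValueError("image size must be divisible by factor")
    out = []
    for i in range(0, h, factor):
        row = []
        for j in range(0, w, factor):
            block = [image[i + di][j + dj]
                     for di in range(factor) for dj in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def flip_horizontal(image):
    """Mirror an image left-to-right, one of the augmentations listed above."""
    return [list(reversed(row)) for row in image]


# A synthetic 16 x 16 gradient image stands in for a real radiograph.
img = [[float(c) for c in range(16)] for _ in range(16)]
small = downsample_mean(img, factor=8)  # 16 x 16 -> 2 x 2
print(small)                            # [[3.5, 11.5], [3.5, 11.5]]
print(flip_horizontal(small))           # [[11.5, 3.5], [11.5, 3.5]]
```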

6. Results and experiments

6.1. Quantitative results

The data is split into training, validation and test sets of 72%, 8% and 20% respectively. Accuracy was used as the evaluation metric, and the overall precision, recall and F1 score were also calculated, as shown in Table 4. The results show that the neural network performed well at predicting thorax diseases.
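The paper does not say how the split was drawn. One way to obtain the stated proportions, sketched below under that assumption, is to hold out 20% of the images for testing and then 10% of the remaining 80% for validation (0.1 × 80% = 8%). The seed is arbitrary. Because the NIH data contains several images per patient, splitting by patient ID rather than by image index would keep the same patient out of both the training and test sets.

```python
import random


def split_indices(n, test_frac=0.2, val_frac_of_rest=0.1, seed=42):
    """Shuffle n sample indices and split them into train/validation/test.

    Holding out 20% for test and then 10% of the remaining 80% for
    validation gives the 72% / 8% / 20% proportions reported above.
    """
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    n_test = int(n * test_frac)
    test, rest = indices[:n_test], indices[n_test:]
    n_val = int(len(rest) * val_frac_of_rest)
    val, train = rest[:n_val], rest[n_val:]
    return train, val, test


train, val, test = split_indices(91_312)
print(len(train), len(val), len(test))  # 65745 7305 18262
```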

Precision = TP / (TP + FP) (4)

Recall = TP / (TP + FN) (5)

where TP (true positives) is the number of correctly predicted positive observations, TP + FP is the total number of predicted positive observations, and TP + FN is the total number of observations in the actual positive class.

The F1 score is the harmonic mean of precision and recall, so both false positives and false negatives are taken into account.

F1 Score = 2(Recall × Precision) / (Recall + Precision) (6)
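Equations (4) to (6), together with the F-beta score reported in Table 4, can be written as small functions. The general F-beta formula, $(1 + \beta^2) P R / (\beta^2 P + R)$, reduces to Eq. (6) at $\beta = 1$ and weights precision more heavily at $\beta = 0.5$. Plugging in the validation precision (0.70) and recall (0.57) from Table 4 reproduces that row's F1 (0.63) and F-beta (0.67) to two decimals. The per-class counts in the last lines are invented, and the paper does not state how per-class scores were averaged across the 15 classes.

```python
def precision(tp, fp):
    """Eq. (4): fraction of predicted positives that are correct."""
    return tp / (tp + fp)


def recall(tp, fn):
    """Eq. (5): fraction of actual positives that are found."""
    return tp / (tp + fn)


def f_beta(p, r, beta=1.0):
    """F-beta score; beta = 1 gives F1 (Eq. 6), beta = 0.5 favours precision."""
    b2 = beta * beta
    return (1 + b2) * p * r / (b2 * p + r)


# Precision and recall from the validation row of Table 4.
p_val, r_val = 0.70, 0.57
print(f"F1   = {f_beta(p_val, r_val):.2f}")            # F1   = 0.63
print(f"F0.5 = {f_beta(p_val, r_val, beta=0.5):.2f}")  # F0.5 = 0.67

# Illustrative counts for a single class.
tp, fp, fn = 8, 2, 4
print(precision(tp, fp), recall(tp, fn))
```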

Overall accuracy was 94.68%, as shown in Table 3, which compares well with previous work with similar aims. The precision achieved is 0.69, as shown in Table 4, which is somewhat low. This is because the model has 15 classes in total; taking this into consideration, the precision achieved is respectable. The overall F1 score is 61.5% and the F-beta score at β = 0.5 is 66%, which is reasonable by medical standards. The labels used for training were extracted by NLP with an accuracy above 90%, so up to about 10% of the labels in the dataset may be inaccurate, and these also affect the model's performance. If the inaccurate labels fall mostly on the disease classes, precision and recall drop sharply, so finding and correcting them might improve the results of the proposed model. In addition, some diseases have very few examples for testing: Fibrosis, Edema, Pneumonia and Hernia each have fewer than 1,000 examples in the pre-processed dataset (Table 2), which makes good results harder to obtain. Increasing the number of images for these diseases should also help to improve the results.

Table 3 – Accuracy on each data split.

| Data | Accuracy (%) |
|---|---|
| Train | 95.28 |
| Validation | 95.48 |
| Test | 94.87 |

Table 4 – Results.

| Data | Precision | Recall | F1 score | F-beta score (β = 0.5) |
|---|---|---|---|---|
| Train | 0.68 | 0.55 | 0.60 | 0.65 |
| Validation | 0.70 | 0.57 | 0.63 | 0.67 |


6.2. Model analysis

As shown in Fig. 6, the first layer is the MobileNet network, used without any pretrained weights.

The second layer is a pooling layer, which reduces the dimensions of the feature set produced by the convolution step.

The third layer is a dropout layer with a dropout probability of 0.5, which reduces overfitting.

The fourth layer is a dense layer with 512 units, again followed by dropout with a probability of 0.5.

The final layer has 15 units, corresponding to the 15 classes, with softmax as the activation function.

The model was built with Keras, an open-source library written in Python for building deep learning models.

Fig. 7 summarizes the proposed model; its "Param" column gives the number of parameters in each layer.

Fig. 7 Synopsis of architecture of the proposed model.
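The parameter counts of the layers on top of the backbone can be checked by hand. This sketch rests on assumptions the paper does not state: that the backbone is MobileNet v1 with width multiplier 1.0, whose last feature map has 1,024 channels, and that the pooling layer is global average pooling, giving a 1,024-element feature vector. Under those assumptions only the two dense layers carry parameters; the MobileNet backbone's own parameters are not counted here, and Fig. 7 gives the authoritative figures.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected (Dense) layer."""
    return n_in * n_out + n_out


# Assumption: MobileNet (v1, width multiplier 1.0) ends in 1,024 channels, and
# the pooling layer is global average pooling, giving a 1,024-element vector.
FEATURES = 1024

head = [
    ("global average pooling", 0),
    ("dropout (p = 0.5)", 0),
    ("dense, 512 units", dense_params(FEATURES, 512)),
    ("dropout (p = 0.5)", 0),
    ("dense, 15 units, softmax", dense_params(512, 15)),
]
for name, params in head:
    print(f"{name:<28}{params:>9,}")
print(f"{'classification head total':<28}{sum(p for _, p in head):>9,}")
```

Pooling and dropout layers have no trainable weights, so almost all of the head's parameters sit in the 512-unit dense layer.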

6.3. Future works

Future work will focus on improving the model's results by enlarging the dataset, in particular by obtaining more images of the diseases that are under-represented in it. The number of diseases the proposed model can predict, currently 14 thorax diseases, will also be increased.

7. Conclusion

This model opens new possibilities for thorax disease prediction from CXRs. Its accuracy compares well with the current practice of diagnosis by radiologists: it classifies the different categories of thorax disease with 94.68% accuracy, which indicates that a model trained with a deep neural network has real-world application in the prediction of thorax diseases. The model's scope and accuracy could increase as more data is collected from other lab tests, clinical notes, or other scans, and the analysis would improve if more patient traits were correlated with the diseases found. Further experiments with image resolution and custom network architectures may also improve pre-processing. The model can provide a sanity check, in the form of a second opinion, that gives radiologists more confidence in their diagnoses. Its results can be improved by increasing the size of the training dataset; future work will focus on this and on increasing the number of diseases the proposed model can predict.

Acknowledgements

This research did not receive any specific grant from any funding agencies in the public, commercial, or not-for-profit sectors.

REFERENCES

[1] Salvi, S., Apte, K., Madas, S., Barne, M., Chhowala, S., Sethi, T., Aggarwal, K., Agrawal, A., & Gogtay, J. (2015). Symptoms and medical conditions in 204 912 patients visiting primary health-care practitioners in India: a 1-day point prevalence study (the POSEIDON study). The Lancet Global Health, 3, 776–784.


[2] Kanne, J. P., Thoongsuwan, N., & Stern, E. J. (2005). Common Errors and Pitfalls in Interpretation of the Adult Chest Radiograph. Clinical Pulmonary Medicine, 12(2).

[3] Arora, R. (2014). The training and practice of radiology in India: current trends. Quantitative Imaging in Medicine and Surgery, 4(6), 449–450.

[4] Satia, I., Bashagha, S., Bibi, A., Ahmed, R., Mellor, S., & Zaman, F. (2013). Assessing the accuracy and certainty in interpreting chest X-rays in the medical division. Clinical Medicine (London, England), 13(4), 349–352.

[5] Zhou, Y., Boyd, L., & Lawson, C. (2015). Errors in Medical Imaging and Radiography Practice: A Systematic Review. Journal of Medical Imaging and Radiation Sciences, 46(4), 435–441. https://doi.org/10.1016/j.jmir.2015.09.002

[6] Wang, X., Peng, Y., Lu, L., Lu, Z., Bagheri, M., & Summers, R. M. (2017). ChestX-Ray8: Hospital-Scale Chest X-Ray Database and Benchmarks on Weakly-Supervised Classification and Localization of Common Thorax Diseases. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 3462–3471.

[7] Minaee, S., Kafieh, R., Sonka, M., Yazdani, S., & Jamalipour Soufi, G. (2020). Deep-COVID: Predicting COVID-19 from chest X-ray images using deep transfer learning. Medical Image Analysis, 65, 101794. https://doi.org/10.1016/j.media.2020.101794

[8] Li, Z., Wang, C., Han, M., Xue, Y., Wei, W., Li, L., & Fei-Fei, L. (2018). Thoracic Disease Identification and Localization with Limited Supervision. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 8290–8299.

[9] Ausawalaithong, W., Thirach, A., Marukatat, S., & Wilaiprasitporn, T. (2018). Automatic Lung Cancer Prediction from Chest X-ray Images Using the Deep Learning Approach. 2018 11th Biomedical Engineering International Conference (BMEiCON), 1–5.

[10] Dargan, S., Kumar, M., Ayyagari, M. R., & Kumar, G. (2020). A Survey of Deep Learning and Its Applications: A New Paradigm to Machine Learning. Archives of Computational Methods in Engineering, 27(4), 1071–1092.

[11] Bhattacharya, S., Reddy Maddikunta, P. K., Pham, Q.-V., Gadekallu, T. R., Krishnan S, S. R., Chowdhary, C. L., Alazab, M., & Jalil Piran, M. (2021). Deep learning and medical image processing for coronavirus (COVID-19) pandemic: A survey. Sustainable Cities and Society, 65, 102589.

[12] Le, W., Maleki, F., Perdigón Romero, F., Forghani, R., & Kadoury, S. (2020). Overview of Machine Learning: Part 2 Deep Learning for Medical Image Analysis. Neuroimaging Clinics of North America, 30.

[13] Jain, R., Nagrath, P., Kataria, G., Sirish Kaushik, V., & Jude Hemanth, D. (2020). Pneumonia detection in chest X-ray images using convolutional neural networks and transfer learning. Measurement, 165, 108046.

[14] Keller, J. M., Liu, D., & Fogel, D. B. (2016). Multilayer Neural Networks and Backpropagation. In Fundamentals of Computational Intelligence: Neural Networks, Fuzzy Systems, and Evolutionary Computation (pp. 35–60). IEEE.

[15] Deng, L., & Yu, D. (2014). Deep Learning: Methods and Applications. In Deep Learning: Methods and Applications. now.

[16] Pham, T. D. (2020). Classification of COVID-19 chest X-rays with deep learning: new models or fine tuning? Health Information Science and Systems, 9(1), 2.

[17] Nakasi, R., Mwebaze, E., Zawedde, A., Tusubira, J., Akera, B., & Maiga, G. (2020). A new approach for microscopic diagnosis of malaria parasites in thick blood smears using pre-trained deep learning models. SN Applied Sciences, 2(7), 1255.

[18] Sriporn, K., Tsai, C.-F., Tsai, C.-E., & Wang, P. (2020). Analyzing Malaria Disease Using Effective Deep Learning Approach. Diagnostics, 10(10).

[19] Lai, L., Cai, S., Huang, L., Zhou, H., & Xie, L. (2020). Computer-aided diagnosis of pectus excavatum using CT images and deep learning methods. Scientific Reports, 10(1), 20294.

[20] Hashmi, M. F., Katiyar, S., Keskar, A. G., Bokde, N. D., & Geem, Z. W. (2020). Efficient Pneumonia Detection in Chest Xray Images Using Deep Transfer Learning. Diagnostics, 10(6).

[21] Tanzi, L., Vezzetti, E., Moreno, R., & Moos, S. (2020). X-Ray Bone Fracture Classification Using Deep Learning: A Baseline for Designing a Reliable Approach. Applied Sciences, 10, 1507.

[22] Sori, W. J., Feng, J., Godana, A. W., Liu, S., & Gelmecha, D. J. (2020). DFD-Net: lung cancer detection from denoised CT scan image using deep learning. Frontiers of Computer Science, 15(2), 152701.

[23] Radiology Diagnostic Errors Are Surprisingly High, https://zaggocare.org/radiology-diagnostic-errors-surprisingly-high/

[24] Detecting Malaria with Deep Learning, https://opensource.com/article/19/4/detecting-malaria-deep-learning

[25] Predicting Thorax Diseases with NIH Chest X-Rays, http://cs229.stanford.edu/

[26] NIH Clinical Center provides one of the largest publicly available chest x-ray datasets to scientific community, https://www.nih.gov/news-events/news-releases/

[27] Multiclass classification, https://en.wikipedia.org/wiki/Multiclass_classification

[28] Softmax Regression, http://deeplearning.stanford.edu/tutorial/supervised/SoftmaxRegression/

[29] India has the CT and MRI machines, but not enough technicians to run them, https://globalhealthi.com/2017/04/20/medical-imaging-india/


You might also like

- Unveiling The Precision of CheXNeXt Algorithm Against Radiologist Expertise in Chest Radiograph Pathology Detection
- Deep Learning Based Automated Chest X-Ray Abnormalities Detection
- Final Revision of Octane Paper For Nature Medicine
- Project Report On Primitive Diagnosis of Respiratory Diseases - Google Docs1
- 2004 06578 PDF
- Refining Dataset Curation Methods For Deep Learnin
- 1 s2.0 S1532046420302768 Main
- 1 s2.0 S0957417422016372 Main
- Respiratory System GROUP 9-1
- Algorithmic Precision in Chest Radiograph Interpretation
- Applied Sciences: A Novel Transfer Learning Based Approach For Pneumonia Detection in Chest X-Ray Images
- Disease Prediction Using Machine Learning Algorithms KNN and CNN
- Healthcare 11 00207 v2
- Comparative Analysis of Deep Learning Convolutional Neural Networks Based On Transfer Learning For Pneumonia Detection
- Anapub Paper Template
- Comparison of Deep Learning Algorithms For Pneumonia Detection
- 1 s2.0 S0010482523001488 Main
- Ies50839 2020 9231540
- Pneumonia Detection: Department of CSE, NIT
- Proposwal 1
- Lung Tumor Localization and Visualization in Chest
- Diagnosis of Pneumonia From Chest X-Ray Images Using Deep Learning
- Prediction of Pneumonia Using CNN
- Journalnx Data
- Campen Ella
- DOI Problem 2
- Identifying Drug-Resistant Tuberculosis in Chest Radiographs Evaluation of CNN Architectures and Training Strategies
- Automatic Detection of Pneumonia On Compressed Sensing Images Using Deep Learning
- Smart Medical Diagnosis System
- Chest X-Ray Outlier Detection Model Using Dimension Reduction and Edge Detection
- The Reliability of Convolution Neural Networks Diagnosing Malignancy in Mammography
- Paper2 15pages
- Pneumonia Detection Using Convolutional Neural Networks (CNNS)
- Deep Convolutional Neural Networks in Thyroid Disease Detection-Full Text
- A Survey Paper On Pneumonia Detection in Chest X-Ray Images Using An Ensemble of Deep Learning
- Scrutiny: A On COVID-19 Detection Using Convolutional Neural Network and Image Processing
- A Multi-Plant Disease Diagnosis Method Using Convolutional Neural Network
- Detecting Tuberculosis in Chest X-Ray Images Using
- AI Radiology Dec Support System
- Lung Disease Prediction System Using Data Mining Techniques
- Novel Deep Learning Architecture For Heart Disease Prediction Using Convolutional Neural Network
- Radiologist Observations of Computed Tomography (CT) Images Predict Treatment Outcome in TB Portals, A Real-World Database of Tuberculosis (TB) Cases

- Healthcare 10 02335 v2Document23 pagesHealthcare 10 02335 v2alamin ridoyNo ratings yet

- SirishKaushik2020 Chapter PneumoniaDetectionUsingConvoluDocument14 pagesSirishKaushik2020 Chapter PneumoniaDetectionUsingConvoluMatiqul IslamNo ratings yet

- Ureta 2021 J. Phys. Conf. Ser. 2071 012001Document9 pagesUreta 2021 J. Phys. Conf. Ser. 2071 012001Manya K MNo ratings yet

- Retina Full DocumentDocument34 pagesRetina Full DocumentThe MindNo ratings yet

- 1 s2.0 S1532046420302550 MainDocument17 pages1 s2.0 S1532046420302550 MainSyamkumarDuggiralaNo ratings yet

- TB Detection in Chest Radiograph Using Deep Learning ArchitectureDocument12 pagesTB Detection in Chest Radiograph Using Deep Learning ArchitectureYazid BastomiNo ratings yet

- TB Detection in Chest Radiograph Using Deep Learning ArchitectureDocument12 pagesTB Detection in Chest Radiograph Using Deep Learning ArchitectureYazid BastomiNo ratings yet

- 19MCB0010 Paper - 130321Document6 pages19MCB0010 Paper - 130321Godwin EmmanuelNo ratings yet

- Covid 19Document18 pagesCovid 19AISHWARYA PANDITNo ratings yet

- Document3 TexDocument4 pagesDocument3 TexNavin M ANo ratings yet

- The Role of Radiology in Diagnostic Error: A Medical Malpractice Claims ReviewDocument7 pagesThe Role of Radiology in Diagnostic Error: A Medical Malpractice Claims ReviewEva HikmahNo ratings yet

- TelemedicineDocument9 pagesTelemedicineAna CaballeroNo ratings yet

- Recent Trends in Disease Diagnosis Using Soft Computing Techniques: A ReviewDocument13 pagesRecent Trends in Disease Diagnosis Using Soft Computing Techniques: A ReviewVanathi AndiranNo ratings yet

- RetrieveDocument13 pagesRetrieveNEUMOLOGIA TLAHUACNo ratings yet

- MIMIC-CXR, A De-Identified Publicly Available Database of Chest Radiographs With Free-Text ReportsDocument8 pagesMIMIC-CXR, A De-Identified Publicly Available Database of Chest Radiographs With Free-Text ReportsIsmii NhurHidayatieNo ratings yet

- Handwritten Pattern Recognition For Early Parkinson's Disease Diagnosis - Bernardo2019Document7 pagesHandwritten Pattern Recognition For Early Parkinson's Disease Diagnosis - Bernardo2019DrHellenNo ratings yet

- Research ArticleDocument15 pagesResearch ArticleShanviNo ratings yet

- Ai Cie3Document2 pagesAi Cie3KIRAN SURYAVANSHINo ratings yet

- BCA Second Round Allotment ListDocument3 pagesBCA Second Round Allotment ListKIRAN SURYAVANSHINo ratings yet

- AI Research Paper UpdatedDocument6 pagesAI Research Paper UpdatedKIRAN SURYAVANSHINo ratings yet

- BCA Science Merit List From Modern CollegeDocument3 pagesBCA Science Merit List From Modern CollegeKIRAN SURYAVANSHINo ratings yet

- Offer Letter - YI 2Document4 pagesOffer Letter - YI 2KIRAN SURYAVANSHINo ratings yet

- Water Properties Experiment: Universal SolventDocument2 pagesWater Properties Experiment: Universal SolventKIRAN SURYAVANSHINo ratings yet

- S24Document17 pagesS24Chinny FortalezaNo ratings yet

- Cec Committee Ty (A.Y 2021-22) : Vishwakarma Institute of Information TechnologyDocument2 pagesCec Committee Ty (A.Y 2021-22) : Vishwakarma Institute of Information TechnologyKIRAN SURYAVANSHINo ratings yet

- Global Software Development: by Logan ThiemDocument24 pagesGlobal Software Development: by Logan ThiemKIRAN SURYAVANSHINo ratings yet

- SCT 1st 3 Clusters 2022Document9 pagesSCT 1st 3 Clusters 2022Vulli Leela Venkata PhanindraNo ratings yet

- CC511 Week 5 - 6 - NN - BPDocument62 pagesCC511 Week 5 - 6 - NN - BPmohamed sherifNo ratings yet

- Prediction Point of Fault Location On Its Campus PDocument11 pagesPrediction Point of Fault Location On Its Campus PRena KazukiNo ratings yet

- SAS Miner Get Started 53Document184 pagesSAS Miner Get Started 53Truely MaleNo ratings yet

- Soil Dynamics and Earthquake Engineering: SciencedirectDocument13 pagesSoil Dynamics and Earthquake Engineering: SciencedirectAmine OsmaniNo ratings yet

- Loan Risk Prediction Using User Transaction InformationDocument3 pagesLoan Risk Prediction Using User Transaction InformationAbhi ShikthNo ratings yet

- Chapter 4 ClassificationDocument78 pagesChapter 4 ClassificationMohamedsultan AwolNo ratings yet

- QB SoftDocument10 pagesQB Softjoydeep12No ratings yet

- 2 - Neural NetworkDocument59 pages2 - Neural NetworkJitesh Behera100% (1)

- Detection and Assessment of Partial Shading in Photovoltaic ArraysDocument10 pagesDetection and Assessment of Partial Shading in Photovoltaic ArraysÖğr. Gör. Yasin BEKTAŞNo ratings yet

- Cours - Machine LearningDocument20 pagesCours - Machine LearningINTTICNo ratings yet

- Contoh PngmbngnAplikasiTextRecognitionDgnNeuralNetwork - Anif - SafitriDocument9 pagesContoh PngmbngnAplikasiTextRecognitionDgnNeuralNetwork - Anif - SafitriUsNo ratings yet

- Introduction To ANNDocument14 pagesIntroduction To ANNShahzad Karim KhawerNo ratings yet

- Artificial Neural Networks: ReferencesDocument57 pagesArtificial Neural Networks: Referencesprathap394No ratings yet

- An Intelligent Modeling of Coagulant Dosing System For Water Treatment Plants Based On Artificial Neural NetworkDocument8 pagesAn Intelligent Modeling of Coagulant Dosing System For Water Treatment Plants Based On Artificial Neural NetworkamirNo ratings yet

- Supervised Machine Learning: A Review of Classification TechniquesDocument20 pagesSupervised Machine Learning: A Review of Classification TechniquesJoélisson LauridoNo ratings yet

- Forecasting Hotel Room Prices in Selected GC - 2020 - Journal of Hospitality and PDFDocument11 pagesForecasting Hotel Room Prices in Selected GC - 2020 - Journal of Hospitality and PDFCEVOL PHYSICNo ratings yet

- UNIT-2 Foundations of Deep LearningDocument64 pagesUNIT-2 Foundations of Deep LearningbhavanaNo ratings yet

- Artificial Neural Network Applications in Power ElectronicsDocument8 pagesArtificial Neural Network Applications in Power ElectronicsSubhajit MondalNo ratings yet

- What Is Perceptron - SimplilearnDocument46 pagesWhat Is Perceptron - SimplilearnWondwesen FelekeNo ratings yet

- Spam Email Using Machine LearningDocument13 pagesSpam Email Using Machine LearningushavalsaNo ratings yet

- Deep Learning Algorithms Report PDFDocument11 pagesDeep Learning Algorithms Report PDFrohillaanshul12No ratings yet

- Prediction of Ship Fuel Consumption by Using An Artificial Neural NetworkDocument12 pagesPrediction of Ship Fuel Consumption by Using An Artificial Neural Networkjwpaprk1No ratings yet

- Deep Learning For Tube Amplifier EmulationDocument7 pagesDeep Learning For Tube Amplifier EmulationVincent RainNo ratings yet

- AISC - Term Test 2 - 2021 22Document11 pagesAISC - Term Test 2 - 2021 22Om GawdeNo ratings yet

- IPS Academy, Institute of Engineering & ScienceDocument19 pagesIPS Academy, Institute of Engineering & Sciencevg0No ratings yet

- Slideshare. Present YourselfDocument56 pagesSlideshare. Present YourselfGurpreet SinghNo ratings yet

- Neural SyllabusDocument1 pageNeural SyllabuskamalshrishNo ratings yet

- NeuralNetworks One PDFDocument58 pagesNeuralNetworks One PDFDavid WeeNo ratings yet