R&D

Measuring What Matters

Few practices in education are subject to as education landscape. A well-designed teacher

much ridicule as how we evaluate the perform- evaluation system can signal to the public, and

Thinkstock/Comstock

ance of classroom teachers. The evaluation of to young adults considering teaching as an oc-

teachers is criticized as perfunctory and hap- cupation, that teacher performance is taken se-

hazard, relying on limited information and riously. In this way, teacher evaluation might

subject to the whims of the evaluators. The im- have longer-term effects on who enters teach-

age of a principal dropping into a classroom, ing, and on the distribution of good teaching

watching from the back of the class for 10 min- in U.S. schools.

AARON M. PALLAS utes, and then filling out a form rating the Tensions often exist among the diverse pur-

teacher’s performance as satisfactory may seem poses of teacher evaluation, especially when

like a caricature, but there’s enough truth in principals have primary responsibility for eval-

the depiction to cause anyone concerned with uating teachers. Some systems ask principals to

teaching and learning to squirm in discomfort. police teachers’ performance through evalua-

When some school districts hand out top rat- tion so that poor performers get drummed out.

ings to nearly all of their teachers, even when Others ask principals to support teachers and

Although the current their evaluations of student achievement aren’t guide them toward more effective practices.

system of teacher nearly so positive, something is seriously amiss. Principals report discomfort with the “cop vs.

evaluation is an easy The recognition that teacher evaluation is coach” roles thrust on them by teacher evalu-

target, designing a broken has given rise to the view — not nec- ation systems, and a recent study of a teacher

essarily true — that almost any alternative evaluation system in Chicago shows that they

better system is

would be better than the status quo. But sev- may manage this discomfort by inflating their

more complicated eral key issues must be considered when de- evaluations of teacher performance to main-

than it appears. signing an effective teacher evaluation system. tain the trust and support of teachers (Sartain,

Design evaluation systems to promote clear Stoelinga, and Brown 2009). Thus, the certi-

purposes. There are many reasons to evaluate fication and selection functions of teacher eval-

teacher performance, and the features of an uation may be weakened to support the moti-

evaluation system may support some of these vational and directional functions.

better than others. Evaluations can be used to In addition, the number of categories used

certify teachers as competent (for example, the to describe teacher performance, and the la-

award of tenure) or to select them for oppor- bels associated with them, may differ depend-

tunities or rewards (for example, professional ing on the evaluation’s purpose. Certifying

development to address weaknesses or a bonus teachers as competent, for example, might re-

R&D appears in each issue of based on excellent performance). Evaluations quire evaluators simply to differentiate those

Kappan with the assistance of can also direct the attention of teachers and ad- who meet some threshold for competence

the Deans’ Alliance, which is ministrators to what the schools deem impor- from those who do not. In other cases, evalu-

composed of the deans of the tant (for example, raising students’ test scores or ators might need to distinguish teachers who

education schools/colleges at maintaining order in the classroom). And eval- are highly skilled from those who are compe-

the following universities: uations, especially when coupled with low- and tent.

Harvard University, Michigan high-stakes rewards and punishments, might For some purposes, evaluators might need

State University, Northwestern motivate teachers to perform at high levels. to compare or rank teachers’ performances

University, Stanford University,

Beyond the consequences of evaluation for against one another; for other purposes, they

Teachers College Columbia

individual teachers, there are arguments that might need to compare a teacher’s perform-

University, University of

California Berkeley, University of

teacher evaluation can transform the broader ance against an absolute standard. In assessing

California Los Angeles, a teacher’s contribution to student learning, for

University of Michigan, AARON M. PALLAS is a professor of sociology and ed- example, an evaluator might hold samples of

University of Pennsylvania, and ucation at Teachers College, Columbia University, New student work to an absolute standard, then

University of Wisconsin. York. judge whether each teacher has met the stan-

68 Kappan December 2010/January 2011 kappanmagazine.org

dard. The Performance Evaluation Program they are purely a technical issue of data avail-

in Alexandria, Va., used this strategy and had ability; if there were annual tests in grades

teachers set annual goals for improving student other than three through eight that could be

achievement (for example, “90% of my stu- used to generate individual value-added scores

dents will be able to solve inequalities using for teachers, presumably these data would as-

multiplication and division”) and used teacher- sume the same importance (that is, 50% of the

constructed tests and other artifacts to meas- overall evaluation). But, this way of thinking

ure progress toward these goals. disguises the value judgments that are embed-

In contrast, if teachers are ranked against ded in the criteria for evaluating teacher per-

one another in their contributions to student formance and the weights assigned to these cri-

learning, as is commonly done in value-added teria.

assessment systems (Harris 2010), there may One key but problematic assumption is that

be winners and losers. Washington, D.C.’s standardized test scores are satisfactorily com-

highly touted IMPACT system for teacher plete measures of student learning. To be sure,

evaluation, for example, classifies 50% of the students’ scores on NCLB-style standardized

teachers in grades four through eight as inef- tests are reliable, are designed to align with Since novice

fective or minimally effective and 50% as ef- state curricular standards, and are moderately teachers’

fective or highly effective, regardless of the ab- good predictors of future educational success. performance can

solute level of students’ learning. In contrast, But, some dimensions of student learning for

change quickly,

the program’s approach to classroom observa- which teachers are responsible may not easily

tion does not impose these kinds of constraints, be captured by these tests. To rely solely on these evaluators should

allowing all teachers to be judged effective or tests as measures of teachers’ contributions to observe their

highly effective. These two features of the IM- student learning is to implicitly devalue other performance

PACT evaluation system address different pur- dimensions of student learning. Rothstein and frequently, perhaps

poses. Jacobsen (2006) found that many stakeholders several times a year.

Recognize that teacher evaluation identi- value critical thinking and problem solving, so-

fies the kind of learning we value. The cur- cial skills and a work ethic, and citizenship and

rent wave of teacher evaluation systems em- community responsibility as goals of public ed-

phasizes two distinct features of teachers’ ucation. If we are to hold schools accountable

work: their effect on student learning and their for such diverse student learning goals, they

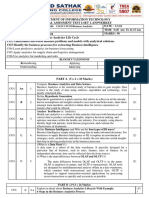

classroom practices. For example, in the 2010- should be reflected in the learning measures

11 school year, D.C. IMPACT evaluates and used to evaluate teachers.

labels general education teachers in grades four Sometimes, evaluations implicitly value an

through eight as ineffective, minimally effec- instantaneous effect on student learning. In the

tive, effective, or highly effective. This evalu- effort to isolate a teacher’s contribution to stu-

ation is based on four factors: individual value- dent learning, value-added methods typically

added student achievement data (50%), class- look at the change in students’ achievement

room observations based on a teaching and levels from one year to the next. But, a teacher’s

learning framework (35%), a principal’s assess- effect on students is not instantaneous, and

ment of a teacher’s commitment to the school measures of that effect may differ after two

community (10%), and school-level value- years or five years. Brian Jacob and his colleagues

added achievement data (5%). (Teachers’ final found that even one year after a value-added

scores can be adjusted downward if they don’t effect was measured, only 20% of the original

meet four standards for core professionalism.) effect persisted, and after two years, only one-

But teachers who teach grades or subjects for eighth of the effect persisted (Jacob, Lefgren,

which individual value-added scores aren’t and Sims forthcoming). This means that dif-

available have slightly different criteria, and ferences in teachers’ effects on student learn-

different weights: 75% of their overall evalua- ing that appear relatively large may decline

tion is based on classroom observations keyed sharply over time. If we value teachers’ contri-

to the teaching and learning framework, 10% butions to student learning in the long run, we

is based on teachers’ assessments of student must design evaluations that assign an appro-

learning, 10% is based on commitment to the priate value to those contributions. But, there

school community, and 5% is based on school- are always trade-offs. If the long-term effects

level value-added achievement data. of a teacher’s contribution to a student’s learn-

The conventional interpretation of such ing aren’t observed for five years, the measures

differences between weighting schemes is that of this long-term influence might not be avail-

kappanmagazine.org V92 N4 Kappan 69

able when a teacher is evaluated for tenure, of- ation system gives it no value.

ten around the third year of teaching. The problem of overlooking important fea-

To use a particular student assessment or se- tures of teachers’ practice may be most acute

ries of assessments to measure what teachers in secondary schools, where disciplinary

contribute to student learning is to implicitly knowledge, and knowing how to teach disci-

value what those tests measure — and implic- plinary concepts, is so central to teachers’

itly devalue what they do not. Some assess- work. As the National Comprehensive Center

ments are more comprehensive than others in for Teacher Quality points out, many of the

their coverage of learning objectives; its breadth frameworks for teaching and learning that

Thinkstock/liquidlibrary

is one reason the National Assessment of Ed- serve as the foundation for classroom observa-

ucational Progress is often called the “gold tion rubrics give short shrift to the knowledge

standard” of assessments in relation to NCLB- and practices that might make teachers suc-

style state tests. New York, by contrast, has 48 cessful in teaching trigonometry, or chemistry,

8th-grade mathematics standards, but the state’s or Shakespeare (Goe, Bell, and Little 2008).

2009 test allowed just seven of those standards Classroom observation rubrics are often ori-

to account for 50% of the total points available. ented toward the presence or absence of low-

If we value the contribution that teachers make inference, observable student behaviors. For

to students’ mastery of standards that don’t ap- example, the recent design standards for

pear on an assessment, we shouldn’t design teacher evaluation created by The New

evaluation systems that give that mastery no Teacher Project suggest rating the percentage

If we are to hold weight. The next generation of assessments of students who raise their hands when the

schools accountable will be aligned with the Common Core stan- teacher poses a question (The New Teacher

for diverse student dards that many states adopted in 2010 and may Project 2010). It’s not evident that effective

learning goals, those thus allow evaluators to assess teachers’ con- practice in the teaching of complex subject

tribution to the learning of most standards. matter can be measured in ways that don’t rely

goals should be

But, it will be several years before they appear. on complex inferences. For this reason, some

reflected in the Recognize that teacher evaluation expresses districts hire experienced secondary school

learning measures what we value as good teaching practice. We subject-matter teachers, who presumably have

used to evaluate have made great strides toward establishing deep content knowledge, to observe secondary

teachers. what counts as good teaching, but there is still classroom teachers.

much we don’t know about how to measure it. The good news is that, at least at the ele-

And though we hope that good teaching will mentary level, evidence exists that principals

result in substantial learning, an uncomfort- and other observers can be trained to use class-

able circularity is built into identifying effec- room observation protocols reliably, such that

tive teaching practices based on their associa- two observers watching the same teaching les-

tion with value-added measures of student son can generate similar ratings. A key element

learning on tests that assess only a small frac- of this training is having observers watch and

tion of the learning that we value. Are some rate videotaped lessons for which there is con-

practices universally good or bad? How much sensus among experts about whether the teach-

does good practice depend on the context in ers’ practices are desirable or undesirable, and

which it is observed? The assessment of teach- providing feedback to the observers that allows

ers’ classroom practices will not wait until we them to align their ratings to the expert judg-

have comprehensive and validated measures of ments (Sartain, Stoelinga, and Brown 2009).

good practice. Instead, the state of the art in Synchronize data collection with reason-

assessing teachers’ practices is to rely on class- able beliefs about how quickly teachers’ per-

room observation protocols. formance changes. We’re accustomed to using

Even classroom observation protocols, how- a single school year as the timeframe for eval-

ever, are an expression of which dimensions of uating teacher performance. However, re-

teachers’ work are to be valued. Teachers’ work searchers, administrators, and experienced

includes planning and classroom assessment teachers recognize that the performance of

skills (among many others), and to rely solely novice teachers can change rapidly over the

on classroom observation protocols to repre- first few years, but that after 10 or 15 years of

sent teachers’ practices is to implicitly devalue teaching, additional years of experience don’t

other dimensions of teachers’ professional seem to matter as much for outcomes such as

practice. If a feature of teachers’ practices gets students’ performance on standardized tests.

no weight in the evaluation system, the evalu- Because novice teachers’ performance can

70 Kappan December 2010/January 2011 kappanmagazine.org

change quickly, evaluators should observe their tion of teacher evaluations is more accurate or

performance frequently, perhaps several times precise than is justified by what goes into them,

a year. Conversely, since the performance of even when they’re transformed into numbers.

experienced teachers is more stable, evaluators Acknowledging the limits to what we know

should observe them less frequently. about teachers’ performance based on the cur-

Value-added assessments of teacher per- rent technologies for measuring their contri-

formance have shown us that a teacher’s rank- butions to student learning and for assessing

ing compared to other teachers is not stable teachers’ professional practices does not re-

from one year to the next, even among expe- quire us to return to the bad old days of teacher

rienced teachers. Using a single year of student evaluation. K

test scores to evaluate teachers will, therefore,

We have made great

make it appear as though a teacher’s perform- REFERENCES

ance varies a great deal from one year to the strides toward

Goe, Laura, Courtney Bell, and Olivia Little. Approaches establishing what

next. One reason for this is that many teachers

to Evaluating Teacher Effectiveness: A Research

teach a relatively small number of students counts as good

Synthesis. Washington, D.C.: National Comprehensive

each year. Averaging value-added estimates of teaching, but there is

Center for Teacher Quality, 2008.

teacher performance over several years in- still much we don’t

creases the sample size and makes estimates Harris, Douglas N. “Clear Away the Smoke and Mirrors

know about how to

more stable, but doing so may sacrifice infor- of Value-Added.” Phi Delta Kappan 91, no. 8 (May

mation about a teacher’s current or recent rel- 2010): 66-69.

measure it.

ative standing.

Jacob, Brian, Lars Lefgren, and David Sims. “The

The problems of designing good teacher

Persistence of Teacher-Induced Learning Gains.”

evaluation systems can be conquered. We’ve

Journal of Human Resources, forthcoming.

made great strides in developing ways to assess

teachers’ contributions to student learning and The New Teacher Project. Teacher Evaluation 2.0: Six

to observe the extent to which teachers engage Design Principles. Brooklyn, N.Y.: The New Teacher

in classroom practices that are widely recog- Project, 2010.

nized as desirable. The challenges I’ve described

Rothstein, Richard, and Rebecca Jacobsen. “The

aren’t roadblocks to continued improvement.

Goals of Education.” Phi Delta Kappan 88, no. 4

But, it may be costly, in terms of time and other

(December 2006): 264-272.

resources, to make continuous progress both

in developing measures of what is to be assessed Sartain, Lauren, Sara R. Stoelinga, and Eric Brown.

and in how to produce reliable and valid indi- Evaluation of the Excellence in Teaching Pilot: Year One

cators. Until such improvements are in hand, Report. Chicago, Ill.: Consortium on Chicago School

we shouldn’t assume that the current genera- Research, 2009.

“What do you mean, it’s the wrong kind of right?”

kappanmagazine.org V92 N4 Kappan 71
