Subtitle


So this unit is all about pretesting techniques and making sure that your questionnaire is in the best possible format. We'll start out with a subset of techniques, but let me first tell you why we do pretesting in the first place.

Overall, we pretest to identify and reduce any possible errors in the survey. Now, "any" is a bold claim. Remember, it took them 400 years to measure longitude. So don't expect that you can build a good measurement instrument within two weeks, or within the six weeks of this course. But the techniques we present here are a good step toward getting better at identifying errors.

They help you identify specification error: has your decomposition worked, and do you really tap into all the concepts we discussed at the beginning? They help you identify operationalization error: is the question really measuring the construct? Do you see variability across respondents, interviewers, or time points, and are there measurement issues in that regard? And they help with measurement error in general. Remember the sensitive questions: do you have question characteristics that lead respondents to answer a certain way? Are there typical interviewer characteristics that interfere with certain questions, and issues of that nature? Hopefully, you can detect all of that in pretests.

It is rather uncommon to be able to administer a survey perfectly, free of measurement error, without any pretesting. So you should use at least one of these techniques, and ideally several. We'll show a comparison of the techniques at the end of the segment, and you will see that they're not suitable for all settings. You certainly get the best picture if you use several techniques at once.

The first three, expert reviews, focus groups, and cognitive interviews, are all more qualitative techniques. Then we have behavior coding and other statistical methods. Behavior coding can be done on the qualitative interviews as well, but the other methods more or less assume that you have a larger set of cases with which you test whether the questions and answers match what you're trying to measure.

So, let's start with expert reviews. The first thing you need to ask yourself is: who's an expert? Questionnaire design experts are one set. But you should probably also enlist subject matter experts to review your questionnaire, to make sure it matches the concepts the subject matter experts gave you to begin with. Questionnaire administration experts, that is, interviewers, are another good group to enlist for a review. And then there are computer-based expert systems, like QUAID, that have been developed; the link is available here, and you can test it out.

What do these experts do? They identify potential response problems and make recommendations for improvement. How do they do that? They can work individually or in a group setting. Sometimes you collect open-ended comments; sometimes you solicit codes for particular problems, in the form of an appraisal system. In the end, you hopefully have a report with comments that help you improve the questionnaire: noted problems and revisions, plus a summary report that shows you the distribution of the problems. You definitely want to have a few experts review the questionnaire rather than relying on the answers from just one.
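To make the idea of a summary report concrete, here is a minimal Python sketch of how coded expert feedback could be tallied, assuming each expert returns a set of problem codes per question. The expert names, question IDs, and codes (`vague_term`, `double_barreled`, `sensitive`, `recall`) are hypothetical and are not taken from QUAID or any published appraisal system. The sketch also computes raw pairwise percent agreement on whether a question has any problem at all, one rough way to see the disagreement between experts that is a known weakness of expert reviews. These are descriptive counts only, not statistics you can generalize from.

```python
from collections import Counter
from itertools import combinations

# Hypothetical review data: expert -> question ID -> set of problem codes.
# Expert names, question IDs, and codes are illustrative only.
reviews = {
    "expert_a": {"Q1": {"vague_term"}, "Q2": set(), "Q3": {"double_barreled", "sensitive"}},
    "expert_b": {"Q1": {"vague_term"}, "Q2": {"recall"}, "Q3": {"sensitive"}},
    "expert_c": {"Q1": set(), "Q2": set(), "Q3": {"double_barreled"}},
}


def problem_distribution(reviews):
    """Count how many experts flagged each (question, code) pair."""
    counts = Counter()
    for flags in reviews.values():
        for question, codes in flags.items():
            for code in codes:
                counts[(question, code)] += 1
    return counts


def pairwise_agreement(reviews):
    """For each pair of experts, return the share of questions on which they
    agree about whether the question has any problem at all.
    This is raw percent agreement; it does not correct for chance."""
    questions = sorted({q for flags in reviews.values() for q in flags})
    agreement = {}
    for a, b in combinations(sorted(reviews), 2):
        same = sum(
            bool(reviews[a].get(q)) == bool(reviews[b].get(q)) for q in questions
        )
        agreement[(a, b)] = same / len(questions)
    return agreement


if __name__ == "__main__":
    n_experts = len(reviews)
    # Distribution of problems: one line per (question, code) pair.
    for (question, code), n in sorted(problem_distribution(reviews).items()):
        print(f"{question} {code}: flagged by {n} of {n_experts} experts")
    # Agreement between each pair of experts.
    for (a, b), share in pairwise_agreement(reviews).items():
        print(f"{a} vs {b}: {share:.0%} agreement")
```

With this toy data, all three experts flag something on Q3, only one flags Q2, and experts b and c agree on only one of the three questions. Raw percent agreement ignores agreement that would occur by chance; Cohen's kappa (two raters) or Fleiss' kappa (several raters) are the usual chance-corrected alternatives if you want a single agreement figure.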

Expert reviews are really qualitative in nature, though, so you won't be able to compute statistics from the problems they find. Experts are good at identifying problems related to the data analysis, and they are good at spotting issues as they pop up. In a quantitative analysis comparing expert reviews with more sophisticated techniques like latent class analysis (LCA), we found considerable agreement, but we'll come back to that point once we take a closer look at LCA. What's definitely good about expert reviews is that they're relatively cheap and fast. What's not so good is that their quality varies a lot in practice. There isn't much literature on this, but one thing is known: there is large inconsistency and disagreement between experts.

So with that, let's move to the next technique: focus groups. Focus groups are small groups of five to ten people that you bring together to investigate a research topic. You try to find out what people think when they hear about the topic or a particular question, so you can mix an open discussion with an evaluation of the survey questions themselves. Try to figure out how people think about the vocabulary, the terms used, and any key concepts that appear in the questionnaire.

Keep in mind that interviewers and respondents don't always agree on these concepts. Take sources of income, for example. For a questionnaire administered in a low-SES neighborhood, the investigator, and by extension the interviewer, might think of job salary, interest income, and dividends, whereas the respondent might think of ad hoc work and illicit activities such as drugs, prostitution, and gambling. There you have it. So the key task of the focus group is to explore what respondents think about the topic, how they think about it, or both.

You have to think about recruiting, about what the moderator does and how to identify good moderators, and about whether to audiotape or videotape. At the very least you should audio record, because it's very difficult to take sufficient notes. And then you have a report written.

A little bit more on recruitment. You really want to target participants who are also part of the target population of your survey. You need to decide whether you want a homogeneous group or a more heterogeneous group. If you have the means to run several groups, it could be good to vary that a little, because you will get a different group discussion depending on which you choose. But in general, if you only have one focus group, it's probably a good idea to make it diverse, but not too diverse, so that each group has a little bit of a mixture in it.

As for the moderator, you want to give the moderator a guide that makes clear the purpose of the study. The guide can lay out the flow, with open-ended questions written out, so you can see how the respondents answer them. And keep in mind what problem should be solved and what information you're looking for. Here's an example of the points that should be covered in the moderator guide, in this order: ground rules, introduction, open questions, in-depth investigation, and some statements on how to close the focus group.

It's also good to give moderators guidelines for good questions inside the focus group. Tell them that they should ask short questions to get long answers. They should ask questions that are easy to say, address one issue at a time, use a conversational tone, ask open-ended questions, and ask positive questions before negative ones.

Focus groups are very good at providing qualitative information, as we said earlier, and at providing a wide range and variety of information. But what you learn there is hardly generalizable; it's qualitative, just like expert reviews. The advantage is that they're efficient and small. The disadvantage is that they're more costly, because you have to recruit, you have to offer incentives, and you often have to rent a facility, in particular if you want outside observers behind a one-way mirror and things of that nature. There are lots of good textbooks on focus groups; a couple of them are listed here, and more are listed in the syllabus on Coursera. Our next segment moves on to cognitive interviewing, another qualitative technique that can be used to test questionnaires.

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5794)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- SubtitleDocument2 pagesSubtitle757rustamNo ratings yet

- SubtitleDocument4 pagesSubtitle757rustamNo ratings yet

- SubtitleDocument3 pagesSubtitle757rustamNo ratings yet

- Introduction To Human Resource Concepts Lesson 3 - Recruiting, Selection, and OrientationDocument30 pagesIntroduction To Human Resource Concepts Lesson 3 - Recruiting, Selection, and Orientation757rustamNo ratings yet

- Can, Know and Care Script: State Job RoleDocument2 pagesCan, Know and Care Script: State Job Role757rustamNo ratings yet

- SubtitleDocument3 pagesSubtitle757rustamNo ratings yet

- Learner Needs Analysis Template: What'S The Point of This Template?Document13 pagesLearner Needs Analysis Template: What'S The Point of This Template?757rustamNo ratings yet

- 2 Wellbeing at Work My Role As A Manager or Team LeaderDocument2 pages2 Wellbeing at Work My Role As A Manager or Team Leader757rustamNo ratings yet

- IICF Volunteer Team Leader Website Guide: Sign Up For Year-Round Volunteer Projects As Well As The Annual Week of Giving!Document6 pagesIICF Volunteer Team Leader Website Guide: Sign Up For Year-Round Volunteer Projects As Well As The Annual Week of Giving!757rustamNo ratings yet

- Tools of An Effective Manager: Soulcast Media's Activity SheetDocument1 pageTools of An Effective Manager: Soulcast Media's Activity Sheet757rustamNo ratings yet

- Stress Talking ToolkitDocument24 pagesStress Talking Toolkit757rustamNo ratings yet

- SLAM Managers Resilience Building ToolsDocument1 pageSLAM Managers Resilience Building Tools757rustamNo ratings yet

- Recognition Toolkit For LeadersDocument2 pagesRecognition Toolkit For Leaders757rustam100% (1)

- There Is Someone at Work Who Encourages My Development.: Help Me GrowDocument2 pagesThere Is Someone at Work Who Encourages My Development.: Help Me Grow757rustamNo ratings yet

- Point-of-Care: Leadership Tips and Tools For NursesDocument6 pagesPoint-of-Care: Leadership Tips and Tools For Nurses757rustamNo ratings yet

- BPM Team Building Toolkit 2019Document84 pagesBPM Team Building Toolkit 2019757rustamNo ratings yet

- Busy Leaders Took Kit 10 24 2019Document24 pagesBusy Leaders Took Kit 10 24 2019757rustamNo ratings yet

- Task Force & Strike Team Leader Guidebook: Updated For 2020 COVID-19 EnvironmentDocument34 pagesTask Force & Strike Team Leader Guidebook: Updated For 2020 COVID-19 Environment757rustamNo ratings yet

- Action Team Leader ToolkitDocument20 pagesAction Team Leader Toolkit757rustamNo ratings yet

- Team Leader Guide: Thank You For Accepting The Role of Team Leader!Document7 pagesTeam Leader Guide: Thank You For Accepting The Role of Team Leader!757rustamNo ratings yet

- 2015 - Leaders GuideDocument44 pages2015 - Leaders Guide757rustamNo ratings yet

- Leading and Managing People Trainers' Toolkit: Building A TeamDocument36 pagesLeading and Managing People Trainers' Toolkit: Building A Team757rustam100% (1)

- Team Leader Guide: Prepared byDocument9 pagesTeam Leader Guide: Prepared by757rustamNo ratings yet

- (eBook-EN) Make UX MeasurableDocument34 pages(eBook-EN) Make UX Measurable757rustamNo ratings yet

- Cajg Applicant Guide PDFDocument17 pagesCajg Applicant Guide PDF757rustamNo ratings yet

- GG202x: Mindfulness and Resilience To Stress at Work: Team Leader GuideDocument10 pagesGG202x: Mindfulness and Resilience To Stress at Work: Team Leader Guide757rustamNo ratings yet

- Career Conversations: Employee-Driven. Development-Oriented. SimpleDocument12 pagesCareer Conversations: Employee-Driven. Development-Oriented. Simple757rustamNo ratings yet

- Manager'S Guide To Building A Successful Learning Path Using UlearnitDocument1 pageManager'S Guide To Building A Successful Learning Path Using Ulearnit757rustamNo ratings yet

- Template InterviewGuideDocument6 pagesTemplate InterviewGuideJames Patrick NarcissoNo ratings yet

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (895)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (400)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (537)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (838)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (588)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (344)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1090)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (121)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (821)

- Research ManualDocument25 pagesResearch ManualEdna Jaen MaghinayNo ratings yet

- Data Protection and Privacy Audit ChecklistDocument8 pagesData Protection and Privacy Audit Checklistviolet osadiayeNo ratings yet

- Review of Ecodesign Methods and Tools - Barriers and StrategiesDocument33 pagesReview of Ecodesign Methods and Tools - Barriers and Strategiesmatic91No ratings yet

- Riphah International University I-14 Campus, Islamabad: Project/End Term Exam Fall 2020 Faculty of ComputingDocument2 pagesRiphah International University I-14 Campus, Islamabad: Project/End Term Exam Fall 2020 Faculty of Computingusama 7788No ratings yet

- Literature Review Related To CPRDocument4 pagesLiterature Review Related To CPRafmzqzvprmwbum100% (1)

- Digital Banking Challenges Emerging Technology TreDocument20 pagesDigital Banking Challenges Emerging Technology Treahmad.sarwary13No ratings yet

- ACL-SRW 2018 Review For Submission #18Document4 pagesACL-SRW 2018 Review For Submission #18kpespinosaNo ratings yet

- Evaluation of Business PerformanceDocument6 pagesEvaluation of Business Performancejoel50% (2)

- Oracle Database Auditing: Using Accounting Setup ManagerDocument18 pagesOracle Database Auditing: Using Accounting Setup ManagerRoel Antonio PascualNo ratings yet

- Project Execution Plan 27 PDFDocument27 pagesProject Execution Plan 27 PDFbakabaka100% (1)

- 3rd Summative Test in Reading and Writing: General InstructionDocument4 pages3rd Summative Test in Reading and Writing: General InstructionTyrsonNo ratings yet

- Case Studies Mba 1 AssignmentDocument2 pagesCase Studies Mba 1 Assignmentayushi aggarwalNo ratings yet

- DBA DSP Template - Fall 2022Document54 pagesDBA DSP Template - Fall 2022Jeffrey O'LearyNo ratings yet

- Cederborg Etal 2015 PDFDocument7 pagesCederborg Etal 2015 PDFbanned minerNo ratings yet

- E678-07 (2013) Standard Practice For Evaluation of Scientific or Technical DataDocument2 pagesE678-07 (2013) Standard Practice For Evaluation of Scientific or Technical DataMohamed0% (1)

- GBIC - COLLECTION OF STRUCTUREs OF COURSEWORK 2 LITERATURE REVIEWDocument15 pagesGBIC - COLLECTION OF STRUCTUREs OF COURSEWORK 2 LITERATURE REVIEWisraeladejoh303No ratings yet

- v6 BSI Self Assesment Questionnaire 27001Document4 pagesv6 BSI Self Assesment Questionnaire 27001DonNo ratings yet

- Literature Review Website DesignDocument9 pagesLiterature Review Website Designluwahudujos3100% (1)

- Research Proposal Template MGT Economics IR PolS Education4Document6 pagesResearch Proposal Template MGT Economics IR PolS Education4Parhi Likhi JahilNo ratings yet

- Report Writing: by Aimen, Ayesha, Rida & HalimaDocument27 pagesReport Writing: by Aimen, Ayesha, Rida & HalimaBushra SyedNo ratings yet

- As9120 Internal Audit ChecklistDocument3 pagesAs9120 Internal Audit Checklistamelia0% (1)

- Radial Spike and Slab Bayesian Neural NetworkDocument2 pagesRadial Spike and Slab Bayesian Neural NetworkFarshid Bagheri SaraviNo ratings yet

- CAE Writing Task 4: of February. of February.: TH THDocument4 pagesCAE Writing Task 4: of February. of February.: TH THМилана ИгоревнаNo ratings yet

- A Concept PaperDocument2 pagesA Concept PaperWhiteshop PhNo ratings yet

- Team Planning Workshop Agenda ExampleDocument4 pagesTeam Planning Workshop Agenda Examplexia leaderisbNo ratings yet

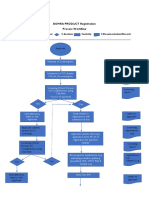

- BoMRA Product PDFDocument3 pagesBoMRA Product PDFEW WafulaNo ratings yet

- 1951-1651743519889-Unit 5 Accounting Principles - Assignment 1Document39 pages1951-1651743519889-Unit 5 Accounting Principles - Assignment 1devindiNo ratings yet

- AS9100c-IA ChecklistDocument61 pagesAS9100c-IA ChecklistmichaligielNo ratings yet

- UNIT 3 Lesson 4 MemorandumDocument5 pagesUNIT 3 Lesson 4 MemorandumAngelica TalandronNo ratings yet

- Drugs and Veterinary Products in Botswana PDFDocument3 pagesDrugs and Veterinary Products in Botswana PDFEW WafulaNo ratings yet