IAES International Journal of Artificial Intelligence (IJ-AI)

Vol. 12, No. 1, March 2023, pp. 488~495

ISSN: 2252-8938, DOI: 10.11591/ijai.v12.i1.pp488-495

Automated COVID-19 misinformation checking system using encoder representation with deep learning models

Marina Azer1,3, Mohamed Taha1, Hala H. Zayed1,2, Mahmoud Gadallah3

1 Faculty of Computers and Artificial Intelligence, Benha University, Benha, Egypt
2 School of Information Technology and Computer Science, Nile University, Cairo, Egypt
3 Faculty of Computer Science, Modern Academy for Computer Science and Management Technology, Cairo, Egypt

Article Info

Article history:
Received Jul 12, 2021
Revised Aug 6, 2022
Accepted Sep 4, 2022

Keywords:
Bidirectional encoder representations from transformers
Deep learning
Fake news
Machine learning
Pre-trained models
Social media

ABSTRACT

Social media impacts society, whether these impacts are positive, negative, or both. It has become a key component of our lives and a vital news resource. The crisis of COVID-19 has impacted the lives of all people. The spread of misinformation causes confusion among individuals, so automated methods are vital to detect wrong arguments and prevent the spread of misinformation. COVID-19 news can be classified into two categories: false or real. This paper provides an automated misinformation checking system for COVID-19 news. Five machine learning algorithms and deep learning models are evaluated. The proposed system uses the bidirectional encoder representations from transformers (BERT) with deep learning models. Detecting fake news using BERT is a fine-tuning task. BERT achieved an accuracy of 98.83% as a pre-trained model and a classifier on the COVID-19 dataset. Better results are obtained using BERT with deep learning models, which achieved an accuracy of 99.1%. The results improve on existing work in the area of fake news detection. Another contribution of the proposed system is that it allows users to detect the credibility of claims. It finds the real news from experts that is most related to the fake claims and answers any question about COVID-19 using the universal-sentence-encoder model.

This is an open access article under the CC BY-SA license.

Corresponding Author:

Marina Azer

Faculty of Computer Science, Modern Academy for Computer Science and Management Technology

El-Basatin Sharkeya, El Basatin, Cairo Governorate, Egypt

Email: marina.essam991@gmail.com

1. INTRODUCTION

The idea of fake news existed before the development of the Internet. However, the appearance of social networks such as Facebook and Twitter has increased the speed at which fake news spreads, especially since anybody can submit news. Most sites also have a share option that allows users to propagate the website content. Social networking platforms make it possible to share content quickly and efficiently, so users can distribute incorrect information in a short period. Consumer reviews are also part of everyday life: users read reviews before a purchase, or store them to find the best product by comparing product reviews [1]. Detecting fake reviews is therefore also important for commercial reasons. Facebook and other companies have pledged to do more to stop false news from spreading.

Fake news is one of many sorts of misinformation, which also include rumors and misleading web material. False news is described as "news pieces meant to mislead readers but can be misrepresented by other sources" [2], [3]. The large-scale spread of misinformation on social media has become a global problem, and its effects on public opinion and socio-political developments pose a great threat. The Internet is currently handier than traditional media for users to acquire news.

Journal homepage: http://ijai.iaescore.com

In recent years, the detection of false information has been a significant subject of research. Many efforts have been made to overcome the problems of false information detection and to identify promising approaches. False news consists of intentionally and verifiably misleading news pieces that may deceive the reader. During the COVID-19 pandemic, fraudulent purveyors of COVID-19 products have caused many people to suffer, and public health groups are making significant efforts on social media sites to educate the public and rectify misinformation. The World Health Organization (WHO) declared a "major infodemic" due to coronavirus transmission, and the worldwide COVID-19 epidemic has become an international war against misinformation [4]. Fake news spreads like an illness during critical times in a country, causing unrest, havoc, and disruption among citizens. The use of machine learning to counteract fake news on social media platforms has increased dramatically. However, because these algorithms are unaware of context, they have had only limited effectiveness in detecting spam and harmful information [5].

Two primary methodologies are available to detect credibility: machine learning and deep learning. Perez-Rosas et al. [6] used crowdsourcing, in which workers were tasked to create fraudulent versions of news reports. The rumor identification framework is a machine learning strategy that legitimizes signals of ambiguous messages so that a person can readily recognize bogus news; people are notified if posts appear to be false [7]. The Twitter crawler, a machine learning approach, collects tweets and adds them to a database in order to compare distinct tweets [8]. The Twitter credibility issue was initially discussed in an organized approach by Castillo et al. [9], [10]. The authors identified several language indicators of deception and used random forest (RF) to detect false information. Bogus news detection was also addressed in [11], [12], [13]. Briscoe et al. presented some recurrent neural network (RNN) designs such as gated recurrent unit (GRU), Tanh-RNN and long short term memory (LSTM) to get the best possible performance [14].

The problem addressed here has two parts. The first part is how to establish the credibility of news content, that is, whether it is true or fake, so that readers are not misled, and which algorithm and feature extraction method are the most accurate for this task. The second part is how the reader can check the correct news from experts about a specific rumor or find the answer to a specific question related to COVID-19. Our approach can help improve traditional fake news detection strategies, wherein content features are frequently utilized to identify fake news.

The contributions of the proposed strategies in the paper can be summarized as follows: i) An automated misinformation checking system for COVID-19 news on social media platforms that utilizes several approaches; ii) More precise identification of fake news using a pre-trained model with deep learning; iii) After detecting the credibility of claims, the system finds the most related real news and the appropriate answer to any question about COVID-19.

The remainder of this paper is organized as follows: the research method is presented in section 2. The results and discussion are introduced in section 3. Finally, the conclusion is presented in section 4.

2. RESEARCH METHOD

An automated COVID-19 misinformation checking system is presented. The proposed system uses three main approaches, as shown in Figure 1. The first approach detects fake news using five baseline traditional machine learning techniques: naive Bayes (NB), decision tree (DT), logistic regression (LR), support vector machine (SVM), and random forest (RF). The second approach detects fake news using deep neural networks: a convolutional neural network (CNN), LSTM (one to two layers), and GRU (one to two layers). The third approach uses the pre-trained transformer model BERT. Instead of training a transformer model from scratch, it is probably more efficient to use (and eventually fine-tune) a pre-trained model (BERT, XLNet, DistilBERT) from the transformers package. The open-source machine learning frameworks PyTorch and TensorFlow are used.

The BERT model is among the most used models for text classification tasks. The original English-language BERT model has two pre-trained general types. The BERT-Base model is a neural network architecture with 12 layers, a hidden size of 768, 12 self-attention heads, and 110M parameters. The BERT-Large model has 24 encoder layers with 16 bidirectional self-attention heads. Both models are trained on the BooksCorpus with 800M words [15] and on English Wikipedia with 2,500M words [16]. The BERT-Base model is used because the dataset in this work is not extensive [16].

BERT-Large has 16 attention heads, 340 million parameters, and a hidden size of 1024, and it improves on the performance of BERT-Base. A simple softmax classifier is added on top to classify the representation. We can use a pre-existing model trained on a vast dataset and tune it [17] to achieve other tasks on different datasets. The pre-trained models have two main advantages: i) Training costs are reduced for a new deep learning model; ii) The datasets used for pre-training fulfill industry-accepted norms, and hence the quality aspect of the pre-trained models has previously been examined.
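As a rough illustration of the softmax classifier placed on top of the encoder output, the following sketch turns two raw class scores into probabilities and picks the more likely label. The label order, the function names, and the example scores are assumptions made for illustration; the paper does not publish its implementation.

```python
import math

# Hypothetical label order; the paper only states that news is "false or real".
LABELS = ["fake", "real"]


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    shift = max(logits)  # subtracting the maximum avoids overflow in exp()
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits):
    """Return the most probable label and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return LABELS[best], probs[best]


# Example scores, standing in for the output of a final linear layer.
label, confidence = classify([0.3, 2.1])
print(label, round(confidence, 3))
```

In a fine-tuned model the two scores would come from a linear layer applied to the sentence representation; here they are hard-coded only to keep the sketch self-contained.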

Figure 1. The proposed system for fake news detection on COVID-19 data

The second phase of the model is shown in Figure 2. The user can enter a claim whose credibility is to be checked, that is, whether it is real or fake. The proposed system detects the credibility of the sentence using BERT as a pre-trained model with the LSTM deep learning model, which achieved the best accuracy of all models. If the claim is real, the system reports that it is an actual claim. Otherwise, it reports a fake claim and shows the five real news items from experts that are most relevant to this claim. Cosine similarity is used to measure relevance: each text is represented as a vector, and the similarity between two texts is the cosine of the angle between their vectors. Cosine similarity is thus a statistic used to decide how similar the phrases are, regardless of their size. The other methodology uses the universal sentence encoder, which encodes text into high-dimensional vectors that can be used for text classification, semantic similarity, and clustering.
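The retrieval of the most similar real news by cosine similarity can be sketched as follows. This is a minimal example under stated assumptions: the vectors are tiny hand-written stand-ins for sentence embeddings (the system described here obtains them from the universal sentence encoder), and the names `cosine_similarity` and `top_k_similar` are hypothetical.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # an all-zero vector has no direction
    return dot / (norm_u * norm_v)


def top_k_similar(claim_vec, news_vecs, k=5):
    """Return (index, score) pairs of the k news vectors closest to the claim."""
    scored = [(cosine_similarity(claim_vec, vec), idx)
              for idx, vec in enumerate(news_vecs)]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [(idx, score) for score, idx in scored[:k]]


# Toy 3-dimensional "embeddings"; real sentence embeddings are much larger.
claim = [1.0, 0.0, 1.0]
expert_news = [[2.0, 0.0, 2.0], [0.0, 1.0, 0.0], [1.0, 1.0, 0.0]]
print(top_k_similar(claim, expert_news, k=2))
```

The first news vector points in the same direction as the claim but is twice as long, and it still gets a score of about 1, which illustrates why the measure is independent of vector size.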

The pre-trained universal sentence encoder is publicly available for TensorFlow. The model is trained and optimized for greater-than-word length text, such as sentences, phrases, or short paragraphs. It is trained with a deep averaging network (DAN) encoder on varied data.

2.1. Dataset

Insurance claims, which come from numerous sources, are typically the raw data for healthcare fraud detection. In addition to insurance claim data, other types of data utilised in the identification of healthcare fraud include information about doctors, prescriptions written by doctors, the medication or substances prescribed, and bills and transactions. Data on the healthcare system is distinct in each nation. Therefore, fraud detection work is assessed while taking into account an understanding of the official health data. The U.S. Health Care Financing Administration (HCFA) is a significant government agency in charge of health care. In the US, there are two healthcare programmes: Medicare and Medicaid. Most often, researchers use Medicare or Medicaid data, which includes information on medications and pharmaceuticals, bills and transactions, and medical providers, to find fraud and misuse in healthcare systems [18]. The dataset used in this work is a healthcare misinformation dataset that covers fake news on blogs and social media, along with the impact that individuals have on such fake news. The data contains 4,251 news mentions, 296,000 user engagements, 926 tweets referencing COVID-19, and ground truth labels.

[Figure 2 flowchart: the user enters a sentence about COVID-19, which is pre-processed. A question is answered using the universal sentence encoder model. A claim is checked for credibility using BERT+LSTM: if it is real, the system reports a real claim; if not, the system reports that it is fake and shows the five most similar real news items from experts related to this claim, using the universal sentence encoder model.]

Figure 2. The second phase of the proposed model for fake news detection on COVID-19 data and answer related questions

2.2. Pre-processing

Pre-processing is a critical step that significantly affects detection effectiveness. For example, Twitter data is a popular, unstructured collection of information from individuals who post their feelings, opinions, attitudes, and product reviews. The basic cleaning steps in this work are:

− Lowercasing: one of the fundamental cleaning procedures is to convert every word to lowercase.

− Removal of symbols and irrelevant characters: punctuation marks such as commas, quotation marks, and question marks, which give little value to the model, are deleted.

− Deletion of URLs: removal of any links in the text.

− Tokenization: dividing the text into units called tokens (words).

− Deletion of stop-words: words that do not contribute much meaning to the sentence are deleted, and any misspellings are then fixed automatically.

− Lemmatization: lemmatization is used instead of stemming because stemming just cuts off the end or the beginning of the word, based on a list of common prefixes and suffixes that can be found in an inflected word.

This indiscriminate cutting can be successful on some occasions, but not always, whereas lemmatization considers the morphological analysis of the words. It requires detailed dictionaries that the algorithm can look through to link the form back to its lemma. Therefore, lemmatization achieves better results than stemming when using standard machine learning algorithms, as shown in Table 1 and Table 2.
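The cleaning steps listed above can be sketched with the Python standard library alone. Several simplifications are assumptions of this sketch rather than details from the paper: the stop-word set and the lemma dictionary are tiny illustrative stand-ins for full linguistic resources, URLs are removed before punctuation so that they can still be recognized, and the automatic spelling correction step is omitted.

```python
import re
import string

# Illustrative resources only; a real pipeline would use full stop-word
# lists and a dictionary-backed lemmatizer.
STOP_WORDS = {"a", "an", "the", "is", "are", "of", "to", "and", "in", "that"}
LEMMAS = {"vaccines": "vaccine", "causes": "cause",
          "caused": "cause", "masks": "mask"}

URL_PATTERN = re.compile(r"https?://\S+|www\.\S+")
PUNCT_TABLE = str.maketrans("", "", string.punctuation)


def preprocess(text):
    """Lowercase, strip URLs and punctuation, tokenize, drop stop-words, lemmatize."""
    text = text.lower()
    text = URL_PATTERN.sub(" ", text)    # delete links first
    text = text.translate(PUNCT_TABLE)   # delete ASCII punctuation
    tokens = text.split()                # whitespace tokenization
    tokens = [t for t in tokens if t not in STOP_WORDS]
    return [LEMMAS.get(t, t) for t in tokens]  # dictionary lookup per token


print(preprocess("The vaccines caused NO harm, see https://example.org/report!"))
```

The dictionary lookup in the last step mirrors the point made above: lemmatization links each inflected form back to its lemma through a word list instead of cutting characters off the word.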

2.3. Feature extraction

Term frequency-inverse document frequency (TF-IDF) with n-grams is used with the machine learning algorithms to give numerical weights to the textual content used for mining. TF-IDF identifies how important a term t is, based on the frequency and relative relevance of t in a document d within the entire training dataset D. As indicated in (1), the TF-IDF weight is computed as the product of two values: the normalized term frequency (TF) and the inverse document frequency (IDF) [19].

$$\mathrm{TF\text{-}IDF}(t, d) = \mathrm{TF}(t, d) \times \mathrm{IDF}(t, D) \qquad (1)$$
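The weighting in (1) can be sketched as follows for unigram and bigram features. The text does not spell out the exact TF and IDF definitions, so this example assumes TF is the n-gram count divided by the number of n-grams in the document and IDF is the natural logarithm of N divided by the document frequency; library implementations often use smoothed variants. The function names are hypothetical.

```python
import math
from collections import Counter


def ngrams(tokens, n_max=2):
    """Return all n-grams of length 1..n_max as space-joined strings."""
    grams = []
    for n in range(1, n_max + 1):
        grams.extend(" ".join(tokens[i:i + n])
                     for i in range(len(tokens) - n + 1))
    return grams


def tf_idf(corpus, n_max=2):
    """corpus: list of token lists. Returns one {term: weight} dict per document."""
    docs = [Counter(ngrams(tokens, n_max)) for tokens in corpus]
    n_docs = len(docs)
    # Document frequency: in how many documents each term occurs.
    doc_freq = Counter(term for counts in docs for term in counts)
    weights = []
    for counts in docs:
        total = sum(counts.values())
        weights.append({
            term: (count / total) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weights


corpus = [["covid", "vaccine", "safe"], ["covid", "vaccine", "hoax"]]
for doc_weights in tf_idf(corpus, n_max=2):
    print(doc_weights)
```

Terms that occur in every document, such as "covid" and the bigram "covid vaccine" in this toy corpus, receive a weight of zero, while terms that distinguish one document from the other receive a positive weight.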

The word embedding feature extraction method is employed with the deep learning techniques; it turns text data (words) into vectors. Every word is represented by an n-dimensional dense vector, where similar words have similar vectors. GloVe and Word2Vec are among the best-performing word embedding approaches for turning words into vectors. GloVe is an unsupervised learning algorithm for word embeddings.

The main idea of GloVe is to learn how close two words are. The pre-trained vectors are available in several sizes: 25d, 50d, 100d, and 200d. We have utilized "glove.twitter.27B.zip". We have used the Keras-tuner library [20] to select the optimum set of hyper-parameters for the hidden layers (LSTM and GRU) and the dropout layers.

For the fake news classification task, the uncased BERT-Base model is employed. This model consists of 12 attention layers with 110M parameters in total. BERT uses its built-in WordPiece tokenizer. Furthermore, before fine-tuning, the tokenizer from the Google implementation converts the whole text into lowercase.

2.4. Data splitting

The dataset is split into 90% training and 10% testing. The training set is fed into the machine learning and deep learning models so that they can learn from the data. The test set is used to check the results and the algorithm's performance.
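A 90/10 split of this kind can be sketched as below. The text does not say whether the data was shuffled, stratified by label, or split with a fixed seed, so the shuffling, the seed value, and the function name are assumptions of this sketch.

```python
import random


def train_test_split(samples, test_ratio=0.10, seed=42):
    """Shuffle indices with a fixed seed and hold out test_ratio of the samples."""
    indices = list(range(len(samples)))
    random.Random(seed).shuffle(indices)  # local generator keeps the split reproducible
    n_test = round(len(samples) * test_ratio)
    test_idx = set(indices[:n_test])
    train = [s for i, s in enumerate(samples) if i not in test_idx]
    test = [s for i, s in enumerate(samples) if i in test_idx]
    return train, test


samples = list(range(100))  # stand-in for 100 labeled news items
train, test = train_test_split(samples)
print(len(train), len(test))
```

Fixing the seed makes the held-out 10% identical across runs, so that different models are compared on the same test items.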

2.5. Learning models

Models from different approaches have been used here to find the highest-performing model for detecting fake news: regular machine learning models, deep learning models, and pre-trained models, which achieve good results in NLP tasks. The models are evaluated with different measurements: accuracy, precision, recall, and F1-score. Details are discussed in the following sections.

2.5.1. Machine learning models

Five different machine learning models are used: naive Bayes (NB), logistic regression (LR), decision tree (DT), random forest (RF), and support vector machine (SVM), as shown in Tables 1 and 2. The random forest (RF) algorithm consistently achieves good performance compared with the other methods. The algorithms were tested with different resampling methods.

2.5.2. Deep learning models

Different deep learning models are used. CNN is chosen for natural language processing (NLP) tasks because its filters slide over a few words at a time across the sentence matrix, which makes CNNs work well for classification tasks. LSTM achieves good results because it can handle long-term dependencies by keeping a memory at all times, and GRU has achieved great success in many sequential tasks, as shown in Table 3.

2.5.3. Fine-tuning a pre-trained BERT model

The use of a pre-trained model has many advantages. It lowers the carbon footprint, lowers computation expenses, and makes it possible to use cutting-edge models without having to train one from scratch. The Transformers library offers access to thousands of pre-trained models for a variety of purposes. A pre-trained model is then trained further on a dataset particular to the task. This is referred to as fine-tuning, a very effective training method. Fine-tuning allows BERT to model various downstream tasks, independent of the text form. The BERT model uses a self-attention mechanism to unify the word vectors as inputs that include bidirectional cross attention between two sentences. Several fine-tuning strategies exist.

2.6. Models’ evaluation

The performance of the models is measured by the following measurements:

− Accuracy measures the correctly identified samples out of all the samples [21].

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \times 100 \qquad (2)$$

where TP is the number of positive samples that were correctly labeled, TN is the number of negative samples that were correctly labeled, FP is the number of negative samples that were mislabeled as positive, and FN is the number of positive samples that were mislabeled as negative.

− Precision and Recall: Precision measures the proportion of samples labeled as positive that are actually positive, and recall measures the model's ability to accurately recognize the occurrence of a positive class instance [22], [23].

$$\text{Precision} = \frac{TP}{TP + FP} \qquad (3)$$

$$\text{Recall} = \frac{TP}{TP + FN} \qquad (4)$$

− F-Score: The harmonic mean of precision and recall [24].

$$F1\text{-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \qquad (5)$$
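Equations (2) to (5) can be checked on a small example with the sketch below. The function names, the choice of 1 as the positive label, and the toy labels are assumptions for illustration; accuracy is returned as a percentage as in (2), while the other measures are returned as fractions as in (3) to (5).

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, TN, FP, FN for a binary task."""
    tp = tn = fp = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive and truth == positive:
            tp += 1
        elif pred != positive and truth != positive:
            tn += 1
        elif pred == positive:
            fp += 1  # predicted positive, actually negative
        else:
            fn += 1  # predicted negative, actually positive
    return tp, tn, fp, fn


def evaluate(y_true, y_pred, positive=1):
    """Compute the measures of equations (2)-(5)."""
    tp, tn, fp, fn = confusion_counts(y_true, y_pred, positive)
    total = tp + tn + fp + fn
    accuracy = 100 * (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 1, 0, 0, 0, 1, 1, 0]
print(evaluate(y_true, y_pred))
```

In this toy example there are three true positives, three true negatives, one false positive, and one false negative, so accuracy is 75% and precision, recall, and F1-score are all 0.75.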

3. RESULTS AND DISCUSSION

This section presents the results obtained in our experiments on the COVID-19 dataset. The regular machine learning algorithms use two sizes of the TF-IDF feature extraction method: unigram and bigram representations. The random forest and support vector machine algorithms achieved the highest results, as shown in Table 1 and Table 2, with the bigram representation and lemmatization instead of stemming. Stemming is faster, but lemmatization is more accurate.

Table 1. The performance of regular machine learning algorithms using stemming [25]

N-gram   Algorithm  Accuracy (%)  Precision (%)  Recall (%)  F1-score (%)
Unigram  RF         96.57         96.22          96.57       95.47
Bigram   RF         96.63         96.41          96.63       95.52
Unigram  NB         96.17         95.64          96.17       94.55
Bigram   NB         96.24         95.68          96.24       94.72
Unigram  DT         96.22         95.19          96.23       95.35
Bigram   DT         96.14         95.08          96.18       95.26
Unigram  LR         96.28         95.4           96.28       95.01
Bigram   LR         96.38         95.73          96.38       95.17
Unigram  SVM        96.63         96.42          96.63       95.54
Bigram   SVM        96.64         96.45          96.64       95.53

Table 2. The performance of regular machine learning algorithms using lemmatization

N-gram   Algorithm  Accuracy (%)  Precision (%)  Recall (%)  F1-score (%)
Unigram  RF         97.1          96.9           97.0        95.6
Bigram   RF         97.65         97.1           97.2        96.3
Unigram  NB         97.2          96.6           97.3        95.6
Bigram   NB         97.12         96.9           97.6        95.7
Unigram  DT         97.4          95.71          97.41       95.92
Bigram   DT         97.2          95.9           97.01       95.8
Unigram  LR         97.15         96.4           97.91       96.2
Bigram   LR         97.1          96.32          97.1        96.5
Unigram  SVM        97.4          97.7           97.4        96.9
Bigram   SVM        97.71         97.5           97.2        96.74

The best-performing deep learning models achieved better performance than the regular machine learning algorithms because of their ability to learn discriminative features through multiple hidden layers with the word embedding feature extraction method. The deep learning models CNN, LSTM, and GRU are compared to the pre-trained model BERT. LSTM achieved higher results than the other deep learning models when using BERT as the encoder representation, as shown in Table 3. Using BERT as both a bidirectional encoder representation from transformers model and a classifier achieves an accuracy of 98.83%, which is higher than all GloVe-based models, while BERT with LSTM achieves the highest accuracy (99.1%).

Table 3. The performance of deep learning models compared to pre-trained and classifier BERT

Feature extraction method  Model              Accuracy (%)  Precision (%)  Recall (%)  F1-score (%)
GloVe                      CNN                92.6          94.2           93.2        93.6
BERT                       CNN                86.6          86.2           85.4        86.5
GloVe                      LSTM               97.92         97.3           97.4        97.3
BERT                       LSTM               99.1          99.2           99.6        99.2
GloVe                      GRU                91.7          93.2           92.5        92.4
BERT                       GRU                82.9          83.2           84.8        83.4
BERT                       BERT (classifier)  98.83         98.4           99.4        99.3

In Figure 3, we compare our results to the best-known results in the literature. Fake news detection using BERT as both a pre-trained model and a classifier achieved higher accuracy than other models. Moreover, using BERT as a pre-trained model with the LSTM model improved the results further and outperformed all other models evaluated on the same dataset in [25], which used modified LSTM and modified GRU.

[Figure 3 chart: accuracy (y-axis, 97.5 to 99.5) per model (x-axis).]

Figure 3. Comparison between our models and best models

4. CONCLUSION

One of the most challenging things for a human being is detecting bogus news. This work has presented a system to detect misleading health information. Two methodologies were applied: machine learning and deep learning. In machine learning, lemmatization achieved better results than stemming. The deep learning approaches outperform the machine learning methodology. The best solution is obtained by combining the deep learning models with pre-trained models, and the performance of each model is discussed. The content characteristics in this study are employed in the binary categorization. After detecting the credibility, the user can check experts' sayings about any rumors related to COVID-19. In future work, we will include additional information from the official Twitter accounts of WHO, UNICEF, and ICRC, which we have collected from the ground up. An extended framework will be offered to cover data written in other languages, such as Arabic and Spanish, and to categorize data using different pre-trained models to identify their effect on performance. In the classification, we will aim to use several features.

REFERENCES

[1] P. Tanwar and P. Rai, “A proposed system for opinion mining using machine learning, nlp and classifiers,” IAES International Journal of

Artificial Intelligence, vol. 9, no. 4, pp. 726–733, 2020, doi: 10.11591/ijai.v9.i4.pp726-733.

[2] N. J. Conroy, V. L. Rubin, and Y. Chen, “Automatic deception detection: methods for finding fake news,” Proceedings of the Association

for Information Science and Technology, vol. 52, no. 1, pp. 1–4, 2015, doi: 10.1002/pra2.2015.145052010082.

[3] H. Allcott and M. Gentzkow, “Social media and fake news in the 2016 election,” Journal of Economic Perspectives, vol. 31, no. 2,

pp. 211–236, May 2017, doi: 10.1257/jep.31.2.211.

[4] World Health Organization, “Novel coronavirus (2019-nCoV) situation report-11 [Informe de situación-11 del nuevo coronavirus (2019-

nCoV)],” 2020.

[5] C. S. Atodiresei, A. Tǎnǎselea, and A. Iftene, “Identifying fake news and fake users on twitter,” Procedia Computer Science, vol. 126,

pp. 451–461, 2018, doi: 10.1016/j.procS.2018.07.279.

[6] V. Pérez-Rosas, B. Kleinberg, A. Lefevre, and R. Mihalcea, “Automatic detection of fake news,” in COLING 2018 - 27th International

Conference on Computational Linguistics, Proceedings, 2018, pp. 3391–3401.

[7] P. V. A. Sivasangari V and S. R. R, “A modern approach to identify the fake news using machine learning,” International Journal of Pure

and Applied Mathematics, vol. 118, no. 20, pp. 3787–3795, 2018.

[8] M. Sreedevi, G. Vijay Kumar, K. Kiran Kumar, B. Aruna, and A. Yadav, “Prediction of fake tweets using machine learning algorithms,”

2021, pp. 188–194.

[9] C. Castillo, M. Mendoza, and B. Poblete, “Information credibility on twitter,” in Proceedings of the 20th international conference on

World wide web - WWW ’11, 2011, no. January, p. 675, doi: 10.1145/1963405.1963500.

[10] C. Castillo, M. Mendoza, and B. Poblete, “Predicting information credibility in time-sensitive social media,” Internet Research, vol. 23,

no. 5, pp. 560–588, 2013, doi: 10.1108/IntR-05-2012-0095.

[11] B. D. Horne and S. Adali, “This just in: fake news packs a lot in title, uses simpler, repetitive content in text body, more similar to satire

than real news,” 2017.

[12] R. Jehad and S. A. Yousif, “Fake news classification using random forest and decision tree (J48),” Al-Nahrain Journal of Science, vol. 23, no. 4, pp. 49–55, 2020, doi: 10.22401/anjs.23.4.09.

[13] E. Tacchini, G. Ballarin, M. L. Della Vedova, S. Moret, and L. de Alfaro, “Some like it hoax: automated fake news detection in social

networks,” in CEUR Workshop Proceedings, Apr. 2017, pp. 1–12, [Online]. Available: http://arxiv.org/abs/1704.07506.

[14] E. J. Briscoe, D. S. Appling, and H. Hayes, “Cues to deception in social media communications,” in Proceedings of the Annual Hawaii

International Conference on System Sciences, 2014, pp. 1435–1443, doi: 10.1109/HICSS.2014.186.

[15] Y. Zhu et al., “Aligning books and movies: towards story-like visual explanations by watching movies and reading books,” in Proceedings of the IEEE International Conference on Computer Vision, 2015, vol. 2015 Inter, pp. 19–27, doi: 10.1109/ICCV.2015.11.

[16] J. Devlin, M. W. Chang, K. Lee, and K. Toutanova, “BERT: Pre-training of deep bidirectional transformers for language understanding,”

in NAACL HLT 2019 - 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human

Language Technologies - Proceedings of the Conference, 2019, pp. 4171–4186.

[17] L. Tian, X. Zhang, and J. H. Lau, “Rumour detection via zero-shot cross-lingual transfer learning,” Lecture Notes in Computer Science

(including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), vol. 12975 LNAI, pp. 603–618, 2021,

doi: 10.1007/978-3-030-86486-6_37.

[18] L. Cui and D. Lee, “CoAID: COVID-19 healthcare misinformation dataset,” arXiv:2006.00885, 2020, doi: 10.48550/arXiv.2006.00885.

[19] S. Robertson, “Understanding inverse document frequency: on theoretical arguments for IDF,” Journal of Documentation, vol. 60, no. 5,

pp. 503–520, 2004, doi: 10.1108/00220410410560582.

[20] N. K. Manaswi, Deep learning with applications using Python. Berkeley, CA: Apress, 2018.

[21] B. Guo, Y. Ding, L. Yao, Y. Liang, and Z. Yu, “The future of false information detection on social media: new perspectives and trends,”

ACM Computing Surveys, vol. 53, no. 4, 2020, doi: 10.1145/3393880.

[22] M. Cantarella, N. Fraccaroli, and R. G. Volpe, “Does fake news affect voting behaviour?,” SSRN Electronic Journal, no. June, 2019,

doi: 10.2139/ssrn.3402913.

[23] S. Afroz, M. Brennan, and R. Greenstadt, “Detecting hoaxes, frauds, and deception in writing style online,” in Proceedings - IEEE

Symposium on Security and Privacy, 2012, pp. 461–475, doi: 10.1109/SP.2012.34.

[24] C. Goutte and E. Gaussier, “A probabilistic interpretation of precision, recall and f-score, with implication for evaluation,” in Lecture

Notes in Computer Science, vol. 3408, Springer Berlin Heidelberg, 2005, pp. 345–359.

[25] D. S. Abdelminaam, F. H. Ismail, M. Taha, A. Taha, E. H. Houssein, and A. Nabil, “CoAID-DEEP: an optimized intelligent framework

for automated detecting COVID-19 misleading information on twitter,” IEEE Access, vol. 9, no. December 2019, pp. 27840–27867, 2021,

doi: 10.1109/ACCESS.2021.3058066.

BIOGRAPHIES OF AUTHORS

Marina Azer has been a Lecturer Assistant in the computer science department, Modern Academy for Computer Science and Management Technology, Egypt, since 2012. She holds a bachelor's degree in computer science and received her master's degree from Helwan University. She has worked on several research topics; her research interests are neural networks and machine learning. She can be contacted at email: Marina.esam21@fci.bu.edu.eg.

Mohamed Taha is an Assistant Professor at Benha University, Faculty of Computers and Artificial Intelligence, Computer Science Department, Egypt. He received his M.Sc. degree and his Ph.D. degree in computer science at Ain Shams University, Egypt, in February 2009 and July 2015, respectively. His research interests include: Computer Vision (Object Tracking, Video Surveillance Systems), Digital Forensics (Image Forgery Detection, Document Forgery Detection, Fake Currency Detection), Image Processing (OCR), Computer Network (Routing Protocols, Security), Augmented Reality, Cloud Computing, and Data Mining (Association Rules Mining, Knowledge Discovery). Taha has contributed more than 20 technical papers to international journals and conferences. He can be contacted at email: Mohamed.taha@fci.bu.edu.eg.

Hala H. Zayed received the B.Sc. degree (Hons.) in electrical engineering and the

M.Sc. and Ph.D. degrees in electronics engineering from Benha University, in 1985, 1989, and

1995, respectively. She is currently a Professor at the Information Technology and Computer

Science Faculty, Nile University, Egypt. Her research interests include computer vision,

biometrics, machine learning, image forensics and image processing. She can be contacted at

email: hhelmy@nu.edu.eg.

Mahmoud Gadallah received the B.Sc. in electrical engineering in 1979 and the M.Sc. in 1984 from the Faculty of Engineering, Cairo University, and the Ph.D. in 1991 from Cranfield Institute of Technology (now Cranfield University), United Kingdom. He is now a professor at the Modern Academy for Computer Science and Management Technology, Cairo, Egypt. He has worked on several research topics such as image processing, pattern recognition, computer vision, and natural language processing. He can be contacted at email: mgadallah1956@gmail.com.


- Kuwait CSDocument8 pagesKuwait CSErhueh Kester AghoghoNo ratings yet

- Fake News Detection Based On Word and Document Embedding Using Machine Learning ClassifiersDocument12 pagesFake News Detection Based On Word and Document Embedding Using Machine Learning ClassifiersMahrukh ZubairNo ratings yet

- Fake News Detection Using Source Information and Bayes ClassifierDocument7 pagesFake News Detection Using Source Information and Bayes ClassifierNafisa SadafNo ratings yet

- Vol 11 2Document4 pagesVol 11 2bhuvanesh.cse23No ratings yet

- Mathematics 11 01992 v2Document21 pagesMathematics 11 01992 v2Mohammed SultanNo ratings yet

- Fake News Detection JournalDocument10 pagesFake News Detection JournalAkhilaNo ratings yet

- Detection of Misinformation About Covid-19 in Brazilian Portuguese Whatsapp Messages Using Deep LearningDocument12 pagesDetection of Misinformation About Covid-19 in Brazilian Portuguese Whatsapp Messages Using Deep LearningmlisboarochaNo ratings yet

- A Smart System For Fake News Detection Using Machine LearningDocument7 pagesA Smart System For Fake News Detection Using Machine LearningAditya AdmutheNo ratings yet

- Fake News Detection Using NLPDocument9 pagesFake News Detection Using NLPIJRASETPublicationsNo ratings yet

- I Jeter 0210122022Document5 pagesI Jeter 0210122022WARSE JournalsNo ratings yet

- A Smart System For Fake News Detection Using Machine LearningDocument7 pagesA Smart System For Fake News Detection Using Machine Learningakshay tarateNo ratings yet

- News Summarization and AuthenticationDocument3 pagesNews Summarization and AuthenticationShashaNo ratings yet

- A Review Tackling The Fake News Epidemic Using A Machine Learning AlgorithmDocument7 pagesA Review Tackling The Fake News Epidemic Using A Machine Learning AlgorithmIJRASETPublicationsNo ratings yet

- Fake and Real News Detection Using PythonDocument7 pagesFake and Real News Detection Using Pythonsanthoshsanthosh20202021No ratings yet

- ML Research PaperDocument11 pagesML Research PaperAmith RNo ratings yet

- Fake News DetectionDocument28 pagesFake News DetectionRehan MunirNo ratings yet

- Neural Networks: Chahat Raj, Priyanka MeelDocument33 pagesNeural Networks: Chahat Raj, Priyanka MeelmadhusundarNo ratings yet

- Fake News Detection Using Machine Learning AlgorithmsDocument10 pagesFake News Detection Using Machine Learning Algorithms121710306055 THAPPETA ASHOK KUMAR REDDYNo ratings yet

- Fake Information Detection by Gaining Knowledge of The Usage of Machine LearningDocument5 pagesFake Information Detection by Gaining Knowledge of The Usage of Machine LearningInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Fake News Detection Using Machine Learning: A ReviewDocument6 pagesFake News Detection Using Machine Learning: A ReviewIjaems JournalNo ratings yet

- Algorithm and Ai Chatbot Technologies and The Threats They Pose 2Document7 pagesAlgorithm and Ai Chatbot Technologies and The Threats They Pose 2api-707718552No ratings yet

- Fake News Detection Using Machine Learning Algorithms IJERTCONV9IS03104Document10 pagesFake News Detection Using Machine Learning Algorithms IJERTCONV9IS03104favour chimaNo ratings yet

- AI ReportDocument42 pagesAI Reportabhi abhiNo ratings yet

- University OF Narowal: Name:-Umair Aslam Class: - Bs Cs 1 (M) Subject: - ICT Assignment: - 1Document3 pagesUniversity OF Narowal: Name:-Umair Aslam Class: - Bs Cs 1 (M) Subject: - ICT Assignment: - 1UmairNo ratings yet

- Daa - Mini - Project (1) OrginalDocument21 pagesDaa - Mini - Project (1) OrginalHarsh GuptaNo ratings yet

- Fake News Detection Using Machine Learning Report FinalDocument26 pagesFake News Detection Using Machine Learning Report Finalkhs660164No ratings yet

- PBHE0490 Nandal Chapter15 ProofDocument24 pagesPBHE0490 Nandal Chapter15 ProofJerrryyNo ratings yet

- A Narrative Review of Medical Image Processing by Deep Learning Models: Origin To COVID-19Document22 pagesA Narrative Review of Medical Image Processing by Deep Learning Models: Origin To COVID-19Mochamad Sandi NofiansyahNo ratings yet

- Fake News DetectionDocument6 pagesFake News DetectionOnsa piarusNo ratings yet

- Hybrid Deep Learning Model For Automatic Fake News DetectionDocument11 pagesHybrid Deep Learning Model For Automatic Fake News Detectionrushendra.umbNo ratings yet

- Advancements in Machine Learning For Combatting Misinformation: A Comprehensive Analysis of Fake News Detection StrategiesDocument4 pagesAdvancements in Machine Learning For Combatting Misinformation: A Comprehensive Analysis of Fake News Detection StrategiesInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Fake News Detection System Using Machine LearningDocument4 pagesFake News Detection System Using Machine LearningVelumani sNo ratings yet

- FND 230512094314 02deaf99Document12 pagesFND 230512094314 02deaf99sudulagunta aksharaNo ratings yet

- Vaccine + MisinformationDocument11 pagesVaccine + MisinformationNafisa SadafNo ratings yet

- Approaches To Identify Fake News: A Systematic Literature ReviewDocument10 pagesApproaches To Identify Fake News: A Systematic Literature ReviewPaurush OmarNo ratings yet

- Transformer-Based Approach Fake News Detection Based On News Content and Social ContextsDocument28 pagesTransformer-Based Approach Fake News Detection Based On News Content and Social Contextsyazhini shanmugamNo ratings yet

- Fake News Classification Using Transformer Based Enhanced LSTM and BERTDocument8 pagesFake News Classification Using Transformer Based Enhanced LSTM and BERTDonlot AjaNo ratings yet

- Fake News Detection Using Machine LearniDocument13 pagesFake News Detection Using Machine LearniTefe100% (1)

- Synopsis Minor Project-2Document5 pagesSynopsis Minor Project-2Satyajeet RoutNo ratings yet

- Fake News Spreader Detection Using Naïve Bayes Classifier and Logistic RegressionDocument5 pagesFake News Spreader Detection Using Naïve Bayes Classifier and Logistic RegressionInternational Journal of Innovative Science and Research Technology100% (1)

- Fake News - 01Document5 pagesFake News - 01sudulagunta aksharaNo ratings yet

- HAWKSEYE - A Machine Learning-Based Technique For Fake News Detection With IoTDocument5 pagesHAWKSEYE - A Machine Learning-Based Technique For Fake News Detection With IoTInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- A Closer Look at Fake News DetectionDocument5 pagesA Closer Look at Fake News DetectionYeni panjaitanNo ratings yet

- Case-Initiated COVID-19Document4 pagesCase-Initiated COVID-19Ikmal ShahromNo ratings yet

- 1 s2.0 S2590005623000346 MainDocument10 pages1 s2.0 S2590005623000346 Maintheboringboy5972No ratings yet

- Fragmented-Cuneiform-Based Convolutional Neural Network For Cuneiform Character RecognitionDocument9 pagesFragmented-Cuneiform-Based Convolutional Neural Network For Cuneiform Character RecognitionIAES IJAINo ratings yet

- Segmentation and Yield Count of An Arecanut Bunch Using Deep Learning TechniquesDocument12 pagesSegmentation and Yield Count of An Arecanut Bunch Using Deep Learning TechniquesIAES IJAINo ratings yet

- Toward Accurate Amazigh Part-Of-Speech TaggingDocument9 pagesToward Accurate Amazigh Part-Of-Speech TaggingIAES IJAINo ratings yet

- Early Detection of Tomato Leaf Diseases Based On Deep Learning TechniquesDocument7 pagesEarly Detection of Tomato Leaf Diseases Based On Deep Learning TechniquesIAES IJAINo ratings yet

- Photo-Realistic Photo Synthesis Using Improved Conditional Generative Adversarial NetworksDocument8 pagesPhoto-Realistic Photo Synthesis Using Improved Conditional Generative Adversarial NetworksIAES IJAINo ratings yet

- Performance Analysis of Optimization Algorithms For Convolutional Neural Network-Based Handwritten Digit RecognitionDocument9 pagesPerformance Analysis of Optimization Algorithms For Convolutional Neural Network-Based Handwritten Digit RecognitionIAES IJAINo ratings yet

- Handwritten Digit Recognition Using Quantum Convolution Neural NetworkDocument9 pagesHandwritten Digit Recognition Using Quantum Convolution Neural NetworkIAES IJAINo ratings yet

- Optimizer Algorithms and Convolutional Neural Networks For Text ClassificationDocument8 pagesOptimizer Algorithms and Convolutional Neural Networks For Text ClassificationIAES IJAINo ratings yet

- Deep Learning-Based Classification of Cattle Behavior Using Accelerometer SensorsDocument9 pagesDeep Learning-Based Classification of Cattle Behavior Using Accelerometer SensorsIAES IJAINo ratings yet

- Defending Against Label-Flipping Attacks in Federated Learning Systems Using Uniform Manifold Approximation and ProjectionDocument8 pagesDefending Against Label-Flipping Attacks in Federated Learning Systems Using Uniform Manifold Approximation and ProjectionIAES IJAINo ratings yet

- A Novel Approach To Optimizing Customer Profiles in Relation To Business MetricsDocument11 pagesA Novel Approach To Optimizing Customer Profiles in Relation To Business MetricsIAES IJAINo ratings yet

- Pneumonia Prediction On Chest X-Ray Images Using Deep Learning ApproachDocument8 pagesPneumonia Prediction On Chest X-Ray Images Using Deep Learning ApproachIAES IJAINo ratings yet

- An Efficient Convolutional Neural Network-Based Classifier For An Imbalanced Oral Squamous Carcinoma Cell DatasetDocument13 pagesAn Efficient Convolutional Neural Network-Based Classifier For An Imbalanced Oral Squamous Carcinoma Cell DatasetIAES IJAINo ratings yet

- Parallel Multivariate Deep Learning Models For Time-Series Prediction: A Comparative Analysis in Asian Stock MarketsDocument12 pagesParallel Multivariate Deep Learning Models For Time-Series Prediction: A Comparative Analysis in Asian Stock MarketsIAES IJAINo ratings yet

- Anomaly Detection Using Deep Learning Based Model With Feature AttentionDocument8 pagesAnomaly Detection Using Deep Learning Based Model With Feature AttentionIAES IJAINo ratings yet

- Partial Half Fine-Tuning For Object Detection With Unmanned Aerial VehiclesDocument9 pagesPartial Half Fine-Tuning For Object Detection With Unmanned Aerial VehiclesIAES IJAINo ratings yet

- Model For Autism Disorder Detection Using Deep LearningDocument8 pagesModel For Autism Disorder Detection Using Deep LearningIAES IJAINo ratings yet

- Intra-Class Deep Learning Object Detection On Embedded Computer SystemDocument10 pagesIntra-Class Deep Learning Object Detection On Embedded Computer SystemIAES IJAINo ratings yet

- Implementing Deep Learning-Based Named Entity Recognition For Obtaining Narcotics Abuse Data in IndonesiaDocument8 pagesImplementing Deep Learning-Based Named Entity Recognition For Obtaining Narcotics Abuse Data in IndonesiaIAES IJAINo ratings yet

- Classifying Electrocardiograph Waveforms Using Trained Deep Learning Neural Network Based On Wavelet RepresentationDocument9 pagesClassifying Electrocardiograph Waveforms Using Trained Deep Learning Neural Network Based On Wavelet RepresentationIAES IJAINo ratings yet

- Machine Learning-Based Stress Classification System Using Wearable Sensor DevicesDocument11 pagesMachine Learning-Based Stress Classification System Using Wearable Sensor DevicesIAES IJAINo ratings yet

- An Improved Dynamic-Layered Classification of Retinal DiseasesDocument13 pagesAn Improved Dynamic-Layered Classification of Retinal DiseasesIAES IJAINo ratings yet

- An Automated Speech Recognition and Feature Selection Approach Based On Improved Northern Goshawk OptimizationDocument9 pagesAn Automated Speech Recognition and Feature Selection Approach Based On Improved Northern Goshawk OptimizationIAES IJAINo ratings yet

- Sampling Methods in Handling Imbalanced Data For Indonesia Health Insurance DatasetDocument10 pagesSampling Methods in Handling Imbalanced Data For Indonesia Health Insurance DatasetIAES IJAINo ratings yet

- Choosing Allowability Boundaries For Describing Objects in Subject AreasDocument8 pagesChoosing Allowability Boundaries For Describing Objects in Subject AreasIAES IJAINo ratings yet

- Under-Sampling Technique For Imbalanced Data Using Minimum Sum of Euclidean Distance in Principal Component SubsetDocument14 pagesUnder-Sampling Technique For Imbalanced Data Using Minimum Sum of Euclidean Distance in Principal Component SubsetIAES IJAINo ratings yet

- Combining Convolutional Neural Networks and Spatial-Channel "Squeeze and Excitation" Block For Multiple-Label Image ClassificationDocument7 pagesCombining Convolutional Neural Networks and Spatial-Channel "Squeeze and Excitation" Block For Multiple-Label Image ClassificationIAES IJAINo ratings yet

- Detecting Cyberbullying Text Using The Approaches With Machine Learning Models For The Low-Resource Bengali LanguageDocument10 pagesDetecting Cyberbullying Text Using The Approaches With Machine Learning Models For The Low-Resource Bengali LanguageIAES IJAINo ratings yet

- Comparison of Feature Extraction and Auto-Preprocessing For Chili Pepper (Capsicum Frutescens) Quality Classification Using Machine LearningDocument10 pagesComparison of Feature Extraction and Auto-Preprocessing For Chili Pepper (Capsicum Frutescens) Quality Classification Using Machine LearningIAES IJAINo ratings yet

- Investigating Optimal Features in Log Files For Anomaly Detection Using Optimization ApproachDocument9 pagesInvestigating Optimal Features in Log Files For Anomaly Detection Using Optimization ApproachIAES IJAINo ratings yet

- 100 Leads in 90 DaysDocument5 pages100 Leads in 90 DaysAjay GomesNo ratings yet

- P121.pdf (En US)Document142 pagesP121.pdf (En US)Planeador MantenimientoNo ratings yet

- TA-BVS240 243 EN LowDocument14 pagesTA-BVS240 243 EN LowdilinNo ratings yet

- Ma 0702 05 en 00 - Setup ManualDocument214 pagesMa 0702 05 en 00 - Setup ManualRoronoa ZorroNo ratings yet

- Forte III Spec Sheet v061Document1 pageForte III Spec Sheet v061Kenny SharpensteenNo ratings yet

- Advanced Process Technology Ultra Low On-Resistance Dynamic DV/DT Rating 175°C Operating Temperature Fast Switching Fully Avalanche RatedDocument8 pagesAdvanced Process Technology Ultra Low On-Resistance Dynamic DV/DT Rating 175°C Operating Temperature Fast Switching Fully Avalanche RatedLiver Haro OrellanesNo ratings yet

- Cummins CaseStudy FINAL NewDocument6 pagesCummins CaseStudy FINAL NewneerajmprakashNo ratings yet

- Digital Bangladesh: Your IT DestinationDocument28 pagesDigital Bangladesh: Your IT DestinationEnamul Hafiz LatifeeNo ratings yet

- Experiment No.: Aim: - To Measure Three Phase Power and Power Factor in A Balanced Three Phase Circuit UsingDocument5 pagesExperiment No.: Aim: - To Measure Three Phase Power and Power Factor in A Balanced Three Phase Circuit Usinggejal akshmiNo ratings yet

- (Chapter 8) Data Structures and CAATTs For Data ExtractionDocument30 pages(Chapter 8) Data Structures and CAATTs For Data ExtractionTrayle HeartNo ratings yet

- Managed IT Services RFP TemplateDocument11 pagesManaged IT Services RFP Templateedpaala67% (3)

- Heslb Loan Application GuidelinesDocument8 pagesHeslb Loan Application GuidelinesCleverence KombeNo ratings yet

- Huawei LTE RNP Introduction1Document31 pagesHuawei LTE RNP Introduction1Hân Trương100% (1)

- F330B 23Document110 pagesF330B 23ניקולאי איןNo ratings yet

- (Model Information) : LCD Color TelevisionDocument5 pages(Model Information) : LCD Color TelevisionАртём ДончуковNo ratings yet

- Debug 1214Document2 pagesDebug 1214Ahmad SyifaNo ratings yet

- Mechanics of Materials - Column PDFDocument16 pagesMechanics of Materials - Column PDFzahraNo ratings yet

- Payment Information: Spark Mastercard Business Worldelite Account Ending in 2103Document11 pagesPayment Information: Spark Mastercard Business Worldelite Account Ending in 2103Lucy Marie RamirezNo ratings yet

- DR - Sri.Sri - Sri.Shivakumara Mahaswamy College of Engineering Department of Civil Engineering I InternalDocument2 pagesDR - Sri.Sri - Sri.Shivakumara Mahaswamy College of Engineering Department of Civil Engineering I InternalCivil EngineeringNo ratings yet

- Section 20 - Brickwork: Complying With MS 522 and Section DDocument8 pagesSection 20 - Brickwork: Complying With MS 522 and Section DmonNo ratings yet

- Horquillas CascadeDocument56 pagesHorquillas CascadeBeatriz Miranda Mellado100% (1)

- 5 - Certificado InmetroDocument5 pages5 - Certificado InmetroDanilo MarinhoNo ratings yet

- Checklist Test Environment: Pass Description RemarksDocument4 pagesChecklist Test Environment: Pass Description RemarksGuru PrasadNo ratings yet

- Principles of Purchasing Nd1 Bam 117Document36 pagesPrinciples of Purchasing Nd1 Bam 117Alexander GabrielNo ratings yet

- Installation Information Emg Models: J, Ja, J-Cs Set (4 /5-String)Document4 pagesInstallation Information Emg Models: J, Ja, J-Cs Set (4 /5-String)Juninho ESPNo ratings yet

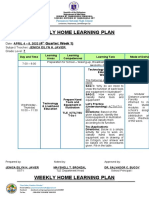

- Weekly Home Learning Plan: (4 Quarter: Week 1)Document8 pagesWeekly Home Learning Plan: (4 Quarter: Week 1)JenicaEilynNo ratings yet

- Studio Infinity - Library Case StudyDocument5 pagesStudio Infinity - Library Case StudyGayathriNo ratings yet

- Acclarix LX9 Series Datasheet-V2.2Document27 pagesAcclarix LX9 Series Datasheet-V2.2Marcos CharmeloNo ratings yet