
Lab Manual 06 - P&DC

Uploaded by Nitasha Huma


Lab # 6 – Parallel & Distributed Computing (CPE-421)

Lab Manual # 6

Objective:

To study the communication between MPI processes

Theory:

It is important to observe that when a program runs with MPI, all processes use the same compiled binary, and hence all processes are running exactly the same code. What, then, distinguishes a parallel MPI program running on P processors from P copies of the serial version of the code? Two things distinguish the parallel program:

1. Each process uses its process rank to determine which part of the algorithm's instructions is meant for it.
2. Processes communicate with each other in order to accomplish the final task.

Even though each process receives an identical copy of the instructions to be executed, this does not imply that all processes will execute the same instructions. Because each process is able to obtain its process rank (using MPI_Comm_rank), it can determine which part of the code it is supposed to run. This is accomplished through the use of if statements: code that is meant to be run by one particular process should be enclosed within an if statement that checks the rank of the process. Code that is not placed within an if statement specific to a particular rank will be executed by all processes.

The second point is communication between processes. MPI communication can be summed up in the concept of sending and receiving messages, which is done with the following two functions: MPI_Send and MPI_Recv.

MPI_Send

int MPI_Send(void* message          /* in  */,
             int count              /* in  */,
             MPI_Datatype datatype  /* in  */,
             int dest               /* in  */,
             int tag                /* in  */,
             MPI_Comm comm          /* in  */)

MPI_Recv

int MPI_Recv(void* message          /* out */,
             int count              /* in  */,
             MPI_Datatype datatype  /* in  */,
             int source             /* in  */,
             int tag                /* in  */,
             MPI_Comm comm          /* in  */,
             MPI_Status* status     /* out */)

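Understanding the Argument Lists:

message: starting address of the send/receive buffer.
count: number of elements in the send buffer; for MPI_Recv, the maximum number of elements the receive buffer can hold.
datatype: data type of the elements in the send/receive buffer (e.g. MPI_INT).
source: rank of the process that sends the data (argument of MPI_Recv).
dest: rank of the process that receives the data (argument of MPI_Send).
tag: message tag.
comm: communicator (e.g. MPI_COMM_WORLD).
status: status object; on return it holds the source and tag of the message that was actually received.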

Raheel Amjad 2018-CPE-07


An Example Program:

Key Points:

- In general, the message arrays of the sender and the receiver should be of the same type, and both should be large enough to hold the number of elements being sent.
- In most cases the send type and the receive type are identical.
- The tag can be any non-negative integer from 0 up to MPI_TAG_UB; the MPI standard guarantees that this upper bound is at least 32767.
- MPI_Recv may use the wildcard MPI_ANY_TAG for the tag. This allows an MPI_Recv to receive a message sent with any tag.
- MPI_Send cannot use the wildcard MPI_ANY_TAG; a specific tag must be specified.
- MPI_Recv may use the wildcard MPI_ANY_SOURCE for the source. This allows an MPI_Recv to receive a message from any source.
- MPI_Send must specify the process rank of the destination. No wildcard exists.

An Example Program #2:

To Calculate the Sum of Given Numbers in Parallel:


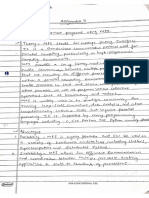

Conclusion:
