Speech Emotion Recognition System for Human Interaction Applications

Mai El Seknedy, Nile University, Giza, Egypt, Mai.Magdy@nu.edu.eg

Sahar Fawzi, Nile University, Giza, Egypt, sfawzi@nu.edu.eg

Abstract: Speech emotion recognition systems are needed nowadays in many human interaction applications such as call centers, e-learning, autonomous driver emotion detection, and physiological diseases analysis. Existing speech emotion recognition systems focus mainly on a single corpus, while recognition performance across corpora is still an ongoing research challenge. This paper presents a study of speech emotion recognition tested on 3 widely used languages (English, German and French) using the RAVDESS, EmoDB and CaFE datasets. Four machine learning classifiers were used: Multi-Layer Perceptron, Support Vector Machine, Random Forest and Logistic Regression. A newly developed feature set is introduced, consisting of the main speech features: prosodic features, spectral features and energy. Very promising results were obtained using this feature set, even when compared with the benchmark feature set “Interspeech 2009”. Furthermore, feature importance techniques were used to study the feature importance for each classifier across each corpus. From our results, SVM was found to be the best classifier in terms of both recognition rate and running performance. The model achieved an accuracy of 70.56% on RAVDESS, 85.97% on EmoDB and 70.61% on CaFE.

Keywords: Speech emotion recognition, Cross corpus, Mel frequency cepstral coefficients, prosodic features, Mel-spectrogram features, Acted datasets

978-1-6654-4076-9/21/$31.00 ©2021 IEEE

I. INTRODUCTION

Speech Emotion Recognition (SER) has a wide range of applications in human interacting systems that can comprehend human emotions to enhance the interactive experience. Useful applications include human-computer interaction, mental health analysis, autonomous vehicles, commercial applications, call centers, web-based e-learning, computer games analytics and psychological diseases analysis. For example, call center agents can receive triggers about the customer's state, which helps them handle customers more efficiently. SER can also be integrated with churn prediction models, as in telecom companies [1]. In other words, call center agencies will be able to raise an alarm for unsatisfied, satisfied, or neutral customers in order to study customer behavior. This will help to improve the quality of service and to analyze the client’s psychological attitude so that the needed actions can be taken on the spot during the call. Furthermore, e-learning is emerging at incredible rates due to the current situation. It will be beneficial to have an indication of the emotional state of students and attendants, to tell whether they are frustrated, angry, happy, calm, or neutral. This will lead to a great improvement in social communication and in ways of transferring information [2]. Moreover, SER is taking an emerging role in the medical sector, where identifying the patient’s response to the prescribed medicine or therapy is very important in deciding the best medical treatment program. The authors of [3] discussed the impact of SER on Autism Spectrum Disorder (ASD) patients, who usually suffer from deficits in facial expressions. With this technology, SER can offer an alternative psychophysiological modality for analyzing emotions through speech. Emotions play an important role in interpreting human feelings, since research has shown the power of emotions in defining human social interactions [4].

In this research, we provide a model that is capable of identifying the effect of emotional state on the end-user customer in the previously mentioned applications. It also tackles the challenge of cross-corpus SER, especially since much SER research targets a single language and does not take cross-corpus model generalization into consideration. This will enable the SER system to operate in real-life applications, which are not just unilingual. This work studies the effect of different feature set combinations, namely prosodic features (intonation, fundamental frequency), spectral features (contrast), ZCR, RMS, MFCCs and Mel spectrograms, on predicting accurate emotions for single and cross-corpus settings. Furthermore, it studies the impact of each of the introduced languages on the features. The model performance is tested on 3 benchmark acted databases, RAVDESS, EmoDB and CaFE, in 3 different languages (English, German and French). To compare the performance of our developed feature set, we also tested the model on the benchmark INTERSPEECH 2009 feature set (IS09), which is based on the INTERSPEECH 2009 Emotion Challenge [21], [32]. For classification, four supervised machine learning models were used: Support Vector Machine (SVM), Multi-Layer Perceptron (MLP), Simple Logistic Regression (SLR) and Random Forest (RF). Then we analyzed the relationship between each classifier and the features used, to get insights about the leading features per classifier. The performance of speech emotion recognition is analyzed using 10 folds to ensure model generalization and stability. Moreover, different training-testing splits (80-20 and 90-10) were applied to compare our results with previous research works. We mainly used 3 evaluation metrics: accuracy, recall rate and precision.

This paper is organized as follows: a literature review with advances in SER is presented in Section 2; the methodology is presented in Section 3, including the datasets, feature extraction, the models and the experimental setup; Section 4 presents the results of the experiments; and finally, Section 5 presents our conclusion and future work.

II. LITERATURE REVIEW

Speech emotion recognition is the ability to interpret the speaker’s emotional state from his speech. Over the past decade, SER research has increased significantly and has highlighted multiple factors for better emotion recognition, such as the datasets, the extracted speech features and the classifiers; more details can be found in the survey [5]. For the databases, many types have been used in SER systems: acted, elicited and non-acted [5]. The database’s psychographic factors also affect SER performance; the speaker’s gender and age have been studied in [6]. One key player in SER systems is the features used, where a precise set of features that successfully describes the emotions can greatly enhance the recognition success rate. Various types of features have been used in SER systems, such as prosodic features, which mainly describe the intonation and rhythm of the speech signal, for example pitch, intensity and fundamental frequency F0 [5], [7], [8]. Along with spectral features (spectral contrast, spectral bandwidth, centroid and cutoff) and signal energy features (RMS), Mel spectrogram features are used as feature vectors and as images in new deep learning studies. The most famous and widely used feature in the SER domain is the Mel-frequency cepstral coefficients (MFCC), as it represents speech in a better way by taking advantage of human auditory perception and has been shown to contain rich speech characteristics. MFCC was excellent at expressing emotions through speech and was used in many studies such as [2], [9], [24], [26], [27], [29].

Recently, newer methods such as deep neural networks have also shown a high interest in MFCC features; the authors of [10] depend on MFCCs along with spectrogram images to train a deep neural network system using CNN and LSTM. Furthermore, linear prediction coefficient (LPC) based features and voice quality features such as jitter and shimmer [27] are used alongside prosodic and spectral features. There is no generally approved set of features for precise emotion recognition; the results depend on the corpus used, the language and the classification algorithm.

Regarding classification, a wide variety of ML algorithms are used in the SER domain, such as Hidden Markov Models (HMM), Gaussian Mixture Models (GMM), Support Vector Machine (SVM), tree-based models (Random Forest), K-Nearest Neighbor (K-NN), Logistic Regression and, most popular recently, Artificial Neural Networks (ANN). Previous research studies showed that each classifier has its own advantages and limitations [8], [11], [24], [26]. The literature also shows a recent focus on multi-lingual corpora, where many researchers started to adopt the cross-corpus concept after realizing that a model trained and evaluated on a single language won’t perform as well when applied to a cross-corpus dataset. So the stream is moving towards cross-corpus models [10], [12], [13], [7], [26]. The most recent research focuses on new AI techniques for SER classification such as ensemble learning, the process by which multiple models, such as classifiers, are combined to solve a particular computational intelligence problem using techniques such as majority voting. It has shown the capability of enhancing the prediction results, as in [8], [14], [26].

Artificial neural networks are currently the most dominant stream in SER, including CNN, LSTM, auto-encoders, RNN and attention-based models [10]. They are showing very promising results, especially CNN. Recently, ordinary feature sets such as MFCCs are being fed to the network, or a whole new paradigm is used in which a pre-defined CNN model is trained (transfer learning) on spectrogram images of the speech, as in [10], [15]. Some authors studied the impact of passing the raw speech signal directly to the network and letting the network learn the speech features itself [16]. Multimodal systems with deep neural networks have appeared as well; recently we can find integration between speech and text for emotion classification, as in [30], and between speech and visual images, as in [31].

III. METHODOLOGY

In this research, supervised machine learning algorithms were used to automatically detect the speaker’s emotions. Three free, open-source acted datasets were used in training and evaluation, using four classification algorithms to identify the speaker’s emotions.

A. Datasets

RAVDESS: The Ryerson Audio-Visual Database of Emotional Speech and Song is a dynamic, gender-balanced dataset of lexically-matched statements in an American accent, recorded by 24 actors (12 male and 12 female). It includes 8 emotions: angry, happy, neutral, sad, calm, fearful, surprise, and disgust. Each expression is produced at two levels of emotional intensity, plus a neutral expression. It consists of a total of 1440 utterances as wav files with a sampling rate of 48 kHz [17].


EmoDB: The Berlin Database of Emotional Speech is a German language emotional corpus consisting of 5 male and 5 female actors speaking ten utterances in 7 emotions: angry, happy, neutral, sad, boredom, fear and disgust. It consists of a total of 535 utterances as wav files with a sampling rate of 16 kHz [18].

CaFE: The Canadian French Emotional speech database consists of 6 different sentences spoken by 6 male and 6 female actors in 7 emotions: angry, happy, neutral, sad, surprise, fear and disgust. Each expression is produced at 2 different intensities. It consists of a total of 936 utterances as wav files with a sampling rate of 192 kHz. It is also provided downsampled to a sampling rate of 48 kHz, which is the version used in this research [19].

Summary details about the databases are shown in Table.1.

Table.1 – Databases description

Dataset | Language | Utterances | Emotion labels | Number of actors
RAVDESS | English | 1440 | angry, happy, neutral, sad, calm, fearful, surprise and disgust | 24
EmoDB | German | 535 | angry, happy, neutral, sad, boredom, fear and disgust | 10
CaFE | French | 936 | angry, happy, neutral, sad, surprise, fear and disgust | 12

B. Features extraction

Different features have been extracted and used in SER systems, but there is no generic or precise set of features that can be used for best results. It was also found through this research that the ML algorithm used plays a role in feature effectiveness and feature importance. Moreover, each emotion can be captured from different speech features: for “Angry” you will find a high pitch frequency with a lot of fluctuations, while the trajectory curve of the “Neutral” emotion is flatter. On the other hand, the majority of past research agreed on a generic feature baseline and classified features into two generic main streams: prosodic and spectral features [20].

In this paper, we developed 2 new feature sets based on previous work experiences. We first developed the generic Featureset-1 containing: MFCCs (first 40 coefficients), Mel-spectrogram (with a mel bank of 128 filters), spectral contrast, root mean square (RMS), the tonal centroid features (tonnetz) and chroma features, using 2 statistical functions. Then we enhanced Featureset-1 by including prosodic features (F0, pitch frequencies, the signal’s low-frequency band mean energy) and zero-crossing rate, and reduced the MFCC coefficients to the first 14, since speech information is mainly carried in the first 20 coefficients, and the Mel-spectrogram filters to the first 8, based on best practices. The statistical functions were increased to 6 functions. We used the librosa Python package to generate both feature sets. Further details about both feature sets are displayed in Table.2.

To validate the performance of our developed feature set, we tried another benchmark feature set, the INTERSPEECH 2009 Emotion Challenge feature set (IS09), which was used before in many papers and gave accurate results in SER systems [12], [32]. The IS09 feature set contains 384 features that result from a systematic combination of 16 Low-Level Descriptors (LLDs) and their corresponding first order delta coefficients with 12 functions. The 16 LLDs consist of zero-crossing rate (ZCR), root mean square (RMS) frame energy, pitch frequency, harmonics-to-noise ratio (HNR) and MFCC. The OpenSmile toolkit was used to generate this feature set.

Table.2 – Feature sets description

Feature set | Description | Tool | Statistical functions | Total features
Featureset-1 | 40 MFCC; Mel-spectrogram; RMS; 12 Chroma; Tonnetz; 8 Contrast | Librosa (Python library) | Mean; Standard deviation | 194
Featureset-2 | 14 MFCC; 8 Mel-spectrogram; RMS; 12 Chroma; Tonnetz; 8 Contrast; Zero crossing rate; Fundamental frequency (F0); Pitch; Signal’s low-frequency band mean energy | Librosa + pYAAPT (pitch tracking algorithm in Python) | Min; Max; Standard deviation; Mean; Range (max-min); Percentiles (25, 50, 75, 90) | 122
IS09 (INTERSPEECH 2009 Emotion Challenge feature set) | RMS; 12 MFCC; ZCR; Voicing probability; F0 | OpenSmile tool | Min; Max; Standard deviation; Mean; Range (max-min); maxPos; minPos; linregc1; linregc2; linregerrQ; skewness; kurtosis | 384
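As a rough illustration of how statistical functions turn frame-level descriptors into one fixed-length vector per utterance, the sketch below applies the Featureset-2 statistics listed in Table.2 to hypothetical frame-level tracks. It is not the authors' extraction code: the track names and values are placeholders, the population standard deviation and linear-interpolation percentiles are assumptions, and in practice the tracks would come from a tool such as librosa. The last line only re-checks the IS09 count stated in the text (16 LLDs, their deltas, 12 functions).

```python
import statistics

def percentile(sorted_vals, q):
    """Linear-interpolation percentile (q in [0, 100]) of a pre-sorted list."""
    if len(sorted_vals) == 1:
        return sorted_vals[0]
    pos = (len(sorted_vals) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(sorted_vals) - 1)
    return sorted_vals[lo] + (sorted_vals[hi] - sorted_vals[lo]) * (pos - lo)

def functionals(track):
    """Summarise one frame-level track with the statistics named in Table.2."""
    s = sorted(track)
    return {
        "min": s[0],
        "max": s[-1],
        "std": statistics.pstdev(track),   # assumption: population std
        "mean": statistics.fmean(track),
        "range": s[-1] - s[0],
        "p25": percentile(s, 25),
        "p50": percentile(s, 50),
        "p75": percentile(s, 75),
        "p90": percentile(s, 90),
    }

def utterance_vector(tracks):
    """Concatenate the functionals of every track into one flat feature vector."""
    vector = []
    for name in sorted(tracks):
        vector.extend(functionals(tracks[name]).values())
    return vector

# Hypothetical frame-level values for two descriptors of one utterance.
tracks = {"rms": [0.10, 0.30, 0.20, 0.40], "zcr": [0.05, 0.07, 0.06, 0.08]}
vec = utterance_vector(tracks)

# IS09 size check from the text: 16 LLDs, plus their deltas, times 12 functions.
is09_size = 16 * 2 * 12
```

Here the four percentiles are counted as separate values, so each track contributes 9 numbers; the text counts the percentiles as a single function when it speaks of 6 functions.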


C. Feature Importance

Choosing the most suitable features to describe the speech signal is a key factor in enhancing SER performance. In this analysis we explored feature ranking per language, to analyze how each language database is affected by a group of features. We also studied how each classifier is affected by the feature subset. First, we used a filter method based on a statistical model to get an overview of feature importance with respect to each language, and then used the wrapper method “permutation importance” to study the top features per classifier.

1. Information Gain (IG): here we used the filter method, which picks up the intrinsic properties of the features measured via univariate statistics. This method relies on the information gain between each feature variable and the target variable. We then selected the top 10 features per language, as in Table.3. The number provided is the count of statistical functions that appeared for each feature.

Table.3 – Top 10 features using Information gain per language

Language | Features
RAVDESS | Mel spectrogram (8); RMS (3); MFCC (6); F0 (1); Signal Mean Energy (1)
EmoDB | Pitch (3); MFCC (6); ZCR
CaFE | RMS (1); Mel spectrogram (1); MFCC (2); F0; Pitch (2); Contrast (2); Signal Mean Energy (1)

2. Permutation Importance (PI): a model-agnostic global explanation method that provides insights into a machine learning model’s behavior. It estimates and ranks feature importance based on the impact each feature has on the trained machine learning model’s predictions [22]. Table.4 shows the top 10 features (weighted per statistical function) for each classifier on each corpus. MFCC is the most dominant feature across all the classifiers: in Logistic Regression, MFCC appeared 7 times out of the top 10 in all 3 corpora, and in SVM on the CaFE dataset MFCC appeared 9 times in the top 10. Mel-spectrogram features rank highest with the RAVDESS database, which agrees with the information gain results. Random Forest is also found to be more sensitive to the Mel-spectrogram than the other classifiers. In SVM, MFCC and pitch features are more dominant, while MLP is more dynamic with respect to each language.

Table.4 – Top 10 features using permutation importance per classifier and language

Language | MLP | SVM | Random Forest | Logistic Regression
RAVDESS | ZCR(1); MFCC(5); Pitch(1); F0(1); Contrast(2) | MFCC(8); Pitch(1); ZCR(1) | Mel-Spec(5); MFCC(2); RMS(2); Contrast(1) | MFCC(7); ZCR(1); Contrast(1); Pitch(1)
EmoDB | Pitch(3); ZCR(1); Mel-Spec(3); MFCC(1); RMS(2) | MFCC(7); Pitch(2); Tonnetz(1) | RMS(1); MFCC(4); Mean-Energy(1); Mel-Spec(2); ZCR(1); F0 | MFCC(7); Pitch(2); Tonnetz(1)
CaFE | F0; MFCC(9) | MFCC(9); F0 | MFCC(4); Contrast(1); RMS(1); Mel-Spec(3); Pitch(1) | MFCC(7); Tonnetz(1); Mel-Spec(1)

It was found that there are similarities between both methods, but our aim was to look at the model from a statistical point of view (IG) as well as at the per-classifier impact (PI).

D. Feature Scaling

Many machine learning algorithms work better when features are normalized to a relatively similar scale and are close to normally distributed. Scaling reduces the effect of variations in speakers, languages and recording environments on the recognition process. There are many normalization techniques, such as the Standard Scaler and the Minimum and Maximum Scaler (MMS) [23], [26]. The MMS method is used in this work after feature extraction to transform the features by scaling each one to a given range from 0 to 1, as calculated in Eq. (1). MMS was selected after experimenting with both MMS and the Standard Scaler, where MMS showed better results. MMS was also used in the literature, as in [26].

$$X_{scaled} = \frac{X - \min}{\max - \min} \tag{1}$$

where X is the input feature and min and max are the bounds of that feature's range.

E. Machine Learning Models

In this research, four classification techniques were considered. Firstly, the Support Vector Machine (SVM), which is known to perform well on high-dimensional data such as audio data; that is why it is one of the most popular classifiers in the SER field, owing to its high running speed and accurate results [6], [24], [26]. Secondly, Random Forest, a tree-based ensemble classifier and another benchmark classifier that is widely used in SER [26], [27]. Thirdly, the Logistic Regression algorithm is also used, to analyze the performance of a linear model [27]. Finally, the Multi-Layer Perceptron (MLP), a feedforward neural network algorithm that can be considered the first thread of neural network classification algorithms and that showed great results [2].

For hyper-parameter tuning, the GridSearchCV method is used to fine-tune the classifiers’ parameters. In SVM, the kernel function used is rbf, the decision function shape is set to a one-vs-rest (ovr) decision function of shape (n_samples, n_classes) and the regularization parameter (C) is set to 10 (inverse of regularization strength). In Random Forest, 500 trees (n_estimators) with a maximum tree depth of 20 and the “entropy” criterion function (the function to measure the quality of a split) are used. In Logistic Regression, the “lbfgs” solver was used with the l2 norm for penalization (penalty) and a maximum of 1000 iterations for the solver to converge (max_iter). For MLP, the hidden layer has 400 neurons, the solver used is ‘adam’, the activation method is set to the default ‘relu’, the size of mini-batches for stochastic optimizers is 5 (batch_size), and the learning rate is ‘constant’. Table.5 shows more details of the classifier parameters used.

Table.5 – Hyper parameters tuning for classifiers

Model | Hyperparameters
Multi-Layer Perceptron | alpha=0.0001, batch_size=5, solver='adam', hidden_layer_sizes=(400,), learning_rate=constant, max_iter=300
SVM | kernel='rbf', C=10, gamma=0.001, decision_function_shape='ovr'
Random Forest | criterion='entropy', n_estimators=500, max_depth=20
Logistic Regression | C=1.0, solver='lbfgs', penalty='l2', max_iter=1000

F. Evaluation Metrics

10-fold cross-validation was applied to ensure statistical stability and generalization of the model. In 10-fold cross-validation, the database is randomly partitioned into 10 equal-size subsamples. Of the 10 subsamples, 1 subsample, which is 10% of the database, is used as the testing data to validate the classification model, and the remaining 9 subsamples are used as training data. The reported accuracy is the average of the 10 fold tests.

We used 4 evaluation metrics during our experiments.

Accuracy: gives an overall measure of the percentage of correctly classified instances.

$$Accuracy = \frac{T_p + T_n}{T_p + T_n + F_p + F_n} \tag{2}$$

where
Tp: True positive (positive examples predicted positive)
Tn: True negative (negative examples predicted negative)
Fp: False positive (negative examples predicted positive)
Fn: False negative (positive examples predicted negative)

Precision: how many of the emotional classes predicted as positive were actually positive.

$$Precision = \frac{T_p}{T_p + F_p} \tag{3}$$

Recall: how many of the actual positive emotional classes were correctly predicted.

$$Recall = \frac{T_p}{T_p + F_n} \tag{4}$$

Confusion Matrix: another way to analyze how many samples were misclassified by the model, by giving a comparison between actual and predicted labels.

IV. RESULTS AND DISCUSSION

This section presents the classification model results for emotion recognition and compares this work with previous related research. The recognition performance was analyzed using 4 ML classifiers (MLP, SVM, Random Forest and Logistic Regression) on 3 databases from 3 different languages (English, German, and French): RAVDESS, EmoDB and CaFE. 10-fold cross-validation was used for model evaluation to ensure model generalization and stability; a different data split (90:10) was also applied to be able to compare our results with previous work.

The following analyses were performed:
1. Single corpus SER
2. Cross corpus SER

4.1 Single corpus SER

This study focuses on single-corpus training, to test the performance of the SER system with the developed feature sets on different corpora and to have a within-corpus reference for the model’s performance when compared to cross-corpus. Table.6 shows the recognition accuracy, precision and recall (Acc, Prc, Rec) of the SER system using 10 folds, trained on 3 languages. The model was run on all emotions for each corpus: RAVDESS on 8 emotions, EmoDB and CaFE on 7 emotions.

For the RAVDESS corpus, the best accuracy of 70.56%, with a precision of 69.96% and a recall of 70.07%, was obtained using SVM and the IS09 feature set, with a nearly similar accuracy of 70.42% using SVM and Featureset-2; in second place, an accuracy of 68.06% and a precision of 68.89% were obtained using MLP and Featureset-2. This is an improvement on previous work such as [2], where a maximum accuracy of 66.04% was obtained using 7 emotions on RAVDESS. An accuracy of 85.97% was achieved for the EmoDB corpus using SVM and Featureset-2, with a precision of 86.71%, which is an enhancement on [29], where 81.5% was achieved using LPCC, LFPC, MFCC and MFMC as the feature set. Finally, for the CaFE dataset an accuracy of 70.61% was achieved using Featureset-2 and SVM as the classifier. Overall, Featureset-2 showed promising SER performance compared to Featureset-1 and the benchmark IS09 (INTERSPEECH 2009 Emotion Challenge) [32].
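The min-max scaling of Eq. (1) and the 10-fold protocol described under Evaluation Metrics can be sketched together as follows. This is a minimal illustration, not the authors' pipeline: the toy data, the function names, the fixed seed and the choice to fit the minimum and maximum on the training folds only are all assumptions made for the example.

```python
import random

def min_max_fit(rows):
    """Per-feature minimum and maximum over the rows used for fitting."""
    columns = list(zip(*rows))
    return [min(c) for c in columns], [max(c) for c in columns]

def min_max_transform(rows, mins, maxs):
    """Apply Eq. (1); a constant feature (max == min) is mapped to 0.0."""
    scaled = []
    for row in rows:
        scaled.append([
            (x - lo) / (hi - lo) if hi > lo else 0.0
            for x, lo, hi in zip(row, mins, maxs)
        ])
    return scaled

def k_fold_indices(n_samples, k=10, seed=0):
    """Shuffle the sample indices and deal them into k near-equal folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]

# Hypothetical toy data: 20 utterances with 2 features each.
data = [[float(i), 100.0 - 3.0 * i] for i in range(20)]
folds = k_fold_indices(len(data), k=10)

for test_idx in folds:
    held_out = set(test_idx)
    train = [data[i] for i in range(len(data)) if i not in held_out]
    test = [data[i] for i in test_idx]
    mins, maxs = min_max_fit(train)  # assumption: fit on the training folds only
    train_scaled = min_max_transform(train, mins, maxs)
    test_scaled = min_max_transform(test, mins, maxs)
    # A classifier would be trained on train_scaled and scored on test_scaled
    # here; the reported accuracy is the mean of the 10 per-fold scores.
```

With this choice, scaled test values can fall slightly outside the 0 to 1 range when a held-out utterance exceeds the training minimum or maximum.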


Table.6 – SER performance for each of the 3 datasets using different feature sets and classifiers (using 10 folds for evaluation)

RAVDESS
Feature set | MLP Acc | MLP Prc | MLP Rec | SVM Acc | SVM Prc | SVM Rec | RF Acc | RF Prc | RF Rec | LR Acc | LR Prc | LR Rec
Featureset-1 | 63.54% | 65.36% | 62.51% | 57.92% | 57.25% | 55.64% | 55.35% | 57.97% | 53.78% | 50.42% | 49.34% | 47.87%
Featureset-2 | 68.06% | 68.89% | 67.16% | 70.42% | 69.25% | 68.21% | 62.97% | 64.63% | 62.84% | 58.81% | 59.19% | 57.99%
IS09 | 64.93% | 65.76% | 64.77% | 70.56% | 69.96% | 70.07% | 59.31% | 62.06% | 57.56% | 62.64% | 62.39% | 62.10%

EmoDB
Feature set | MLP Acc | MLP Prc | MLP Rec | SVM Acc | SVM Prc | SVM Rec | RF Acc | RF Prc | RF Rec | LR Acc | LR Prc | LR Rec
Featureset-1 | 78.12% | 77.39% | 76.99% | 82.42% | 81.06% | 81.81% | 70.27% | 70.79% | 67.92% | 77.00% | 77.00% | 75.22%
Featureset-2 | 84.86% | 85.65% | 84.37% | 85.97% | 86.71% | 85.33% | 74.01% | 73.71% | 70.67% | 82.61% | 83.76% | 81.42%
IS09 | 81.28% | 81.26% | 82.12% | 84.69% | 85.43% | 85.24% | 74.56% | 76.84% | 71.35% | 80.75% | 80.70% | 81.19%

CaFE
Feature set | MLP Acc | MLP Prc | MLP Rec | SVM Acc | SVM Prc | SVM Rec | RF Acc | RF Prc | RF Rec | LR Acc | LR Prc | LR Rec
Featureset-1 | 57.48% | 57.63% | 58.02% | 59.51% | 60.17% | 61.17% | 51.50% | 52.51% | 51.11% | 51.71% | 52.53% | 52.76%
Featureset-2 | 69.62% | 68.82% | 69.31% | 70.61% | 70.02% | 69.47% | 63.69% | 63.54% | 58.49% | 65.87% | 65.41% | 62.87%
IS09 | 55.24% | 55.94% | 55.97% | 58.99% | 59.44% | 59.42% | 49.37% | 51.37% | 49.44% | 54.92% | 55.39% | 55.70%

(RF: Random Forest; LR: Logistic Regression)
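For a multi-class task such as this one, the Acc, Prc and Rec columns can be derived from a confusion matrix by treating each emotion in turn as the positive class. The sketch below shows one way to do that. It is only an illustration: the paper does not state how per-class precision and recall were averaged, so the unweighted (macro) average used here is an assumption, and the labels and predictions are made up.

```python
def confusion_matrix(y_true, y_pred, labels):
    """Rows are actual labels, columns are predicted labels."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for actual, predicted in zip(y_true, y_pred):
        matrix[index[actual]][index[predicted]] += 1
    return matrix

def macro_scores(matrix):
    """Accuracy plus macro-averaged precision and recall from a confusion matrix."""
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(n))
    precisions, recalls = [], []
    for i in range(n):
        tp = matrix[i][i]
        fp = sum(matrix[r][i] for r in range(n)) - tp  # predicted i, actually another class
        fn = sum(matrix[i]) - tp                       # actually i, predicted another class
        precisions.append(tp / (tp + fp) if tp + fp else 0.0)
        recalls.append(tp / (tp + fn) if tp + fn else 0.0)
    return correct / total, sum(precisions) / n, sum(recalls) / n

# Made-up labels and predictions for eight utterances.
labels = ["angry", "happy", "neutral", "sad"]
y_true = ["angry", "angry", "happy", "happy", "neutral", "neutral", "sad", "sad"]
y_pred = ["angry", "happy", "happy", "happy", "neutral", "sad", "sad", "sad"]
cm = confusion_matrix(y_true, y_pred, labels)
accuracy, precision, recall = macro_scores(cm)
```

In the multi-class case the overall accuracy is simply the share of correctly labelled utterances, that is, the diagonal of the matrix divided by the total count.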

Table.7 – Comparison of SER performance of proposed system with that of existing research

Paper | Classifier | Features | Database and no. of emotions | Accuracy
J. Ancilin et al (2021) [29] | SVM | MFMC | EmoDB, 7 emotions | 81.50% (using 10 folds CV)
S. G. Koolagudi et al (2020) [11] | LSTM; Logistic Regression (LR); SVM | MFCC; IS09 | EmoDB, 5 emotions: happy, sad, fear, anger and neutral | LSTM+MFCC: 44.19%; SVM+IS09: 88.37%; LR+IS09: 85% (using leave one speaker out)
Bhavan et al (2019) [28] | Bagged ensemble of SVMs | MFCCs, spectral centroids and MFCC derivatives | EmoDB, 7 emotions | 92.45% (using 90:10 data split)
Proposed | MLP; SVM | Feature-set2; IS09 | EmoDB, 7 emotions | SVM+FS2: 85.97%; SVM+IS09: 84.69%; MLP+FS2: 84.86% (using 10 folds CV). SVM+FS2: 88.89%; SVM+IS09: 87.04% (using 90:10 data split)
A. J. et al (2020) [2] | Random Forest | MFCCs, RMS, Zero Crossings, Spectral Smoothness | RAVDESS, 7 emotions | 66.04% (using 10 folds CV)
J. Ancilin et al (2021) [29] | SVM | MFMC | RAVDESS, 8 emotions | 64.31% (using 10 folds CV)
A. Koduru et al (2020) [24] | SVM; LDA; Dtree | Pitch, energy, ZCR, Wavelet, MFCCs | RAVDESS, 4 emotions: Angry, Happy, Neutral, Sad; trained on a sample (30-40) out of each emotion | SVM: 70%; LDA: 65%; Dtree: 85% (evaluation criteria not specified)
Proposed | MLP; SVM | Feature-set2; IS09 | RAVDESS, 8 emotions | SVM+IS09: 70.56%; SVM+FS2: 70.42%; MLP+FS2: 68.06%; MLP+FS2: 82.22% on 4 emotions (using 10 folds). SVM+FS2: 77.08%; SVM+IS09: 72.92% (using 90:10 data split)


A comparison between our results and previous work is shown in Table.7, where we tried to follow the same evaluation criteria as each paper. This research shows promising results in comparison with previous work: it improved the EmoDB recognition rate by 4.47% compared with [29], which used improved MFMC to train an SVM model. For RAVDESS, our model achieved 4.52% better than the SER system in [2] and a 6.25% performance increase over the SER system in [29]. We also trained our model on 4 emotions to compare our results with [24], which achieved 85% using Dtree on a RAVDESS data subset, compared to our model, which achieved 82.22% when trained on the whole dataset.

Fig.1 – Model running performance using feature-set2 (training time and testing time in seconds per classifier: MLP, SVM, RF, LR; series: RAVDESS, EmoDB, CaFE)

Fig.1 shows the running performance of the models. MLP takes the longest time to train, as expected for a feedforward neural network model with 400 neurons, especially when the data size is large, as in the case of the RAVDESS corpus, where it took around 227 seconds. Random Forest comes in second place, which is justified since it belongs to the ensemble models category and takes time to run through all the trees. It took 10 seconds for RAVDESS and 4 seconds for CaFE. SVM showed the best training and testing time, even over Logistic Regression.

4.2 Cross corpus SER

In this study, we present the results of cross-corpus training, where we explored the performance of the model using the cross-corpus dataset. During this experiment we used Featureset-2 on the 4 emotions common to the corpora: angry, happy, neutral and sad. Data were split into train and test sets with an 80:20 ratio. First, we used RAVDESS as the training corpus, since it is the largest, and tested on the other 2 datasets, as shown in Fig.2. The model achieved an accuracy of 41.18% on EmoDB as test data using Random Forest as the classifier. Also, we achieved an accuracy of 43.56% on EmoDB as test data and MLP as a classifier.

Fig.2 – SER Accuracy Training on RAVDESS (accuracy per classifier: MLP, SVM, RF, LR; series: EmoDB, CaFE)

Fig.3 shows the results obtained when training on both the EmoDB and CaFE corpora while using RAVDESS as test data. A recognition rate of 46.67% was achieved using Random Forest. Anger recognition reaches 56%, neutral 43%, happy 33%, and sad 43%. Fig.4 shows the confusion matrix, where it can be seen that neutral is confused with sad in 38 test samples.

Fig.3 – SER Accuracy training on EmoDB and CaFE (accuracy per classifier: MLP, SVM, RF, LR; series: RAVDESS)

Fig.4 – Confusion matrix when training on EmoDB and CaFE and testing on RAVDESS

Table.8 shows the prediction results when using a cross-corpus training dataset composed of the 3 languages. Promising results were found, where RAVDESS gets 79.26%, EmoDB 88.24% and CaFE 82.35%. These findings show very promising potential for our model and emphasize model generalization. We believe that better results can be obtained if the model is enriched with more training samples from multiple corpora.
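The cross-corpus protocol described in this subsection (restrict every corpus to the four shared emotions, split 80:20, then train on one or more corpora and test on another) can be sketched as below. This is only one plausible reading of the setup, not the authors' code: whether the training side used the whole source corpus or only its 80% part is not stated, so the per-corpus split, the function names, the fixed seed and the placeholder samples are all assumptions.

```python
import random

COMMON_EMOTIONS = {"angry", "happy", "neutral", "sad"}

def keep_common(samples):
    """Drop utterances whose label is outside the four shared emotions."""
    return [s for s in samples if s[1] in COMMON_EMOTIONS]

def split_80_20(samples, seed=0):
    """Shuffle one corpus and return (train, test) with a 20% test share."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_test = round(0.2 * len(shuffled))
    return shuffled[n_test:], shuffled[:n_test]

def cross_corpus_split(corpora, train_names, test_name):
    """Training part of the source corpora, held-out part of the target corpus."""
    train = []
    for name in train_names:
        train.extend(split_80_20(keep_common(corpora[name]))[0])
    test = split_80_20(keep_common(corpora[test_name]))[1]
    return train, test

def make_corpus(labels, repeats):
    """Hypothetical placeholder samples: (feature_vector, emotion_label)."""
    return [([0.1 * i], lab) for i, lab in enumerate(labels * repeats)]

corpora = {
    "RAVDESS": make_corpus(["angry", "happy", "neutral", "sad", "calm"], 4),
    "EmoDB": make_corpus(["angry", "happy", "neutral", "sad", "boredom"], 2),
    "CaFE": make_corpus(["angry", "happy", "neutral", "sad", "surprise"], 2),
}

# Train on RAVDESS and test on EmoDB (the Fig.2 setting).
train_a, test_a = cross_corpus_split(corpora, ["RAVDESS"], "EmoDB")
# Train on EmoDB and CaFE and test on RAVDESS (the Fig.3 setting).
train_b, test_b = cross_corpus_split(corpora, ["EmoDB", "CaFE"], "RAVDESS")
```

Passing all three corpus names as `train_names` gives the pooled training set of the Table.8 setting, with each corpus's held-out part used as its test set.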


Table.8 – Comparison of SER performance when trained on cross corpus

| Corpus | MLP | SVM | Random Forest | Logistic Regression |
|---|---|---|---|---|
| RAVDESS | 74.81% | 79.26% | 79.26% | 65.19% |
| EmoDB | 83.82% | 88.24% | 88.24% | 66.34% |
| CaFE | 65.35% | 70.30% | 64.36% | 82.35% |

Table.9 shows a comparison between our proposed SER system and previous work. The authors used many cross-corpus combinations to explore the cross-corpus generalization performance of SER systems.

Table.9 – Comparison of Cross-Corpus SER with previous work

| Paper | Classifier | Testing corpus | Training corpus | Accuracy (%) |
|---|---|---|---|---|
| W. Zehra et al. (2021) [26] | SMO | EmoDB | URDU | 63.26 |
| Mustaqeem et al. (2020) [15] | DSCNN | RAVDESS | IEMOCAP | 56.5 |
| J. Parry et al. (2019) [25] | CNN-LSTM | RAVDESS | IEMOCAP | 41.9 |
| J. Parry et al. (2019) [25] | CNN | RAVDESS | IEMOCAP, EMOVO, EmoDB, EPST, RAVDESS, SAVEE | 65.67 |
| J. Parry et al. (2019) [25] | CNN-LSTM | EmoDB | IEMOCAP, EMOVO, EmoDB, EPST, RAVDESS, SAVEE | 69.72 |
| Proposed | SVM | EmoDB | EmoDB, CaFE, RAVDESS | 88.24 |
| Proposed | SVM | RAVDESS | EmoDB, CaFE, RAVDESS | 79.26 |
| Proposed | Random Forest | RAVDESS | EmoDB, CaFE | 46.67 |
| Proposed | MLP | EmoDB | RAVDESS | 43.56 |

V. CONCLUSION

In this paper, different speech features and their combinations were used to train 4 different ML models. We studied the correlation between each feature and the classifier, and which feature has the highest impact on each classifier, and found that MFCC is one of the most dominant features across the 4 classifiers. We achieved an enhancement of around 4.5% on the results of both the EmoDB and RAVDESS corpora compared to current SER systems. SVM proved to be the best classifier for SER in terms of accuracy and efficiency. MLP is a very promising classifier, which confirms the current direction of research towards neural networks of different types. Moreover, we studied the impact of training the model on different datasets, and the model proved its generalization capability. We reached promising results, where the recognition rates of the cross-corpus system are 79.26% for RAVDESS, 88.24% for EmoDB and 82.35% for CaFE. These results can be further enhanced in future work.

For future work, we see great opportunity in ensemble learning classifiers and in neural network classifiers of different types, such as CNN, LSTM and transfer learning models. We also consider adding more languages to enrich the cross-corpus model.

VI. REFERENCES

[1] M. Bojanić, V. Delić, and A. Karpov, “Call redistribution for a call center based on speech emotion recognition,” Appl. Sci., vol. 10, no. 13, pp. 6–8, 2020.

[2] A. J. and R. A. L. Matsane, “The use of Automatic Speech Recognition in education for identifying attitudes of the Speakers,” in IEEE Asia-Pacific Conference on Computer Science and Data Engineering (CSDE), 2020.

[3] M. A. Rashidan et al., “Technology-Assisted Emotion Recognition for Autism Spectrum Disorder (ASD) Children: A Systematic Literature Review,” IEEE Access, vol. 9, pp. 33638–33653, 2021.

[4] “sklearn.feature_selection.mutual_info_classif — scikit-learn 0.24.2 documentation.” [Online]. Available: https://scikit-learn.org/stable/modules/generated/sklearn.feature_selection.mutual_info_classif.html. [Accessed: 18-May-2021].

[5] M. B. Akçay and K. Oğuz, “Speech emotion recognition: Emotional models, databases, features, preprocessing methods, supporting modalities, and classifiers,” Speech Commun., vol. 116, no. October 2019, pp. 56–76, 2020.

[6] L. Abdel-Hamid, “Egyptian Arabic speech emotion recognition using prosodic, spectral and wavelet features,” Speech Commun., vol. 122, pp. 19–30, 2020.

[7] S. Mirsamadi, E. Barsoum, and C. Zhang, “Automatic speech emotion recognition using recurrent neural networks with local attention,” 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2017, pp. 2227–2231.

[8] M. El Ayadi, M. S. Kamel, and F. Karray, “Survey on speech emotion recognition: Features, classification schemes, and databases,” Pattern Recognit., vol. 44, no. 3, pp. 572–587, 2011.

[9] S. Lalitha, D. Geyasruti, R. Narayanan, and M. Shravani, “Emotion Detection Using MFCC and Cepstrum Features,” Procedia Comput. Sci., vol. 70, pp. 29–35, 2015.

[10] K. A. Araño, P. Gloor, C. Orsenigo, and C. Vercellis, “When Old Meets New: Emotion Recognition from Speech Signals,” Cognit. Comput., no. April, 2021.

[11] S. G. Koolagudi, Y. V. S. Murthy, and S. P. Bhaskar, “Choice of a classifier, based on properties of a dataset: case study-speech emotion recognition,” Int. J. Speech Technol., vol. 21, no. 1, pp. 167–183, 2018.

[12] S. Goel and H. Beigi, “Cross-Lingual Cross-Corpus Speech Emotion Recognition,” arXiv, 2020.

[13] Z. T. Liu, A. Rehman, M. Wu, W. H. Cao, and M. Hao, “Speech emotion recognition based on formant characteristics feature extraction and phoneme type convergence,” Inf. Sci. (Ny)., vol.

[14] A. Bhavan, P. Chauhan, Hitkul, and R. R. Shah, “Bagged support vector machines for emotion recognition from speech,” Knowledge-Based Syst., vol. 184, p. 104886, 2019.

[15] Mustaqeem and S. Kwon, “A CNN-assisted enhanced audio signal processing for speech emotion recognition,” Sensors (Switzerland), vol. 20, no. 1, 2020.

[16] N. Vryzas, L. Vrysis, M. Matsiola, R. Kotsakis, C. Dimoulas, and G. Kalliris, “Continuous Speech Emotion Recognition with Convolutional Neural Networks,” J. Audio Eng. Soc., vol. 68, no. 1/2, pp. 14–24, 2020.

[17] S. Livingstone and F. Russo, The Ryerson Audio-Visual Database of Emotional Speech and Song (RAVDESS), vol. 13. 2018.

[18] F. Burkhardt, A. Paeschke, M. Rolfes, W. Sendlmeier, and B. Weiss, “A database of German emotional speech,” 9th Eur. Conf. Speech Commun. Technol., no. January, pp. 1517–1520, 2005.

[19] P. Gournay, O. Lahaie, and R. Lefebvre, “A Canadian French Emotional Speech Dataset,” 18th ACM Multimedia Systems Conference, 2018.

[20] M. B. Akçay and K. Oğuz, “Speech emotion recognition: Emotional models, databases, features, preprocessing methods, supporting modalities, and classifiers,” Speech Commun., vol. 116, no. October 2019, pp. 56–76, 2020.

[21] “About openSMILE — openSMILE Documentation.” [Online]. Available: https://audeering.github.io/opensmile/about.html#capabilities. [Accessed: 18-May-2021].

[22] “Permutation feature importance with scikit-learn.” [Online]. Available: https://scikit-learn.org/stable/modules/permutation_importance.html.

[23] T. J. Sefara, “The Effects of Normalisation Methods on Speech Emotion Recognition,” Proc. - 2019 Int. Multidiscip. Inf. Technol. Eng. Conf. IMITEC 2019, no. November 2019, 2019.

[24] A. Koduru, H. B. Valiveti, and A. K. Budati, “Feature extraction algorithms to improve the speech emotion recognition rate,” Int. J. Speech Technol., vol. 23, no. 1, pp. 45–55, 2020.

[25] J. Parry, D. Palaz, G. Clarke, P. Lecomte, R. Mead, M. A. Berger, and Hofer, “Analysis of Deep Learning Architectures for Cross-Corpus Speech Emotion Recognition,” Interspeech, 2019.

[26] W. Zehra, A. R. Javed, Z. Jalil, H. U. Khan, and T. R. Gadekallu, “Cross corpus multi-lingual speech emotion recognition using ensemble learning,” Complex Intell. Syst., vol. 7, pp. 1845–1854, 2021.

[27] S. Klaylat, Osman, R. Zantout, and L. Hamandi, “Emotion recognition in Arabic speech,” Analog Integr Circ Sig Process, vol. 96, pp. 337–351, 2018.

[28] A. Bhavan, P. Chauhan, Hitkul, and R. R. Shah, “Bagged support vector machines for emotion recognition from speech,” Knowl. Based Syst., vol. 184, 2019.

[29] J. Ancilin and A. Milton, “Improved speech emotion recognition with Mel frequency magnitude coefficient,” Applied Acoustics, vol. 179, p. 108046, 2021.

[30] Z. Peng, Y. Lu, S. Pan, and Y. Liu, “Efficient Speech Emotion Recognition Using Multi-Scale CNN and Attention,” ICASSP 2021 - 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2021, pp. 3020–3024.

[31] N.-C. Ristea, L. C. Duţu, and A. Radoi, “Emotion Recognition System from Speech and Visual Information based on Convolutional Neural Networks,” 2019 International Conference on Speech Technology and Human-Computer Dialogue (SpeD), 2019, pp. 1–6.

[32] B. Schuller, S. Steidl, and A. Batliner, “The INTERSPEECH 2009 emotion challenge,” INTERSPEECH, 2010.


You might also like

- XEmoAccent Embracing Diversity in Cross-Accent EmoDocument19 pagesXEmoAccent Embracing Diversity in Cross-Accent Emoa.agrimaNo ratings yet

- Speech-Emotion-Recognition Using SVM, Decision Tree and LDA ReportDocument7 pagesSpeech-Emotion-Recognition Using SVM, Decision Tree and LDA ReportAditi BiswasNo ratings yet

- Bangla Speech Emotion Recognition and Cross-Lingual Study Using Deep CNN and BLSTM NetworksDocument15 pagesBangla Speech Emotion Recognition and Cross-Lingual Study Using Deep CNN and BLSTM NetworksJose AngelNo ratings yet

- Real-Time Speech Emotion Recognition Using Deep LeDocument40 pagesReal-Time Speech Emotion Recognition Using Deep LeKEY TECHNOLOGIESNo ratings yet

- Project ReportDocument106 pagesProject ReportPerinban DNo ratings yet

- Speech Emotion Recognition Using Deep LearningDocument6 pagesSpeech Emotion Recognition Using Deep LearningInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Deep Learning Based Emotion Recognition System Using Speech Features and TranscriptionsDocument12 pagesDeep Learning Based Emotion Recognition System Using Speech Features and TranscriptionskunchalakalyanimscNo ratings yet

- Speech Emotion Recognition: Methods and Cases Study: January 2018Document9 pagesSpeech Emotion Recognition: Methods and Cases Study: January 2018nehaNo ratings yet

- Research PaperDocument5 pagesResearch PaperakshatNo ratings yet

- Arabic English Speech Emotion Recognition SystemDocument5 pagesArabic English Speech Emotion Recognition SystemSahar FawziNo ratings yet

- Automatic Speech Emotion Recognition Using Support Vector MachineDocument5 pagesAutomatic Speech Emotion Recognition Using Support Vector MachineRohit SoniNo ratings yet

- Paper IEEE EAIS 2020Document5 pagesPaper IEEE EAIS 2020vaseem akramNo ratings yet

- Speech Emotion Recognition: Submitted by Manoj Rajput 2019PEC5303Document11 pagesSpeech Emotion Recognition: Submitted by Manoj Rajput 2019PEC5303Girdhar Gopal GautamNo ratings yet

- Gender, Age and Emotion Recognition Using Speech AnalysisDocument6 pagesGender, Age and Emotion Recognition Using Speech AnalysisAnil Kumar BNo ratings yet

- Research Paper On Speech Emotion Recogtion SystemDocument9 pagesResearch Paper On Speech Emotion Recogtion SystemGayathri ShivaNo ratings yet

- Speech Emotion Recognition Using Deep Learning Techniques: A ReviewDocument19 pagesSpeech Emotion Recognition Using Deep Learning Techniques: A ReviewAkhila RNo ratings yet

- ASERS-LSTM: Arabic Speech Emotion Recognition System Based On LSTM ModelDocument9 pagesASERS-LSTM: Arabic Speech Emotion Recognition System Based On LSTM ModelsipijNo ratings yet

- Pre ProcessingDocument54 pagesPre Processingzelvrian 2No ratings yet

- A Comprehensive Review of Speech Emotion Recognition SystemsDocument20 pagesA Comprehensive Review of Speech Emotion Recognition SystemsR B SHARANNo ratings yet

- IJRPR4210Document12 pagesIJRPR4210Gayathri ShivaNo ratings yet

- Chethana H N REPORTDocument12 pagesChethana H N REPORTGirdhar Gopal GautamNo ratings yet

- Sensors: Speech Emotion Recognition With Heterogeneous Feature Unification of Deep Neural NetworkDocument15 pagesSensors: Speech Emotion Recognition With Heterogeneous Feature Unification of Deep Neural NetworkMohammed AnsafNo ratings yet

- Feeler: Emotion Classification of Text Using Vector Space ModelDocument7 pagesFeeler: Emotion Classification of Text Using Vector Space ModelJulius BataNo ratings yet

- An Ensemble of Traditional Supervised and Deep Learning Models For Arabic Text-Based Emotion Detection and AnalysisDocument7 pagesAn Ensemble of Traditional Supervised and Deep Learning Models For Arabic Text-Based Emotion Detection and AnalysisInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Emotion Recognition On Speech Signals Using Machine LearningDocument6 pagesEmotion Recognition On Speech Signals Using Machine LearningSoum SarkarNo ratings yet

- Getahun 2016Document8 pagesGetahun 2016Minda BekeleNo ratings yet

- Applsci 12 09188 v2Document17 pagesApplsci 12 09188 v2SAMIA FARAJ RAMADAN ABD-HOODNo ratings yet

- Information Sciences: Zhen-Tao Liu, Abdul Rehman, Min Wu, Wei-Hua Cao, Man HaoDocument17 pagesInformation Sciences: Zhen-Tao Liu, Abdul Rehman, Min Wu, Wei-Hua Cao, Man HaoAbdallah GrimaNo ratings yet

- Emotion and Depression Detection From SpeechDocument9 pagesEmotion and Depression Detection From SpeechJosemeire Silva Dos SantosNo ratings yet

- 10 - Recurrent Neural Network Based Speech EmotionDocument13 pages10 - Recurrent Neural Network Based Speech EmotionAlex HAlesNo ratings yet

- Análisis Del Movimiento Facial Más Allá de Las Expresiones Emocionales - CompressedDocument21 pagesAnálisis Del Movimiento Facial Más Allá de Las Expresiones Emocionales - CompressedDAVID MARTINEZNo ratings yet

- Emotion Classification Using Deep Learning TechniqueDocument21 pagesEmotion Classification Using Deep Learning TechniqueAbhimanyu PugazhandhiNo ratings yet

- Is Deception Emotional An Emotion DrivenDocument5 pagesIs Deception Emotional An Emotion DrivenDavidLeucasNo ratings yet

- (IJCST-V10I3P27) :abin Joseph, Ameena A.K, Lamia Thasni O Nysam, Abeera V PDocument10 pages(IJCST-V10I3P27) :abin Joseph, Ameena A.K, Lamia Thasni O Nysam, Abeera V PEighthSenseGroupNo ratings yet

- ASERS-CNN: Arabic Speech Emotion Recognition System Based On CNN ModelDocument9 pagesASERS-CNN: Arabic Speech Emotion Recognition System Based On CNN ModelsipijNo ratings yet

- Sensors 23 06212 v2Document20 pagesSensors 23 06212 v2vaseem akramNo ratings yet

- Emotion Recognition from Speech Using SER TechniqueDocument13 pagesEmotion Recognition from Speech Using SER TechniqueDeepak SubramaniamNo ratings yet

- Speech Communication: Qirong Mao, Guopeng Xu, Wentao Xue, Jianping Gou, Yongzhao ZhanDocument10 pagesSpeech Communication: Qirong Mao, Guopeng Xu, Wentao Xue, Jianping Gou, Yongzhao ZhanAbdallah GrimaNo ratings yet

- Effective Modelling of Human Expressive States From Voice by Adaptively Tuning The Neuro-Fuzzy Inference SystemDocument10 pagesEffective Modelling of Human Expressive States From Voice by Adaptively Tuning The Neuro-Fuzzy Inference SystemIAES IJAINo ratings yet

- Human Emotion Detection With Speech Recognition Using Mel-Frequency Cepstral Coefficient and CNN - NewDocument2 pagesHuman Emotion Detection With Speech Recognition Using Mel-Frequency Cepstral Coefficient and CNN - NewakshatNo ratings yet

- Speech Based Emotion Recognition Using Machine LearningDocument6 pagesSpeech Based Emotion Recognition Using Machine LearningIJRASETPublicationsNo ratings yet

- Winter Semester 2021-22 CSE4020-Machine Learning Digital Assignment-1Document20 pagesWinter Semester 2021-22 CSE4020-Machine Learning Digital Assignment-1STYXNo ratings yet

- Speech Emotion Recognition Using Machine Learning - A Systematic ReviewDocument25 pagesSpeech Emotion Recognition Using Machine Learning - A Systematic ReviewSustainable SushiNo ratings yet

- EpochSER MTADocument35 pagesEpochSER MTArjlhqNo ratings yet

- 1 PBDocument12 pages1 PBAbdallah GrimaNo ratings yet

- Classification of Emotions From Speech Using Implicit FeaturesDocument6 pagesClassification of Emotions From Speech Using Implicit Featuresswati asnotkarNo ratings yet

- Improving Hate Speech Detection of Urdu Tweets Using Sentiment AnalysisDocument10 pagesImproving Hate Speech Detection of Urdu Tweets Using Sentiment AnalysisMessalti Ahmed zoubirNo ratings yet

- Neutrosophic Speech Recognition Algorithm For Speech Under Stress by Machine LearningDocument12 pagesNeutrosophic Speech Recognition Algorithm For Speech Under Stress by Machine LearningScience DirectNo ratings yet

- A Review On Speech Recognition Methods: Ram Paul Rajender Kr. Beniwal Rinku Kumar Rohit SainiDocument7 pagesA Review On Speech Recognition Methods: Ram Paul Rajender Kr. Beniwal Rinku Kumar Rohit SainiRahul SharmaNo ratings yet

- An Evolutionary Optimization Method For Selecting Features For Speech Emotion RecognitionDocument9 pagesAn Evolutionary Optimization Method For Selecting Features For Speech Emotion RecognitionTELKOMNIKANo ratings yet

- Voice Recognizationusing SVMand ANNDocument6 pagesVoice Recognizationusing SVMand ANNZinia RahmanNo ratings yet

- Speech Emotion Recognition System Using Recurrent Neural Network in Deep LearningDocument7 pagesSpeech Emotion Recognition System Using Recurrent Neural Network in Deep LearningIJRASETPublicationsNo ratings yet

- AffectNet Onecolumn-2Document31 pagesAffectNet Onecolumn-2assasaa asasaNo ratings yet

- (IJIT-V6I5P9) :amarjeet SinghDocument9 pages(IJIT-V6I5P9) :amarjeet SinghIJITJournalsNo ratings yet

- Facial Emotion Recognition Using Convolution Neural Network: AbstractDocument6 pagesFacial Emotion Recognition Using Convolution Neural Network: AbstractKallu kaliaNo ratings yet

- Irjet V10i1154 240111110029 F406b7aaDocument6 pagesIrjet V10i1154 240111110029 F406b7aaR B SHARANNo ratings yet

- Emotion Detction research paperDocument4 pagesEmotion Detction research paperLibrarian SCENo ratings yet

- An Application: Emo-Prediction Using Sentiment AnalysisDocument3 pagesAn Application: Emo-Prediction Using Sentiment AnalysisInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Information Sciences: Luefeng Chen, Wanjuan Su, Yu Feng, Min Wu, Jinhua She, Kaoru HirotaDocument14 pagesInformation Sciences: Luefeng Chen, Wanjuan Su, Yu Feng, Min Wu, Jinhua She, Kaoru HirotaagrimaABDELLAHNo ratings yet

- Comparative Study On Stroke Lesion Core Segmentation in CTP ImagesDocument6 pagesComparative Study On Stroke Lesion Core Segmentation in CTP ImagesSahar FawziNo ratings yet

- Hala PaperDocument6 pagesHala PaperSahar FawziNo ratings yet

- Dahlia Conf - Paper Effat-V5Document4 pagesDahlia Conf - Paper Effat-V5Sahar FawziNo ratings yet

- Arabic English Speech Emotion Recognition SystemDocument5 pagesArabic English Speech Emotion Recognition SystemSahar FawziNo ratings yet

- Consistency Analysis - Nile Conference V1!7!2021Document4 pagesConsistency Analysis - Nile Conference V1!7!2021Sahar FawziNo ratings yet

- Quality Management Plan Airline Management SystemDocument7 pagesQuality Management Plan Airline Management SystemNisar Ahmad0% (1)

- GFRAS - NELK - Module 4 Professional EthicsDocument39 pagesGFRAS - NELK - Module 4 Professional EthicsAssisi-marillac GranteesNo ratings yet

- Parenting QuestionnaireDocument4 pagesParenting QuestionnaireshobhaNo ratings yet

- SportsDocument9 pagesSportsErin CarrollNo ratings yet

- A Project Report On Role of Packaging On Consumer Buying Behaviordocument TranscriptDocument36 pagesA Project Report On Role of Packaging On Consumer Buying Behaviordocument TranscriptIqra RajpootNo ratings yet

- Cheena Francesca Luciano - Q2-PERDEV-WEEK 4-WorksheetDocument3 pagesCheena Francesca Luciano - Q2-PERDEV-WEEK 4-WorksheetCheena Francesca LucianoNo ratings yet

- April 2020Document14 pagesApril 2020Aji saifullahNo ratings yet

- Administrative Law SyllabusDocument29 pagesAdministrative Law SyllabusAiman HassanNo ratings yet

- Guidelines in Writing A Movie ReviewDocument2 pagesGuidelines in Writing A Movie ReviewTer RisaNo ratings yet

- Module 4 - Motivation-1Document24 pagesModule 4 - Motivation-1Sujith NNo ratings yet

- Difference Between Fashion and StyleDocument2 pagesDifference Between Fashion and Stylewikki86No ratings yet

- Conference BrochureDocument2 pagesConference BrochureSri GaneshNo ratings yet

- Elementary Math Lessons Cover Counting, NumbersDocument10 pagesElementary Math Lessons Cover Counting, NumbersSHAIREL GESIMNo ratings yet

- Tesda Courses Offered in Cebu International AcademyDocument3 pagesTesda Courses Offered in Cebu International AcademyodesageNo ratings yet

- Thesis Statement and Topic Sentence ExercisesDocument5 pagesThesis Statement and Topic Sentence Exercisesgbv8rcfq100% (2)

- Graduate Student Yearly ReportDocument12 pagesGraduate Student Yearly Reportapi-655744515No ratings yet

- ArmDocument5 pagesArmMahrukh SaeedNo ratings yet

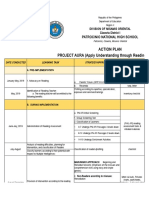

- Action Plan For Reading ProgramDocument11 pagesAction Plan For Reading ProgramVince Rayos Cailing100% (3)

- RationalPlan Desktop Project Management GuideDocument43 pagesRationalPlan Desktop Project Management GuidebayoakacNo ratings yet

- Ase2000 V2.28 Um PDFDocument292 pagesAse2000 V2.28 Um PDFamin rusydiNo ratings yet

- BGCSE PHYSICS Assessment SyllabusDocument41 pagesBGCSE PHYSICS Assessment Syllabustebogo modisenyaneNo ratings yet

- Analysis of Algorithms + McconnellDocument3 pagesAnalysis of Algorithms + McconnellNDNUPortfolioNo ratings yet

- Intro MS WordDocument23 pagesIntro MS Wordmonica saturninoNo ratings yet

- Brian Canuno - Decision Making ScenariosDocument3 pagesBrian Canuno - Decision Making ScenariosBrian TiangcoNo ratings yet

- Enhancing Opportunities For Nutrition Education in Public Schools in The PhilippinesDocument53 pagesEnhancing Opportunities For Nutrition Education in Public Schools in The PhilippinesLianne VergaraNo ratings yet

- Psy 1100 - Social Diversity Interview PaperDocument6 pagesPsy 1100 - Social Diversity Interview Paperapi-608744142No ratings yet

- Leeds University's 400 Arabic Manuscript CollectionDocument68 pagesLeeds University's 400 Arabic Manuscript CollectionAxx A AlNo ratings yet

- How To Develop A Super Memory and Learn Like A Genius With Jim Kwik Nov 2018 LaunchDocument12 pagesHow To Develop A Super Memory and Learn Like A Genius With Jim Kwik Nov 2018 LaunchCarolina Ávila67% (3)

- UAB Nursing CDP Log and AssignmentDocument2 pagesUAB Nursing CDP Log and AssignmentMichelle MorrisonNo ratings yet

- Ubs BrochureDocument44 pagesUbs BrochureSarabjit SinghNo ratings yet