Spark - RDD CS DESIGN

Uploaded by Ghada abbes


SPARK & RDD CHEAT SHEET

Spark & RDD Basics

Apache Spark: An open source, Hadoop-compatible, fast and expressive cluster computing platform.

RDD: The core concept in Apache Spark is the RDD (Resilient Distributed Dataset), an immutable distributed collection of data that is partitioned across the machines in a cluster.

Transformation: An operation on an RDD, such as filter(), map() or union(), that yields another RDD.

Action: An operation that triggers a computation, such as count(), first(), take(n) or collect().

Partition: A logical division of the data, stored on a node in the cluster.

Spark Context: Holds the connection to the Spark cluster manager.

Driver: The process that runs the main() function of an application and creates the SparkContext.

Worker: Any node that can run application code on the cluster.

Components of Spark

Executors: An executor is a JVM process that runs on the worker nodes and executes multiple tasks. Executors receive tasks, deserialize them and run them. Executors use a cache so that tasks can run faster.

Tasks: A task consists of the code along with its JARs.

Node: A node comprises multiple executors.

RDD: A big data structure used to represent data that cannot be stored on a single machine. The data is therefore distributed, partitioned and split across the computers.

Input: Every RDD is built from some input, such as a text file or a Hadoop file.

Output: Functions in Spark produce RDDs as output. The model is functional: each function in a chain receives an input RDD and returns an output RDD.

Components of Spark RDD

• Partitions
• Compute function
• Dependencies
• Meta-data (opt)
• Partitioner (opt)

Shared Variables on Spark

Broadcast variables: A broadcast variable is a read-only variable that is copied to each worker only once. It is similar to the distributed cache in MapReduce. We can set, destroy and unpersist these values. It is used to keep a copy of the data on all the nodes.

Example syntax:

broadcastVariable = sparkContext.broadcast(500)
broadcastVariable.value

Accumulators: Workers can only add to an accumulator, using an associative operation. Accumulators are usually used in parallel sums, and only the driver can read an accumulator's value. They are the same as counters in MapReduce. Basically, accumulators are variables that can be incremented by distributed tasks and are used for aggregating information.

Example syntax:

exampleAccumulator = sparkContext.accumulator(1)
exampleAccumulator.add(5)

Unified Libraries in Spark

Spark SQL: A Spark module for working with structured data. Data can be queried with SQL or HQL.

Spark Streaming: Used to build scalable applications that provide fault-tolerant streaming. It processes data such as web server logs and Facebook logs in real time.

MLlib (Machine Learning): A scalable machine learning library that provides various algorithms for classification, regression, clustering etc.

GraphX: An API for graphs. This module can efficiently find the shortest path for static graphs.

Cluster Manager

The cluster manager allocates resources to each application in a driver program. Apache Spark supports 3 types of cluster managers:

• Standalone
• Mesos
• Yarn

Spark Stack

Libraries: Spark SQL (Hive support), Spark Streaming, MLlib (Machine Learning), GraphX
Apache Spark (Core engine)
Tachyon: Distributed memory-centric storage system
Hadoop Distributed File System
MESOS or YARN: Cluster resource manager

Transformations

| Function | Description |
| --- | --- |
| map(function) | Returns a new RDD by applying function to each element |
| filter(function) | Returns a new dataset formed by selecting those elements of the source on which function returns true |
| filterByRange(lower, upper) | Returns an RDD containing only the elements whose keys fall in the specified range |
| flatMap(function) | Similar to map, but the function returns a sequence instead of a single value |
| reduceByKey(function,[num Tasks]) | Aggregates the values of each key using a function |
| groupByKey([num Tasks]) | Converts (K,V) to (K, <iterable V>) |
| distinct([num Tasks]) | Eliminates duplicates from the RDD |
| mapPartitions(function) | Runs function separately on each partition of the RDD |
| mapPartitionsWithIndex(function) | Like mapPartitions, but also provides function with an integer value representing the index of the partition |
| sample(withReplacement, fraction, seed) | Samples a fraction of the data using the given random number generator seed |
| union() | Returns a new RDD containing all elements of the source RDD and of the argument |
| intersection() | Returns a new RDD that contains the intersection of the elements in the two datasets |
| cartesian() | Returns the Cartesian product of all pairs of elements |
| subtract() | Returns a new RDD created by removing from the source RDD the elements it has in common with the argument |
| join(RDD,[numTasks]) | Joins the elements of two datasets that share a key. When invoked on (A,B) and (A,C) it creates a new RDD (A,(B,C)) |
| cogroup(RDD,[numTasks]) | When invoked on (A,B) and (A,C) it creates a new RDD (A,(<iterable B>, <iterable C>)) |

Actions

| Function | Description |
| --- | --- |
| count() | Gets the number of data elements in the RDD |
| collect() | Gets all the data elements in the RDD as an array |
| reduce(function) | Aggregates the data elements of the RDD using a function that takes two arguments and returns one |
| take(n) | Fetches the first n elements of the RDD |
| foreach(function) | Executes function for each data element in the RDD |
| first() | Retrieves the first data element of the RDD |
| saveAsTextFile(path) | Writes the content of the RDD to a text file, or a set of text files, on the local system |
| takeOrdered(n, [ordering]) | Returns the first n elements of the RDD using either the natural order or a custom comparator |

RDD Persistence Methods

| Storage level | Description |
| --- | --- |
| MEMORY_ONLY (default level) | Stores the RDD in the available cluster memory as deserialized Java objects |
| MEMORY_AND_DISK | Stores the RDD as deserialized Java objects. If the RDD does not fit in the cluster memory, it stores the remaining partitions on disk and reads them from there |
| MEMORY_ONLY_SER | Stores the RDD as serialized Java objects; this is more CPU intensive |
| MEMORY_AND_DISK_SER | Same as MEMORY_ONLY_SER, but stores partitions on disk when the memory is not sufficient |
| DISK_ONLY | Stores the RDD only on disk |
| MEMORY_ONLY_2, MEMORY_AND_DISK_2, etc. | Same as the other levels, except that the partitions are replicated on 2 slave nodes |

Persistence Methods

| Function | Description |
| --- | --- |
| cache() | Used to avoid unnecessary recomputation. This is the same as persist(MEMORY_ONLY) |
| persist([Storage Level]) | Persists the RDD with the given storage level, or with the default storage level if none is given |
| unpersist() | Marks the RDD as non-persistent and removes its blocks from memory and disk |
| checkpoint() | Saves the RDD to a file inside the checkpoint directory and removes all references to its parent RDDs |
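To make the semantics of a few of the transformations and actions listed in this cheat sheet concrete, here is a minimal sketch that models them on ordinary Python lists. It is not Spark code: nothing is distributed or lazy, and the helper names `reduce_by_key`, `group_by_key` and `join_pairs` are hypothetical, introduced only for illustration. The comments name the RDD method each line is meant to mimic. Results are sorted only to make the output deterministic; Spark itself does not guarantee an order.

```python
from collections import defaultdict
from functools import reduce
from itertools import chain

# A plain list stands in for an RDD (assumption: local model, not Spark).
words = ["spark", "rdd", "spark", "action", "rdd", "spark"]

# map(function): apply the function to every element.
pairs = [(w, 1) for w in words]

# filter(function): keep the elements on which the function returns true.
long_words = [w for w in words if len(w) > 3]

# flatMap(function): the function returns a sequence; the results are flattened.
letters = list(chain.from_iterable(list(w) for w in ["ab", "c"]))


def reduce_by_key(kv_pairs, func):
    """Model of reduceByKey: merge the values of each key with func."""
    merged = {}
    for key, value in kv_pairs:
        merged[key] = func(merged[key], value) if key in merged else value
    return sorted(merged.items())


def group_by_key(kv_pairs):
    """Model of groupByKey: (K, V) pairs become (K, list of V)."""
    groups = defaultdict(list)
    for key, value in kv_pairs:
        groups[key].append(value)
    return sorted(groups.items())


def join_pairs(left, right):
    """Model of join: (A, B) and (A, C) become (A, (B, C))."""
    return [(k, (v, w)) for k, v in left for k2, w in right if k == k2]


counts = reduce_by_key(pairs, lambda a, b: a + b)
grouped = group_by_key(pairs)
joined = join_pairs([("a", 1), ("b", 2)], [("a", "x"), ("c", "y")])

# Actions return plain values instead of a new collection.
total = reduce(lambda a, b: a + b, [v for _, v in pairs])  # reduce(function)
first_two = words[:2]                                      # take(2)
smallest_two = sorted(words)[:2]                           # takeOrdered(2)

print(counts)
print(joined)
```

In this model `reduce_by_key` folds the values as they arrive, while `group_by_key` first collects every value of a key into a list; this mirrors the difference between the two table entries, where reduceByKey aggregates and groupByKey only regroups.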

You might also like

- Spark InterviewDocument17 pagesSpark InterviewDastagiri SahebNo ratings yet

- SELLICS-Amazon PPC TheUltimateGuideDocument30 pagesSELLICS-Amazon PPC TheUltimateGuideSaad ButtNo ratings yet

- PySpark Transformations TutorialDocument58 pagesPySpark Transformations Tutorialravikumar lanka100% (1)

- Sap HR Abap Interview FaqDocument6 pagesSap HR Abap Interview Faqvaibhavsam0766No ratings yet

- Spark ArchitectureDocument12 pagesSpark ArchitectureabikoolinNo ratings yet

- Ch08 Physical Model TemplateDocument6 pagesCh08 Physical Model TemplateG Chandra ShekarNo ratings yet

- GEH-6721 Vol II PDFDocument908 pagesGEH-6721 Vol II PDFMARIO OLIVIERINo ratings yet

- Vdoc - Pub Processing An Introduction To ProgrammingDocument571 pagesVdoc - Pub Processing An Introduction To ProgrammingAxel Ortiz RicaldeNo ratings yet

- 4 - Action and RDD TransformationsDocument25 pages4 - Action and RDD Transformationsravikumar lankaNo ratings yet

- SparkDocument17 pagesSparkRavi KumarNo ratings yet

- Spark Transformations and ActionsDocument24 pagesSpark Transformations and ActionschandraNo ratings yet

- Based On Various Online Platform Resources Like IITK, IITM, Simplilearn, Upgrad, Coursera EtcDocument28 pagesBased On Various Online Platform Resources Like IITK, IITM, Simplilearn, Upgrad, Coursera EtcJCTNo ratings yet

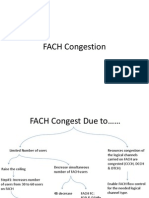

- FACH CongestionDocument12 pagesFACH Congestionshawky_menisy100% (2)

- 4a Resilient Distributed Datasets Etc PDFDocument46 pages4a Resilient Distributed Datasets Etc PDF23522020 Danendra Athallariq Harya PNo ratings yet

- SparkDocument13 pagesSparkthunuguri santoshNo ratings yet

- Ab Initio - V1.4Document15 pagesAb Initio - V1.4Praveen JoshiNo ratings yet

- Unit-5 SparkDocument24 pagesUnit-5 Sparknosopa5904No ratings yet

- BDA-MapReduce (1) 5rfgy656yhgvcft6Document60 pagesBDA-MapReduce (1) 5rfgy656yhgvcft6Ayush JhaNo ratings yet

- Lecture 6.5 - Python Map FunctionDocument7 pagesLecture 6.5 - Python Map Functiondidarulislam2460No ratings yet

- Open Spark ShellDocument12 pagesOpen Spark ShellRamyaKrishnanNo ratings yet

- Cloud Computing ProfDocument11 pagesCloud Computing Profniveshgarg2025No ratings yet

- Big Data - SparkDocument72 pagesBig Data - SparkSuprasannaPradhan100% (1)

- Map ReduceDocument8 pagesMap ReduceVishakha SharmaNo ratings yet

- Unit 3Document27 pagesUnit 3Radhamani VNo ratings yet

- BDA Unit-2Document11 pagesBDA Unit-2Varshit KondetiNo ratings yet

- Hadoop (Mapreduce)Document43 pagesHadoop (Mapreduce)Nisrine MofakirNo ratings yet

- Unit-2 Bda Kalyan - PagenumberDocument15 pagesUnit-2 Bda Kalyan - Pagenumberrohit sai tech viewersNo ratings yet

- Distributed and Cloud ComputingDocument58 pagesDistributed and Cloud Computing18JE0254 CHIRAG JAINNo ratings yet

- Big Data Analysis With Scala and Spark: Heather MillerDocument17 pagesBig Data Analysis With Scala and Spark: Heather MillerddNo ratings yet

- New 78Document1 pageNew 78Ramanjaneyulu KancharlaNo ratings yet

- New 79Document1 pageNew 79Ramanjaneyulu KancharlaNo ratings yet

- RDD Programing 8Document28 pagesRDD Programing 8chandrasekhar yerragandhulaNo ratings yet

- Introduction To Big Data With Apache Spark: Uc BerkeleyDocument43 pagesIntroduction To Big Data With Apache Spark: Uc BerkeleyKarthigai SelvanNo ratings yet

- Map Reduce Tutorial-1Document7 pagesMap Reduce Tutorial-1jefferyleclercNo ratings yet

- DSBDA Manual Assignment 11Document6 pagesDSBDA Manual Assignment 11kartiknikumbh11No ratings yet

- Unit 4-1Document6 pagesUnit 4-1chirag suresh chiruNo ratings yet

- New 77Document1 pageNew 77Ramanjaneyulu KancharlaNo ratings yet

- BDA Lab 5Document6 pagesBDA Lab 5Mohit GangwaniNo ratings yet

- Implementation of K-Means Algorithm Using Different Map-Reduce ParadigmsDocument6 pagesImplementation of K-Means Algorithm Using Different Map-Reduce ParadigmsNarayan LokeNo ratings yet

- Map ReduceDocument18 pagesMap ReduceSoni NsitNo ratings yet

- Unit 4 Iot II ..Document19 pagesUnit 4 Iot II ..Keerthi SadhanaNo ratings yet

- Hadoop KaruneshDocument14 pagesHadoop KaruneshMukul MishraNo ratings yet

- Pyspark Questions & Scenario BasedDocument25 pagesPyspark Questions & Scenario BasedSowjanya VakkalankaNo ratings yet

- Task SparkDocument4 pagesTask SparkAzza A. AzizNo ratings yet

- Bda Expt5 - 60002190056Document5 pagesBda Expt5 - 60002190056krNo ratings yet

- Map ReduceDocument40 pagesMap ReduceHareen ReddyNo ratings yet

- What Is MapReduceDocument6 pagesWhat Is MapReduceSundaram yadavNo ratings yet

- Spark Transformations and ActionsDocument4 pagesSpark Transformations and ActionsjuliatomvaNo ratings yet

- Map ReduceDocument10 pagesMap ReduceVikas SinhaNo ratings yet

- 2011 Hyunjung Park VLDB Map-Reduce, RAMP, Big DataDocument4 pages2011 Hyunjung Park VLDB Map-Reduce, RAMP, Big DataSunil ThaliaNo ratings yet

- Big Data 4 VivekDocument3 pagesBig Data 4 VivekPulkit AhujaNo ratings yet

- Unit Ii Iintroduction To Map ReduceDocument4 pagesUnit Ii Iintroduction To Map ReducekathirdcnNo ratings yet

- System Design and Implementation 5.1 System DesignDocument14 pagesSystem Design and Implementation 5.1 System DesignsararajeeNo ratings yet

- Big Data Analytics (2017 Regulation) : Hadoop Distributed File System (HDFS)Document7 pagesBig Data Analytics (2017 Regulation) : Hadoop Distributed File System (HDFS)cskinitNo ratings yet

- Map Reduce AlgorithmDocument2 pagesMap Reduce AlgorithmjefferyleclercNo ratings yet

- Hadoop Interview Questions FaqDocument14 pagesHadoop Interview Questions FaqmihirhotaNo ratings yet

- 1 Purpose: Single Node Setup Cluster SetupDocument1 page1 Purpose: Single Node Setup Cluster Setupp001No ratings yet

- Basics of HadoopDocument19 pagesBasics of HadoopvenkataNo ratings yet

- Chapter 4 - Understanding Map Reduce FundamentalsDocument45 pagesChapter 4 - Understanding Map Reduce FundamentalsWEGENE ARGOWNo ratings yet

- Hadoop ArchitectureDocument8 pagesHadoop Architecturegnikithaspandanasridurga3112No ratings yet

- 621 RcmdsFromClassDocument17 pages621 RcmdsFromClassFaizan SiddiquiNo ratings yet

- YARNDocument52 pagesYARNrajaramduttaNo ratings yet

- Master The Mapreduce Computational Engine in This In-Depth Course!Document2 pagesMaster The Mapreduce Computational Engine in This In-Depth Course!Sumit KNo ratings yet

- Friends Around The World While Playing Games Online? As of This Year, There Are 300 MillionDocument3 pagesFriends Around The World While Playing Games Online? As of This Year, There Are 300 MillionSlodineNo ratings yet

- Best Practice #2 - Choose MQTT As Your IIoT Messaging ProtocolDocument2 pagesBest Practice #2 - Choose MQTT As Your IIoT Messaging ProtocolAbilio JuniorNo ratings yet

- ITCC in Riyadh Residential Complex J10-13300 16787-1 MATV System SystemDocument9 pagesITCC in Riyadh Residential Complex J10-13300 16787-1 MATV System SystemuddinnadeemNo ratings yet

- KM Overview by Joe Hessmiller of CAIDocument38 pagesKM Overview by Joe Hessmiller of CAIhessmijNo ratings yet

- Avtron Manual LinkDocument3 pagesAvtron Manual LinkFranklin Abel Sonco CruzNo ratings yet

- Annapolis Micro Systems, Inc. WILDSTAR™ 5 For PCI-Express: Virtex 5 Based Processor BoardDocument4 pagesAnnapolis Micro Systems, Inc. WILDSTAR™ 5 For PCI-Express: Virtex 5 Based Processor BoardRon HuebnerNo ratings yet

- 7897 BDocument368 pages7897 BfroxplusNo ratings yet

- Assumptions and DependenciesDocument3 pagesAssumptions and Dependencieshardik_ranupraNo ratings yet

- Survey MonkeyDocument5 pagesSurvey MonkeyTiana LowNo ratings yet

- Msbte QB Ste (22518)Document9 pagesMsbte QB Ste (22518)Jay BhakharNo ratings yet

- Serial Communications: ObjectivesDocument26 pagesSerial Communications: ObjectivesAmy OliverNo ratings yet

- Tranceiver Product CatalogDocument48 pagesTranceiver Product CatalogAT'on 張伊源No ratings yet

- Khurshid CVDocument3 pagesKhurshid CVtradingview2406No ratings yet

- Resume CV AWDocument1 pageResume CV AWAdriano WendlerNo ratings yet

- Arria 10 HPS External Memory Interface Guidelines: Quartus Prime Software v17.0Document13 pagesArria 10 HPS External Memory Interface Guidelines: Quartus Prime Software v17.0Bandesh KumarNo ratings yet

- 10 Deadly Sins in Incident HandlingDocument8 pages10 Deadly Sins in Incident Handlingdanielfiguero5293100% (1)

- About The Joint Industry Project READI and Main Achievements, Erik Østby and Paal Frode LarsenDocument12 pagesAbout The Joint Industry Project READI and Main Achievements, Erik Østby and Paal Frode LarsenSriramNo ratings yet

- PrestaShop Developer Guide PDFDocument36 pagesPrestaShop Developer Guide PDFandrei_bertea7771No ratings yet

- TN Installation Mov11Document10 pagesTN Installation Mov11khaldoun samiNo ratings yet

- Security PDFDocument251 pagesSecurity PDFEdison Caiza0% (1)

- Mcafee Epolicy Orchestrator 5.10.0 Product Guide 1-31-2023Document483 pagesMcafee Epolicy Orchestrator 5.10.0 Product Guide 1-31-2023Eric CastilloNo ratings yet

- Program Book SIET 2019Document21 pagesProgram Book SIET 2019Dian ParamithaNo ratings yet

- INFO - ImportanteDocument1 pageINFO - ImportanteDio FubyNo ratings yet

- Exam 98-367:: Security FundamentalsDocument2 pagesExam 98-367:: Security FundamentalsKIERYE100% (1)