Journal of Educational Evaluation for Health Professions

eISSN: 1975-5937

Open Access

Review

J Educ Eval Health Prof 2021;18:17 • https://doi.org/10.3352/jeehp.2021.18.17

Sample size determination and power analysis using the G*Power software

Hyun Kang*

Department of Anesthesiology and Pain Medicine, Chung-Ang University College of Medicine, Seoul, Korea

Appropriate sample size calculation and power analysis have become major issues in research and publication processes. However, the complexity and difficulty of calculating sample size and power require broad statistical knowledge, there is a shortage of personnel with programming skills, and commercial programs are often too expensive to use in practice. The review article aimed to explain the basic concepts of sample size calculation and power analysis; the process of sample estimation; and how to calculate sample size using G*Power software (latest ver. 3.1.9.7; Heinrich-Heine-Universität Düsseldorf, Düsseldorf, Germany) with 5 statistical examples. The null and alternative hypothesis, effect size, power, alpha, type I error, and type II error should be described when calculating the sample size or power. G*Power is recommended for sample size and power calculations for various statistical methods (F, t, χ², z, and exact tests), because it is easy to use and free. The process of sample estimation consists of establishing research goals and hypotheses, choosing appropriate statistical tests, choosing one of 5 possible power analysis methods, inputting the required variables for analysis, and selecting the “calculate” button. The G*Power software supports sample size and power calculation for various statistical methods (F, t, χ², z, and exact tests). This software is helpful for researchers to estimate the sample size and to conduct power analysis.

Keywords: Biometry; Correlation of data; Research personnel; Sample size; Software

Introduction population, making it very important to determine the appropri-

ate sample size to answer the research question [1,2]. However,

Background/rationale the sample size is often arbitrarily chosen or reflects limits of re-

If research can be conducted among the entire population of source allocation. However, this method of determination is of-

interest, the researchers would obtain more accurate findings. ten not scientific, logical, economical, or even ethical.

However, in most cases, conducting a study of the entire popula- From a scientific viewpoint, research should provide an accu-

tion is impractical, if not impossible, and would be inefficient. In rate estimate of the therapeutic effect, which may lead to evi-

some cases, it is more accurate to conduct a study of appropriate- dence-based decisions or judgments. Studies with inappropriate

ly selected samples than to conduct a study of the entire popula- sample sizes or powers do not provide accurate estimates and

tion. Therefore, researchers use various methods to select sam- therefore report inappropriate information on the treatment ef-

ples representing the entire population, to analyze the data from fect, making evidence-based decisions or judgments difficult. If

the selected samples, and to estimate the parameters of the entire the sample size is too small, even if a large therapeutic effect is

observed, the possibility that it could be caused by random varia-

tions cannot be excluded. In contrast, if the sample size is too

*Corresponding email: roman00@cau.ac.kr

large, too many variables—beyond those that researchers want

Editor: Sun Huh, Hallym University, Korea

Received: June 27, 2021; Accepted: July 12, 2021 to evaluate in the study—may become statistically significant.

Published: July 30, 2021 Some variables may show a statistically significant difference,

This article is available from: http://jeehp.org even if the difference is not meaningful. Thus, it may be difficult

2021 Korea Health Personnel Licensing Examination Institute

This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in

any medium, provided the original work is properly cited.

www.jeehp.org (page number not for citation purposes) 1

J Educ Eval Health Prof 2021;18:17 • https://doi.org/10.3352/jeehp.2021.18.17

to determine which variables are valid. Null and alternative hypotheses

From an economic point of view, studies with too large a sam- A hypothesis is a testable statement of what researchers predict

ple size may lead to a waste of time, money, effort, and resources, will be the outcome of a trial. There are 2 basic types of hypothe-

especially if availability is limited. Studies with too small a sample ses: the null hypothesis and the alternative hypothesis. H0: The

size provide low power or imprecise estimates; therefore, they null hypothesis is a statement that there is no difference between

cannot answer the research questions, which also leads to a waste groups in terms of a mean or proportion. H1: The alternative hy-

of time, money, effort, and resources. For this reason, considering pothesis is contradictory to the null hypothesis.

limited resources and budget, sample size calculation and power

analysis may require a trade-off between cost-effectiveness and Effect size

power [3,4]. The effect size shows the difference or strength of relation-

From an ethical point of view, studies with too large a sample ships. It also represents a minimal clinically meaningful differ-

size cause the research subjects to waste their effort and time, ence [12]. As the size, distribution, and units of the effect size

and may also expose the research subjects to more risks and in- vary between studies, standardization of the effect size is usually

conveniences. performed for sample size calculation and power analysis.

Considering these scientific, economic, and ethical aspects, The choice of the effect size may vary depending on the study

sample size calculation is critical for research to have adequate design, outcome measurement method, and statistical method

power to show clinically meaningful differences. Some investiga- used. Of the many different suggested effect sizes, the G*Power

tors believe that underpowered research is unethical, except for software automatically provides the conventional effect size val-

small trials of interventions for rare diseases and early phase trials ues suggested by Cohen by moving the cursor onto the blank re-

in the development of drugs or devices [5]. gion of “effect size” in the “input parameters” field [12].

Although there have been debates on sample size calculation

and power analysis [3,4,6], the need for an appropriate sample Power, alpha, type I error, and type II error

size calculation has become a major trend in research [1,2,7-11]. A type I error, or false positive, is the error of rejecting a null

hypothesis when it is true, and a type II error, or false negative, is

Objectives the error of accepting a null hypothesis when the alternative hy-

This study aimed to explain the basic concepts of sample size pothesis is true.

calculation and power analysis; the process of sample estimation; Intuitively, type I errors occur when a statistically significant

and how to calculate sample size using the G*Power software difference is observed, despite there being no difference in reali-

(latest ver. 3.1.9.7; Heinrich-Heine-Universität Düsseldorf, Düs- ty, and type II errors occur when a statistically significant differ-

seldorf, Germany; http://www.gpower.hhu.de/) with 5 statistical ence is not observed, even when there is truly a difference (Ta-

examples. ble 1). In Table 1, the significance level (α) represents the maxi-

mum allowable limit of type I error, and the power represents

Basic concept: what to know before the minimum allowable limit of accepting the alternative hy-

performing sample size calculation and pothesis when the alternative hypothesis is true.

power analysis If the results of a statistical analysis are non-significant, there

are 2 possibilities for the non-significant results: (1) correctly ac-

In general, the sample size calculation and power analysis are cepting the null hypothesis when the null hypothesis is true and

determined by the following factors: effect size, power (1-β), sig- (2) erroneously accepting the null hypothesis when the alterna-

nificance level (α), and type of statistical analysis [1,7]. The In- tive hypothesis is true. The latter occurs when the research meth-

ternational Committee of Medical Journal Editors recommends od does not have enough power. If the power of the study is not

that authors describe statistical methods with sufficient detail to

enable a knowledgeable reader with access to the original data to

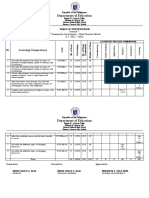

Table 1. Types of statistical error and power and confidence levels

verify the reported results [12], and the same principle should be

Decision

followed for the description of sample size calculation or power Null hypothesis

Accept Ho Reject Ho

analysis. Thus, the following factors should be described when

Ho is true Correct (confidence level, 1-α) Type I error (α)

calculating the sample size or power. Ho is false Type II error (β) Correct (power, 1-β)

H0, null hypothesis.

www.jeehp.org (page number not for citation purposes) 2

J Educ Eval Health Prof 2021;18:17 • https://doi.org/10.3352/jeehp.2021.18.17

known, it is not possible to interpret whether the negative results “statistical test” drop-down menu. This can also be carried out by

are due to possibility (1) or possibility (2). Thus, it is important selecting the variable (correlation and regression, means, propor-

to consider power when planning studies. tions, variance, and generic) and the study design for which sta-

tistical tests are performed from the test menu located at the top

Process of sample size calculation and of the screen and sub-menu.

power analysis

Third, choose 1 of 5 possible power analysis methods

In many cases, the process of sample size calculation and pow- This choice can be made by considering the variables to be cal-

er analysis is too complex and difficult for common programs to culated and the given variables. Researchers can select 1 of the 5

be feasible. To calculate sample size or perform power analysis, following types in the “type of power analysis” drop-down menu

some programs require a broad knowledge of statistics and/or (Table 2).

software programming, and other commercial programs are too An a priori analysis is a sample size calculation performed be-

expensive to use in practice. fore conducting the study and before the design and planning

To avoid the need for extensive knowledge of statistics and stage of the study; thus, it is used to calculate the sample size N,

software programming, herein, we demonstrate the process of which is necessary to determine the effect size, desired α level,

sample size and power calculation using the G*Power software, and power level (1-β). As an a priori analysis provides a method

which has a graphical user interface (GUI). The G*Power soft- for controlling type I and II errors to prove the hypothesis, it is

ware is easy to use for calculating sample size and power for vari- an ideal method of sample size and power calculation.

ous statistical methods (F, t, χ2, Z, and exact tests), and can be In contrast, a post-hoc analysis is typically conducted after the

downloaded for free at www.psycho.uni-duesseldorf.de/abteilun- completion of the study. As the sample size N is given, the power

gen/aap/gpower3. G*Power also provides effect size calculators level (1-β) is calculated using the given N, the effect size, and the

and graphics options. Sample size and power calculations using desired α level. Post-hoc power analysis is a less ideal type of sam-

G*Power are generally performed in the following order. ple size and power calculation than a priori analysis as it only

controls α, and not β. Post-hoc power analysis is criticized be-

First, establish the research goals and hypotheses cause the type II error calculated using the results of negative

The research goals and hypotheses should be elucidated. The clinical trials is always high, which sometimes leads to incorrect

null and alternative hypotheses should be presented, as discussed conclusions regarding power [13,14]. Thus, post-hoc power

above. analysis should be cautiously used for the critical evaluation of

studies with large type II errors.

Second, choose appropriate statistical tests

G*Power software provides statistical methods in these 2 ways. Fourth, input the required variables for analysis and select

the “calculate” button

Distribution-based approach In the “input parameters” area of the main window of G*Pow-

Investigators can select the distribution-based approach (exact, er, the required variables for analysis can be entered. If informa-

F, t, χ2, and z tests) using the “test family” drop-down menu. tion is available to calculate the effect size from a pilot study or a

previous study, the effect size calculator window can be opened

Design-based approach by checking the “determine→” button, and the effect size can be

Investigators can select the design-based approach using the calculated using this information.

Table 2. Power analysis methods

Type Independent variable Dependent variable

1. A priori Power (1-β), significance level (α), and effect size N

2. Compromise Effect size, N, q= β/α Power (1-β), significance level (α)

3. Criterion Power (1-β), effect size, N Significance level (α), criterion

4. Post-hoc Significance level (α), effect size, N Power (1-β)

5. Sensitivity Significance level (α), power (1-β), N Effect size

N, sample size; q=β/α, error probability ratio, which indicates the relative proportionality or disproportionality of the 2 values.

www.jeehp.org (page number not for citation purposes) 3
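To make the relationship between the analysis types in Table 2 concrete, the following minimal Python sketch treats 4 of them (a priori, post hoc, sensitivity, and criterion) as the same relation solved for a different unknown. It is an illustration only, under stated assumptions: it uses a normal approximation for a 2-tailed comparison of 2 equal-sized independent groups, with the standardized effect size d as input. The function names are hypothetical, and this is not the algorithm G*Power uses (G*Power relies on exact or noncentral distributions), so its results can differ slightly from G*Power output. The compromise analysis is omitted.

```python
from math import ceil, sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal distribution (mean 0, SD 1)


def post_hoc_power(d, n_per_group, alpha):
    """Post hoc: given alpha, effect size d, and n per group, return approximate power.

    Normal approximation; the negligible contribution of the opposite tail is ignored.
    """
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    return STD_NORMAL.cdf(d * sqrt(n_per_group / 2) - z_crit)


def a_priori_n(d, alpha, power):
    """A priori: given alpha, power, and effect size d, return approximate n per group."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    z_power = STD_NORMAL.inv_cdf(power)
    return ceil(2 * ((z_crit + z_power) / d) ** 2)


def sensitivity_effect_size(n_per_group, alpha, power):
    """Sensitivity: given alpha, power, and n per group, return the detectable effect size d."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    z_power = STD_NORMAL.inv_cdf(power)
    return (z_crit + z_power) / sqrt(n_per_group / 2)


def criterion_alpha(d, n_per_group, power):
    """Criterion: given power, effect size d, and n per group, return the implied 2-tailed alpha."""
    z_power = STD_NORMAL.inv_cdf(power)
    z_crit = d * sqrt(n_per_group / 2) - z_power
    return 2 * (1 - STD_NORMAL.cdf(z_crit))


# Illustrative inputs (assumed values): medium effect size, alpha = 0.05, power = 0.8
n = a_priori_n(d=0.5, alpha=0.05, power=0.8)
print("a priori n per group (approx.):", n)
print("post hoc power at that n:", round(post_hoc_power(0.5, n, 0.05), 4))
print("sensitivity (detectable d):", round(sensitivity_effect_size(n, 0.05, 0.8), 4))
print("criterion (implied alpha):", round(criterion_alpha(0.5, n, 0.8), 4))
```

Feeding the a priori result back into the other 3 functions returns values consistent with the original inputs, which is the sense in which the rows of Table 2 differ only in which quantity is treated as unknown.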

Five statistical examples of using G*Power

G*Power shows the following menu bars at the top of the main window when the program starts up: “file,” “edit,” “view,” “tests,” “calculator,” and “help” (Fig. 1A). Under these menu bars, there is another row of tabs, namely, “central and noncentral distributions” and “protocol of power analyses.” The “central and noncentral distributions” tab shows the distribution plot of null and alternative hypotheses and α and β values (Fig. 1B). Moreover, clicking the “protocol of power analyses” tab shows the results of the calculation, including the name of the test, type of power analysis, and input and output parameters, which can be cleared, saved, and printed using the “clear,” “save,” and “print” buttons, respectively, which are located at the right side of the results (Fig. 1C).

In the middle of the main screen, drop-down menus named “test family,” “statistical test,” and “type of power analysis” are located, where the appropriate statistical test and type of power analysis can be selected.

In the lower part, in the “input parameters” field, information regarding the sample size calculation or power analysis can be entered, and in the “output parameters” field, the results for sample size calculation or power analysis will appear. On the left side of the “input parameters” field, there is a “determine→” button. Clicking the “determine→” button will lead to the effect size calculator window, where the effect size can be calculated by inputting information (Fig. 1D).

In the lowest part of the main screen, there are 2 buttons named “X-Y plot for a range of values” and “calculate.” Clicking the “X-Y plot for a range of values” button leads to the plot window, where the graphs or tables for the α error probability, power (1-β error probability), effect size, or total sample size can be obtained (Fig. 1E). Clicking the “calculate” button enables the calculation of the sample size or power.

Examples 1. Two-sample or independent t-test: t-test

The 2-sample t-test (also known as the independent t-test or Student t-test) is a statistical test that compares the mean values of 2 independent samples. The null hypothesis is that the difference in group means is 0, and the alternative hypothesis is that the difference in group means is different from 0.

H0: μ1-μ2 = 0
H1: μ1-μ2 ≠ 0
H0: null hypothesis
H1: alternative hypothesis
μ1, μ2: means of each sample

Example: a priori

Let us assume that researchers are planning a study to investigate the analgesic efficacy of 2 drugs. Drug A has traditionally been used for postoperative pain control and drug B is a newly developed drug. In order to compare the efficacy of drug B with that of drug A, a pain score using a Visual Analog Scale (VAS) will be measured at 6 hours postoperation. The researchers want to determine the sample size for the null hypothesis to be rejected with a 2-tailed test, α = 0.05, and β = 0.2. The number of patients in each group is equal.

When the effect size is determined: If the effect size to be found is determined, the procedure for calculating the sample size is very easy. For the sample size calculation of a statistical test, G*Power provides the effect size conventions as “small,” “medium,” and “large,” based on Cohen’s suggestions [12]. These provide conventional effect size values that are different for different tests.

Moving the cursor onto the blank of “effect size” in the “input parameters” field will show the conventional effect size values suggested by G*Power. For the sample size calculation of the t-test, G*Power software provides the conventional effect size values of 0.2, 0.5, and 0.8 for small, medium, and large effect sizes, respectively. In this case, we attempted to calculate the sample size using a medium effect size (0.5).

After opening G*Power, go to “tests > means > two independent groups.” Then, the main window shown in Fig. 1A appears. Here, as the sample size is calculated before conducting the study, set “type of power analysis” as “A priori: compute required sample size - given α, power, and effect size.” Since the researchers decided to use a medium effect size, 2-sided testing, α = 0.05, β = 0.2, and an equal sample size in both groups, select “two” for the “tail(s)” drop-down menu and input 0.5 for the blank of “effect size d,” 0.05 for the blank of “α err prob,” 0.8 for the blank of “power (1-β err prob),” and 1 for the blank of “allocation ratio N2/N1.” Upon pushing the “calculate” button, the sample size for group 1, the sample size for group 2, and the total sample size will be computed as 64, 64, and 128, respectively, as shown in the output parameters of the main window (Fig. 1B).

Upon clicking on the “protocol of power analyses” tab in the upper part of the window, the input and output of the power calculation can be automatically obtained. The results can be cleared, saved, and printed using the “clear,” “save,” and “print” buttons, respectively (Fig. 1C).

When the effect size is not determined: Next, let us consider the case in which the effect size is not determined in a study design similar to that described above. In fact, the effect size is not determined on many occasions and it is debatable whether a determined effect size can be applied when designing a study. Thus, when the effect size value is arbitrarily determined, we should provide a logical rationale for the arbitrarily selected effect size. When the effect size is not determined, the effect size value can only be assumed using the variables from other previous studies, pilot studies, or experiences.

In this example, as there was no previous study comparing the efficacy of drug A to that of drug B, we will assume that the researchers conducted a pilot study with 10 patients in each group, and the means and standard deviations (SDs) of the VAS for drug A and B were 7 ± 3 and 5 ± 2, respectively.

In the main window, push the “determine” button; then, the effect size calculator window will appear (Fig. 1D). Here, there are 2 options: “n1 != n2” and “n1 = n2.” Because we assume an equal sample size for 2 groups, we check the “n1 = n2” box.

Since the pilot study showed that the mean and SD of the VAS for drug A and drug B were 7 ± 3 and 5 ± 2, respectively, input 7 for “mean group 1,” 3 for “SD σ group 1,” 5 for “mean group 2,” and 2 for “SD σ group 2,” and then click the “calculate and transfer to main window” button.

Then, the corresponding effect size “0.7844645” will be automatically calculated and appear in the blank space for “effect size d” in the main window (Fig. 1F).

As described above, we decided to use 2-sided testing, α = 0.05, β = 0.2, and an equal sample size in both groups. We selected “two” in the “tail(s)” drop-down menu and entered 0.05 for “α err prob,” 0.8 for “power (1-β err prob),” and 1 for “allocation ratio N2/N1.” Clicking the “calculate” button computes “sample size group 1,” “sample size group 2,” and “total sample size” as 27, 27, and 54, respectively, in the output parameters area (Fig. 1G).

Example: post hoc

A clinical trial comparing the efficacy of 2 analgesics, drugs A and B, was conducted. In this study, 30 patients were enrolled in each group, and the means and SDs of the VAS for drugs A and B were 7 ± 3 and 5 ± 2, respectively. The researchers wanted to determine the power using a 2-tailed test and α = 0.05.

After opening G*Power, go to “tests > means > two independent groups.” In the main window, select “type of power analysis” as “post hoc: compute achieved power - given α, sample size and effect size,” and then push the “determine” button. In the effect size calculator window, since a clinical trial was conducted with equal group sizes, showing that the means and SDs of the VAS for drug A and B were 7 ± 3 and 5 ± 2, respectively, check the “n1 = n2” box and input 7 for “mean group 1,” 3 for “SD σ group 1,” 5 for “mean group 2,” and 2 for “SD σ group 2,” and then click the “calculate and transfer to main window” button. Then, the corresponding effect size “0.7844645” will be calculated automatically and appear in the blank space for “effect size d” in the main window (Fig. 1H). Because we decided to use 2-sided testing, α = 0.05, and an equal sample size (n = 30) in both groups, we selected “two” in the “tail(s)” drop-down menu, and entered 0.05 for “α err prob,” 30 for “sample size group 1,” and 30 for “sample size group 2.” Pushing the “calculate” button will compute “power (1-β err prob)” as 0.8479274.

Fig. 1. Using the G*Power software for the 2-independent sample t-test. (A) Main window before a priori sample size calculation using an effect size, (B) main window after a priori sample size calculation using an effect size, (C) main window showing the protocol for power analyses, (D) effect size calculator window, (E) plot window, (F) main window before a priori sample size calculation not using an effect size, (G) main window after a priori sample size calculation not using an effect size, and (H) main window before post-hoc power analysis.

Examples 2. Dependent t-test: t-test

The dependent t-test (paired t-test) is a statistical test that compares the means of 2 dependent samples. The null hypothesis is that the difference between the means of dependent groups is 0, and the alternative hypothesis is that the difference between the means of dependent groups is not equal to 0.

H0: μ1-μ2 = 0
H1: μ1-μ2 ≠ 0
H0: null hypothesis
H1: alternative hypothesis
μ1, μ2: means of each sample from dependent groups

Example: a priori

Imagine a study examining the effect of a diet program on weight loss. In this study, the researchers plan to enroll participants, weigh them, enroll them in a diet program, and weigh them again. The researchers want to determine the sample size for the null hypothesis to be rejected with a 2-tailed test, α = 0.05, and β = 0.2.

When the effect size is determined: After opening G*Power, go to “tests > means > two dependent groups (matched pairs).” In the main window, set “type of power analysis” as “a priori: compute required sample size - given α, power, and effect size” (Fig. 2A). Since the researchers decided to use 2-sided testing, α = 0.05, and β = 0.2, select “two” for the “tail(s)” drop-down menu, and input 0.05 for the blank of “α err prob” and 0.8 for the blank of “power (1-β err prob).” Unlike the case for the independent t-test, the same participants are measured twice in the paired t-test, so the blank for “allocation ratio N2/N1” is not provided. In this case, calculate the sample size using a small effect size (0.2); thus, input 0.2 for “effect size dz.” Upon pushing the “calculate” button, the total sample size will be computed as 199 in the output parameter area (Fig. 2B).
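As a rough cross-check of the numbers in the t-test examples above, the following minimal Python sketch recomputes the effect size d from the pilot data (7 ± 3 vs. 5 ± 2, equal group sizes) and approximates the a priori sample sizes. The function names are hypothetical, and the sample size formulas are an assumption of this sketch rather than G*Power's method: they use a normal approximation plus a commonly used small-sample correction term (z²/4 per group for 2 independent groups, z²/2 for paired data), whereas G*Power computes power from the noncentral t distribution. For the inputs used in this article the approximation happens to reproduce the G*Power results, but agreement is not guaranteed for other inputs.

```python
from math import ceil, sqrt
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal distribution


def cohens_d_equal_n(mean1, sd1, mean2, sd2):
    """Cohen's d for 2 equal-sized groups: mean difference divided by
    the square root of the average of the 2 variances."""
    return abs(mean1 - mean2) / sqrt((sd1 ** 2 + sd2 ** 2) / 2)


def approx_n_per_group(d, alpha=0.05, power=0.8):
    """Approximate n per group for a 2-tailed independent t-test (assumed formula:
    normal approximation plus a z^2/4 correction; not G*Power's algorithm)."""
    z_alpha = STD_NORMAL.inv_cdf(1 - alpha / 2)
    z_power = STD_NORMAL.inv_cdf(power)
    return ceil(2 * ((z_alpha + z_power) / d) ** 2 + z_alpha ** 2 / 4)


def approx_n_paired(dz, alpha=0.05, power=0.8):
    """Approximate number of pairs for a 2-tailed paired t-test (assumed formula:
    normal approximation plus a z^2/2 correction; not G*Power's algorithm)."""
    z_alpha = STD_NORMAL.inv_cdf(1 - alpha / 2)
    z_power = STD_NORMAL.inv_cdf(power)
    return ceil(((z_alpha + z_power) / dz) ** 2 + z_alpha ** 2 / 2)


d_pilot = cohens_d_equal_n(7, 3, 5, 2)
print(round(d_pilot, 7))            # 0.7844645
print(approx_n_per_group(0.5))      # 64 per group (medium effect size)
print(approx_n_per_group(d_pilot))  # 27 per group (pilot-based effect size)
print(approx_n_paired(0.2))         # 199 pairs (small effect size dz)
```

The effect size line shows why the calculator returns 0.7844645: the mean difference of 2 is divided by √((3² + 2²)/2) ≈ 2.55.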

Fig. 2. Using the G*Power software for the dependent t-test. (A) Main window before a priori sample size calculation using an effect size, (B) main window after a priori sample size calculation using an effect size, and (C) effect size calculator window.

When the effect size is not determined: Suppose the researchers conducted a pilot study to calculate the sample size for the study investigating the effect of the diet program on weight loss. In this pilot study, the mean and SD before and after the diet program were 66 ± 12 kg and 62 ± 11 kg, respectively, and the correlation between the weights before and after the diet program was 0.7.

Click the “determine” button in the main window and then check the “from group parameters” box from the 2 options “from differences” and “from group parameters” in the effect size calculator window, as the group parameters are known.

Since the pilot study showed that the means and SDs of the results before and after the diet program were 66 ± 12 kg and 62 ± 11 kg, respectively, and the correlation between the results before and after the diet program was 0.7, input 66 for “mean group 1,” 62 for “mean group 2,” 12 for “SD group 1,” 11 for “SD group 2,” and 0.7 for “correlation between groups,” and then click the “calculate and transfer to main window” button (Fig. 2C). Then, the corresponding effect size “0.4466556” will be calculated automatically and appear in the blank space for “effect size dz” in the main window and in the effect size calculator window. In the main window, as we decided to use 2-sided testing, α = 0.05, and β = 0.2, we will select “two” in the “tail(s)” drop-down menu, and input 0.05 for “α err prob” and 0.8 for “power (1-β err prob).” Pushing the “calculate” button will compute the “total sample size” as 42.

Example: post hoc

Assume that a clinical trial comparing participants’ weight before and after the diet program was conducted. In this study, 100 patients were enrolled, the means and SDs before and after the diet program were 66 ± 12 kg and 62 ± 11 kg, respectively, and the correlation between the weights before and after the diet program was 0.7. The researchers wanted to determine the power for 2-tailed testing and α = 0.05.

After opening G*Power, go to “tests > means > two dependent groups (matched pairs).” In the main window, select “type of power analysis” as “post hoc: compute achieved power - given α, sample size and effect size,” and then push the “determine” button. In the effect size calculator window, select the “from group parameters” check box from the 2 options “from differences” and “from group parameters” as the group parameters are known. In this effect size calculator window, as a clinical trial showed that the means and SDs of the results before and after the diet program were 66 ± 12 kg and 62 ± 11 kg, respectively, and the correlation between the results before and after the diet program was 0.7, input 66 for “mean group 1,” 62 for “mean group 2,” 12 for “SD group 1,” 11 for “SD group 2,” and 0.7 for “correlation between groups,” and then click the “calculate and transfer to main window” button. Then, the corresponding effect size “0.4466556” will be calculated automatically and appear in the blank space for “effect size dz” in the main window and in the effect size calculator window.

In the main window, as we decided to use 2-sided testing and α = 0.05 and the enrolled number of patients was 100, select “two” in the “tail(s)” drop-down menu and input 0.05 for “α err prob” and 100 for “total sample size.” Pushing the “calculate” button will compute “power (1-β err prob)” as 0.9931086.

Examples 3. One-way analysis of variance: F-test

One-way analysis of variance (ANOVA) is a statistical test that compares the means of 3 or more samples. The null hypothesis is that all k means are identical, and the alternative hypothesis is that at least 2 of the k means differ.

H0: μ1 = μ2 = ⋯ = μk
H1: the means are not all equal
H0: null hypothesis
H1: alternative hypothesis
μ1, μ2, ⋯, μk: means of each sample from the independent groups (1, 2, ⋯, k)

The assumptions of the ANOVA test are as follows: (1) independence of observations, (2) normal distribution of dependent variables, and (3) homogeneity of variance.

Example: a priori

Assume that a study will investigate the effects of 4 analgesics: A, B, C, and D. Pain will be measured at 6 hours postoperatively using a VAS. The researchers want to determine the sample size for the null hypothesis to be rejected at α = 0.05 and β = 0.2.

When the effect size is determined: For the ANOVA test, Cohen suggested the effect sizes of “small,” “medium,” and “large” as 0.1, 0.25, and 0.4, respectively [12], and G*Power provides conventional effect size values when the cursor is moved onto the “effect size” in the “input parameters” field. In this case, we calculated the sample size using a medium effect size (0.25).

After opening G*Power, go to “tests > means > many groups: ANOVA: one-way (one independent variable).” In the main window, set “type of power analysis” as “a priori: compute required sample size - given α, power, and effect size.” Since we decided to use a medium effect size, α = 0.05, and β = 0.2, we enter 0.25 for “effect size f,” 0.05 for “α err prob,” and 0.8 for “power (1-β err prob).” Moreover, as we compared 4 analgesics, we input 4 for “number of groups.” Pushing the “calculate” button computes the total sample size as 180, as shown in the “total sample size” in the “output parameters” area.

When the effect size is not determined: Assume that a pilot study showed the following means and SDs (of VAS) at 6 hours postoperation for drugs A, B, C, and D: 2 ± 2, 4 ± 1, 5 ± 1, and 5 ± 2, respectively, in 5 patients for each group.

Push the “determine” button and the effect size calculator window will appear. Here, we can find 2 options: “effect size from means” and “effect size from variance.” Select “effect size from means” in the “select procedure” drop-down menu and select 4 in the “number of groups” drop-down menu.

Here, as G*Power does not provide the common SD, we must calculate this. The formula for the common SD is as follows:

$$
s_{pooled} = \sqrt{\frac{(n_1 - 1)S_1^2 + (n_2 - 1)S_2^2 + (n_3 - 1)S_3^2 + (n_4 - 1)S_4^2}{(n_1 - 1) + (n_2 - 1) + (n_3 - 1) + (n_4 - 1)}}
$$

s_pooled: common SD
S1, S2, S3, S4: SDs in each group
n1, n2, n3, n4: numbers of patients in each group

Using the above formula, we obtain the following:

$$
s_{pooled} = \sqrt{\frac{(5 - 1)2^2 + (5 - 1)1^2 + (5 - 1)1^2 + (5 - 1)2^2}{(5 - 1) + (5 - 1) + (5 - 1) + (5 - 1)}} = \sqrt{\frac{40}{16}} \approx 1.58
$$

Herein, the common SD will be 1.58. Thus, input 1.58 into the blank of “SD σ within each group.” As the means and numbers of patients in each group are 2, 4, 5, and 5 and 5, 5, 5, and 5, respectively, input each value, and then click the “calculate and transfer to main window” button. Then, the corresponding effect size “0.7751550” will be automatically calculated and appear in the blank for “effect size f” in the main window.

Since we decided to use 4 groups, α = 0.05, and β = 0.2, input 4 for “number of groups,” 0.05 for “α err prob,” and 0.8 for “power (1-β err prob).” Pushing the “calculate” button computes the total sample size as 24, as shown in the “total sample size” in the “output parameters” area.

Example: post hoc

Assume that a clinical trial showed the following means and SDs of VAS at 6 hours postoperation in drugs A, B, C, and D: 2 ± 2, 4 ± 1, 5 ± 1, and 5 ± 2, respectively, in 20 patients for each group. The researchers want to determine the power at α = 0.05.

After opening G*Power, go to “tests > means > many groups: ANOVA: one-way (one independent variable).” In the main window, select “type of power analysis” as “post hoc: compute achieved power - given α, sample size and effect size,” and then push the “determine” button to show the effect size calculator window.

Using the above formula, the common SD is 1.58. Thus, input 1.58 into the blank of “SD σ within each group.” As the means

www.jeehp.org (page number not for citation purposes) 8

J Educ Eval Health Prof 2021;18:17 • https://doi.org/10.3352/jeehp.2021.18.17

and numbers of patients in each group are 2, 4, 5, and 5, and 20, of determination.

20, 20, and 20, respectively, input these values into the corre-

sponding blank; next, click the “calculate and transfer to main Example: a priori

window” button. Consider a hypothetical study investigating the correlation be-

Then, the corresponding effect size “0.7751550” and the total tween height and weight in pediatric patients. The researchers

number of patients will be calculated automatically and appear in wanted to determine the sample size for the null hypothesis to be

the blanks for “effect size f” and “total sample size” on the main rejected with 2-tailed testing, α = 0.05, and β = 0.2. In the pilot

screen. study, the Pearson correlation coefficient for the sample was 0.5.

Since the clinical trial used 4 groups and we decided to use After opening G*Power, go to “test > correlation and regres-

α = 0.05, input 4 for “number of groups” and 0.05 for “α err prob.” sion > correlation: bivariate normal model.” In the main screen,

Pushing the “calculate” button will compute the power as set “type of power analysis” as “a priori: compute required sam-

0.9999856 at “power (1-β err prob)” in the “output parameters” ple size-given α, power, and effect size.” Because we decided to

area. use 2-sided testing, α = 0.05, and β = 0.2, the Pearson correlation

coefficient in the null hypothesis is 0, and the Pearson correla-

Examples 4. Correlation–Pearson r tion coefficient in the sample was 0.7, in the pilot study, select

A correlation is a statistic that measures the relationship be- “two” for the “tail(s)” drop-down menu, and input 0.5 for “cor-

tween 2 continuous variables. The Pearson correlation coeffi- relation ρ H1,” 0.05 for “α err prob,” 0.8 for “power (1-β err

cient is a statistic that shows the strength of the relationship be- prob),” and 0 for “correlation ρ H1.” Upon pushing the “calcu-

tween 2 continuous variables. The Greek letter ρ (rho) represents late” button, the total sample size will be computed as 29 in the

the Pearson correlation coefficient in a population, and r rep- “output parameters” area.

resents the Pearson correlation coefficient in a sample. The null G*Power also provides an option to calculate the sample size

hypothesis is that the Pearson correlation coefficient in the pop- using the coefficient of determination. If the coefficient of deter-

ulation is 0, and the alternative hypothesis is that the Pearson mination is known, push the “determine” button in the main

correlation coefficient in the population is not equal to 0. window, input the value into the blank of “coefficient of determi-

nation ρ2,” and then click the “calculate and transfer to main win-

H0: ρ = 0 dow” button. Finally, push the “calculate” button to compute the

H1: ρ≠0 total sample size.

H0: null hypothesis

H1: alternative hypothesis Example: post hoc

ρ: Pearson correlation coefficient in the population Assume that a clinical trial investigated the correlation between

height and weight in pediatric patients. In this study, 50 pediatric

The Pearson correlation coefficient in the population (ρ, rho) patients were enrolled, and the sample Pearson correlation coef-

ranges from −1 to 1, where −1 represents a perfect negative linear ficient was 0.5. The researchers wanted to determine the power

correlation, 0 represents no linear correlation, and 1 represents a at the 2-tailed and α = 0.05 levels.

perfect positive linear correlation. The coefficient of determina- After opening G*Power, go to “test > correlation and regres-

tion (ρ2) is calculated by squaring the Pearson correlation coeffi- sion > correlation: bivariate normal model.” In the main screen,

cient in the population (ρ) and is interpreted as “the percent of select “type of power analysis” as “post hoc: compute achieved

variation in one continuous variable explained by the other con- power-given α, sample size, and effect size.” Then, as the clinical

tinuous variable” [15]. trial showed that the sample Pearson correlation coefficient was

For correlations, Cohen suggested the effect sizes of “small,” 0.5 and the number of enrolled patients was 50, input 0.5 for

“medium,” and “large” as 0.1, 0.3, and 0.5, respectively [12]. “correlation ρ H1,” 0 for “correlation ρ H1,” and 50 for “total sam-

However, G*Power does not provide a sample size calculation ple size.” As the researchers want to determine the power at the

using the effect size for correlations. Therefore, this article does 2-tailed and α = 0.05 levels, select “two” for the tail(s) drop-down

not present a sample size calculation using the effect size. Instead, menu and input 0.05 for “α err prob.”

an example is provided of sample size calculation using the ex- Then, the corresponding power “0.9671566” will be calculated

pected population Pearson correlation coefficient or coefficient automatically and appear at the blank for “power (1-β err prob)”

www.jeehp.org (page number not for citation purposes) 9

J Educ Eval Health Prof 2021;18:17 • https://doi.org/10.3352/jeehp.2021.18.17

in the output parameter area of the main screen. N2/N1,” and 0.3 and 0.1 for “proportion p2” and “proportion

p1,” respectively.

Example 5. Two independent proportions: chi-square test Pushing the “calculate” button will compute “sample size group

The chi-square test, also known as the χ2 test, is used to com- 1,” “sample size group 2,” and “total sample size” as 62, 62, and

pare 2 proportions of independent samples. G*Power provides 124, respectively.

the options to compare 2 proportions of independent samples,

namely “two independent groups: inequality, McNemar test,” Example: post hoc

“two independent groups: inequality, Fisher’s exact test,” “two in- Assume that a clinical trial compared the effect of 2 treatments,

dependent groups: inequality, unconditional exact,” “two inde- A and B, on the incidence of post-herpetic neuralgia. In this

pendent groups: inequality with offset, unconditional exact,” and study, researchers enrolled 101 and 98 patients, respectively, and

“two independent groups: inequality, z-test.” In this article, we in- post-herpetic neuralgia occurred in 31 and 9 patients after treat-

troduce the “two independent groups: inequality, z-test” because ments A and B, respectively. The incidence of post-herpetic neu-

many statistical software programs provide similar options. ralgia in the groups that received treatments A and B was 0.307

The null hypothesis is that the difference in proportions of in- (31/101) and 0.092 (9/98), respectively.

dependent groups is 0, and the alternative hypothesis is that the The researchers wanted to determine the power using 2-tailed

difference in proportions of independent groups is not equal to 0. testing and α = 0.05. After opening G*Power, go to “test > propor-

tions > two independent groups: inequality, z-test.” In the main

H0: π1-π2 = 0 screen, select “type of power analysis” as “post hoc: compute

H1: π1-π2≠0 achieved power-given α, sample size and effect size.” Then, as a

clinical trial showed that the incidence of post-herpetic neuralgia

As G*Power does not provide sample size calculation using the in the groups that received treatments A and B was 0.307 and

effect size for this option, this is not provided in this article; in- 0.092, respectively, and the number of enrolled patients was 101

stead, an example of sample size calculation using the expected and 98, respectively, input 0.307 for “proportion p2,” 0.092 for

proportions of each group is provided. “proportion p1,” 101 for “sample size group 2,” and 98 for “sam-

ple size group 1.”

Example: a priori As researchers wanted to determine the power for 2-tailed test-

Assume that a study examined the effects of 2 treatments, for ing and α = 0.05, select “two” for the tail(s) drop-down menu and

which the measure of the effect is a proportion. Treatment A has input 0.05 for “α err prob.” Pushing the “calculate” button will

been traditionally used for the prevention of post-herpetic neu- compute “power (1-β err prob)” as 0.9715798 in the “output pa-

ralgia, and treatment B is a newly developed treatment. The re- rameter” area of the main window.

searchers wanted to determine the sample size for the null hy-

pothesis to be rejected using 2-tailed testing, α = 0.05, and β = 0.2. Sample size calculation considering the

The number of patients was equal in both groups. Suppose the drop-out rate

researchers conducted a pilot study examining the effects of

treatments A and B. In the pilot study, the proportions of When conducting a study, drop-out of study subjects or

post-herpetic neuralgia development were 0.3 and 0.1, respec- non-compliance to the study protocol is inevitable. Therefore,

tively, for treatments A and B. we should consider the drop-out rate when calculating the sam-

After opening G*Power, go to “test > proportions > two inde- ple size. When calculating the sample size considering the drop-

pendent groups: inequality, z-test.” In the main window, set out rate, the formula for the sample size calculation is as below:

“type of power analysis” as “a priori: compute required sample

size-given α, power, and effect size.” N

Nd =

Because we decided to use 2-sided testing, α = 0.05, and N(1 – d)

β = 0.2, there was an equal sample size in both groups, and the N: sample size before considering drop-out

proportions of post-herpetic neuralgia development were 0.3 d: expected drop-out rate

and 0.1 for treatments A and B, respectively, in the pilot study, se- ND: sample size considering drop-out

lect “two” for the “tail(s)” drop-down menu, and input 0.05 for “α

err prob,” 0.8 for “power (1-β err prob),” 1 for “allocation ratio Let us assume that a sample size for a study was calculated as

www.jeehp.org (page number not for citation purposes) 10

J Educ Eval Health Prof 2021;18:17 • https://doi.org/10.3352/jeehp.2021.18.17

100. If the drop-out rate during the study process is expected to References

be 20% (0.2), the sample size considering drop-out will be 125.

100 1. In J, Kang H, Kim JH, Kim TK, Ahn EJ, Lee DK, Lee S, Park

= 125

(1 – 0.2) JH. Tips for troublesome sample-size calculation. Korean J An-

esthesiol 2020;73:114-120. https://doi.org/10.4097/kja.19497

Conclusion 2. Kang H. Sample size determination for repeated measures de-

sign using G Power software. Anesth Pain Med 2015;10:6-15.

Appropriate sample size calculation and power analysis have https://doi.org/10.17085/apm.2015.10.1.6

become major issues in research and analysis. The G*Power soft- 3. Moher D, Dulberg CS, Wells GA. Statistical power, sample size,

ware supports sample size and power calculation for various sta- and their reporting in randomized controlled trials. JAMA

tistical methods (F, t, χ2, z, and exact tests). G*Power is easy to 1994;272:122-124. https://doi.org/10.1001/jama.1994.0352

use because it has a GUI and is free. This article provides guid- 0020048013

ance on the application of G*Power to calculate sample size and 4. Freiman JA, Chalmers TC, Smith H Jr, Kuebler RR. The im-

power in the design, planning, and analysis stages of a study. portance of beta, the type II error and sample size in the design

and interpretation of the randomized control trial: survey of 71

ORCID “negative” trials. N Engl J Med 1978;299:690-694. https://doi.

org/10.1056/NEJM197809282991304

Hyun Kang: https://orcid.org/0000-0003-2844-5880 5. Halpern SD, Karlawish JH, Berlin JA. The continuing unethical

conduct of underpowered clinical trials. JAMA 2002;288:358-

Authors’ contributions 362. https://doi.org/10.1001/jama.288.3.358

6. Edwards SJ, Lilford RJ, Braunholtz D, Jackson J. Why “under-

Conceptualization, data curation, formal analysis, writing– powered” trials are not necessarily unethical. Lancet

original draft, writing–review & editing: HK. 1997;350:804-807. https://doi.org/10.1016/s0140-6736(97)

02290-3

Conflict of interest 7. Ko MJ, Lim CY. General considerations for sample size estima-

tion in animal study. Korean J Anesthesiol 2021;74:23-29.

No potential conflict of interest relevant to this article was re- https://doi.org/10.4097/kja.20662

ported. 8. Kang H. Statistical messages from ARRIVE 2.0 guidelines. Ko-

rean J Pain 2021;34:1-3. https://doi.org/10.3344/kjp.2021.

Funding 34.1.1

9. Percie du Sert N, Hurst V, Ahluwalia A, Alam S, Avey MT, Baker

None. M, Browne WJ, Clark A, Cuthill IC, Dirnagl U, Emerson M,

Garner P, Holgate ST, Howells DW, Karp NA, Lazic SE, Lidster

Data availability K, MacCallum CJ, Macleod M, Pearl EJ, Petersen OH, Rawle F,

Reynolds P, Rooney K, Sena ES, Silberberg SD, Steckler T,

None. Wurbel H. The ARRIVE guidelines 2.0: updated guidelines for

reporting animal research. PLoS Biol 2020;18:e3000410.

Acknowledgments https://doi.org/10.1371/journal.pbio.3000410

10. Altman DG, Schulz KF, Moher D, Egger M, Davidoff F, El-

None. bourne D, Gotzsche PC. The revised CONSORT statement

for reporting randomized trials: explanation and elaboration.

Supplementary materials Ann Intern Med 2001;134:663-694. https://doi.org/10.7326/

0003-4819-134-8-200104170-00012

Supplement 1. Audio recording of the abstract. 11. Von Elm E, Altman DG, Egger M, Pocock SJ, Gotzsche PC,

Vandenbroucke JP; STROBE Initiative. The Strengthening the

Reporting of Observational Studies in Epidemiology (STROBE)

statement: guidelines for reporting observational studies. PLoS

www.jeehp.org (page number not for citation purposes) 11

J Educ Eval Health Prof 2021;18:17 • https://doi.org/10.3352/jeehp.2021.18.17

Med 2007;4:e296. https://doi.org/10.1371/journal.pmed. doi.org/10.1592/phco.21.5.405.34503

0040296 14. Hoenig JM, Heisey DM. The abuse of power: the pervasive fal-

12. International Committee of Medical Journal Editors. Uniform lacy of power calculations for data analysis. Am Stat 2001;

requirements for manuscripts submitted to biomedical journals. 55:19-24. https://doi.org/10.1198/000313001300339897

JAMA 1997;277:927-934. https://doi.org/10.1001/jama.277. 15. Faul F, Erdfelder E, Buchner A, Lang AG. Statistical power anal-

11.927 yses using G*Power 3.1: tests for correlation and regression

13. Levine M, Ensom MH. Post hoc power analysis: an idea whose analyses. Behav Res Methods 2009;41:1149-1160. https://doi.

time has passed? Pharmacotherapy 2001;21:405-409. https:// org/10.3758/BRM.41.4.1149

www.jeehp.org (page number not for citation purposes) 12
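As a cross-check on Example 3, the common SD and the effect size f that G*Power derives from the pilot means can be reproduced by hand. The following is a minimal Python sketch, not part of G*Power: the function names `pooled_sd` and `cohens_f` are illustrative, and the sketch assumes that f is the SD of the group means around the sample-size-weighted grand mean divided by the within-group SD, which reproduces the value quoted in Example 3.

```python
import math


def pooled_sd(sds, ns):
    """Common SD: sqrt(sum((n_i - 1) * s_i^2) / sum(n_i - 1))."""
    numerator = sum((n - 1) * s ** 2 for s, n in zip(sds, ns))
    denominator = sum(n - 1 for n in ns)
    return math.sqrt(numerator / denominator)


def cohens_f(means, ns, sd_within):
    """Effect size f: SD of the group means divided by the within-group SD."""
    total = sum(ns)
    grand_mean = sum(n * m for m, n in zip(means, ns)) / total
    variance_of_means = sum(n * (m - grand_mean) ** 2 for m, n in zip(means, ns)) / total
    return math.sqrt(variance_of_means) / sd_within


# Pilot data from Example 3: 4 analgesics, 5 patients per group.
means = [2, 4, 5, 5]
sds = [2, 1, 1, 2]
ns = [5, 5, 5, 5]

s = pooled_sd(sds, ns)
# The text enters the rounded value 1.58 into G*Power, so the same is done here.
f = cohens_f(means, ns, round(s, 2))

print(round(s, 2))  # 1.58
print(f)            # close to the 0.7751550 reported by G*Power
```

Because the 4 groups are equal in size, the same common SD is obtained with 20 patients per group, which is why the post hoc example reuses 1.58.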
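For Example 4, a rough hand calculation is possible with the Fisher z-transformation. This is a different method from G*Power's exact calculation under the bivariate normal model, so the results are only expected to be close to the 29 patients and the power of 0.9671566 quoted in Example 4, not identical. The function names below are illustrative.

```python
import math
from statistics import NormalDist

_norm = NormalDist()  # standard normal distribution


def n_for_correlation(r, alpha=0.05, power=0.8):
    """Approximate total n for a 2-tailed test of H0: rho = 0 (Fisher z)."""
    z_alpha = _norm.inv_cdf(1 - alpha / 2)
    z_beta = _norm.inv_cdf(power)
    return math.ceil(((z_alpha + z_beta) / math.atanh(r)) ** 2 + 3)


def power_for_correlation(r, n, alpha=0.05):
    """Approximate 2-tailed power for H0: rho = 0 with n patients (Fisher z)."""
    z_alpha = _norm.inv_cdf(1 - alpha / 2)
    shift = abs(math.atanh(r)) * math.sqrt(n - 3)
    return _norm.cdf(shift - z_alpha) + _norm.cdf(-shift - z_alpha)


# A priori scenario: expected r = 0.5, alpha = 0.05, power = 0.8.
approx_n = n_for_correlation(0.5)
# Post hoc scenario: r = 0.5 observed in 50 patients.
approx_power = power_for_correlation(0.5, 50)

print(approx_n)
print(approx_power)
```

The approximation can differ from the G*Power output by about one patient, so it is best used as a plausibility check and not as a replacement for the exact result.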
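The two calculations in Example 5 can be approximated with the usual normal-approximation formulas for comparing 2 independent proportions. The sketch below assumes a pooled variance under the null hypothesis and unpooled variances under the alternative. Whether G*Power uses exactly these formulas internally is an assumption, although the results agree with the values quoted in Example 5. The function names are illustrative.

```python
import math
from statistics import NormalDist

_norm = NormalDist()  # standard normal distribution


def n_per_group(p1, p2, alpha=0.05, power=0.8):
    """Sample size per group for a 2-tailed z-test with equal allocation."""
    z_alpha = _norm.inv_cdf(1 - alpha / 2)
    z_beta = _norm.inv_cdf(power)
    p_bar = (p1 + p2) / 2
    root_h0 = math.sqrt(2 * p_bar * (1 - p_bar))
    root_h1 = math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    return math.ceil(((z_alpha * root_h0 + z_beta * root_h1) / (p1 - p2)) ** 2)


def power_two_proportions(p1, n1, p2, n2, alpha=0.05):
    """Approximate 2-tailed power of the z-test for observed group sizes."""
    z_alpha = _norm.inv_cdf(1 - alpha / 2)
    p_bar = (n1 * p1 + n2 * p2) / (n1 + n2)
    se_h0 = math.sqrt(p_bar * (1 - p_bar) * (1 / n1 + 1 / n2))
    se_h1 = math.sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    delta = abs(p1 - p2)
    upper = _norm.cdf((delta - z_alpha * se_h0) / se_h1)
    lower = _norm.cdf((-delta - z_alpha * se_h0) / se_h1)
    return upper + lower


# A priori scenario: proportions 0.1 and 0.3, alpha = 0.05, power = 0.8.
size = n_per_group(0.1, 0.3)
# Post hoc scenario: 9/98 (0.092) versus 31/101 (0.307).
achieved = power_two_proportions(0.092, 98, 0.307, 101)

print(size)      # 62 per group, 124 in total
print(achieved)  # approximately 0.97
```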
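The drop-out adjustment is a single division, but rounding up to a whole number of patients is easy to get wrong with floating-point arithmetic. A minimal sketch follows; the function name `adjust_for_dropout` is illustrative, and exact fractions are used so that a result such as 125 is never rounded up to 126 by accident.

```python
import math
from fractions import Fraction


def adjust_for_dropout(n, dropout_rate):
    """Return N_d = N / (1 - d), rounded up to a whole number of patients."""
    d = Fraction(str(dropout_rate))  # exact decimal value, e.g. 0.2 becomes 1/5
    if not 0 <= d < 1:
        raise ValueError("dropout_rate must be in the range [0, 1)")
    return math.ceil(n / (1 - d))


print(adjust_for_dropout(100, 0.2))  # 125, as in the worked example
print(adjust_for_dropout(62, 0.1))   # 69
```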
