Human Error I (48 pages)

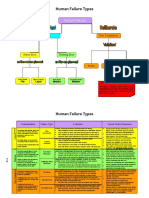

The document discusses the classification of human error, including errors of omission and commission as well as slips, lapses, and mistakes. It also covers the factors that contribute to errors and several models of human error.

Uploaded by Wong Yan Xin

Copyright © All Rights Reserved