Professional Documents

Culture Documents

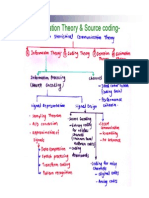

DC Unit - 4

Uploaded by Hari krishna Pinakana

1. What is mutual information? Explain the properties associated with it.

Ans:

3. Explain the code redundancy and the code variance.

Ans:

In digital communication, code redundancy refers to the deliberate addition of extra bits or symbols to the transmitted data to detect and correct errors that may occur during transmission. Redundancy is added through techniques such as error detection codes (e.g., parity bits, checksums) and error correction codes (e.g., Hamming codes, Reed-Solomon codes). These codes provide the ability to identify and recover from transmission errors, ensuring reliable data communication.
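The idea of adding redundancy for error detection can be illustrated with the simplest case mentioned above, a single even-parity bit. The following is only a minimal sketch: the function names and the 7-bit data word are hypothetical, chosen for illustration, and a single parity bit detects an odd number of bit errors but cannot correct them.

```python
def add_even_parity(bits):
    """Append one redundant bit so the total number of 1s is even."""
    parity = sum(bits) % 2
    return bits + [parity]


def parity_ok(codeword):
    """Return True if the codeword still contains an even number of 1s."""
    return sum(codeword) % 2 == 0


# Hypothetical 7-bit data word (not taken from the original notes).
data = [1, 0, 1, 1, 0, 0, 1]
sent = add_even_parity(data)   # 7 data bits + 1 redundant parity bit

received = sent.copy()
received[2] ^= 1               # flip one bit to simulate a channel error

print(parity_ok(sent))         # True: no error detected
print(parity_ok(received))     # False: the single-bit error is detected
```

Here one extra bit is sent for every seven data bits; that extra bit carries no new information and exists only so the receiver can check the word.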

Code variance, in the context of source coding, measures how much the individual codeword lengths deviate from the average codeword length. If symbol k occurs with probability p_k and is assigned a codeword of length l_k, the average codeword length is L = Σ (p_k * l_k) and the code variance is σ^2 = Σ (p_k * (l_k - L)^2). Two codes for the same source can have the same average length but different variances; this happens, for example, when ties in the Huffman procedure are broken differently. The code with the smaller variance is generally preferred, because its codeword lengths are more uniform and the encoder output rate fluctuates less.

2. Prove that the mutual information of the channel is symmetric, i.e., I(X; Y) = I(Y; X).

Ans:

4. Calculate the amount of information if binary digits occur with equal likelihood in binary PCM.

Ans:

In binary Pulse Code Modulation (PCM) where binary digits occur with equal likelihood, the amount of information can be calculated using Shannon's formula for entropy.

Entropy (H) is a measure of the average amount of information contained in each symbol of a discrete source. For binary digits with equal likelihood, the probability of occurrence for each digit is 0.5.

The formula to calculate entropy is:

H = - Σ (p * log2(p))

Where:

Σ represents the summation over all possible symbols.

p represents the probability of each symbol occurring.

For binary PCM with equal likelihood, we have two symbols (0 and 1), each occurring with a probability of 0.5.

Plugging these values into the formula, we get:

H = - (0.5 * log2(0.5) + 0.5 * log2(0.5))

Simplifying the equation:

H = - (0.5 * (-1) + 0.5 * (-1))

H = - (-0.5 - 0.5)

H = 1

Therefore, in binary PCM where binary digits occur with equal likelihood, the amount of information per symbol is 1 bit.
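The calculation above can be checked numerically. The sketch below is a minimal illustration, assuming a helper named `entropy` (a hypothetical name, not from the original notes) that evaluates H = - Σ (p * log2(p)) for any list of symbol probabilities; the biased case is included only for comparison.

```python
import math


def entropy(probabilities):
    """Shannon entropy in bits per symbol: H = -sum(p * log2(p)).

    Terms with p == 0 are skipped, since they contribute nothing.
    """
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


# Binary PCM with equally likely digits, as in the answer above.
h_equal = entropy([0.5, 0.5])
print(h_equal)   # 1.0

# Assumed comparison case: unequal digit probabilities carry less information.
h_biased = entropy([0.9, 0.1])
print(h_biased)  # a value below 1 bit per symbol
```

The equal-likelihood case gives the maximum entropy for a binary source, which is why each PCM digit carries exactly 1 bit here.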

5. Explain the properties of entropy.

Ans:

6. Briefly describe the code tree, trellis, and state diagram for a convolutional encoder.

Ans:

7. Draw the state diagram, tree diagram, and trellis diagram for the k=3, rate 1/3 code generated by g1(x) = 1 + x^2, g2(x) = 1 + x and g3(x) = 1 + x + x^2.

Ans:

8. A memoryless source emits six messages with probabilities 0.3, 0.25, 0.15, 0.12, 0.1 and 0.08. Find the Huffman code. Determine its average word length, efficiency, and redundancy.

Ans:

9. A code is composed of dots and dashes. Assume that the dash is three times as long as the dot and has one-third the probability of occurrence.

(i) Calculate the information in a dot and that in a dash.

(ii) Calculate the average information in the dot-dash code.

(iii) Assume that a dot lasts for 10 ms and that this same time interval is allowed between symbols. Calculate the average rate of information transmission.

Ans:
