Microcontroller Based American Sign Language Recognition and

Language Translation System using IoT application.

A Capstone Design Proposal

Presented to the Faculty of the

Information and Communications Technology Program

STI College of Meycauayan

Jherome Lourence M. Baisa

Jaztin R. Belga

Walter A. Borja

Jae Clark B. Dela Torre

September 22, 2023

ENDORSEMENT FORM FOR PROPOSAL DEFENSE

TITLE OF RESEARCH: Microcontroller Based American Sign Language

Recognition and Language Translation System

using IoT application.

NAME OF PROPONENTS: Jherome Lourence M. Baisa

Jaztin R. Belga

Walter A. Borja

Jae Clark B. Dela Torre

In Partial Fulfilment of the Requirements

for the degree Bachelor of Science in Computer Engineering

has been examined and is recommended for Proposal Defense.

ENDORSED BY:

Engr. Noel Jason O. Lusung, MIT

Capstone Design Adviser

APPROVED FOR PROPOSAL DEFENSE:

Engr. Noel Jason O. Lusung, MIT

Capstone Design Coordinator

NOTED BY:

<Program Head's Given Name MI. Family Name>

Program Head

September 22, 2023

FT-ARA-020-00 | STI College of Meycauayan ii

APPROVAL SHEET

This capstone design proposal titled "Microcontroller Based American Sign Language Recognition and Language Translation System using IoT application," prepared and submitted by Jherome Lourence M. Baisa, Jaztin R. Belga, Walter A. Borja, and Jae Clark B. Dela Torre, in partial fulfilment of the requirements for the degree of Bachelor of Science in Computer Engineering, has been examined and is recommended for acceptance and approval.

Engr. Noel Jason O. Lusung, MIT

Capstone Design Adviser

Accepted and approved by the Capstone Design Review Panel

in partial fulfilment of the requirements for the degree of

Bachelor of Science in Computer Engineering

<Panelists' Given Name MI. Family Name>

Panel Member

<Panelists' Given Name MI. Family Name>

Panel Member

<Panelists' Given Name MI. Family Name>

Lead Panelist

Noted:

<Capstone Design Coordinator's Given Name MI. Family Name>

Capstone Design Coordinator

<Program Head's Given Name MI. Family Name>

Program Head

September 22, 2023

TABLE OF CONTENTS

Title Page

Endorsement Form for Proposal Defense

Approval Sheet

Table of Contents

Introduction

Background of the problem

Overview of the current state of the technology

Objectives of the study

Scope and limitations of the study

INTRODUCTION

This capstone project, titled "Microcontroller Based American Sign Language

Recognition and Language Translation System using IoT Application," addresses the

need to improve communication for individuals with hearing impairments, particularly

those who use American Sign Language (ASL). The project combines electronics,

software development, and IoT technology to create an innovative solution that

recognizes ASL gestures and translates them into spoken or written language. By

bridging the communication gap, this system aims to enhance accessibility and

inclusivity for ASL users, enabling them to communicate seamlessly with individuals

who may not be fluent in ASL. The project's methodology, system architecture,

implementation details, and potential impact on improving the lives of those with hearing

impairments will be discussed in subsequent sections. Ultimately, this endeavor aspires to

foster global communication and inclusiveness, empowering individuals with hearing

impairments in an interconnected world.

Background of the problem

Communication is fundamental to human life, yet individuals with hearing

impairments, who rely on American Sign Language (ASL) for expression, face a

significant communication barrier when interacting with those who do not understand

ASL. This gap leads to social isolation, miscommunication, and limited access to

education and employment opportunities. While technology has revolutionized

communication, it has not adequately addressed the needs of ASL users. The scarcity of

ASL interpreters further exacerbates the issue. Thus, the project "Microcontroller Based

American Sign Language Recognition and Language Translation System using IoT

Application" is born out of the pressing need to bridge this communication divide. By

harnessing microcontroller technology and the Internet of Things (IoT), it aims to

develop a precise and accessible ASL recognition and translation system, facilitating

effective communication and promoting inclusivity in an increasingly interconnected

world.

Overview of the current state of the technology

1. ASL Recognition Systems: ASL recognition systems have made significant

progress in recent years, with the integration of computer vision and machine

learning techniques. These systems leverage cameras and sensors to track the

movements of ASL gestures. However, their accuracy and speed can vary, and

they often struggle with complex, nuanced signs.

2. Machine Translation: Machine translation technology has enabled the

conversion of ASL into spoken or written language. Yet, the translations can

sometimes lack the subtleties and context of ASL, leading to potential

misinterpretations.

3. Wearable Devices: Some wearable devices, such as smart gloves equipped with

sensors, have been developed to aid ASL communication. These devices capture

hand movements and convert them into text or speech. However, their cost and

accessibility remain barriers for widespread adoption.

4. Mobile Applications: Mobile applications have emerged to facilitate ASL

learning and communication, but many lack real-time recognition and translation

capabilities.

5. IoT Integration: IoT technology has not been extensively incorporated into ASL

recognition and translation systems, leaving room for innovation in connecting

ASL users with mainstream communication channels.

6. Accessibility: While there has been progress in making technology more

accessible to individuals with hearing impairments, there is a need for solutions

that seamlessly integrate with everyday communication platforms, breaking down

barriers to effective interaction.
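To make the sensor-glove approach in item 3 concrete, the following is a minimal sketch of how finger-bend readings might be mapped to a letter by nearest-template matching. It is an illustration only, not the method of any existing device or of this project. The five-value reading layout, the template values, the `classify` function, and the `max_distance` threshold are all assumptions introduced here.

```python
import math

# Hypothetical calibration templates: one bend value per finger
# (thumb, index, middle, ring, pinky), scaled from 0.0 (straight)
# to 1.0 (fully bent). These numbers are invented for illustration.
TEMPLATES = {
    "A": (0.2, 0.9, 0.9, 0.9, 0.9),
    "B": (0.8, 0.1, 0.1, 0.1, 0.1),
    "L": (0.1, 0.1, 0.9, 0.9, 0.9),
    "Y": (0.1, 0.9, 0.9, 0.9, 0.1),
}


def euclidean(a, b):
    """Straight-line distance between two equal-length readings."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def classify(reading, templates=TEMPLATES, max_distance=0.5):
    """Return the letter whose template is closest to the reading,
    or None when no template lies within max_distance."""
    best_letter, best_dist = None, float("inf")
    for letter, template in templates.items():
        dist = euclidean(reading, template)
        if dist < best_dist:
            best_letter, best_dist = letter, dist
    return best_letter if best_dist <= max_distance else None


print(classify((0.15, 0.85, 0.95, 0.9, 0.88)))  # prints A
print(classify((0.5, 0.5, 0.5, 0.5, 0.5)))      # prints None (ambiguous)
```

Returning None for ambiguous readings, rather than forcing a guess, reflects the accuracy concern raised in item 1: a rejected gesture can be repeated, while a wrong letter silently corrupts the message.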

Objectives of the study

1. Develop a Robust ASL Recognition System: Create a highly accurate and

efficient American Sign Language recognition system that can identify and

interpret a wide range of ASL gestures in real-time, considering variations in

signing styles and expressions.

2. Implement Language Translation Algorithms: Develop algorithms for

translating ASL gestures into spoken or written language with a focus on

preserving the nuances and contextual meaning of ASL expressions for more

accurate and natural translations.

3. Integrate IoT Connectivity: Incorporate Internet of Things (IoT) technology to

enable seamless connectivity between the ASL recognition system and various

communication devices and platforms, allowing ASL users to communicate with

non-ASL speakers effortlessly.

4. Ensure Accessibility and User-Friendliness: Design the system with a user-

centric approach, emphasizing accessibility for individuals with hearing

impairments. Ensure that the system is intuitive, user-friendly, and adaptable to

different user preferences and needs.

5. Optimize for Real-Time Communication: Aim for low latency in recognition

and translation processes to support real-time communication, making it suitable

for both casual conversations and more formal settings.

6. Evaluate Accuracy and Reliability: Conduct rigorous testing and evaluation to

assess the accuracy and reliability of the ASL recognition and translation system

under various conditions and with diverse user groups.

7. Raise Awareness and Accessibility: Promote awareness and accessibility by

advocating for the adoption of the system in educational institutions, workplaces,

and public spaces to foster inclusivity and improve communication for individuals

with hearing impairments.
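Objectives 5 and 6 call for measuring latency and accuracy. The sketch below shows one way such an evaluation could be scripted, assuming a recognizer is available as a plain function and that labeled test samples exist. The `evaluate` helper, the stand-in `toy_classifier`, and the sample data are hypothetical and are not taken from the proposal.

```python
import time


def evaluate(classifier, samples):
    """Run the classifier over (reading, expected_label) pairs and
    report overall accuracy, per-label accuracy, and mean latency."""
    if not samples:
        raise ValueError("samples must not be empty")
    correct = 0
    per_label = {}  # label -> [times seen, times correct]
    elapsed = 0.0
    for reading, expected in samples:
        start = time.perf_counter()
        predicted = classifier(reading)
        elapsed += time.perf_counter() - start
        counts = per_label.setdefault(expected, [0, 0])
        counts[0] += 1
        if predicted == expected:
            counts[1] += 1
            correct += 1
    return {
        "accuracy": correct / len(samples),
        "mean_latency_ms": 1000.0 * elapsed / len(samples),
        "per_label": {k: c / n for k, (n, c) in per_label.items()},
    }


def toy_classifier(reading):
    """Stand-in recognizer for demonstration only: calls the hand a
    'fist' when the average finger bend exceeds 0.5, else 'open'."""
    return "fist" if sum(reading) / len(reading) > 0.5 else "open"


# Invented test data; the third sample is deliberately borderline.
SAMPLES = [
    ((0.9, 0.9, 0.8), "fist"),
    ((0.1, 0.2, 0.1), "open"),
    ((0.6, 0.4, 0.4), "fist"),
    ((0.2, 0.1, 0.3), "open"),
]

report = evaluate(toy_classifier, SAMPLES)
print(report["accuracy"])   # prints 0.75
print(report["per_label"])  # prints {'fist': 0.5, 'open': 1.0}
```

Reporting accuracy per label as well as overall helps show which signs the system confuses, which a single aggregate figure would hide.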

Scope and limitations of the study

Scope:

1. Create a robust ASL recognition system capable of accurately identifying and

interpreting a wide range of ASL gestures in real-time.

2. Integrate IoT connectivity to enable ASL users to communicate with non-ASL

speakers through various communication devices and platforms.

3. Prioritize accessibility and user-friendliness in the system's design, ensuring it

caters to the needs of individuals with hearing impairments.

4. Optimize the system for real-time communication, targeting low latency to

support both casual conversations and formal interactions.

5. Conduct thorough testing and evaluation to assess the accuracy and reliability of

the ASL recognition and translation system under diverse conditions and user

groups.

6. Consider cost-effective solutions to enhance accessibility for ASL users with

limited resources.
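As an illustration of scope item 2, the sketch below shows how a recognized phrase might be packaged for transmission to another device. The proposal does not specify a message format or transport, so the JSON layout, the field names (`device`, `text`, `confidence`, `ts`), and both helper functions are assumptions made here. The network transport itself is deliberately left out.

```python
import json

# Hypothetical set of fields every message is expected to carry.
REQUIRED_FIELDS = {"device", "text", "confidence", "ts"}


def build_message(device_id, text, confidence, timestamp_s):
    """Serialize one recognition result as a compact JSON string."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    payload = {
        "device": device_id,
        "text": text,
        "confidence": round(confidence, 2),
        "ts": int(timestamp_s),
    }
    # Compact separators keep the payload small for constrained links.
    return json.dumps(payload, separators=(",", ":"))


def parse_message(raw):
    """Decode a message on the receiving side and check its fields."""
    message = json.loads(raw)
    missing = REQUIRED_FIELDS - set(message)
    if missing:
        raise ValueError("missing fields: " + ", ".join(sorted(missing)))
    return message


raw = build_message("glove-01", "HELLO", 0.934, 1695340800.7)
print(raw)
print(parse_message(raw)["text"])  # prints HELLO
```

Including a confidence value lets the receiving application decide whether to display a translation immediately or ask the signer to repeat it.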

Limitations:

1. Recognition Complexity: The system's accuracy may vary with the complexity

of ASL gestures, and it may face challenges in recognizing nuanced signs or signs

performed at high speeds.

2. Translation Nuances: The translation of ASL into spoken or written language

may not capture the full depth of ASL's expressiveness and may require

continuous refinement to enhance naturalness.

3. Hardware Constraints: The project will operate within the constraints of

available microcontroller hardware, which may impact processing power and

memory capabilities.

4. IoT Infrastructure: The system's effectiveness will depend on the availability

and reliability of IoT infrastructure, including network connectivity and device

compatibility.

5. User Learning Curve: Users may need some time to adapt to the system, and its

success may depend on user training and familiarity with technology.

6. Cost Considerations: While efforts will be made to keep the system cost-

effective, there may be limitations in achieving affordability for all potential

users.
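The hardware constraint in limitation 3 can be made tangible with a rough memory estimate. The sketch below is back-of-the-envelope arithmetic under stated assumptions, not a measurement. The proposal does not name a microcontroller, so the 2048-byte budget (the SRAM size of an ATmega328P-class chip), the sensor counts, and the frame counts are all hypothetical figures chosen for illustration.

```python
def template_bytes(num_signs, sensors_per_frame, frames_per_sign,
                   bytes_per_value=2):
    """Bytes needed to store one reference template per sign,
    assuming each sensor value is stored as a fixed-size integer."""
    return num_signs * sensors_per_frame * frames_per_sign * bytes_per_value


def fits(required_bytes, budget_bytes):
    """True when the templates fit inside the memory budget."""
    return required_bytes <= budget_bytes


# Assumed budget: 2 KB of SRAM (an assumption, not from the proposal).
BUDGET = 2048

# Static fingerspelling: 26 letters, 5 flex sensors, 1 frame each.
static_need = template_bytes(26, 5, 1)
# Dynamic signs: 100 signs, 11 channels (5 flex + 6 motion axes),
# 50 frames per sign. All counts are invented for illustration.
dynamic_need = template_bytes(100, 11, 50)

print(static_need, fits(static_need, BUDGET))    # prints 260 True
print(dynamic_need, fits(dynamic_need, BUDGET))  # prints 110000 False
```

Under these assumptions, static letter templates fit comfortably on a small microcontroller, while a vocabulary of motion-based signs would need external storage or processing offloaded to a connected device.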

You might also like

- Computer-Aided Design TechniquesFrom EverandComputer-Aided Design TechniquesE. WolfendaleNo ratings yet

- Semantic ComputingFrom EverandSemantic ComputingPhillip C.-Y. SheuNo ratings yet

- IoT Smart Garbage Segregation and Trashbin Indicator SystemDocument9 pagesIoT Smart Garbage Segregation and Trashbin Indicator Systemjaeco WegoNo ratings yet

- Iratj 08 00240Document6 pagesIratj 08 00240Noman Ahmed DayoNo ratings yet

- Microcontroller Based Air Pollution Monitoring System With Automated Air Purifier Using IoT ApplicationDocument10 pagesMicrocontroller Based Air Pollution Monitoring System With Automated Air Purifier Using IoT Applicationjaeco WegoNo ratings yet

- A Survey On Natural Language To SQL Query GeneratorDocument6 pagesA Survey On Natural Language To SQL Query GeneratorIJRASETPublicationsNo ratings yet

- Touch Free Challan Form Filling System Using Face DetectionDocument6 pagesTouch Free Challan Form Filling System Using Face DetectionIJRASETPublicationsNo ratings yet

- A.I Assistant: Nandha Arts and Science CollegeDocument55 pagesA.I Assistant: Nandha Arts and Science CollegeLoofahNo ratings yet

- Capstone Project Guidelines - 11Document41 pagesCapstone Project Guidelines - 11Rodjean SimballaNo ratings yet

- Sign Language To Text ConverterDocument16 pagesSign Language To Text ConverterAditya RajNo ratings yet

- Seminar 1Document20 pagesSeminar 1arolenecynthiaNo ratings yet

- Sign Doc 2 - MergedDocument42 pagesSign Doc 2 - MergedSREYAS KNo ratings yet

- Speech Recognition Using Machine LearningDocument8 pagesSpeech Recognition Using Machine LearningSHARATH L P CSE Cob -2018-2022No ratings yet

- Final IndianSign IjrtescopusDocument8 pagesFinal IndianSign IjrtescopusKhushal DasNo ratings yet

- Project ReportDocument16 pagesProject ReportNandhini SenthilvelanNo ratings yet

- Design of Voice Command Access Using MatlabDocument5 pagesDesign of Voice Command Access Using MatlabIJRASETPublicationsNo ratings yet

- Voice To Text Using ASR and HMMDocument6 pagesVoice To Text Using ASR and HMMIJRASETPublicationsNo ratings yet

- Voice Recognition - 103626Document47 pagesVoice Recognition - 103626sp2392546No ratings yet

- Speech Recognition Using Correlation TecDocument8 pagesSpeech Recognition Using Correlation TecAayan ShahNo ratings yet

- Man RepDocument20 pagesMan RepSarthak ChaudhariNo ratings yet

- Conversational AI AssistantDocument6 pagesConversational AI AssistantIJRASETPublicationsNo ratings yet

- Team 2 Minor ProjectDocument31 pagesTeam 2 Minor ProjectKESAVAN SNo ratings yet

- Mathematics 11 03729Document20 pagesMathematics 11 03729RENI YUNITA 227056004No ratings yet

- Spotting Fingerspelled Words From Sign Language Video by Temporally Regularized Canonical Component AnalysisDocument7 pagesSpotting Fingerspelled Words From Sign Language Video by Temporally Regularized Canonical Component AnalysissandeepNo ratings yet

- T-Bot ReportDocument64 pagesT-Bot ReportVivek JhaNo ratings yet

- Voice Recognition Thesis PhilippinesDocument7 pagesVoice Recognition Thesis Philippinesafknvhdlr100% (2)

- Voice Assistant NotepadDocument9 pagesVoice Assistant NotepadIJRASETPublicationsNo ratings yet

- A Computer Remote Control System Based On Speech RDocument17 pagesA Computer Remote Control System Based On Speech RShalini KumaranNo ratings yet

- Project ReDocument37 pagesProject Replabita borboraNo ratings yet

- MinorProject (Voice Recognition)Document34 pagesMinorProject (Voice Recognition)priyamNo ratings yet

- Sign Language Detection Using Deep LearningDocument5 pagesSign Language Detection Using Deep LearningIJRASETPublicationsNo ratings yet

- Smart Embedded Device For Object and Text Recognition Through Real Time Video Using Raspberry PIDocument7 pagesSmart Embedded Device For Object and Text Recognition Through Real Time Video Using Raspberry PIVeeresh AnnigeriNo ratings yet

- Real-Time Object and Face Monitoring Using Surveillance VideoDocument8 pagesReal-Time Object and Face Monitoring Using Surveillance VideoIJRASETPublicationsNo ratings yet

- Speech Recognition and Synthesis Tool: Assistive Technology For Physically Disabled PersonsDocument6 pagesSpeech Recognition and Synthesis Tool: Assistive Technology For Physically Disabled PersonsArsène CAKPONo ratings yet

- Artificial Intelligence For Speech RecogDocument5 pagesArtificial Intelligence For Speech RecogApoorva R.V.No ratings yet

- The Android Application For Sending SmsDocument5 pagesThe Android Application For Sending SmsLav KumarNo ratings yet

- Artificial Intelligence For Speech RecognitionDocument5 pagesArtificial Intelligence For Speech RecognitionIJRASETPublicationsNo ratings yet

- Gesture Language Translator Using Raspberry PiDocument7 pagesGesture Language Translator Using Raspberry PiIJRASETPublicationsNo ratings yet

- Sign Language TranslatorDocument4 pagesSign Language TranslatorInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Python IDESoftwarepaperDocument14 pagesPython IDESoftwarepaperNgọc YếnNo ratings yet

- Cyber Bullying Detection Using Machine Learning-IJRASETDocument10 pagesCyber Bullying Detection Using Machine Learning-IJRASETIJRASETPublicationsNo ratings yet

- Bluetooth Security DissertationDocument5 pagesBluetooth Security DissertationWriteMyPaperForMeIn3HoursCanada100% (1)

- PRJ FinalDocument33 pagesPRJ Finalkkat60688No ratings yet

- Module 2 - Lesson1Document16 pagesModule 2 - Lesson1maritel dawaNo ratings yet

- Machine Learning Based Text To Speech Converter For Visually ImpairedDocument9 pagesMachine Learning Based Text To Speech Converter For Visually ImpairedIJRASETPublicationsNo ratings yet

- Speech Recognition Using Ic HM2007Document31 pagesSpeech Recognition Using Ic HM2007Nitin Rawat100% (4)

- Audio To Sign Language TranslatorDocument5 pagesAudio To Sign Language TranslatorIJRASETPublicationsNo ratings yet

- Secure Mobile Application Developments Critical Issues and Challenges IJERTCONV2IS02007Document4 pagesSecure Mobile Application Developments Critical Issues and Challenges IJERTCONV2IS02007omkarNo ratings yet

- Speaker Recognition Using MATLABDocument75 pagesSpeaker Recognition Using MATLABPravin Gareta95% (64)

- Video Conferencing WebAppDocument6 pagesVideo Conferencing WebAppIJRASETPublicationsNo ratings yet

- AI Voice Assistant Using NLP and Python Libraries (M.A.R.F)Document8 pagesAI Voice Assistant Using NLP and Python Libraries (M.A.R.F)International Journal of Innovative Science and Research TechnologyNo ratings yet

- Voice To Text Conversion Using Deep LearningDocument6 pagesVoice To Text Conversion Using Deep LearningInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- NVDADocument9 pagesNVDAAnonymous 75M6uB3OwNo ratings yet

- 7122446426Document109 pages7122446426shashank madhwalNo ratings yet

- An Enabler of Devsecops For Self-Service Cybersecurity MonitoringDocument31 pagesAn Enabler of Devsecops For Self-Service Cybersecurity MonitoringPranjal GuravNo ratings yet

- Title Def TemplateDocument9 pagesTitle Def Templatejohn.i.bones92No ratings yet

- Prompt Engineering ; The Future Of Language GenerationFrom EverandPrompt Engineering ; The Future Of Language GenerationRating: 3.5 out of 5 stars3.5/5 (2)

- Basics of Programming: A Comprehensive Guide for Beginners: Essential Coputer Skills, #1From EverandBasics of Programming: A Comprehensive Guide for Beginners: Essential Coputer Skills, #1No ratings yet

- Rest Security by Example Frank KimDocument62 pagesRest Security by Example Frank KimneroliangNo ratings yet

- Sample Swot For An InstitutionDocument8 pagesSample Swot For An Institutionhashadsh100% (3)

- Punching Report - Unikl MsiDocument9 pagesPunching Report - Unikl MsiRudyee FaiezahNo ratings yet

- HW 1 Eeowh 3Document6 pagesHW 1 Eeowh 3정하윤No ratings yet

- Totem Tribe CheatsDocument7 pagesTotem Tribe Cheatscorrnelia1No ratings yet

- Industrial Automation Guide 2016Document20 pagesIndustrial Automation Guide 2016pedro_luna_43No ratings yet

- Chapter 2 - The Origins of SoftwareDocument26 pagesChapter 2 - The Origins of Softwareلوي وليدNo ratings yet

- Security Policies and Security ModelsDocument10 pagesSecurity Policies and Security Modelsnguyen cuongNo ratings yet

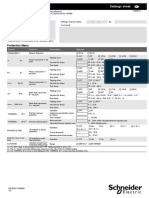

- VIP400 Settings Sheet NRJED311208ENDocument2 pagesVIP400 Settings Sheet NRJED311208ENVăn NguyễnNo ratings yet

- BTM380 F12Document7 pagesBTM380 F12Kevin GuérinNo ratings yet

- Floor Cleaner - SynopsisDocument12 pagesFloor Cleaner - SynopsisTanvi Khurana0% (2)

- God The Myth: by 6litchDocument4 pagesGod The Myth: by 6litchAnonymous nJ1YlTWNo ratings yet

- It Is Vital To Good Project Management To Be Meticulously Honest in Estimat Ing The Time Required To Complete Each of The Various Tasks Included in The Project. Note ThatDocument16 pagesIt Is Vital To Good Project Management To Be Meticulously Honest in Estimat Ing The Time Required To Complete Each of The Various Tasks Included in The Project. Note ThatSuraj SinghalNo ratings yet

- IPsec - GNS3Document9 pagesIPsec - GNS3khoantdNo ratings yet

- Unit 1 Real Numbers - Activities 1 (4º ESO)Document3 pagesUnit 1 Real Numbers - Activities 1 (4º ESO)lumaromartinNo ratings yet

- 5G Smart Port Whitepaper enDocument27 pages5G Smart Port Whitepaper enWan Zulkifli Wan Idris100% (1)

- Classification Tree - Utkarsh Kulshrestha: Earn in G Is in Learnin G - Utkarsh KulshresthaDocument33 pagesClassification Tree - Utkarsh Kulshrestha: Earn in G Is in Learnin G - Utkarsh KulshresthaN MaheshNo ratings yet

- User Guide 1531 Clas - 3ag - 21608 - Ahaa - Rkzza - Ed01Document338 pagesUser Guide 1531 Clas - 3ag - 21608 - Ahaa - Rkzza - Ed01iaomsuet100% (2)

- Computerized Control Consoles: MCC Classic 50-C8422/CDocument8 pagesComputerized Control Consoles: MCC Classic 50-C8422/CLaura Ximena Rojas NiñoNo ratings yet

- MS Intelligent StreamingDocument9 pagesMS Intelligent Streamingder_ronNo ratings yet

- General-Controller-Manual-VVVFDocument47 pagesGeneral-Controller-Manual-VVVFBeltran HéctorNo ratings yet

- State of The ArtDocument14 pagesState of The ArtsuryaNo ratings yet

- Formulae For Vendor RatingDocument3 pagesFormulae For Vendor RatingMadhavan RamNo ratings yet

- ControlMaestro 2010Document2 pagesControlMaestro 2010lettolimaNo ratings yet

- Oracle K Gudie For RAC11g - Install - WindowsDocument180 pagesOracle K Gudie For RAC11g - Install - WindowsMuhammad KhalilNo ratings yet

- BK Vibro - DDAU3 - Wind - BrochureDocument12 pagesBK Vibro - DDAU3 - Wind - BrochurePedro RosaNo ratings yet

- Visual Foxpro Bsamt3aDocument32 pagesVisual Foxpro Bsamt3aJherald NarcisoNo ratings yet

- Netcat ManualDocument7 pagesNetcat ManualRitcher HardyNo ratings yet

- Implementation With Ruby Features: One Contains Elements Smaller Than V The Other Contains Elements Greater Than VDocument12 pagesImplementation With Ruby Features: One Contains Elements Smaller Than V The Other Contains Elements Greater Than VXyz TkvNo ratings yet

- Sub QueriesDocument16 pagesSub QueriesakshayNo ratings yet