The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Volume XLII-2/W12, 2019

Int. Worksh. on “Photogrammetric & Computer Vision Techniques for Video Surveillance, Biometrics and Biomedicine”, 13–15 May 2019, Moscow, Russia

AUTOMATIC LIP-READING OF HEARING IMPAIRED PEOPLE

D. Ivanko, D. Ryumin, A. Karpov

St. Petersburg Institute for Informatics and Automation of the Russian Academy of Sciences, SPIIRAS, Saint-Petersburg, Russian Federation - denis.ivanko11@gmail.com, dl_03.03.1991@mail.ru, karpov@iias.spb.su

Commission II WG II/5

KEY WORDS: Lip-reading, hearing impaired people, region-of-interest detection, visual speech recognition

ABSTRACT:

The inability to use speech interfaces greatly limits the possibilities of human-machine interaction for deaf and hearing impaired people. To address this problem and to increase the accuracy and reliability of an automatic Russian sign language recognition system, we propose to use lip-reading in addition to hand gesture recognition. Deaf and hearing impaired people use sign language as their main means of communication in everyday life. Sign language is a structured form of hand gestures and lip movements involving visual motions and signs, which is used as a communication system. Since sign language includes not only hand gestures but also lip movements that mimic vocalized pronunciation, it is of interest to investigate how accurately such visual speech can be recognized by a lip-reading system, especially considering that the visual speech of hearing impaired people is often characterized by hyper-articulation, which should potentially facilitate its recognition. For this purpose, the Thesaurus of Russian sign language (TheRuSLan), collected at SPIIRAS in 2018-19, was used. The database consists of color optical FullHD video recordings of 13 native Russian sign language signers (11 females and 2 males) from the “Pavlovsk boarding school for the hearing impaired”. Each signer demonstrated 164 phrases 5 times. This work covers the initial stages of this research, including data collection, data labeling, region-of-interest detection and methods for informative feature extraction. The results of this study can later be used to create assistive technologies for deaf or hearing impaired people.

1. INTRODUCTION

Automatic lip-reading, also known as visual speech recognition (VSR), has received a lot of attention in recent years for its potential use in applications of human-machine interaction, sign language recognition, audio-visual speech recognition, biometry and biomedicine (Katsaggelos et al., 2015). Most people can understand a few unspoken words by lip-reading, and many hearing impaired individuals are quite proficient at this skill. The idea of interpreting silent speech has been around for a long time. Automatic visual lip-reading was initially proposed as an enhancement to speech recognition in noisy environments (Petajan, 1984). VSR is commonly used to improve the reliability and robustness of audio speech recognition systems. However, there is a group of people for whom the use of audio speech is not possible. Lip-reading plays an important role in communication by the hearing impaired.

According to statistics reported in (Ryumin et al., 2019), over 5% of the world population, or 466 million people, has disabling hearing loss (432 million adults and 34 million children). It is estimated that by 2050 over 900 million people, or one in every ten people, will have disabling hearing loss. For the deaf and speech-impaired community, sign language (SL) serves as a useful tool for daily interaction (Denby et al., 2010). Official data report about 120000 people in the Russian Federation (and about 100000 people in other countries) using Russian sign language as their main way of communication. Sign language is a structured form of hand gestures involving visual motions and signs, which is used as a communication system. SL recognition includes the whole process of tracking and identifying the signs performed and converting them into semantically meaningful words and expressions (Cheok et al., 2017). The majority of sign language involves only the upper part of the body, from waist level upwards. Besides, the same sign can change considerably in shape depending on its position in the sentence (Yang et al., 2010). Sign language involves the use of different parts of the body, not only hand and finger movements. Deaf people use lip movements as a part of sign language in general, even though they cannot hear acoustic speech. Moreover, the lip movements of hearing impaired people are usually characterized by hyper-articulation. This fact potentially makes it possible to better recognize the visual speech of this group of people, since lip movements become more pronounced.

It is well known that hearing impaired people and those listening in adverse acoustic conditions (noise, reverberation, multiple speakers) rely heavily on the visual input to eliminate ambiguity in acoustic speech elements. Although a significant amount of research has been devoted to the topic of visual speech decoding, the problem of lip-reading for hearing impaired people remains an open issue in the field (Akbari et al., 2018).

According to the literature, most sign language recognition research is based on American Sign Language, Indian Sign Language and Arabic Sign Language. Some early efforts on sign language recognition date back to 1993, when gesture recognition techniques were adapted from speech and handwriting recognition techniques (Darrell et al., 1993). A comprehensive study of the development of the field of sign language recognition is given in (Cheok et al., 2017).

The focus of this research is on improving the accuracy and robustness of automatic Russian sign language recognition by adding a lip-reading module to a hand gesture recognition system. This paper covers the initial stages of this research, including data collection, data labeling, region-of-interest detection and methods for informative feature extraction.

This contribution has been peer-reviewed.

https://doi.org/10.5194/isprs-archives-XLII-2-W12-97-2019 | © Authors 2019. CC BY 4.0 License. 97


2. DATA AND TOOLS

A corpus (database) is mandatory for training any modern speech recognition system based on probabilistic models and machine learning techniques. There are already a number of large commercial or free databases for training acoustic speech recognition systems for many of the world's languages. However, one major obstacle to current research on visual speech recognition is the lack of suitable databases. In contrast to the richness of audio speech corpora, only a few databases are publicly available for visual-only or audio-visual speech recognition systems (Zhou et al., 2014). Most of them include a limited number of speakers and a small vocabulary. Databases of Russian sign language suitable for VSR training are practically non-existent. For this reason, in 2018, a Thesaurus of Russian sign language (TheRuSLan) (Ryumin et al., 2019) was recorded at SPIIRAS.

2.1 TheRuSLan

TheRuSLan comprises recordings of 13 native signers of Russian sign language (11 females and 2 males) from the “Pavlovsk boarding school for the hearing impaired”, or “Deaf-Mute school” (the oldest institution for the hearing impaired in Russia). Each signer demonstrated 164 phrases 5 times. The total number of samples in the corpus is 10660. Screenshots of the signers in the course of recording sessions are shown in Fig. 1. The corpus consists of color optical FullHD (1920×1080) video files, infrared video files (512×424) and depth video files (512×424). It also includes feature files (with 25 skeletal reference points on each frame) and text files of temporal annotation into phrases, words and gesture classes (made manually by an expert). A brief summary of the contents of the database is presented in Table 1. All the recordings were organized into a logically structured database that comprises a file with information about all the speakers and recording parameters.

Figure 1. Example of signers during a recording session

Parameter                                      Value
Number of signers                              13
Phrases per signer                             164
Number of repetitions                          5
Number of samples per signer                   820
Total number of samples                        10 660
Recording device                               MS Kinect V2
Resolution (color)                             FullHD (1920×1080)
Resolution (depth sensor)                      512×424
Resolution (infrared camera)                   512×424
Distance to camera                             1.0-2 m
Duration (total)                               7 hours 56 min
Duration (per signer)                          ~36 min
Number of frames with gestures (per signer)    ~30 000-35 000
Average age of signers                         24
Total amount of data                           ~4 TB

Table 1. Contents of TheRuSLan database

2.2 Data labelling

Proper temporal labeling of data into viseme classes is necessary for training any practical lip-reading system in the scope of statistical methods of speech recognition. Like a phoneme for acoustic speech, a viseme is the minimal distinguishable unit of visual speech. The number of visemes is language-dependent, and for Russian different works distinguish 14-20 such units. In the current study we used a list of 20 viseme classes (Table 2), proposed in (Ivanko et al., 2018a).

Viseme class    Corresponding phonemes      Viseme class    Corresponding phonemes
V1              sil (pause)                 V11             b, b’, p, p’
V2              a, a!                       V12             f, f’, v, v’
V3              i, i!                       V13             s, s’, z, z’, c
V4              o!                          V14             sch
V5              e!, e                       V15             sh
V6              y, y!                       V16             j
V7              u, u!                       V17             h, h’
V8              l, l’, r, r’                V18             ch
V9              d, d’, t, t’, n, n’         V19             m, m’
V10             g, g’, k, k’                V20             zh

Table 2. Viseme classes and phoneme-to-viseme mapping

The state-of-the-art semi-automatic approach to viseme labeling of multimedia databases uses a speech recognition system with subsequent expert correction of the temporal annotation. In the present study, this method cannot be used due to the complete absence of acoustic data. Therefore, the entire temporal labeling of the database into viseme classes was done manually by experts. The following is an example of the labeling of the database (format: start time (sec.), end time (sec.), phoneme class mark, viseme class mark):

0.00 0.31 SIL V1
0.31 0.37 m V19
0.37 0.46 a V2
0.46 0.53 l V8
0.53 0.62 a V2
0.62 0.70 k V10
0.70 0.89 o! V4
0.89 1.04 SIL V1
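Since the labeling is done by hand, a small consistency check on the label files may be useful. The following is a minimal sketch, not part of the described toolchain: it parses lines in the four-column format shown above and verifies that each phoneme mark agrees with the viseme class of Table 2 and that consecutive segments are contiguous in time. The names `Segment`, `parse_labels` and `check_segments` are hypothetical, and writing the palatalization mark as an ASCII apostrophe is an assumption.

```python
from typing import List, NamedTuple

# Phoneme-to-viseme mapping transcribed from Table 2.
# Assumption: palatalized consonants are written with an ASCII apostrophe.
VISEME_TO_PHONEMES = {
    "V1": ["sil"], "V2": ["a", "a!"], "V3": ["i", "i!"], "V4": ["o!"],
    "V5": ["e!", "e"], "V6": ["y", "y!"], "V7": ["u", "u!"],
    "V8": ["l", "l'", "r", "r'"], "V9": ["d", "d'", "t", "t'", "n", "n'"],
    "V10": ["g", "g'", "k", "k'"], "V11": ["b", "b'", "p", "p'"],
    "V12": ["f", "f'", "v", "v'"], "V13": ["s", "s'", "z", "z'", "c"],
    "V14": ["sch"], "V15": ["sh"], "V16": ["j"], "V17": ["h", "h'"],
    "V18": ["ch"], "V19": ["m", "m'"], "V20": ["zh"],
}
PHONEME_TO_VISEME = {p: v for v, ps in VISEME_TO_PHONEMES.items() for p in ps}


class Segment(NamedTuple):
    start: float   # start time, seconds
    end: float     # end time, seconds
    phoneme: str   # phoneme class mark
    viseme: str    # viseme class mark


def parse_labels(text: str) -> List[Segment]:
    """Parse lines of the form '<start> <end> <phoneme> <viseme>'."""
    segments = []
    for line in text.strip().splitlines():
        start, end, phoneme, viseme = line.split()
        segments.append(Segment(float(start), float(end), phoneme, viseme))
    return segments


def check_segments(segments: List[Segment]) -> List[str]:
    """Return a list of human-readable problems (empty if none found)."""
    problems = []
    for i, seg in enumerate(segments):
        # The example marks silence as 'SIL', Table 2 as 'sil': compare lowercased.
        expected = PHONEME_TO_VISEME.get(seg.phoneme.lower())
        if expected != seg.viseme:
            problems.append(f"segment {i}: {seg.phoneme} labeled {seg.viseme}, expected {expected}")
        if seg.end <= seg.start:
            problems.append(f"segment {i}: non-positive duration")
        if i > 0 and abs(seg.start - segments[i - 1].end) > 1e-6:
            problems.append(f"segment {i}: gap or overlap with previous segment")
    return problems


EXAMPLE = """
0.00 0.31 SIL V1
0.31 0.37 m V19
0.37 0.46 a V2
0.46 0.53 l V8
0.53 0.62 a V2
0.62 0.70 k V10
0.70 0.89 o! V4
0.89 1.04 SIL V1
"""

segments = parse_labels(EXAMPLE)
problems = check_segments(segments)
```

For the example above, `problems` is empty, i.e. every phoneme mark matches its viseme class and the segments tile the interval from 0.00 to 1.04 sec. without gaps.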


3. PROPOSED METHOD

In general, visual speech recognition is the process of converting sequences of mouth region images into text. Every practical lip-reading system necessarily includes 4 main processing stages: image acquisition, region of interest (ROI) localization, feature extraction and speech recognition. A more detailed description of the VSR system used is given in (Ivanko et al., 2017).

In research works, the image acquisition stage is often replaced by the step of selecting a representative database, which is necessary for system training in the framework of the statistical approach to speech recognition. The next important step in visual speech recognition is finding the ROI in the image, since the quality of the ROI has a strong influence on the recognition results. Two main approaches to this task are often found in the literature: the Haar-like feature based boosted classification framework and the active appearance model (AAM). In this work we used both methods: the Haar-like method for pixel-based feature extraction and AAM for geometry-based features.

Visual data are typically stored as video sequences and contain a lot of information that is irrelevant to the pronounced speech. Such information includes the variability of the visual appearance of different speakers. The visual features should be relatively small and sufficiently informative about the uttered speech; at the same time, they must show a certain level of invariance against redundant information or noise in videos. Despite many years of research, there is no visual feature set universally accepted for representing visual speech. The most widely used features are pixel-based (image-based) (Hong et al., 2006) and geometry-based (Kumar et al., 2017). In this paper, we use a modification of the method recently proposed in (Ivanko et al., 2018b) and apply it to the task of automatic visual speech recognition of hearing impaired people.

3.1 Region-of-interest detection

TheRuSLan database contains full-body video recordings of signers. On each frame of video data, the search for 25 key points of the human skeleton model was carried out using the Kinect 2.0 built-in AAM-based algorithm (Kar, 2010). An example of a detected skeleton model is shown in Fig. 2.

Figure 2. 25 key landmarks of Kinect 2.0 human skeleton model

Based on the obtained skeleton coordinates, we searched for the ROI (face and mouth region) in the area above the neck landmark. Thus, we significantly reduced the number of false alarms of the face detection algorithm on various objects of the environment.

To extract useful visual speech information, the first step is to locate the lips region, which contains the most valuable information about pronounced speech. For face and lips region detection we apply the AAM-based algorithm implemented in the Dlib open source computer vision library (King, 2009). The main idea is to match a statistical model of object shape and appearance, containing a set of 68 facial landmarks (20 in the mouth region), to a new image. An example of the face shape model used and the algorithm results are depicted in Fig. 3.

Figure 3. Full 2D face shape model (left) and the face landmarks localization algorithm results (right).

This face search algorithm shows high reliability in finding faces in the given image. One of the major drawbacks of the method is false detection, i.e. detection of faces on furnishings, the environment, clothing, etc. By reducing the search area (above the neck landmark), we managed to eliminate false detections in TheRuSLan database.

3.2 Parametric representation

In the current study we used two different state-of-the-art approaches for parametric representation of visual speech: geometry-based and pixel-based.

Geometry-based feature extraction was done using a method similar to (Ivanko et al., 2018c). The main idea of the method is as follows: after normalizing the coordinates of the 20 landmarks found in the lips region, we calculate the Euclidean distances between certain landmarks (according to Table 3) and save them in the feature vector. Landmark numbers in the table correspond to the map (Howell et al., 2016) depicted in Fig. 3.

#     landmarks (№)      #     landmarks (№)
1     49 - 61            13    54 - 64
2     61 - 60            14    64 - 53
3     60 - 68            15    64 - 52
4     68 - 59            16    52 - 63
5     59 - 67            17    52 - 62
6     67 - 58            18    62 - 51
7     67 - 57            19    62 - 50
8     57 - 66            20    50 - 61
9     66 - 56            21    62 - 68
10    56 - 65            22    63 - 67
11    65 - 55            23    64 - 66
12    65 - 54            24    61 - 65

Table 3. Feature extraction landmarks
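The geometry-based features of Table 3 can be sketched in a few lines. This is an illustrative sketch under stated assumptions, not the implementation used in the study: the normalization step is not specified in the text, so the code assumes translation to the mouth centroid and scaling by the distance between the outer mouth corners (landmarks 49 and 55 of the 68-point map). The function name `geometry_features` is hypothetical; the input is assumed to be a 68×2 array of landmark coordinates in the 1-based numbering of Fig. 3.

```python
import numpy as np

# Landmark pairs from Table 3 (1-based numbering of the 68-point face map).
PAIRS = [
    (49, 61), (61, 60), (60, 68), (68, 59), (59, 67), (67, 58),
    (67, 57), (57, 66), (66, 56), (56, 65), (65, 55), (65, 54),
    (54, 64), (64, 53), (64, 52), (52, 63), (52, 62), (62, 51),
    (62, 50), (50, 61), (62, 68), (63, 67), (64, 66), (61, 65),
]


def geometry_features(landmarks: np.ndarray) -> np.ndarray:
    """Return the 24 Euclidean distances of Table 3 for one frame.

    landmarks: array of shape (68, 2); row i holds landmark number i + 1.
    Assumed normalization: subtract the centroid of the 20 mouth landmarks
    and divide by the mouth width (distance between landmarks 49 and 55).
    """
    pts = np.asarray(landmarks, dtype=float)
    if pts.shape != (68, 2):
        raise ValueError("expected an array of shape (68, 2)")
    mouth = pts[48:68]                        # landmarks 49..68
    center = mouth.mean(axis=0)
    width = np.linalg.norm(pts[54] - pts[48])  # landmarks 55 and 49
    if width == 0:
        raise ValueError("degenerate mouth region (zero width)")
    norm = (pts - center) / width
    first = np.array([a - 1 for a, _ in PAIRS])
    second = np.array([b - 1 for _, b in PAIRS])
    return np.linalg.norm(norm[first] - norm[second], axis=1)


# Usage with synthetic landmarks (real ones would come from the detector).
rng = np.random.default_rng(0)
demo_landmarks = rng.uniform(0, 200, size=(68, 2))
features = geometry_features(demo_landmarks)
```

With this normalization the resulting 24-dimensional vector does not change when the face is shifted or uniformly scaled in the image, which is the kind of invariance the geometry-based representation is meant to provide.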


After ROI detection, the pixel-based visual features are calculated through the following processing steps: greyscale conversion, histogram alignment, normalization of the detected mouth images to 32×32 pixels, and mapping to a 32-dimensional feature vector using principal component analysis (Fig. 5).
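The pixel-based processing chain can be illustrated as follows. This is a rough sketch under several assumptions, not the implementation used in the study: "histogram alignment" is interpreted here as histogram equalization, the resizing uses simple nearest-neighbour sampling, and PCA is computed with a plain singular value decomposition in NumPy. All function names are hypothetical, and the mouth images in the usage part are random arrays standing in for cropped ROIs.

```python
import numpy as np


def to_grey(img: np.ndarray) -> np.ndarray:
    """RGB image (H, W, 3) -> greyscale (H, W), ITU-R BT.601 luma weights."""
    return img[..., :3].astype(float) @ np.array([0.299, 0.587, 0.114])


def equalize(grey: np.ndarray) -> np.ndarray:
    """Histogram equalization to the range 0..255 (assumed meaning of 'alignment')."""
    levels = np.clip(np.rint(grey), 0, 255).astype(np.uint8)
    cdf = np.bincount(levels.ravel(), minlength=256).cumsum()
    cdf_min = cdf[cdf > 0][0]
    denom = cdf[-1] - cdf_min
    if denom == 0:                     # constant image: nothing to equalize
        return levels.astype(float)
    lut = np.clip((cdf - cdf_min) / denom * 255.0, 0, 255)
    return lut[levels]


def resize_nearest(img: np.ndarray, size: int = 32) -> np.ndarray:
    """Nearest-neighbour resize of a 2D image to size x size pixels."""
    h, w = img.shape
    rows = (np.arange(size) * h) // size
    cols = (np.arange(size) * w) // size
    return img[np.ix_(rows, cols)]


def preprocess(img: np.ndarray) -> np.ndarray:
    """Mouth ROI (H, W, 3) -> flattened 32x32 image (1024 values)."""
    return resize_nearest(equalize(to_grey(img))).ravel()


def fit_pca(samples: np.ndarray, n_components: int = 32):
    """Return (mean, components) with components of shape (n_components, D)."""
    mean = samples.mean(axis=0)
    _, _, vt = np.linalg.svd(samples - mean, full_matrices=False)
    return mean, vt[:n_components]


def project(samples: np.ndarray, mean: np.ndarray, components: np.ndarray) -> np.ndarray:
    """Map preprocessed images to PCA coefficients."""
    return (samples - mean) @ components.T


# Usage on synthetic data: 50 random "mouth images" of 40x60 pixels.
rng = np.random.default_rng(0)
rois = rng.integers(0, 256, size=(50, 40, 60, 3), dtype=np.uint8)
flat = np.stack([preprocess(r) for r in rois])        # shape (50, 1024)
pca_mean, pca_components = fit_pca(flat, n_components=32)
pixel_features = project(flat, pca_mean, pca_components)  # shape (50, 32)
```

In practice the PCA basis would be estimated once on the training part of the corpus and then reused for all frames, so that feature vectors of different recordings remain comparable.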

3.3 Challenges

Sign language involves a large number of hand movements, and these often cover the lips region. The structure of Russian sign language is such that interaction of the hand with the mouth is necessary for a certain number of gestures. Examples can be seen in Figure 4. This overlap effect is a serious problem for an automatic sign language recognition system.

In such circumstances, the algorithm for detecting the lips region works with an error and either narrows the mouth area to its visible part (Fig. 4, top left) or tries to model the invisible part (Fig. 4, top right). In both cases it is difficult to achieve optimal recognition accuracy, and such examples should be excluded from the training set.

In this paper, we propose a simple method for detecting such cases. Using the coordinates of the skeletal model obtained by the Kinect built-in algorithm, on each frame we check how close the hand area is to the face region. Video frames in which the hand area intersects with the face area are automatically marked and excluded from the training set. Thus, we managed to significantly reduce the amount of irrelevant data.

Figure 4. Examples of the overlap effect in Russian sign language

3.4 Description of the parametric representation method

A general pipeline of the lips parametric representation method is presented in Figure 5. The proposed method involves sequential execution of the following steps:

1. Acquire a video frame from the Kinect 2.0 device or a video file.
2. Calculate the coordinates of 25 landmarks of the human skeleton model.
3. Crop the search area above the neck landmark and use the facial landmark detection algorithm to find 68 facial key points (20 in the mouth region).
4. Check the intersection of the face and hand regions.
5. Normalize the coordinates of the obtained landmarks.
6. Calculate a number of Euclidean distances between landmarks for geometry-based feature extraction.
7. Calculate PCA-based visual features.
8. Save the 24-dimensional geometry-based feature vector and the 32-dimensional pixel-based feature vector.

The proposed method determines the speaker’s lip region in the video stream during articulation and represents the region of interest as a feature vector with a dimensionality of 56 components (24 for geometry-based features and 32 for pixel-based features). The method also automatically detects the intersection between hand and face regions and excludes inappropriate data from the training set. This parametric representation can be used for training an automatic system of Russian sign language recognition, and we plan to use it in further studies to improve the performance of the existing baseline.

Figure 5. General pipeline of the parametric representation method
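Two steps of the pipeline, the hand-face intersection check (step 4) and the assembly of the final feature vector (step 8), are simple enough to sketch directly. The code below is a minimal illustration under assumptions: the face and hand regions are modelled as axis-aligned rectangles, with hand rectangles built around the hand joints of the skeleton model using a fixed half-size. Neither the rectangle model nor the names `Box`, `box_around`, `frame_is_usable` and `build_feature_vector` come from the described system.

```python
from typing import Iterable, NamedTuple, Tuple

import numpy as np


class Box(NamedTuple):
    """Axis-aligned rectangle in image coordinates (pixels)."""
    left: float
    top: float
    right: float
    bottom: float


def box_around(point: Tuple[float, float], half_size: float) -> Box:
    """Square region centred on a skeleton joint (e.g. a hand joint)."""
    x, y = point
    return Box(x - half_size, y - half_size, x + half_size, y + half_size)


def boxes_intersect(a: Box, b: Box) -> bool:
    """True if the two rectangles share a region of non-zero area."""
    return (a.left < b.right and b.left < a.right
            and a.top < b.bottom and b.top < a.bottom)


def frame_is_usable(face: Box, hands: Iterable[Box]) -> bool:
    """A frame is kept for training only if no hand region touches the face."""
    return not any(boxes_intersect(face, hand) for hand in hands)


def build_feature_vector(geometry: np.ndarray, pixel: np.ndarray) -> np.ndarray:
    """Concatenate 24 geometry-based and 32 pixel-based features (56 in total)."""
    g = np.asarray(geometry, dtype=float).ravel()
    p = np.asarray(pixel, dtype=float).ravel()
    if g.shape != (24,) or p.shape != (32,):
        raise ValueError("expected 24 geometry and 32 pixel features")
    return np.concatenate([g, p])


# Usage with made-up coordinates.
face_box = Box(100, 50, 200, 150)
hand_far = box_around((400, 400), half_size=40)   # hand away from the face
hand_near = box_around((190, 140), half_size=40)  # hand covering the mouth area
keep_frame = frame_is_usable(face_box, [hand_far])
drop_frame = not frame_is_usable(face_box, [hand_far, hand_near])
vector = build_feature_vector(np.zeros(24), np.ones(32))
```

A rectangle test of this kind is deliberately conservative: it may also discard frames where the hand is merely near the face, which is acceptable when the goal is to keep occluded mouth regions out of the training set.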


4. CONCLUSIONS AND FUTURE WORK

The paper discusses the possibility of using an additional modality (lip-reading) to improve the accuracy and robustness of an automatic Russian sign language recognition system. Such studies have not been conducted before, and it is of great practical interest to investigate how well the visual speech of hearing impaired people can be recognized. The present study is the first step in this direction and covers the following stages: data collection, data labeling, region-of-interest detection and methods for informative feature extraction.

We also proposed a method for parametric representation of visual speech with adaptation to overlapping of hand and mouth regions. The feature vectors obtained using this method will later be used to train the lip-reading system. Due to its versatility, this method can also be used for different tasks of biometrics, computer vision, etc.

In further research, we are going to use statistical approaches, e.g. based on some types of Hidden Markov Models or deep neural networks, for the development of a robust and accurate Russian sign language recognition system. The results of this study can later be used to create assistive technologies for deaf or hearing impaired people.

ACKNOWLEDGEMENTS

This research is financially supported by the Ministry of Science and Higher Education of the Russian Federation, agreement No. 14.616.21.0095 (reference RFMEFI61618X0095).

REFERENCES

Akbari et al., 2018. H. Akbari, H. Arora, L. Cao, N. Mesgarani, LIP2AUD-SPEC: Speech reconstruction from silent lip movements video. Proceedings of ICASSP2018, pp. 2516-2520.

Cheok et al., 2017. M. J. Cheok, Z. Omar, M. H. Jaward, A review of hand gesture and sign language recognition techniques. International Journal of Machine Learning and Cybernetics, vol. 10, no. 1, pp. 131-153.

Darrell et al., 1993. T. Darrell, A. Pentland, Space-time gestures, Proceedings on Computer Vision and Pattern Recognition, IEEE computer society conference, pp. 335-340.

Denby et al., 2010. B. Denby, T. Schultz, K. Honda, T. Hueber, J. M. Gilbert, J. S. Brumberg, Silent speech interfaces, Journal on Speech Communication, vol. 52, pp. 270-287.

Hong et al., 2006. S. Hong, H. Yao, Y. Wan, R. Chen, A PCA based visual DCT feature extraction method for lip-reading. In: Proceedings of the intelligent informatics, hiding multimedia and signal processing, pp. 321-326.

Howell et al., 2016. D. Howell, S. Cox, B. Theobald, Visual units and confusion modelling for automatic lip-reading. In: Image and Vision Computing, vol. 51, pp. 1-12.

Ivanko et al., 2017. D. Ivanko, A. Karpov, D. Ryumin, I. Kipyatkova, A. Saveliev, V. Budkov, M. Zelezny, Using a high-speed video camera for robust audio-visual speech recognition in acoustically noisy conditions. In: SPECOM 2017, LNAI 10458, pp. 757-766.

Ivanko et al., 2018a. D. Ivanko, A. Karpov, D. Fedotov, I. Kipyatkova, D. Ryumin, Dm. Ivanko, W. Minker, M. Zelezny, Multimodal speech recognition: increasing accuracy using high speed video data. In: Journal of Multimodal User Interfaces, vol. 12, no. 4, pp. 319-328.

Ivanko et al., 2018b. D. Ivanko, D. Ryumin, I. Kipyatkova, A. Karpov, Lip-Reading Using Pixel-based and Geometry-based Features for Multimodal Human-robot Interfaces. In: Zavalishin Readings 2019, in press.

Ivanko et al., 2018c. D. Ivanko, D. Ryumin, A. Axyonov, M. Zelezny, Designing advanced geometric features for automatic Russian visual speech recognition. In: Proceedings of the 20th International Conference on Speech and Computer (SPECOM 2018), pp. 245-255.

Kar, 2010. A. Kar, Skeletal tracking using Microsoft Kinect, In: Methodology, vol. 1, pp. 1-11.

Katsaggelos et al., 2015. K. Katsaggelos, S. Bahaadini, R. Molina, Audiovisual fusion: challenges and new approaches. In: Proceedings of the IEEE, vol. 103, no. 9, pp. 1635-1653.

King, 2009. D. E. King, Dlib-ml: A Machine Learning Toolkit, Journal of Machine Learning Research, vol. 10, pp. 1755-1758.

Kumar et al., 2017. S. Kumar, MK. Bhuyan, B. Chakraborty, Extraction of texture and geometrical features from informative facial regions for sign language recognition. In: Journal of Multimodal User Interfaces (JMUI), vol. 11, no. 2, pp. 227-239.

Petajan, 1984. E. D. Petajan, Automatic lipreading to enhance speech recognition. In: IEEE Communications Society Global Telecommunications Conference, Atlanta, USA.

Ryumin et al., 2019. D. Ryumin, D. Ivanko, A. Axyonov, A. Karpov, I. Kagirov, M. Zelezny, Human-robot interaction with smart shopping trolley using sign language: data collection. In: IEEE the 1st International Workshop on Pervasive Computing and Spoken Dialogue Systems Technology (PerDial 2019), in press.

Yang et al., 2010. R. Yang, S. Sarkar, B. Loeding, Handling movement epenthesis and hand segmentation ambiguities in continuous sign language recognition using nested dynamic programming, IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 32, no. 3, pp. 462-477.

Zhou et al., 2014. Z. Zhou, G. Zhao, X. Hong, M. Pietikainen, A review of recent advances in visual speech decoding. Image and Vision Computing, vol. 32, pp. 590-605.

This contribution has been peer-reviewed.

https://doi.org/10.5194/isprs-archives-XLII-2-W12-97-2019 | © Authors 2019. CC BY 4.0 License. 101

You might also like

- Lithuanian Speech Liepa For Development of Human-Computer Interfaces...Document13 pagesLithuanian Speech Liepa For Development of Human-Computer Interfaces...Xiomara RodriguezNo ratings yet

- Development of A Software Module For Recognizing TDocument8 pagesDevelopment of A Software Module For Recognizing TRENI YUNITA 227056004No ratings yet

- Research Paper On Sign Language InterpretingDocument6 pagesResearch Paper On Sign Language Interpretinggw1g9a3s100% (1)

- Ganek 2018Document9 pagesGanek 2018anastasiaaaaaNo ratings yet

- Visual Speech RecognitionDocument24 pagesVisual Speech RecognitionHassanEL-BannaMahmoudNo ratings yet

- A Comprehensive Review On Indian Sign Language For Deaf and Dumb PeopleDocument8 pagesA Comprehensive Review On Indian Sign Language For Deaf and Dumb PeopleIJRASETPublicationsNo ratings yet

- Methodologies For Sign Language Recognition A SurveyDocument4 pagesMethodologies For Sign Language Recognition A SurveyInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- ALICE: An Open-Source Tool For Automatic Measurement of Phoneme, Syllable, and Word Counts From Child-Centered Daylong RecordingsDocument49 pagesALICE: An Open-Source Tool For Automatic Measurement of Phoneme, Syllable, and Word Counts From Child-Centered Daylong RecordingsGermán CapdehouratNo ratings yet

- UntitledDocument7 pagesUntitledAlexander CalimlimNo ratings yet

- Crasborn HSK ToappearDocument16 pagesCrasborn HSK Toappearbabahamou44No ratings yet

- Levis 2018Document9 pagesLevis 2018Javeria ZaidiNo ratings yet

- Survey Sign Language Production 2023Document23 pagesSurvey Sign Language Production 2023Nguyễn KoiNo ratings yet

- 1 s2.0 S0885230819302992 MainDocument16 pages1 s2.0 S0885230819302992 MainAbdelkbir WsNo ratings yet

- Spoken Corpora PDFDocument25 pagesSpoken Corpora PDFGeorgeNo ratings yet

- Research Paper Sign LanguageDocument5 pagesResearch Paper Sign Languageeh15arsx100% (1)

- Review On Machine Translation System For Ethiopian Sign LanguageDocument5 pagesReview On Machine Translation System For Ethiopian Sign LanguageashenafiNo ratings yet

- Lit Man 2018Document17 pagesLit Man 2018Binkei TzuyuNo ratings yet

- Paralinguistics in Speech and Language-State-Of-The-Art and The ChallengeDocument36 pagesParalinguistics in Speech and Language-State-Of-The-Art and The ChallengeSandeep JaiswalNo ratings yet

- Acoustic Modeling Based On Deep Learning For Low-RDocument17 pagesAcoustic Modeling Based On Deep Learning For Low-RSumeya HusseinNo ratings yet

- 633 1592 1 PBDocument14 pages633 1592 1 PBRobertoNo ratings yet

- Research Proposal CapstoneDocument18 pagesResearch Proposal Capstoneapi-734948540No ratings yet

- Dialectal Variability in Spoken Language: A Comprehensive Survey of Modern Techniques For Language Dialect Identification in Speech SignalsDocument14 pagesDialectal Variability in Spoken Language: A Comprehensive Survey of Modern Techniques For Language Dialect Identification in Speech Signalsindex PubNo ratings yet

- The Influence of The Visual Modality On PDFDocument10 pagesThe Influence of The Visual Modality On PDFmkamadeva2010No ratings yet

- Paper 3308Document19 pagesPaper 3308shuchis785No ratings yet

- Lecture - 2 - MMCT - Audio-Visual Integration PDFDocument92 pagesLecture - 2 - MMCT - Audio-Visual Integration PDFRaghav BansalNo ratings yet

- Lecture - 2 - MMCT - Audio-Visual Integration PDFDocument92 pagesLecture - 2 - MMCT - Audio-Visual Integration PDFRaghav BansalNo ratings yet

- Evolution of Language From The Aphasia PerspectiveDocument3 pagesEvolution of Language From The Aphasia PerspectiveLaura Arbelaez ArcilaNo ratings yet

- Adaptation of The Token Test in Standard Indonesian: Bernard A. J. Jap, Chysanti ArumsariDocument8 pagesAdaptation of The Token Test in Standard Indonesian: Bernard A. J. Jap, Chysanti ArumsariNeurologiundip PpdsNo ratings yet

- AKIN PROJECT SIGN LANGUAGE (Tensor)Document22 pagesAKIN PROJECT SIGN LANGUAGE (Tensor)Samuel Bamgboye kobomojeNo ratings yet

- A Tool To Convert Audio/Text To Sign Language Using Python LibrariesDocument11 pagesA Tool To Convert Audio/Text To Sign Language Using Python LibrariesNEHA BHATINo ratings yet

- 05Document9 pages05Minji HaerinNo ratings yet

- Andin 2014Document8 pagesAndin 2014Ariana MondacaNo ratings yet

- Aging and Working Memory Modulate The Ability To BDocument15 pagesAging and Working Memory Modulate The Ability To BemilijaNo ratings yet

- Research Paper Topics On Sign LanguageDocument4 pagesResearch Paper Topics On Sign Languagegatewivojez3100% (1)

- Erwt 4353Document19 pagesErwt 4353dfgsgdfgsNo ratings yet

- Synchronization of Speed of DC Motors For Rolling Mills: D.Reddy PranaiDocument5 pagesSynchronization of Speed of DC Motors For Rolling Mills: D.Reddy PranaiBandi GaneshNo ratings yet

- Tank Erection Itp Org ChartDocument34 pagesTank Erection Itp Org Chartvasantha kumar100% (1)

- Elevator Traffic AnalysisDocument52 pagesElevator Traffic Analysisstanjack99No ratings yet

- Pes2ug20cs282 - Rohit V Shastry - Sec E - Week 8Document12 pagesPes2ug20cs282 - Rohit V Shastry - Sec E - Week 8ROHIT V SHASTRYNo ratings yet

- Building Automation - Impact On Energy EfficiencyDocument108 pagesBuilding Automation - Impact On Energy EfficiencyJonas Barbosa0% (1)

- Step by Step Procedure in Considering Lighting LayoutDocument9 pagesStep by Step Procedure in Considering Lighting LayoutA.M BravoNo ratings yet

- Servicenow Certified System Administrator Exam SpecificationDocument6 pagesServicenow Certified System Administrator Exam Specificationibmkamal-1No ratings yet

- CS 20Document208 pagesCS 20Ilarion CiobanuNo ratings yet

- EZ-SCREEN LS Daily Checkout Card (Cascaded Systems) - 179482 Rev ADocument2 pagesEZ-SCREEN LS Daily Checkout Card (Cascaded Systems) - 179482 Rev AMoy D-aNo ratings yet

- Year 3 Reasoning Test Set 2 Paper ADocument8 pagesYear 3 Reasoning Test Set 2 Paper AbayaNo ratings yet

- Wireless Remote Control For Eot Crane Using Avr Micro Controller IJERTCONV5IS20024 PDFDocument4 pagesWireless Remote Control For Eot Crane Using Avr Micro Controller IJERTCONV5IS20024 PDFsujit kcNo ratings yet

- Digital Marketing For FarmersDocument5 pagesDigital Marketing For Farmerssuresh mpNo ratings yet

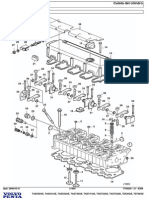

- 6.cylinder Head and ValvetrainDocument14 pages6.cylinder Head and ValvetrainKuba SwkNo ratings yet

- Htk-Caravan 08-2018 en PDFDocument52 pagesHtk-Caravan 08-2018 en PDFJose Manuel MarinNo ratings yet

- (Norton History of Science) Cardwell, Donald Stephen Lowell - Wheels, Clocks, and Rockets - A History of Technology-W. W. Norton & Company (1995)Document499 pages(Norton History of Science) Cardwell, Donald Stephen Lowell - Wheels, Clocks, and Rockets - A History of Technology-W. W. Norton & Company (1995)Begüm YoldaşNo ratings yet

- Exam 1Document8 pagesExam 1Nina Selin OZSEKERCINo ratings yet

- Pokemon CheatDocument12 pagesPokemon CheatGenZheroNo ratings yet

- 500KVA Diesel Generator SetDocument43 pages500KVA Diesel Generator SetTASHIDINGNo ratings yet

- Echo PPT260 Parts ManualDocument44 pagesEcho PPT260 Parts ManualTim MckennaNo ratings yet

- Volvo TAD731-2-3GEDocument299 pagesVolvo TAD731-2-3GEmoises83% (6)

- PHP Coding Sample QuestionDocument63 pagesPHP Coding Sample QuestionAbhinav Raj EJAqXCJLYLNo ratings yet

- Container Catalogue 2019-2020 V1.0 NEWDocument48 pagesContainer Catalogue 2019-2020 V1.0 NEWمعمر حميدNo ratings yet

- PB II Xii Maths QP Jan 2023Document6 pagesPB II Xii Maths QP Jan 2023VardhiniNo ratings yet

- Logan F60 Picture Framing Dust Cover Trimmer: Instruction ManualDocument2 pagesLogan F60 Picture Framing Dust Cover Trimmer: Instruction ManualMustafa PandzicNo ratings yet

- Chapter Three: Basic Cost Management ConceptsDocument23 pagesChapter Three: Basic Cost Management ConceptsAli AquinoNo ratings yet

- Ferreira Et Al 2023Document13 pagesFerreira Et Al 2023Giselle FerreiraNo ratings yet

- RUS Bulletin 1728F-806 Viewing Instructions: To Jump To A Topic by Using Its BookmarkDocument91 pagesRUS Bulletin 1728F-806 Viewing Instructions: To Jump To A Topic by Using Its BookmarkjosehenriquezsotoNo ratings yet

- Text Detection and Recognition For Images of Medical Laboratory Reports With A Deep Learning ApproachDocument10 pagesText Detection and Recognition For Images of Medical Laboratory Reports With A Deep Learning ApproachKumar SajalNo ratings yet

- It Cse 6TH Sem Int Q P PDFDocument86 pagesIt Cse 6TH Sem Int Q P PDFMikasa AckermanNo ratings yet

- 06- شيت للمخزون باللغة الانجليزيةDocument6 pages06- شيت للمخزون باللغة الانجليزيةEngFaisal AlraiNo ratings yet