Experiment Test 2

Uploaded by qq1497560005

Summary of the experiments to try next

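1. Current setup: the history sessions go through max pooling and ProbAttention to produce the history embedding.

The current session interacts with the history embedding to produce the current embedding.

The two embeddings are then fused with a gated fusion mechanism.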
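The notes do not give the gate formula, so the following is only a minimal sketch of one common form of gated fusion: a sigmoid gate computed from both embeddings, used to blend them element-wise. The function name `gated_fusion` and the parameters `W_c`, `W_h`, and `b` are hypothetical and do not come from `train_last.py`.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def gated_fusion(current, history, W_c, W_h, b):
    """Assumed form: g = sigmoid(W_c c + W_h h + b); out = g * c + (1 - g) * h."""
    pre = [pc + ph + bias
           for pc, ph, bias in zip(matvec(W_c, current), matvec(W_h, history), b)]
    gate = [sigmoid(p) for p in pre]
    return [g * c + (1.0 - g) * h for g, c, h in zip(gate, current, history)]

# With all-zero weights the gate is 0.5 everywhere, so the output is the plain average.
d = 3
zeros = [[0.0] * d for _ in range(d)]
fused = gated_fusion([1.0, 2.0, 3.0], [3.0, 2.0, 1.0], zeros, zeros, [0.0] * d)
print(fused)  # [2.0, 2.0, 2.0]
```

In a trained model the gate weights would be learned, letting each dimension lean more on the current session or on the history.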

2. Pass the current sequence through an LSTM first, then feed it into full attention.

A simple single-layer LSTM:

Recall@5:10.0491 Recall@10:12.8006 Recall@20:15.9131 MRR@5:6.6535 MRR@10:7.0188 MRR@20:7.2338

With one more LSTM layer added:

Recall@5:4.9560 Recall@10:7.0082 Recall@20:9.6431 MRR@5:2.9428 MRR@10:3.2153 MRR@20:3.3962

3. Do not let the full attention over the current sequence query the history sequence.

Recall@5:13.8037 Recall@10:16.3538 Recall@20:19.0501 MRR@5:10.3453 MRR@10:10.6110 MRR@20:10.7560

4. Apply self-attention to the current sequence first, then let it interact with the history through attention.

Recall@5:13.5419 Recall@10:15.5174 Recall@20:18.1125 MRR@5:10.7029 MRR@10:10.9626 MRR@20:11.1134
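The two-step order in item 4 (self-attention on the current sequence, then attention against the history) can be sketched with plain scaled dot-product attention. This is an illustrative toy, assuming no learned projections, a single head, and made-up 2-dimensional embeddings; the real model presumably adds all of those.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention without learned projections."""
    d = len(keys[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, values))
                    for j in range(len(values[0]))])
    return out

current = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy current-session item embeddings
history = [[0.5, 0.5], [1.0, -1.0]]             # toy history embeddings

refined = attention(current, current, current)  # step 1: self-attention
mixed = attention(refined, history, history)    # step 2: attend to the history
print(mixed)
```

Each row of `mixed` is a weighted average of the history embeddings, with weights driven by the self-attended current sequence.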

5. Replace the max pooling over the history sequence with soft attention (pool=softmax).
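As a rough illustration of the difference between the two pooling options, the sketch below compares element-wise max pooling with a softmax-weighted sum. The scoring vector `w` is an assumption: the notes do not say how the soft-attention scores are computed.

```python
import math

def max_pool(seq):
    """Element-wise maximum over the sequence positions."""
    return [max(col) for col in zip(*seq)]

def softmax_pool(seq, w):
    """Score each position with w (hypothetical), softmax the scores, sum the weighted vectors."""
    scores = [sum(a * b for a, b in zip(w, x)) for x in seq]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    return [sum(wt * x[j] for wt, x in zip(weights, seq)) for j in range(len(seq[0]))]

seq = [[1.0, 4.0], [3.0, 2.0]]
print(max_pool(seq))                  # [3.0, 4.0]
print(softmax_pool(seq, [0.0, 0.0]))  # uniform weights: [2.0, 3.0]
```

Max pooling keeps only the largest value per dimension, while the softmax version lets every position contribute in proportion to its score.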

6. Replace full attention with ProbAttention.

With random seed:

Recall@5:13.2117 Recall@10:15.4744 Recall@20:17.9601 MRR@5:9.9671 MRR@10:10.1696 MRR@20:10.2854

Without random seed:

Recall@5:13.9448 Recall@10:16.1616 Recall@20:18.5736 MRR@5:10.3597 MRR@10:10.6553 MRR@20:10.8223

Recall@5:13.9366 Recall@10:16.3364 Recall@20:18.7331 MRR@5:10.3257 MRR@10:10.6471 MRR@20:10.8127

Recall@5:14.5112 Recall@10:16.8569 Recall@20:19.3742 MRR@5:10.7699 MRR@10:11.0819 MRR@20:11.2562

Recall@5:14.0521 Recall@10:16.3671 Recall@20:18.7843 MRR@5:10.4677 MRR@10:10.7778 MRR@20:10.9450

Recall@5:13.7076 Recall@10:16.1135 Recall@20:18.6912 MRR@5:10.0605 MRR@10:10.3786 MRR@20:10.5572

Recall@5:14.1452 Recall@10:16.4080 Recall@20:18.8395 MRR@5:10.5720 MRR@10:10.8741 MRR@20:11.0414

Recall@5: 14.38 Recall@10: 17.06 Recall@20: 19.98 MRR@5: 10.36 MRR@10: 10.71 MRR@20: 10.91
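For reference, the Recall@K and MRR@K figures quoted above are usually computed as follows in session-based recommendation. This sketch assumes the standard definitions (one target item per test case, values reported as percentages); the evaluation code behind these notes is not shown, so treat it as an approximation of what it does.

```python
def recall_and_mrr_at_k(ranked_lists, targets, k):
    """Return (Recall@K, MRR@K) in percent for one target item per test case."""
    hits = 0
    reciprocal_rank_sum = 0.0
    for ranked, target in zip(ranked_lists, targets):
        top_k = ranked[:k]
        if target in top_k:
            hits += 1
            reciprocal_rank_sum += 1.0 / (top_k.index(target) + 1)
    n = len(targets)
    return 100.0 * hits / n, 100.0 * reciprocal_rank_sum / n

# Toy example: three test cases with made-up item ids.
ranked = [[7, 3, 9], [2, 5, 1], [4, 8, 6]]
targets = [3, 2, 0]
recall, mrr = recall_and_mrr_at_k(ranked, targets, k=2)
print(recall, mrr)
```

Here two of the three targets appear in the top 2 (at ranks 2 and 1), so Recall@2 is about 66.67 and MRR@2 is 50.0.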

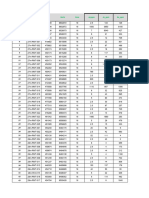

Hyperparameter tuning:

First, identify which parameters inside the model can be tuned:

1. ProbAttention

Number of heads (multi-head): 2.

2. Dropout

python train_last.py --data=xing --mode=transformer --user_ --adj=adj_all --dataset=all_data --batchSize=100 --buffer_size=200000 --encoder_attention=probattention --gate_ --history_=stamp --pool=softmax --dropout=0.5

ProbAttention dropout

Full attention dropout

A dropout could also be added after the Softmax_reduce_sum step; leave it out for now.
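The dropout values tried below, four runs each, can be turned into command lines with a small helper. The flags are copied from the command above; the helper name `sweep_commands` and the idea of generating the runs from a script are assumptions, not something the notes describe.

```python
import shlex

# Flags copied from the training command in these notes.
BASE_ARGS = [
    "python", "train_last.py", "--data=xing", "--mode=transformer", "--user_",
    "--adj=adj_all", "--dataset=all_data", "--batchSize=100", "--buffer_size=200000",
    "--encoder_attention=probattention", "--gate_", "--history_=stamp", "--pool=softmax",
]

def sweep_commands(dropouts, repeats):
    """Build one argument list per (dropout value, repeat) pair."""
    commands = []
    for p in dropouts:
        for _ in range(repeats):
            commands.append(BASE_ARGS + [f"--dropout={p}"])
    return commands

commands = sweep_commands([0.05, 0.1, 0.2, 0.3, 0.4, 0.5], repeats=4)
for cmd in commands[:2]:
    print(shlex.join(cmd))
```

Each argument list could be passed to `subprocess.run` to launch the run; printing them first makes it easy to check the sweep before spending compute on it.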

0.05:

Recall@5:14.1687 Recall@10:16.4969 Recall@20:19.0256 MRR@5:10.4243 MRR@10:10.7372 MRR@20:10.9109

Recall@5:13.9417 Recall@10:16.2505 Recall@20:18.7301 MRR@5:10.3315 MRR@10:10.6401 MRR@20:10.8109

Recall@5:14.5399 Recall@10:17.0133 Recall@20:19.6084 MRR@5:10.5960 MRR@10:10.9243 MRR@20:11.1041

Recall@5:14.3108 Recall@10:16.7065 Recall@20:19.2812 MRR@5:10.5793 MRR@10:10.8995 MRR@20:11.0780

Average: Recall@5:14.24 Recall@10:16.6168 Recall@20:19.1613 MRR@5:10.4827 MRR@10:10.8002 MRR@20:10.9760

0.1:

Recall@5:14.4519 Recall@10:16.8507 Recall@20:19.4172 MRR@5:10.6695 MRR@10:10.9902 MRR@20:11.1673

Recall@5:14.2771 Recall@10:16.7566 Recall@20:19.3333 MRR@5:10.5064 MRR@10:10.8380 MRR@20:11.0154

Recall@5:13.9714 Recall@10:16.5450 Recall@20:19.2342 MRR@5:10.4534 MRR@10:10.6918 MRR@20:10.8223

Recall@5:14.2086 Recall@10:16.4131 Recall@20:18.8456 MRR@5:10.4735 MRR@10:10.7663 MRR@20:10.9337

Average: Recall@5:14.2275 Recall@10:16.6413 Recall@20:19.2075 MRR@5:10.5257 MRR@10:10.8216 MRR@20:10.984675

0.2:

Recall@5:14.1115 Recall@10:16.4898 Recall@20:19.0194 MRR@5:10.3429 MRR@10:10.6594 MRR@20:10.8345

Recall@5:14.1820 Recall@10:16.6002 Recall@20:19.2495 MRR@5:10.8180 MRR@10:11.0113 MRR@20:11.1230

Recall@5:14.1033 Recall@10:16.6452 Recall@20:19.2751 MRR@5:10.6639 MRR@10:10.8818 MRR@20:11.0058

Recall@5:13.9928 Recall@10:16.6391 Recall@20:19.2894 MRR@5:10.2886 MRR@10:10.6435 MRR@20:10.8269

Average: Recall@5:14.0974 Recall@10:16.5935 Recall@20:19.2083 MRR@5:10.52835 MRR@10:10.799 MRR@20:10.9475

0.3:

Recall@5:14.4315 Recall@10:16.7505 Recall@20:19.2935 MRR@5:10.5703 MRR@10:10.8211 MRR@20:10.9957

Recall@5:14.3916 Recall@10:16.9632 Recall@20:19.6431 MRR@5:10.3947 MRR@10:10.7387 MRR@20:10.9247

Recall@5:14.4530 Recall@10:16.8436 Recall@20:19.5256 MRR@5:10.5821 MRR@10:10.8219 MRR@20:11.0080

Recall@5:14.1483 Recall@10:16.4601 Recall@20:19.0164 MRR@5:10.3799 MRR@10:10.6889 MRR@20:10.8653

Average: Recall@5:14.3560 Recall@10:16.7543 Recall@20:19.3700 MRR@5:10.4818 MRR@10:10.7676 MRR@20:10.9484

0.4:

Recall@5:14.2076 Recall@10:16.7853 Recall@20:19.5051 MRR@5:10.6252 MRR@10:10.8758 MRR@20:10.9982

Recall@5:14.0695 Recall@10:16.7229 Recall@20:19.4581 MRR@5:10.1752 MRR@10:10.5311 MRR@20:10.7207

Recall@5:13.7035 Recall@10:16.2474 Recall@20:19.0603 MRR@5:10.4731 MRR@10:10.7087 MRR@20:10.8334

Recall@5:14.1912 Recall@10:16.7065 Recall@20:19.2832 MRR@5:10.5603 MRR@10:10.7889 MRR@20:10.9160

Average: Recall@5:14.04295 Recall@10:16.6155 Recall@20:19.3266 MRR@5:10.4584 MRR@10:10.7261 MRR@20:10.8645

0.5:

Recall@5:13.8620 Recall@10:16.4652 Recall@20:19.1534 MRR@5:10.3600 MRR@10:10.5931 MRR@20:10.7167

Recall@5:14.0706 Recall@10:16.5716 Recall@20:19.1840 MRR@5:10.9406 MRR@10:11.1707 MRR@20:11.2925

Recall@5:13.6339 Recall@10:16.2536 Recall@20:19.0767 MRR@5:10.3911 MRR@10:10.6369 MRR@20:10.7760

Recall@5:13.6217 Recall@10:16.2352 Recall@20:19.0920 MRR@5:10.8702 MRR@10:11.0963 MRR@20:11.2278

Average: Recall@5:13.7905 Recall@10:16.3814 Recall@20:19.1265 MRR@5:10.6404 MRR@10:10.8743 MRR@20:11.0032
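The last line of each dropout block above appears to be the per-metric mean of the four runs. A small parser, sketched here as a hypothetical helper rather than part of the original tooling, makes that averaging reproducible. The sample lines are the four runs for dropout 0.05.

```python
import re
from statistics import mean

# Matches pairs such as "Recall@5:14.1687" or "MRR@20: 10.91".
PAIR = re.compile(r"(\w+@\d+):\s*([\d.]+)")

def parse_line(line):
    """Turn one result line into a {metric name: value} dict."""
    return {name: float(value) for name, value in PAIR.findall(line)}

def average_runs(lines):
    """Average each metric over the runs that report it."""
    runs = [parse_line(line) for line in lines]
    return {name: mean(r[name] for r in runs if name in r) for name in runs[0]}

runs_005 = [
    "Recall@5:14.1687 Recall@10:16.4969 Recall@20:19.0256 MRR@5:10.4243 MRR@10:10.7372 MRR@20:10.9109",
    "Recall@5:13.9417 Recall@10:16.2505 Recall@20:18.7301 MRR@5:10.3315 MRR@10:10.6401 MRR@20:10.8109",
    "Recall@5:14.5399 Recall@10:17.0133 Recall@20:19.6084 MRR@5:10.5960 MRR@10:10.9243 MRR@20:11.1041",
    "Recall@5:14.3108 Recall@10:16.7065 Recall@20:19.2812 MRR@5:10.5793 MRR@10:10.8995 MRR@20:11.0780",
]
avg = average_runs(runs_005)
print({name: round(value, 4) for name, value in avg.items()})
```

Because a metric is averaged only over the runs that contain it, a truncated result line does not break the computation.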

3. Learning rate

Currently 0.001

0.002:

Recall@5:14.2382 Recall@10:16.8088 Recall@20:19.6564 MRR@5:10.2364 MRR@10:10.5809 MRR@20:10.7769

Recall@5:14.3763 Recall@10:16.8712 Recall@20:19.5337 MRR@5:10.4305 MRR@10:10.7635 MRR@20:10.9479

0.003:

Recall@5:11.1002 Recall@10:13.3701 Recall@20:15.7873 MRR@5:8.0180 MRR@10:8.3199 MRR@20:8.4868

Recall@5:13.8292 Recall@10:16.2843 Recall@20:18.7362 MRR@5:10.1399 MRR@10:10.4670 MRR@20:10.6369

0.005:

Recall@5:8.9315 Recall@10:10.6503 Recall@20:12.5798 MRR@5:6.5577 MRR@10:6.7874 MRR@20:6.9210

Recall@5:8.9080 Recall@10:10.5204 Recall@20:12.3129 MRR@5:6.6151 MRR@10:6.8310 MRR@20:6.9549

0.0005:

Recall@5:13.7352 Recall@10:15.5665 Recall@20:17.4335 MRR@5:11.0658 MRR@10:11.1855 MRR@20:11.2567

Recall@5:12.6810 Recall@10:14.7628 Recall@20:17.1605 MRR@5:10.4317 MRR@10:10.5814 MRR@20:10.6681

0.0001:

Recall@5:10.3599 Recall@10:11.2444 Recall@20:12.3732 MRR@5:8.9321 MRR@10:9.0317 MRR@20:9.0866

Recall@5:11.2178 Recall@10:12.2055 Recall@20:13.4100 MRR@5:9.6674 MRR@10:9.7988 MRR@20:9.8786

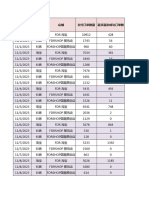

4. GELU vs. ReLU

ReLU:

Recall@5:13.9448 Recall@10:16.1616 Recall@20:18.5736 MRR@5:10.3597 MRR@10:10.6553 MRR@20:10.8223

Recall@5:13.9366 Recall@10:16.3364 Recall@20:18.7331 MRR@5:10.3257 MRR@10:10.6471 MRR@20:10.8127

Recall@5:14.5112 Recall@10:16.8569 Recall@20:19.3742 MRR@5:10.7699 MRR@10:11.0819 MRR@20:11.2562

Recall@5:14.0521 Recall@10:16.3671 Recall@20:18.7843 MRR@5:10.4677 MRR@10:10.7778 MRR@20:10.9450

Average: Recall@5:14.1112 Recall@10:16.4305 Recall@20:18.8663 MRR@5:10.4808 MRR@10:10.7905 MRR@20:10.9590

GELU:

Recall@5:14.5501 Recall@10:16.7178 Recall@20:19.1247 MRR@5:10.8559 MRR@10:11.1448 MRR@20:11.3117

Recall@5:14.1687 Recall@10:16.4468 Recall@20:18.8507 MRR@5:10.5795 MRR@10:10.8835 MRR@20:11.0495

Recall@5:14.0624 Recall@10:16.4315 Recall@20:19.0961 MRR@5:10.3128 MRR@10:10.6298

Recall@5:13.8978 Recall@10:16.2883 Recall@20:18.8978 MRR@5:10.1791 MRR@10:10.4979 MRR@20:10.6776

Average: Recall@5:14.16975 Recall@10:16.4711 Recall@20:18.9923 MRR@5:10.4818 MRR@10:10.789 MRR@20:11.0129
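For context on what is being swapped in this comparison, here are the two activations side by side. The GELU shown is the exact form based on the normal CDF; whether the model uses this or the tanh approximation is not stated in the notes, so that choice is an assumption.

```python
import math

def relu(x):
    """ReLU: pass positive inputs through, zero out the rest."""
    return max(0.0, x)

def gelu(x):
    """Exact GELU: x * Phi(x), where Phi is the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))

for x in (-2.0, -0.5, 0.0, 0.5, 2.0):
    print(f"x={x:+.1f}  relu={relu(x):.4f}  gelu={gelu(x):.4f}")
```

Unlike ReLU, GELU is smooth and lets small negative inputs through with a small negative output, which is the main behavioral difference between the two settings.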

python train_last.py --data=xing --mode=transformer --user_ --adj=adj_all --dataset=all_data --batchSize=100 --buffer_size=200000 --encoder_attention=probattention --gate_ --history_=stamp

The seed problem has to be solved, so set the seed first.

Recall@5:13.2771 Recall@10:15.3722 Recall@20:17.5828 MRR@5:9.9691 MRR@10:10.2496 MRR@20:10.4020

Recall@5:13.2444 Recall@10:15.4448 Recall@20:17.8374 MRR@5:9.8961 MRR@10:10.1903 MRR@20:10.3550

Adjusted the seed and added a random seed in the other files:

Recall@5:13.3753 Recall@10:15.4519 Recall@20:17.5910 MRR@5:10.0348 MRR@10:10.3128 MRR@20:10.4601

Recall@5:13.3160 Recall@10:15.3374 Recall@20:17.5286 MRR@5:9.9966 MRR@10:10.2662 MRR@20:10.4172

Still not reproducible.

Added a seed to the random initialization:

1.1 Recall@5:12.3906 Recall@10:13.9550 Recall@20:15.5133 MRR@5:10.0397 MRR@10:10.2493 MRR@20:10.3567

1.2 Recall@5:13.0358 Recall@10:15.2372 Recall@20:17.5542 MRR@5:10.0397 MRR@10:10.2493 MRR@20:10.3567

2.1 Recall@5:12.3354 Recall@10:13.8456 Recall@20:15.4980 MRR@5:9.9286 MRR@10:10.1328 MRR@20:10.2466

2.2 Recall@5:12.7434 Recall@10:15.1360 Recall@20:17.5378 MRR@5:9.9286 MRR@10:10.1328 MRR@20:10.2466

Try once more with the original TensorFlow 1. This is the last attempt; if it still fails, stop worrying about the seed:

1.1 Recall@5:12.1718 Recall@10:13.9867 Recall@20:15.8517 MRR@5:9.5176 MRR@10:9.7595 MRR@20:9.8879

1.2 Recall@5:12.8569 Recall@10:15.2802 Recall@20:17.8650 MRR@5:9.5176 MRR@10:9.7595 MRR@20:9.8879

2.1 Recall@5:12.0849 Recall@10:13.7628 Recall@20:15.6687 MRR@5:9.4805 MRR@10:9.7047 MRR@20:9.8356
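The seeding attempts above touch several random number generators. One way to keep them in a single place is a helper like the one below. It is a sketch, not the code used for these runs: the name `seed_everything` is hypothetical, NumPy and TensorFlow are seeded only if they are installed, and the seed value is arbitrary.

```python
import os
import random

def seed_everything(seed):
    """Seed the RNGs available in this environment; return the names that were seeded."""
    seeded = ["random"]
    # PYTHONHASHSEED only takes effect for Python processes started after this point.
    os.environ["PYTHONHASHSEED"] = str(seed)
    random.seed(seed)
    try:
        import numpy as np
        np.random.seed(seed)
        seeded.append("numpy")
    except ImportError:
        pass
    try:
        import tensorflow as tf
        if hasattr(tf, "set_random_seed"):  # TensorFlow 1.x
            tf.set_random_seed(seed)
        else:                               # TensorFlow 2.x
            tf.random.set_seed(seed)
        seeded.append("tensorflow")
    except ImportError:
        pass
    return seeded

seed_everything(2023)
first = [random.random() for _ in range(3)]
seed_everything(2023)
second = [random.random() for _ in range(3)]
print(first == second)  # True
```

In TensorFlow 1 graph mode the graph-level seed has to be set before any ops are created, and some GPU kernels are non-deterministic regardless of seeding, so a helper like this may narrow the run-to-run variance without removing it.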
