Ensemble Methods

Uploaded by mesemo tadiwos

Ensemble Methods, what are they?

Ensemble methods are a machine learning technique that combines several base models in order to produce one optimal predictive model. To better understand this definition, let's take a step back to the ultimate goal of machine learning and model building. This will make more sense as I dive into specific examples and explain why Ensemble methods are used.

I will largely use Decision Trees to outline the definition and practicality of Ensemble Methods (however, it is important to note that Ensemble Methods do not pertain only to Decision Trees).

A Decision Tree determines a predicted value based on a series of questions and conditions. For instance, consider a simple Decision Tree that determines whether an individual should play outside. The tree takes several weather factors into account and, given each factor, either makes a decision or asks another question. In this example, every time it is overcast, we will play outside. However, if it is raining, we must ask whether it is windy. If it is windy, we will not play. But given no wind, tie those shoelaces tight because we're going outside to play.
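The play-outside tree can be read as nested conditions. The sketch below is only an illustration: the function name `should_play` and the string labels `"overcast"` and `"rainy"` are assumptions, and any outlook the text does not describe returns `None` rather than a guessed answer.

```python
from typing import Optional


def should_play(outlook: str, windy: bool) -> Optional[bool]:
    """Walk the play-outside tree, one question per level."""
    if outlook == "overcast":
        return True  # overcast: we always play
    if outlook == "rainy":
        return not windy  # raining: play only when there is no wind
    return None  # other outlooks are not described in the text


print(should_play("overcast", windy=True))
print(should_play("rainy", windy=True))
print(should_play("rainy", windy=False))
```

Each `if` corresponds to one question in the tree, and each `return` corresponds to a leaf.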

Decision Trees can also solve quantitative problems using the same format. In the tree to the left, we want to know whether or not to invest in a commercial real estate property. Is it an office building? A warehouse? An apartment building? Are economic conditions good or poor? How much will an investment return? These questions are answered using this decision tree.

When building Decision Trees, there are several factors we must take into consideration: On which features do we base our decisions? What is the threshold for classifying each question into a yes or no answer? In the first Decision Tree, what if we also wanted to ask ourselves whether we have friends to play with? If we have friends, we will play every time. If not, we might continue to ask ourselves questions about the weather. By adding an additional question, we hope to better define the Yes and No classes.
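To make the threshold question concrete, here is a minimal sketch of how one candidate question could be scored. It assumes Gini impurity as the splitting criterion (the text does not name one), and the wind-speed data is hypothetical toy data invented for the illustration.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 0.0 when every label in the group is the same."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_threshold(values, labels):
    """Return (threshold, weighted_impurity) for the best 'value <= threshold' question."""
    best = (None, float("inf"))
    distinct = sorted(set(values))
    # Candidate thresholds are midpoints between consecutive distinct values.
    for lo, hi in zip(distinct, distinct[1:]):
        threshold = (lo + hi) / 2
        left = [y for x, y in zip(values, labels) if x <= threshold]
        right = [y for x, y in zip(values, labels) if x > threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best[1]:
            best = (threshold, score)
    return best


# Hypothetical toy data: wind speed and whether we played outside.
wind_speed = [2, 5, 8, 12, 20, 25]
played = ["yes", "yes", "yes", "no", "no", "no"]
print(best_threshold(wind_speed, played))
```

A lower weighted impurity means the question separates the Yes and No classes more cleanly, which is one way to compare candidate features and thresholds.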

This is where Ensemble Methods come in handy! Rather than relying on just one Decision Tree and hoping we made the right decision at each split, Ensemble Methods allow us to take a sample of Decision Trees into account, calculate which features to use or questions to ask at each split, and make a final prediction based on the aggregated results of the sampled Decision Trees.

Types of Ensemble Methods

1. BAGGing, or Bootstrap AGGregating. BAGGing gets its name because it combines bootstrapping and aggregation to form one ensemble model. Given a sample of data, multiple bootstrapped subsamples are drawn (sampled with replacement). A Decision Tree is fit on each bootstrapped subsample. Once every tree has been built, their predictions are aggregated, typically by majority vote for classification or by averaging for regression, to form a single predictor. The image below will help explain:

Given a dataset, bootstrapped subsamples are pulled. A Decision Tree is formed on each bootstrapped sample. The results of each tree are aggregated to yield a stronger, more accurate predictor.
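The bootstrap-then-aggregate loop can be sketched in plain Python. This is a simplified illustration, not a production implementation: each "tree" is a one-question stump, aggregation is by majority vote, and the helper names (`fit_stump`, `bagging_fit`, `bagging_predict`) and the toy data are all assumptions made for the example.

```python
import random
from collections import Counter


def fit_stump(rows, labels):
    """Fit a one-question tree: the (feature, threshold) with the fewest errors."""
    best = None
    for f in range(len(rows[0])):
        for threshold in sorted({row[f] for row in rows}):
            left = [y for row, y in zip(rows, labels) if row[f] <= threshold]
            right = [y for row, y in zip(rows, labels) if row[f] > threshold]
            left_label = Counter(left).most_common(1)[0][0]
            right_label = Counter(right).most_common(1)[0][0] if right else left_label
            errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
            if best is None or errors < best[0]:
                best = (errors, f, threshold, left_label, right_label)
    return best[1:]


def predict_stump(stump, row):
    f, threshold, left_label, right_label = stump
    return left_label if row[f] <= threshold else right_label


def bagging_fit(rows, labels, n_models=25, seed=0):
    """Fit one stump per bootstrapped subsample."""
    rng = random.Random(seed)
    n = len(rows)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]  # sample with replacement
        models.append(fit_stump([rows[i] for i in idx], [labels[i] for i in idx]))
    return models


def bagging_predict(models, row):
    """Aggregate the individual predictions by majority vote."""
    votes = Counter(predict_stump(m, row) for m in models)
    return votes.most_common(1)[0][0]


# Hypothetical toy data with two numeric features.
rows = [[1, 10], [2, 9], [3, 8], [7, 3], [8, 2], [9, 1]]
labels = ["no", "no", "no", "yes", "yes", "yes"]
models = bagging_fit(rows, labels)
print(bagging_predict(models, [0, 11]), bagging_predict(models, [10, 0]))
```

Because each stump sees a different resample of the data, individual stumps can disagree; the vote smooths out those differences.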

2. Random Forest Models. Random Forest Models can be thought of as BAGGing with a slight tweak. When deciding where to split and how to make decisions, BAGGed Decision Trees have the full set of features at their disposal. Therefore, although the bootstrapped samples may be slightly different, the data is largely going to break off at the same features throughout each model. In contrast, Random Forest models decide where to split based on a random selection of features. Rather than splitting on similar features at each node throughout, Random Forest models introduce a level of differentiation because each tree will split based on different features. This differentiation provides a more diverse ensemble to aggregate over, which typically produces a more accurate predictor. Refer to the image for a better understanding.

Similar to BAGGing, bootstrapped subsamples are pulled from a larger dataset. A decision tree is formed on each subsample. However, each decision tree is split on different features (in this diagram the features are represented by shapes).
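The tweak relative to BAGGing can be sketched by restricting each split to a random subset of features. As a simplifying assumption, each tree here is a single-split stump, so the random subset is drawn once per tree; in a deeper tree it would be drawn again at every split. The names `forest_fit`, `forest_predict`, and `max_features`, and the toy data, are hypothetical choices for this sketch.

```python
import random
from collections import Counter


def majority(labels):
    return Counter(labels).most_common(1)[0][0]


def fit_stump(rows, labels, feature_ids):
    """Best one-question tree, searching only the features in feature_ids."""
    best = None
    for f in feature_ids:
        for threshold in sorted({row[f] for row in rows}):
            left = [y for row, y in zip(rows, labels) if row[f] <= threshold]
            right = [y for row, y in zip(rows, labels) if row[f] > threshold]
            left_label = majority(left)
            right_label = majority(right) if right else left_label
            errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
            if best is None or errors < best[0]:
                best = (errors, f, threshold, left_label, right_label)
    return best[1:]


def forest_fit(rows, labels, n_trees=25, max_features=1, seed=0):
    rng = random.Random(seed)
    n, n_features = len(rows), len(rows[0])
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap sample, as in BAGGing
        feature_ids = rng.sample(range(n_features), max_features)  # random feature subset
        forest.append(fit_stump([rows[i] for i in idx], [labels[i] for i in idx], feature_ids))
    return forest


def forest_predict(forest, row):
    """Majority vote over all trees in the forest."""
    votes = Counter((left if row[f] <= t else right) for f, t, left, right in forest)
    return votes.most_common(1)[0][0]


# Hypothetical toy data with two numeric features.
rows = [[1, 10], [2, 9], [3, 8], [7, 3], [8, 2], [9, 1]]
labels = ["no", "no", "no", "yes", "yes", "yes"]
forest = forest_fit(rows, labels)
print(sorted({f for f, _, _, _ in forest}))  # which features the trees split on
print(forest_predict(forest, [0, 11]), forest_predict(forest, [10, 0]))
```

With `max_features` smaller than the number of features, different trees are forced to split on different features, which is the source of the extra diversity described above.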

In Summary

The goal of any machine learning problem is to find a single model that will best predict our desired outcome. Rather than making one model and hoping it is the best, most accurate predictor we can make, ensemble methods take a myriad of models into account and aggregate them (for example, by averaging or voting) to produce one final model. It is important to note that Decision Trees are not the only base models used in ensemble methods, just the most popular and relevant in data science today.

You might also like

- Module 3.5 Ensemble Learning XGBoostDocument26 pagesModule 3.5 Ensemble Learning XGBoostDuane Eugenio AniNo ratings yet

- Choosing Right ClassifierDocument36 pagesChoosing Right ClassifierDuno patelNo ratings yet

- EnsembleDocument2 pagesEnsembleShivareddy GangamNo ratings yet

- Random Forest SummaryDocument6 pagesRandom Forest SummaryNkechi KokoNo ratings yet

- What Is Ensemble LearningDocument4 pagesWhat Is Ensemble LearningDevyansh GuptaNo ratings yet

- AIML Final Cpy WordDocument15 pagesAIML Final Cpy WordSachin ChavanNo ratings yet

- Random ForestDocument18 pagesRandom ForestendaleNo ratings yet

- Handout9 Trees Bagging BoostingDocument23 pagesHandout9 Trees Bagging Boostingmatthiaskoerner19100% (1)

- Ensemble Learning: Wisdom of The CrowdDocument12 pagesEnsemble Learning: Wisdom of The CrowdRavi Verma100% (1)

- Ch5 Data ScienceDocument60 pagesCh5 Data ScienceIngle AshwiniNo ratings yet

- Random Forest Algorithms - Comprehensive Guide With ExamplesDocument13 pagesRandom Forest Algorithms - Comprehensive Guide With Examplesfaria shahzadiNo ratings yet

- Research Paper On Decision Tree AlgorithmDocument8 pagesResearch Paper On Decision Tree Algorithmc9j9xqvy100% (1)

- Machine Learning 6Document28 pagesMachine Learning 6barkha saxenaNo ratings yet

- ML AssDocument21 pagesML AssMalicha GalmaNo ratings yet

- Bagging BoostingDocument3 pagesBagging BoostingSudheer RedusNo ratings yet

- What Is Bagging and How It Works Machine Learning 1615649432Document5 pagesWhat Is Bagging and How It Works Machine Learning 1615649432Kshatrapati Singh100% (1)

- Decision TreesDocument53 pagesDecision TreesKarl100% (1)

- Bagging and BoostingDocument4 pagesBagging and Boostingsumit rakeshNo ratings yet

- Decision Trees in Machine Learning - by Prashant Gupta - Towards Data ScienceDocument6 pagesDecision Trees in Machine Learning - by Prashant Gupta - Towards Data ScienceAkash MukherjeeNo ratings yet

- Outlines: Statements of Problems Objectives Bagging Random Forest Boosting AdaboostDocument14 pagesOutlines: Statements of Problems Objectives Bagging Random Forest Boosting Adaboostendale100% (1)

- Decision TreeDocument38 pagesDecision TreePedro CatenacciNo ratings yet

- Ensemble LearningDocument7 pagesEnsemble LearningGabriel Gheorghe100% (1)

- Random Forest (RF) : Decision TreesDocument3 pagesRandom Forest (RF) : Decision TreesDivya NegiNo ratings yet

- Random Forest RegressionDocument2 pagesRandom Forest Regressiontomasjunio36No ratings yet

- Random Forests - Consolidating Decision Trees - Paperspace BlogDocument9 pagesRandom Forests - Consolidating Decision Trees - Paperspace BlogNkechi KokoNo ratings yet

- Understanding Random ForestDocument12 pagesUnderstanding Random ForestBS GNo ratings yet

- Bagging and Random Forest Presentation1Document23 pagesBagging and Random Forest Presentation1endale100% (2)

- Set-01.DT & Random ForestDocument28 pagesSet-01.DT & Random ForestRafi AhmedNo ratings yet

- 07 - Model Selection & BuildingDocument17 pages07 - Model Selection & BuildingOmar BenNo ratings yet

- Decision Making Tools: Decision Tree Payback AnalysisDocument2 pagesDecision Making Tools: Decision Tree Payback AnalysisMuhamamd Asfand YarNo ratings yet

- Introduction To Bagging and Ensemble Methods - Paperspace BlogDocument7 pagesIntroduction To Bagging and Ensemble Methods - Paperspace BlogNkechi KokoNo ratings yet

- BoostingDocument13 pagesBoostingPankaj PandeyNo ratings yet

- Lecture Notes - Random Forests PDFDocument4 pagesLecture Notes - Random Forests PDFSankar SusarlaNo ratings yet

- ML Module 5 2022 PDFDocument31 pagesML Module 5 2022 PDFjanuary100% (1)

- Data Mining Question SetDocument5 pagesData Mining Question SetRyan TranNo ratings yet

- ID4 Algorithm - Incremental Decision Tree LearningDocument9 pagesID4 Algorithm - Incremental Decision Tree LearningNirmal Varghese Babu 2528No ratings yet

- Unit Iir20Document22 pagesUnit Iir20Yadavilli VinayNo ratings yet

- Ensemble Learning Algorithms With Python Mini CourseDocument20 pagesEnsemble Learning Algorithms With Python Mini CourseJihene BenchohraNo ratings yet

- Konsep EnsembleDocument52 pagesKonsep Ensemblehary170893No ratings yet

- Week 7 - Tree-Based ModelDocument8 pagesWeek 7 - Tree-Based ModelNguyễn Trường Sơn100% (1)

- Bankruptcy Prediction Model 24-04-2017Document22 pagesBankruptcy Prediction Model 24-04-2017Shagufta TahsildarNo ratings yet

- Tree-Based ModelDocument21 pagesTree-Based ModelNachyAloysiusNo ratings yet

- Decision Tree & Random ForestDocument16 pagesDecision Tree & Random Forestreshma acharyaNo ratings yet

- AIUnit IIDocument47 pagesAIUnit IIomkar dhumalNo ratings yet

- Lecture 05 Random Forest 07112022 124639pmDocument25 pagesLecture 05 Random Forest 07112022 124639pmMisbahNo ratings yet

- Decision Tree Algorithm Tutorial With Example in RDocument23 pagesDecision Tree Algorithm Tutorial With Example in Rgouthamk5151No ratings yet

- Machine Learning With Random Forests and Decision Trees - A Visual Guide For Beginners by Scott HartshornDocument73 pagesMachine Learning With Random Forests and Decision Trees - A Visual Guide For Beginners by Scott HartshornIvaylo KrustevNo ratings yet

- Lecture Note 5Document7 pagesLecture Note 5vivek guptaNo ratings yet

- Decision Tree Algorithm, ExplainedDocument20 pagesDecision Tree Algorithm, ExplainedAli KhanNo ratings yet

- Random ForestDocument25 pagesRandom Forestabdala sabryNo ratings yet

- Machine Learning With BoostingDocument212 pagesMachine Learning With Boostingelena.echavarria100% (1)

- Random ForestDocument22 pagesRandom ForestKizifiNo ratings yet

- 1.decision Trees ConceptsDocument70 pages1.decision Trees ConceptsSuyash JainNo ratings yet

- MLDocument3 pagesMLAptech PitampuraNo ratings yet

- Learn The Basics Of Decision Trees A Popular And Powerful Machine Learning AlgorithmFrom EverandLearn The Basics Of Decision Trees A Popular And Powerful Machine Learning AlgorithmNo ratings yet

- Machine Learning Explained - Algorithms Are Your FriendDocument7 pagesMachine Learning Explained - Algorithms Are Your FriendpepeNo ratings yet

- 08 Decision - TreeDocument9 pages08 Decision - TreeGabriel GheorgheNo ratings yet

- Machine Learning With Random Forests - by Knoldus Inc. - Knoldus - Technical Insights - MediumDocument12 pagesMachine Learning With Random Forests - by Knoldus Inc. - Knoldus - Technical Insights - Mediumdimo.michevNo ratings yet

- Dtree&rfDocument26 pagesDtree&rfMohit SoniNo ratings yet

- ML CH Part 2Document19 pagesML CH Part 2mesemo tadiwosNo ratings yet

- ML CH Part 2Document19 pagesML CH Part 2mesemo tadiwosNo ratings yet

- IntroductionDocument11 pagesIntroductionmesemo tadiwosNo ratings yet

- OOSAD Chapter 5Document56 pagesOOSAD Chapter 5mesemo tadiwosNo ratings yet

- Chap1 - Introduction To Machine LearningDocument40 pagesChap1 - Introduction To Machine Learningmesemo tadiwosNo ratings yet

- ML CH Part 2Document19 pagesML CH Part 2mesemo tadiwosNo ratings yet

- 22 ChaleDocument18 pages22 Chalemesemo tadiwosNo ratings yet

- 22 ChaleDocument18 pages22 Chalemesemo tadiwosNo ratings yet

- 22 ChaleDocument18 pages22 Chalemesemo tadiwosNo ratings yet

- Assessment For Learning: 10 Principles. Research-Based Principles To Guide Classroom Practice Assessment For LearningDocument4 pagesAssessment For Learning: 10 Principles. Research-Based Principles To Guide Classroom Practice Assessment For LearningNicolás MuraccioleNo ratings yet

- Episode8 170202092249Document16 pagesEpisode8 170202092249Jan-Jan A. ValidorNo ratings yet

- (If Available, Write The Indicated MELC) : 098 Chipeco Avenue, Barangay 3, Calamba CityDocument9 pages(If Available, Write The Indicated MELC) : 098 Chipeco Avenue, Barangay 3, Calamba CityKristel EbradaNo ratings yet

- The Teaching Profession: MARCH 24, 2021Document14 pagesThe Teaching Profession: MARCH 24, 2021Alondra SiggayoNo ratings yet

- DLL - Science 4 - Q2 - W8Document4 pagesDLL - Science 4 - Q2 - W8Ej RafaelNo ratings yet

- Training Toolkit For Volunteers in Criminal Justice System Pilot Evaluation ReportDocument41 pagesTraining Toolkit For Volunteers in Criminal Justice System Pilot Evaluation ReportTiago LeitãoNo ratings yet

- Feedback Log 1Document3 pagesFeedback Log 1api-660565967No ratings yet

- 718 GrantproposalDocument3 pages718 Grantproposalapi-298674342No ratings yet

- Boyd - Udl Guideline ChecklistDocument2 pagesBoyd - Udl Guideline Checklistapi-639102060No ratings yet

- ENGLISH 6 - Q2 Module 2Document6 pagesENGLISH 6 - Q2 Module 2jommel vargasNo ratings yet

- Creating A Presidential Commission To Survey Philippine EducationDocument8 pagesCreating A Presidential Commission To Survey Philippine EducationCarie Justine EstrelladoNo ratings yet

- Operant Conditioning WorksheetDocument2 pagesOperant Conditioning Worksheetapi-24015734750% (2)

- Emotional: Winaldi Angger Pamudi (2213510017) M.khalil Gibran Siregar (2213510001) Ririn Alfitri (2213510009)Document8 pagesEmotional: Winaldi Angger Pamudi (2213510017) M.khalil Gibran Siregar (2213510001) Ririn Alfitri (2213510009)lolasafitriNo ratings yet

- Absolute Value and IntegersDocument7 pagesAbsolute Value and IntegersMarina AcuñaNo ratings yet

- Tpls 0206Document226 pagesTpls 0206NoviNo ratings yet

- TLED-HE 104 INTRODUCTION TO ICT SPECIALIZATION - Syllabus-ISODocument9 pagesTLED-HE 104 INTRODUCTION TO ICT SPECIALIZATION - Syllabus-ISOEdgar Bryan Nicart83% (6)

- 2010 SEC - Science Curriculum Guide PDFDocument41 pages2010 SEC - Science Curriculum Guide PDFMichael Clyde lepasanaNo ratings yet

- Ted Book of BooksDocument3 pagesTed Book of BooksIveta NikolovaNo ratings yet

- Essay - PleDocument18 pagesEssay - PleKshirja SethiNo ratings yet

- Lesson Plan in SPA Theatre ArtsDocument4 pagesLesson Plan in SPA Theatre ArtsXyloNo ratings yet

- STE-Computer-Programming-Q4 - MODULE 8Document20 pagesSTE-Computer-Programming-Q4 - MODULE 8Rosarie CharishNo ratings yet

- DLL - Empowerment - Technologies - For MergeDocument35 pagesDLL - Empowerment - Technologies - For MergeHerwin CapiñanesNo ratings yet

- Challenges in TeachingDocument45 pagesChallenges in TeachingAngelica ViveroNo ratings yet

- Understanding The Gmat Exam PDFDocument13 pagesUnderstanding The Gmat Exam PDFileaders04No ratings yet

- What We Know About Gen Z 2020 (Onehope)Document19 pagesWhat We Know About Gen Z 2020 (Onehope)Tommy BarqueroNo ratings yet

- Tutorial Letter 101/3/2023 International Trade ECS3702 Semesters 1 and 2 Department of EconomicsDocument14 pagesTutorial Letter 101/3/2023 International Trade ECS3702 Semesters 1 and 2 Department of Economicsdalron pedroNo ratings yet

- Department of Education: Detailed Lesson PlanDocument2 pagesDepartment of Education: Detailed Lesson PlanKrizzie Jade CailingNo ratings yet

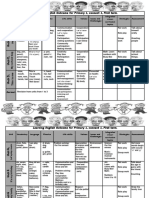

- Learning English Outcome For Primary 1, Connect 1, First TermDocument3 pagesLearning English Outcome For Primary 1, Connect 1, First TermJUST LIFENo ratings yet

- Cambridge International Examinations: Chemistry 0620/22 May/June 2017Document3 pagesCambridge International Examinations: Chemistry 0620/22 May/June 2017pNo ratings yet

- Permit To StudyDocument5 pagesPermit To StudyMichael Vallejos Magallanes ZacariasNo ratings yet