Balanced K-Means Revisited-5


results in a trade-off between the clustering quality and the running time. For now, we deal with this

problem by introducing the increasing penalty factor f and define

$$\mathrm{scale}(iter + 1) = f \cdot \mathrm{scale}_{\min}(iter), \quad \text{where } f \ge 1. \tag{7}$$

3.4. Partly remaining fraction c

In the standard k-means algorithm, the cluster sizes do not need to be known during the assignment

step of a data point because they are not necessary for the computation of the cost of assigning a data

point to a cluster. However, if we include a penalty term as indicated in Equation 2, we have to know

the cluster sizes during the assignment of a data point.

The calculation of the cluster sizes itself is quite simple, but the crucial question is when to update the

cluster sizes. This could take place after every complete iteration of the algorithm, after the assignment

phase of every data point, or before and after the assignment phase of every data point (in the sense of

removing a data point from its cluster before its assignment starts). None of these approaches is

fully satisfactory: each has shortcomings, such as producing oscillating or overlapping clusters. The most

promising approach, which we chose, is the following. A data point is removed partially from its old

cluster before its assignment; only after its new assignment is it removed completely from its old

cluster and added to its new cluster. In this way, during the assignment of a data point, the point only

partially belongs to its old cluster. To define partially, we introduce the constant c with 0 < c < 1,

which we refer to as the partly remaining fraction of a data point.
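
The partial removal can be illustrated with a minimal sketch of the cluster sizes as they are seen while one point is being assigned. The function name `effective_sizes` and the list-based bookkeeping are assumptions made for illustration, not part of the original algorithm description.

```python
def effective_sizes(sizes, old_cluster, c):
    """Cluster sizes as seen while one data point is being reassigned.

    Hypothetical helper: the point still counts with fraction c
    (0 < c < 1) toward its old cluster, so 1 - c is subtracted there.
    """
    if not 0.0 < c < 1.0:
        raise ValueError("c must satisfy 0 < c < 1")
    seen = [float(n) for n in sizes]
    seen[old_cluster] -= 1.0 - c  # partial removal from the old cluster
    return seen


# A point currently in cluster 0, with c = 0.25:
print(effective_sizes([5, 3, 4], old_cluster=0, c=0.25))  # [4.25, 3.0, 4.0]
```

Once the point has been assigned, the remaining fraction c is removed from the old cluster and the point is added in full to its new cluster, so the stored sizes stay integers between assignments.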

4. Algorithm BKM+

The proposed algorithm is presented in Algorithm 1. We call it balanced k-means revisited (BKM+).

In each iteration of the algorithm, each data point is assigned to the cluster $j$ which minimizes the cost term $\|x_i - c_j\|^2 + p_{now} \cdot n_j$. In this process, the data point is first partly removed from its old cluster (see function RemovePoint), and afterward added to its new cluster and completely removed from its old cluster (see function AddPoint). Directly after the computation of the cost term, the variable $p_{next,i}$, corresponding to $\min_{j_{new} \in \{1,\dots,k\}} \{scale(iter, i, j_{new})\}$, is computed using the function PenaltyNext, which implements Equation 4. If this number is a candidate for $p_{next}$, its value is taken. After all data points are assigned, the penalty term $p_{now}$ is set for the following iteration by multiplying $p_{next}$ by the increasing penalty factor $f$. Afterward, the assignments of the data points start again. The algorithm terminates as soon as the largest cluster is at most $\Delta n_{max}$ data points larger than the smallest cluster.
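
The loop described above can be sketched in plain Python. This is an illustrative sketch under stated assumptions, not the authors' Algorithm 1. Equation 4 is not reproduced in this excerpt, so the switching penalty is assumed to be the value of p at which the cost of a smaller cluster equals the cost of the chosen one. The candidate test `p_now < cand < p_next`, the centroid update after each pass, the fallback when no candidate is found, and the names `bkm_plus_sketch`, `sq_dist`, and `max_iter` are likewise assumptions.

```python
import math


def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((u - v) ** 2 for u, v in zip(a, b))


def bkm_plus_sketch(points, centroids, c=0.15, f=1.01, max_diff=1, max_iter=10_000):
    """Illustrative penalty-based balanced k-means loop (not the paper's code)."""
    k = len(centroids)
    centroids = [list(m) for m in centroids]
    # Assumed initialization: plain nearest-centroid assignment.
    labels = [min(range(k), key=lambda j: sq_dist(x, centroids[j])) for x in points]
    counts = [labels.count(j) for j in range(k)]
    p_now = 0.0
    for _ in range(max_iter):
        # Terminate once the size difference is at most max_diff.
        if max(counts) - min(counts) <= max_diff:
            break
        p_next = math.inf
        for i, x in enumerate(points):
            old = labels[i]
            # RemovePoint: the point keeps only fraction c in its old cluster.
            eff = [float(n) for n in counts]
            eff[old] -= 1.0 - c
            d = [sq_dist(x, m) for m in centroids]
            new = min(range(k), key=lambda j: d[j] + p_now * eff[j])
            # PenaltyNext (assumed form): smallest penalty above p_now at
            # which this point would prefer a smaller cluster.
            for j in range(k):
                if eff[j] < eff[new]:
                    cand = (d[j] - d[new]) / (eff[new] - eff[j])
                    if p_now < cand < p_next:
                        p_next = cand
            # AddPoint: remove completely from old, add to new.
            counts[old] -= 1
            counts[new] += 1
            labels[i] = new
        # Assumed step: recompute centroids after each full pass.
        for j in range(k):
            members = [x for x, lab in zip(points, labels) if lab == j]
            if members:
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
        # Penalty for the next pass; fall back to plain growth if no candidate.
        p_now = f * p_next if math.isfinite(p_next) else f * p_now
    return labels, centroids


pts = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0), (10.0, 0.0), (11.0, 0.0)]
labels, _ = bkm_plus_sketch(pts, [(1.5, 0.0), (10.5, 0.0)], f=1.1)
print(labels)  # [0, 0, 0, 1, 1, 1]
```

In this toy run the left group starts with four points and the right group with two. After one penalty increase, the point at x = 3 moves to the right cluster and the loop stops with sizes 3 and 3.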

4.1. Optimization of parameters c and f

The algorithm contains two parameters that have to be determined: the partly remaining fraction

c, which defines the fraction of a data point that belongs to its old cluster during its assignment, and the

increasing penalty factor f, by which we multiply the minimum penalty needed in the next iteration

to make a data point change to a smaller cluster.

For c, a value between 0.15 and 0.20 is reasonable in every situation. The choice of f is a trade-off

between the clustering quality and the running time: if the focus is on the clustering quality, f should

be chosen as 1.01 or smaller, whereas if the running time is critical, setting f to 1.05 or 1.10 is the

better choice. A compromise is to choose f as a function depending on the number of iterations. In

Applied Computing and Intelligence Volume 3, Issue 2, 145–179

163

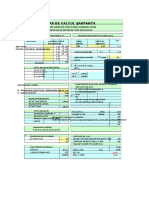

Table 2. Results for the SSE, NMI, and running time if a balanced clustering is required. Here, n denotes the size of the data set, d its dimension, and k the number of clusters sought in the data set.

| Dataset (n, d, k) | Algo | SSE | NMI | Time (s) |
|---|---|---|---|---|
| S1 (5000, 2, 15) | RKM | 1.089e+13 | 0.948 | 0.11 |
| | BKM | 1.100e+13 | 0.946 | 3364.98 |
| | BKM+ | 1.097e+13 | 0.946 | 0.10 |
| | BKM++ | 1.090e+13 | 0.947 | 0.11 |
| S2 (5000, 2, 15) | RKM | 1.428e+13 | 0.921 | 0.12 |
| | BKM | 1.431e+13 | 0.920 | 3504.10 |
| | BKM+ | 1.456e+13 | 0.917 | 0.09 |
| | BKM++ | 1.430e+13 | 0.922 | 0.10 |
| S3 (5000, 2, 15) | RKM | 1.734e+13 | 0.796 | 0.14 |
| | BKM | 1.736e+13 | 0.797 | 12074.14 |
| | BKM+ | 1.736e+13 | 0.798 | 0.07 |
| | BKM++ | 1.734e+13 | 0.797 | 0.08 |
| S4 (5000, 2, 15) | RKM | 1.651e+13 | 0.729 | 0.16 |
| | BKM | 1.652e+13 | 0.728 | 9621.18 |
| | BKM+ | 1.652e+13 | 0.729 | 0.08 |
| | BKM++ | 1.651e+13 | 0.730 | 0.09 |
| A1 (3000, 2, 20) | RKM | 1.221e+10 | 0.984 | 0.08 |
| | BKM | 1.221e+10 | 0.984 | 452.45 |
| | BKM+ | 1.224e+10 | 0.983 | 0.03 |
| | BKM++ | 1.221e+10 | 0.984 | 0.04 |
| A2 (5250, 2, 35) | RKM | 2.037e+10 | 0.989 | 0.30 |
| | BKM | 2.037e+10 | 0.989 | 2188.20 |
| | BKM+ | 2.059e+10 | 0.983 | 0.12 |
| | BKM++ | 2.038e+10 | 0.989 | 0.14 |
| A3 (7500, 2, 50) | RKM | 2.905e+10 | 0.991 | 0.80 |
| | BKM | 2.905e+10 | 0.991 | 7447.09 |
| | BKM+ | 2.931e+10 | 0.986 | 0.40 |
| | BKM++ | 2.906e+10 | 0.991 | 0.42 |
| birch1 (100k, 2, 100) | RKM | 9.288e+13 | 0.989 | 91.68 |
| | BKM | - | - | |
| | BKM+ | 9.299e+13 | 0.984 | 27.29 |
| | BKM++ | 9.288e+13 | 0.989 | 31.38 |

Continued on next page


[Figure 12: two rows of heat maps for data set A3. Top row: MSE (×1e6). Bottom row: time in seconds. Panels from left to right: maximum difference in the cluster sizes ≤1, ≤3, ≤5. Vertical axis: increasing penalty factor f (1.0001, 1.001, 1.01, 1.05, 1.1, 1.2, 1.4). Horizontal axis: partly remaining fraction c.]

Figure 12. Effect of parameter selection on MSE and processing time for data set A3.
