Artificial Intelligence With Respect to Violation of International Human Rights of the LGBTQIA+ Community

Uploaded by parichaya reddy

The document discusses how artificial intelligence used for content moderation can unintentionally restrict the freedom of speech of LGBTQIA+ communities by not understanding context. AI tools trained only on language are unable to discern whether terms like "bitch" or references to gender/sexual identities are being used in an empowering or hateful way. This poses risks to LGBTQIA+ visibility and expression online, potentially having a disempowering impact despite the goal of protecting vulnerable groups from hate speech. The document analyzes how AI has incorrectly flagged non-toxic tweets as offensive due simply to the presence of common words sometimes used by the LGBTQIA+ community.


Copyright: © All Rights Reserved


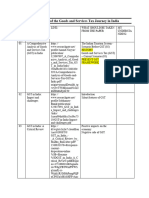

ARTIFICIAL INTELLIGENCE WITH RESPECT TO VIOLATION OF INTERNATIONAL HUMAN RIGHTS OF THE LGBTQIA+ COMMUNITY

1. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3259344

Fighting Hate Speech, Silencing Drag Queens? Artificial Intelligence in Content Moderation and Risks to LGBTQ Voices Online - LGBTQIA+ freedom of speech is being restricted by artificial intelligence.

AI tools developed to analyze text-based content are not yet able to understand context. Unlike other studies of such tools, this article approaches the issue from the perspective of the LGBTQ community to highlight how content moderation technologies could affect LGBTQ visibility.

This is not a malfunction; it follows from how these algorithms work: they make their decisions based on language alone, irrespective of who is speaking and in what context.

This is particularly striking because one of the main reasons behind the development of these tools is to support vulnerable communities by dealing with hate speech targeting such groups. If these tools prevent LGBTQ people from expressing themselves and speaking up against what they themselves consider to be toxic, harmful, or hateful, their net impact may be disempowering rather than helpful (T. Dias Oliva et al., p. 730).

The paper shows that artificial intelligence blocks the use of certain terms, such as terrorism, bitch, and LGBTQ. Often the AI cannot assess the context in which such terms are used, even though LGBTQ people use these words to empower themselves. The paper analyses the toxicity of data taken from Twitter, where some tweets that are not toxic were nevertheless declared toxic or offensive by the artificial intelligence because they contain common words such as bitch, gay, and lesbian.
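The false positives described above can be illustrated with a deliberately naive sketch. This is not the tool examined in the paper, whose internals are not described here; it is a toy flagger that decides on vocabulary alone. The watch list, the function names (`tokenize`, `is_flagged`), and the example posts are all hypothetical and written only for this illustration.

```python
# Toy keyword-based flagger. This is an assumption-laden illustration of
# "deciding on language alone", NOT the system studied in the paper.

# Hypothetical watch list, using terms named in the text above.
FLAGGED_TERMS = {"bitch", "gay", "lesbian"}


def tokenize(text):
    """Lowercase the text and strip common punctuation from each word."""
    return [word.strip(".,!?\"'").lower() for word in text.split()]


def is_flagged(text):
    """Return True if any watch-list term appears, regardless of context."""
    return any(token in FLAGGED_TERMS for token in tokenize(text))


# Hypothetical posts invented for this sketch (not data from the paper).
posts = [
    "Proud to be a gay man and happy to say it out loud!",  # self-affirming
    "I finally came out as lesbian to my family today.",    # self-affirming
    "Nice weather for the parade this weekend.",            # neutral
]

for post in posts:
    print(is_flagged(post), "-", post)
```

Both self-affirming posts are flagged simply because they contain a listed word, while nothing about the speaker or the intent is taken into account. Real moderation tools are far more elaborate than this, but the sketch shows why a decision based on word presence alone can silence the very community it is meant to protect.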

2. https://academic.oup.com/hrlr/article/20/4/607/6023108-
