MyanBERTa: A Pre-trained Language Model for Myanmar

Aye Mya Hlaing
Natural Language Processing Lab.
University of Computer Studies, Yangon
Yangon, Myanmar
ayemyahlaing@ucsy.edu.mm

Win Pa Pa
Natural Language Processing Lab.
University of Computer Studies, Yangon
Yangon, Myanmar
winpapa@ucsy.edu.mm

Abstract: In recent years, BERT-based pre-trained language models have dramatically changed the NLP field because the same pre-trained model can successfully tackle a wide range of NLP tasks. Many studies highlight that language-specific monolingual pre-trained models outperform multilingual models in many downstream NLP tasks. In this paper, we developed a monolingual Myanmar pre-trained model called MyanBERTa on a word-segmented Myanmar dataset and evaluated it on three downstream NLP tasks: named entity recognition (NER), part-of-speech (POS) tagging and word segmentation (WS). The performance of MyanBERTa was compared with the previous pre-trained model MyanmarBERT and M-BERT for the NER and POS tasks, and with the Conditional Random Field (CRF) approach for the WS task. Experimental results show that MyanBERTa consistently outperforms MyanmarBERT, M-BERT and CRF. We release our MyanBERTa model to promote NLP research for the Myanmar language and to serve as a resource for NLP researchers. The MyanBERTa model is available at: http://www.nlpresearch-ucsy.edu.mm/myanberta.html

Keywords: MyanBERTa, Pre-trained Language Model, Named Entity Recognition, Part-of-Speech Tagging, Word Segmentation, Myanmar

I. INTRODUCTION

In recent years, pre-trained language models, especially BERT (Bidirectional Encoder Representations from Transformers) [1], have become extremely popular because the same pre-trained model can successfully tackle a broad set of natural language processing (NLP) tasks. While pre-trained Multilingual BERT (M-BERT) models¹ have a remarkable ability to support low-resource languages, monolingual BERT models can achieve better performance than the multilingual models.

In terms of pre-trained monolingual language models for the Myanmar language, to the best of our knowledge, there are only two published works on monolingual Myanmar language modeling based on BERT, [3] and [4]. The monolingual MyanmarBERT [3] learns the POS tagging task better than M-BERT; however, its effectiveness is less obvious on the NER task compared to M-BERT. In [4], sentence-piece segmentation is applied directly to the raw Myanmar corpus. Intuitively, a pre-trained model based on word-level segmented data might perform better than one based on a raw dataset to which no tokenization has been applied.

Due to the above concerns, we developed a BERT-based language model for the Myanmar language, which we name MyanBERTa. We trained MyanBERTa with a byte-level Byte-Pair Encoding (BPE) vocabulary containing 30K subword units, which is learned after word segmentation of the Myanmar dataset. We evaluated this model on three downstream NLP tasks: named entity recognition (NER), part-of-speech (POS) tagging and word segmentation (WS).

¹ https://github.com/google-research/bert/blob/master/multilingual.md

II. BUILDING MYANBERTA

In this section, we describe the creation of the Myanmar corpus, the model architecture and the training configuration for pretraining MyanBERTa.

A. Myanmar Corpus

Pretraining a BERT language model relies on large plain-text corpora. MyanBERTa is trained on a combination of MyCorpus [3] and Myanmar data collected from blogs and news websites. For the Myanmar language, some preprocessing steps need to be done on the raw data to make effective use of the corpus. Therefore, encoding standardization and word segmentation are applied to the Myanmar corpus to form a word-segmented corpus that may promote the effective use of the pre-trained model on downstream NLP tasks.

Finally, we obtained a word-segmented Myanmar corpus of size 2.1G. It consists of 5.9M sentences with 135M words. The detailed statistics of the Myanmar corpus used for pretraining the language model are shown in Table I.

TABLE I. STATISTICS OF MYANMAR CORPUS

| Source | Number of Sentences | Number of Words | Size |
|---|---|---|---|
| MyCorpus [3] | 3,535,794 | 83 M | 1.3 G |
| News and Blog Websites | 2,456,505 | 53 M | 0.8 G |
| Total | 5,992,299 | 136 M | 2.1 G |

B. Model Architecture

The architecture of MyanBERTa is based on RoBERTa [2], which makes some modifications to BERT pretraining for more robust performance. Due to limited resources and to facilitate comparison with MyanmarBERT [3], we opt to train a single RoBERTa-based model using the original architecture of BERT-Base (L=12, H=768, A=12) with 110M total parameters. The number of layers (i.e., Transformer blocks) is denoted as L, the hidden size as H, and the number of self-attention heads as A. Although the original RoBERTa [2] uses a larger byte-level BPE vocabulary on a dataset without any additional preprocessing or tokenization of the input, we apply a byte-level BPE vocabulary of 30,522 units to the word-tokenized Myanmar corpus.

C. Pretraining

The model was trained for 528K steps using Tesla K80 GPUs. Due to memory constraints, we use a batch size of 8 with a sequence length of 512. Pretraining MyanBERTa takes approximately 6 days. We use Huggingface's transformers [5] for both pre-training and fine-tuning.
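As a rough check on the 110M parameter figure quoted for the BERT-Base configuration in Section II.B, the count can be worked out by hand. The sketch below is illustrative only: L, H and the 30,522-unit vocabulary come from the text, while the 512 positions, 2 segment types, feed-forward size of 4H and the pooler layer are assumptions taken from the original BERT-Base layout, not values stated in this paper. The function name `count_parameters` is hypothetical.

```python
# Rough parameter count for a BERT-Base-style encoder (illustrative sketch).
# From the paper: L=12 layers, H=768 hidden size, 30,522 BPE vocabulary units.
# Assumed (not stated in the paper): 512 positions, 2 segment types,
# feed-forward size 4*H, and a pooler layer, as in the original BERT-Base.

def count_parameters(vocab_size=30522, hidden=768, layers=12,
                     max_positions=512, type_vocab=2, ffn_mult=4):
    ffn = ffn_mult * hidden
    layer_norm = 2 * hidden  # scale and bias vectors

    # Token, position and segment embedding tables plus one LayerNorm.
    embeddings = (vocab_size + max_positions + type_vocab) * hidden + layer_norm

    # Self-attention: query, key, value and output projections (weights + biases).
    # The number of heads A splits H but does not change the parameter count.
    attention = 4 * (hidden * hidden + hidden) + layer_norm

    # Feed-forward block: H -> ffn -> H (weights + biases), then LayerNorm.
    feed_forward = (hidden * ffn + ffn) + (ffn * hidden + hidden) + layer_norm

    # Pooler: one dense layer applied to the [CLS] representation.
    pooler = hidden * hidden + hidden

    return embeddings + layers * (attention + feed_forward) + pooler


total = count_parameters()
print(f"{total:,} parameters (~{total / 1e6:.1f}M)")
```

Under these assumptions the total comes to roughly 109.5M, which is consistent with the rounded 110M quoted above. A RoBERTa-style layout differs slightly in its position and segment embedding tables, so the exact figure for MyanBERTa may differ by a small amount.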

III. EXPERIMENTAL SETUP AND RESULTS TABLE II. PERFORMANCE SCORES ON NER AND POS

The performance of MyanBERT is investigated on three

Pre-trained Models NER (F1) POS (F1)

downstream Myanmar NLP tasks: NER, POS and WS by

using F1 score. M-BERT 93.4% 86.6%

A. Datasets for NER, POS and WS MyanmarBERT [3] 93.3% 91.4%

In fine-tuning our pre-trained MyanBERTa model, MyanBERTa 93.5% 95.1%

Myanmar NE tagged corpus (60,500 sentences) manually

annotated with the predefined NE tags proposed in [6] was

employed for NER task and the publicly available POS dataset TABLE III. PERFORMANCE SCORES ON WS

[7] which consists of 11K sentences for POS tagging. The Models F1

same experimental data setting as [3] was applied for NER and

POS tasks. For WS dataset, the word segmented Myanmar CRF 96.6%

corpus (48,950 sentences) was prepared by applying BIES MyanBERTa 97.3%

(Begin, Inside, End, and Single) scheme in labelling each

syllable in the corpus. In summary, we proved that our pre-trained

MyanBERTa is effective on all three downstream tasks.

B. Fine-tuning MyanBERTa

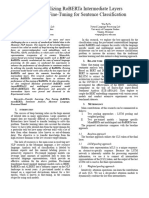

For NER, POS and WS downstream tasks, we fine-tune IV. CONCLUSTION

MyanBERTa for each task and dataset independently as A Myanmar monolingual pre-trained model,

shown in Fig. 1. The task-specific models such as NER, POS MyanBERTa, fine-tuned on three tasks, NER, POS and Word

and WS models are formed by incorporating MyanBERTa segmentation is described in this paper with the purpose of

with one additional output layer. In the figure, E represents publicly available resource releasing for various Myanmar

the input embedding which is the sum of token embeddings, NLP tasks. The detail settings of experiments on pre-training

Ti represents the contextual representation of token i, [CLS] and fine-tuning MyanBERTa are discussed in this paper. The

is the special symbol for classification output. Tag 1 to Tag performance of MyanBERTa is proved on fine-tuning three

N represent the output tags for each Tok 1 to Tok N, downstream tasks, and the absolute improvements over

respectively. previous MyanmarBERT and M-BERT are achieved.

During the fine-tune process, the batch size of 32 with In the future, the pre-trained MyanBERTa can be used to

the maximum sequence length 256 and the Adam optimizer improve other important Myanmar language processing tasks

with the learning rate of 5e-5 was used. We fine-tune in 30 such as Sentiment Analysis, Natural Language Inference,

epochs on each training data and evaluate the task Machine Translation, Automatic speech recognition and also

to benchmark pre-trained Myanmar BERT models with larger

performance on the validation set. And then select the best

dataset.

model checkpoint to report the final result on the test set.

C. Experimental Results REFERENCES

[1] Devlin, Jacob, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova.

In Table II, we can see that fine-tuning MyanBERTa "Bert: Pre-training of deep bidirectional transformers for language

outperforms MyanmarBERT and M-BERT on both NER and understanding." arXiv preprint arXiv:1810.04805 (2018).

POS tasks. Particularly, MyanBERTa achieves better F1 [2] Liu, Yinhan, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi

scores of 93.5% on NER task and 95.1% on POS task which Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer, and Veselin

are 0.2% and 3.7% absolute improvement over Stoyanov. "Roberta: A robustly optimized bert pretraining approach."

arXiv preprint arXiv:1907.11692 (2019).

MyanmarBERT.

[3] Saw Win, Win Pa Pa, "MyanmarBERT:Myanmar Pre-trained

The comparison of pre-trained MyanBERTa model and Language Model using BERT", The 19th IEEE Conference on

CRF model which is the same setting as [8] on WS task is Computer Applications (ICCA), 2020.

shown in Table III. As we can see, MyanBERTa get 0.7% [4] Jiang, Shengyi, Xiuwen Huang, Xiaonan Cai, and Nankai Lin. "Pre-

higher F1 score than the CRF. trained Models and Evaluation Data for the Myanmar Language." In

International Conference on Neural Information Processing, pp. 449-

458. Springer, Cham, 2021.

Tag 1 Tag 2 ... Tag N

[5] Wolf, Thomas, Lysandre Debut, Victor Sanh, Julien Chaumond,

Clement Delangue, Anthony Moi, Pierric Cistac et al. "Huggingface's

C T1 T2 ... TN transformers: State-of-the-art natural language processing." arXiv

preprint arXiv:1910.03771 (2019).

[6] Mo, Hsu Myat, and Khin Mar Soe. "Myanmar named entity corpus and

MyanBERTa its use in syllable-based neural named entity recognition." International

Journal of Electrical & Computer Engineering (2088-8708) 10, no. 2

(2020).

E[CLS] E1 E2 ... EN

[7] Htike, Khin War War, Ye Kyaw Thu, Win Pa Pa, Zuping Zhang,

Yoshinori Sagisaka, and Naoto Iwahashi. "Comparison of six POS

[CLS] Tok 1 Tok 2 ... Tok N tagging methods on 10K sentences Myanmar language (Burmese) POS

tagged corpus." In Proceedings of the CICLING. 2017.

[8] Pa, Win Pa, Ye Kyaw Thu, Andrew Finch, and Eiichiro Sumita. "Word

Input Sentence

boundary identification for Myanmar text using conditional random

Fig. 1. An illustration of fine-tuning MyanBERTa on downstream NLP fields." In International Conference on Genetic and Evolutionary

tasks: NER, POS and WS Computing, pp. 447-456. Springer, Cham, 2015
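The BIES (Begin, Inside, End, and Single) labelling used to prepare the WS dataset in Section III.A can be sketched as follows. This is a minimal illustration, not the authors' preprocessing code: the function name `bies_tags` is hypothetical, the input is assumed to be already split into words and syllables, and the placeholder strings `s1` to `s6` stand in for Myanmar syllables.

```python
# BIES labelling for word segmentation (illustrative sketch).
# Input: a sentence as a list of words, each word a list of syllables.
# Syllable splitting is assumed to have been done beforehand.

def bies_tags(words):
    """Return (syllable, tag) pairs using the Begin/Inside/End/Single scheme."""
    labelled = []
    for syllables in words:
        if len(syllables) == 1:
            # A one-syllable word is tagged Single.
            labelled.append((syllables[0], "S"))
            continue
        last = len(syllables) - 1
        for i, syllable in enumerate(syllables):
            # First syllable is Begin, last is End, anything between is Inside.
            tag = "B" if i == 0 else "E" if i == last else "I"
            labelled.append((syllable, tag))
    return labelled


# Placeholder syllables: a 1-syllable, a 2-syllable and a 3-syllable word.
sentence = [["s1"], ["s2", "s3"], ["s4", "s5", "s6"]]
for syllable, tag in bies_tags(sentence):
    print(syllable, tag)
```

With this labelling, word segmentation becomes a per-syllable tagging problem, so it fits the same token-classification setup used for NER and POS tagging: a word boundary follows every syllable tagged E or S.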
