TCP (Transmission Control Protocol) : Unit - 5 Transport Layer and Congestion

Uploaded by

arijitkar0x7

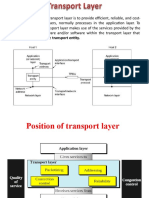

Unit - 5 Transport layer and Congestion

Saturday, April 20, 2024 4:43 PM

TCP (Transmission Control Protocol)

TCP is a core protocol in the Internet Protocol Suite, operating at the transport layer. It is a connection-oriented, reliable, byte-stream protocol designed to provide end-to-end communication between applications running on different hosts across a network.

Features of TCP:

1. Connection-Oriented: TCP establishes a logical end-to-end connection between two hosts before data transmission begins. This connection is set up through a three-way handshake (SYN, SYN-ACK, ACK), so both sides agree on initial sequence numbers before any data flows.

2. Reliable Data Transfer: TCP ensures reliable data delivery through sequencing, acknowledgments, retransmissions, and checksums. It delivers data to the receiving application in the correct order and without duplication, and corrupted segments are detected and resent.

3. Flow Control: TCP implements flow control mechanisms to prevent the sender from overwhelming the receiver with data. The receiver advertises a receive window size, indicating the amount of data it can accept at a time.

4. Congestion Control: TCP includes congestion control algorithms, such as slow start, congestion avoidance, and fast retransmit/fast recovery, to detect and respond to network congestion. These algorithms adjust the sending rate to prevent overwhelming the network and improve overall performance.

5. Byte-Stream Service: TCP treats the data as a continuous stream of bytes, rather than individual packets or messages. The receiving application can read the data as a continuous stream, without worrying about the underlying packet boundaries.

6. Full-Duplex Communication: TCP supports full-duplex communication, allowing data to be transmitted simultaneously in both directions between the connected hosts.

7. Data Segmentation and Reassembly: TCP divides the application data into segments, which are then encapsulated into IP packets for transmission over the network. At the receiving end, TCP reassembles the segments into the original byte stream.

8. Multiplexing and Demultiplexing: TCP allows multiple applications on a single host to communicate concurrently by using port numbers to identify and differentiate between connections.

9. Error Detection and Recovery: TCP uses checksums and sequence numbers to detect corrupted or missing data. The receiver discards bad segments without acknowledging them, and the sender retransmits anything that is not acknowledged, ensuring reliable data delivery.

10. Connection Termination: TCP provides a graceful connection termination process, ensuring that all data is reliably delivered before closing the connection.
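The checksum mentioned in the features above can be illustrated with a minimal sketch of the 16-bit one's-complement Internet checksum. The function names `internet_checksum` and `verify` are illustrative, and the sketch leaves out the pseudo-header that real TCP also includes in the sum.

```python
def internet_checksum(data: bytes) -> int:
    """16-bit one's-complement checksum over a byte string."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length input with a zero byte
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]  # add each big-endian 16-bit word
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)  # fold carries back into 16 bits
    return ~total & 0xFFFF


def verify(data: bytes, checksum: int) -> bool:
    """Receiver-side check: data plus its checksum must sum to all ones."""
    if len(data) % 2:
        data += b"\x00"
    return internet_checksum(data + checksum.to_bytes(2, "big")) == 0


segment = bytes.fromhex("0001f203f4f5f6f7")
checksum = internet_checksum(segment)
print(hex(checksum), verify(segment, checksum))
```

A receiver that sees `verify(...)` fail would silently drop the segment; the missing acknowledgment is what eventually triggers the sender's retransmission.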

Advantages of TCP:

• Reliable end-to-end data transfer

• Flow control and congestion control mechanisms

• Guaranteed in-order delivery of data

• Connection-oriented communication for consistent data streams

• Error detection and recovery mechanisms

Disadvantages of TCP:

• Additional overhead due to connection establishment, acknowledgments, and retransmissions

• Potential for head-of-line blocking, where a lost or delayed segment holds back delivery of all data behind it, even data that has already arrived

• Added latency from connection setup, acknowledgments, and retransmissions, which limits performance for applications that need low delay

TCP is widely used for applications that require reliable data transfer, such as web browsing, file transfers, email, and remote access services. It provides robust mechanisms for ensuring data integrity and reliability, making it a crucial protocol for many internet applications and services.

UDP (User Datagram Protocol)

UDP is a core protocol in the Internet Protocol Suite, operating at the transport layer. It is a connectionless, unreliable, datagram-oriented protocol designed for efficient data transfer in scenarios where reliability is not critical or can be handled by the application layer.

Features of UDP:

1. Connectionless: UDP is a connectionless protocol, meaning that it does not establish a dedicated end-to-end connection between the communicating hosts before data transmission. Each UDP datagram is treated independently, without any prior handshaking or connection establishment.

2. Unreliable Data Transfer: UDP provides no guaranteed delivery, acknowledgments, or retransmissions. Its only error check is a checksum that lets the receiver discard corrupted datagrams. Datagrams sent via UDP may arrive out of order, be duplicated, or go missing without any notification.

3. Datagram-Oriented: UDP treats data as individual, self-contained datagrams or packets, rather than a continuous stream of bytes. Each datagram is independent and contains source and destination port numbers for multiplexing and demultiplexing.

4. No Flow Control or Congestion Control: UDP does not implement any flow control or congestion control mechanisms. It is the responsibility of the application layer or higher-level protocols to handle these aspects, if required.

5. Low Overhead: UDP has a simple header structure and lacks the additional overhead associated with connection establishment, acknowledgments, and retransmissions found in TCP. This makes it more efficient for applications that prioritize speed over reliability.

6. Multiplexing and Demultiplexing: Similar to TCP, UDP uses port numbers to identify and differentiate between different applications or services running on the same host.

7. Lightweight and Fast: Due to its simplicity and lack of reliability mechanisms, UDP is generally faster and more efficient than TCP, making it suitable for applications that require low latency and high throughput.

8. Broadcast and Multicast Support: UDP supports broadcasting and multicasting, allowing data to be sent to multiple destinations simultaneously, making it useful for applications like video streaming, online gaming, and file distribution.
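To make the "simple header" and port-based demultiplexing concrete, here is a minimal sketch that packs the four 16-bit UDP header fields (source port, destination port, length, checksum) and dispatches a datagram by destination port. The helper names `build_datagram` and `demultiplex` and the `handlers` table are hypothetical, and the checksum is left at zero (meaning "not computed" for UDP over IPv4) to keep the example short.

```python
import struct

# Four unsigned 16-bit fields in network byte order: 8 bytes in total.
UDP_HEADER = struct.Struct("!HHHH")


def build_datagram(src_port: int, dst_port: int, payload: bytes) -> bytes:
    """Prefix the payload with a UDP-style header (checksum left as 0)."""
    length = UDP_HEADER.size + len(payload)
    return UDP_HEADER.pack(src_port, dst_port, length, 0) + payload


def demultiplex(datagram: bytes, handlers: dict):
    """Hand the payload to whichever handler is registered on the destination port."""
    src_port, dst_port, length, _checksum = UDP_HEADER.unpack_from(datagram)
    payload = datagram[UDP_HEADER.size:length]
    handler = handlers.get(dst_port)
    return handler(src_port, payload) if handler else None


handlers = {53: lambda src, data: ("dns", src, data)}
print(demultiplex(build_datagram(5000, 53, b"query"), handlers))
```

Each datagram carries everything needed to deliver it, which is why no connection state is required on either host.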

Advantages of UDP:

• Low overhead and efficient data transfer

• Suitable for applications that prioritize speed over reliability

• Ideal for time-sensitive applications like video streaming, online gaming, and voice over IP (VoIP)

• Supports broadcasting and multicasting

Disadvantages of UDP:

• No guaranteed delivery, and no integrity protection beyond a basic checksum

• No flow control or congestion control mechanisms

• Applications need to implement their own reliability and error handling mechanisms

• Potential for packet loss or out-of-order delivery

UDP is commonly used in applications where some data loss is acceptable or can be handled by the application layer, such as video streaming, online gaming, Domain Name System (DNS) queries, and real-time multimedia applications. It provides a simple and efficient way to transmit data without the overhead of TCP's reliability mechanisms, making it suitable for scenarios where speed and low latency are priorities.

Congestion

Congestion in computer networks refers to the state where the demand for network resources exceeds the available capacity, resulting in excessive delays, packet losses, and poor overall performance.

Causes of Congestion:

1. Insufficient Bandwidth: When the amount of traffic on a network link or path exceeds the available bandwidth, congestion can occur. This can happen due to an increase in the number of users, applications with high bandwidth demands, or inefficient bandwidth allocation.

2. Bursty Traffic: Some applications, such as file transfers or multimedia streaming, generate traffic in bursts, which can lead to temporary congestion if the network resources are not capable of handling these bursts effectively.

3. Broadcast Storms: In networks with broadcast or multicast traffic, excessive broadcast or multicast packets can cause congestion by overwhelming network devices and consuming significant bandwidth.

4. Bottlenecks: Congestion can occur at specific points in the network where there are bottlenecks, such as slower links, overloaded routers, or mismatched interface speeds between network components.

5. Inadequate Buffering: Insufficient buffer space in network devices like routers or switches can lead to packet drops and congestion when traffic bursts exceed the available buffer capacity.

6. Network Attacks: Malicious network attacks, such as Denial of Service (DoS) or Distributed Denial of Service (DDoS) attacks, can intentionally generate excessive traffic, leading to congestion and disruption of network services.

Effects of Congestion:

1. Increased Latency: Congestion can cause significant delays in packet delivery, resulting in higher latency and poor performance for time-sensitive applications like Voice over IP (VoIP) or online gaming.

2. Packet Loss: When network devices become overwhelmed with traffic, they may start dropping packets due to buffer overflows or resource exhaustion, leading to data loss and retransmissions.

3. Throughput Degradation: Congestion can reduce the overall throughput of the network, as packets experience longer queuing delays, retransmissions, and increased contention for limited resources.

4. Quality of Service (QoS) Degradation: Applications with specific QoS requirements, such as voice or video streaming, may experience poor quality or service disruptions due to congestion-induced packet loss and delay.

5. Network Instability: Severe congestion can lead to network instability, where routing protocols or other network mechanisms may fail to operate correctly, potentially causing further disruptions or even network outages.

6. Application Performance Issues: Congestion can negatively impact the performance of applications that rely on network communication, leading to slow response times, incomplete data transfers, or application failures.

To mitigate congestion and its effects, network administrators employ various techniques, such as bandwidth management, traffic shaping, load balancing, QoS mechanisms, and network capacity planning. Additionally, congestion control algorithms, such as those implemented in TCP, aim to detect and respond to congestion by adjusting the transmission rate and preventing further congestion buildup.

Congestion Control Techniques

Open Loop Techniques: These techniques try to prevent or avoid congestion by making decisions upfront, without considering the current state of the network. The open loop algorithms covered here are:

1. Leaky Bucket Algorithm

2. Token Bucket Algorithm

Closed Loop Techniques: These techniques allow the network to enter a congested state, detect the congestion, and then take corrective measures. The closed loop techniques covered here are:

1. Admission Control

2. Choke Packets

3. Hop-by-Hop Choke Packets

4. Load Shedding

5. Slow Start (a proactive technique)

Closed loop algorithms can be further divided into explicit feedback algorithms (like Choke Packets) and implicit feedback algorithms (like Slow Start, where the source deduces congestion based on local observations).

Leaky Bucket Algorithm:

The leaky bucket algorithm is an open-loop congestion control technique that works on the principle of a metaphorical bucket with a small hole at the bottom. The bucket represents a buffer, and the hole represents the network interface's constant output rate. Here's how it works:

1. When a host has a packet to send, the packet is placed into the bucket (buffer).

2. The bucket has a fixed capacity, and any packets that arrive when the bucket is full are discarded.

3. The bucket leaks at a constant rate, meaning that the network interface transmits packets at a constant rate, determined by the size of the hole.

4. This algorithm converts bursty traffic from the host into a uniform stream of packets.

The implementation of the leaky bucket algorithm typically involves a finite queue that outputs packets at a fixed rate. If there is room in the queue, incoming packets are queued; otherwise, they are discarded.
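The finite-queue description can be sketched as a small discrete-time simulation. This is only an illustration under simplifying assumptions: time advances in ticks, every packet has the same size, and the function name `leaky_bucket` and its parameters are hypothetical.

```python
def leaky_bucket(arrivals, capacity, rate):
    """Simulate a leaky bucket over discrete ticks.

    arrivals: number of packets arriving in each tick (assumed input format)
    capacity: maximum number of packets the bucket (queue) can hold
    rate:     packets transmitted per tick (the size of the "hole")
    Returns (packets sent in each tick, total packets dropped).
    """
    queued = 0
    dropped = 0
    sent_per_tick = []
    for burst in arrivals:
        accepted = min(burst, capacity - queued)  # only what still fits in the bucket
        dropped += burst - accepted               # overflow is discarded
        queued += accepted
        sent = min(rate, queued)                  # constant-rate leak
        queued -= sent
        sent_per_tick.append(sent)
    return sent_per_tick, dropped


# A burst of 5 packets into a bucket of capacity 4, draining 1 packet per tick.
print(leaky_bucket([5, 0, 0, 0, 0, 0], capacity=4, rate=1))
```

The burst leaves the bucket as a steady one-packet-per-tick stream, and the packet that did not fit is lost.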

Advantages:

• Simple implementation

• Enforces a constant output rate

• Prevents bursts from overwhelming the network

Disadvantages:

• Inflexible, as it enforces a rigid output pattern

• Excess packets are discarded, leading to potential data loss

• Does not adapt to network conditions or available bandwidth

Token Bucket Algorithm:

The token bucket algorithm is an enhancement over the leaky bucket algorithm, designed to provide more flexibility and better utilize available bandwidth. It works on the concept of a bucket that holds tokens, which represent permission to transmit packets.

1. Tokens are generated at regular intervals and added to the bucket.

2. The bucket has a maximum capacity, limiting the number of tokens it can hold.

3. When a packet is ready to be sent, a token is removed from the bucket, and the packet is transmitted.

4. If there are no tokens in the bucket, the packet cannot be sent and must wait until tokens become available.

The token bucket algorithm allows bursty traffic to be transmitted as long as there are tokens available in the bucket. However, the size of a burst is limited by the number of tokens in the bucket at that moment.
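The token rules above can be sketched in the same discrete-time style. The assumptions are that one token permits one packet, tokens are added once per tick, and waiting packets are held in an unbounded backlog; the name `token_bucket` and its parameters are illustrative.

```python
def token_bucket(arrivals, capacity, fill_rate, tokens=0):
    """Simulate a token bucket over discrete ticks.

    arrivals:  number of packets arriving in each tick (assumed input format)
    capacity:  maximum number of tokens the bucket can hold
    fill_rate: tokens added at the start of each tick
    tokens:    tokens in the bucket before the first tick
    Returns (packets sent in each tick, packets still waiting at the end).
    """
    backlog = 0
    sent_per_tick = []
    for burst in arrivals:
        tokens = min(capacity, tokens + fill_rate)  # refill, capped at capacity
        backlog += burst
        sent = min(backlog, tokens)                 # one token per packet
        tokens -= sent
        backlog -= sent
        sent_per_tick.append(sent)
    return sent_per_tick, backlog


# Three idle ticks save up 3 tokens, so a burst of 5 sends 3 packets at once.
print(token_bucket([0, 0, 0, 5, 0, 0], capacity=3, fill_rate=1))
```

Idle periods accumulate tokens, so part of a later burst goes out immediately while the rest is paced at the fill rate.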

Advantages:

• Flexibility: Allows for bursts of traffic when tokens are available, leading to better network utilization.

• Burst Tolerance: Can handle short bursts of traffic more effectively by accumulating tokens during periods of low traffic.

• Fairness: Regulates traffic flow based on tokens, ensuring fairness among different traffic sources.

• Efficient Bandwidth Utilization: Can utilize available bandwidth more efficiently by transmitting bursts when possible.

Disadvantages:

• More complex implementation compared to the leaky bucket algorithm.

• Requires careful configuration of token generation rate and bucket size to balance burst tolerance and fairness.

Comparison and Superiority:

While both algorithms aim to regulate traffic flow and prevent congestion, the token bucket algorithm is generally considered superior to the leaky bucket algorithm for several reasons:

1. Flexibility: The token bucket algorithm allows for more flexibility in handling bursty traffic, whereas the leaky bucket algorithm enforces a rigid pattern at the output stream, regardless of the input pattern.

2. Efficiency: The token bucket algorithm can utilize the available bandwidth more efficiently by allowing bursts of traffic when tokens are available, leading to better network utilization.

3. Burst Tolerance: The token bucket algorithm can handle short bursts of traffic more effectively, as it allows the accumulation of tokens during periods of low traffic, which can be used to transmit bursts later.

4. Fairness: By regulating the traffic flow based on tokens, the token bucket algorithm ensures fairness among different traffic sources, preventing any single source from monopolizing the available bandwidth.

However, it's important to note that the choice between these algorithms depends on the specific requirements and constraints of the network environment. In scenarios where a strict, constant rate of traffic is desired, the leaky bucket algorithm may be more suitable, while the token bucket algorithm is generally preferred when dealing with bursty traffic and the need for more flexible bandwidth utilization.

The token bucket algorithm is widely used in various networking applications, such as traffic shaping, rate limiting, and quality of service (QoS) mechanisms, due to its ability to provide controlled bursts while maintaining overall bandwidth constraints.

[Figures: Token bucket; Leaky bucket]

Closed loop congestion control techniques

Admission Control:

Admission control is a closed-loop congestion control technique used in virtual circuit networks. It involves regulating the establishment of new connections based on the current state of the network. Here's how it works:

1. The network constantly monitors its resources and utilization levels.

2. When congestion is detected, the network can take one of the following actions:

○ Deny new connections: No new virtual circuits or connections are established until the congestion subsides. This approach is commonly used in telephone networks, where new calls are blocked when the exchange is overloaded.

○ Careful routing: New connections are allowed, but they are routed carefully to avoid congested areas or routers within the network.

○ Negotiation: During connection setup, the host specifies its traffic requirements (volume, quality of service, maximum delay, etc.), and the network reserves the necessary resources along the path before allowing the actual data transfer.

Admission control ensures that new connections are only admitted if the network has sufficient resources to accommodate them without degrading the performance of existing connections.
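The negotiation variant can be sketched as a check-then-reserve step over the links of a path. The link names, the bandwidth units, and the function `admit` are hypothetical; a real network would also release reservations when a connection closes.

```python
def admit(path, demand, capacity, reserved):
    """Admit a connection only if every link on its path has enough spare capacity.

    path:     list of link names the new connection would traverse
    demand:   bandwidth requested by the connection (arbitrary units)
    capacity: dict mapping link name to total capacity
    reserved: dict mapping link name to bandwidth already reserved (updated in place)
    """
    if any(reserved.get(link, 0) + demand > capacity[link] for link in path):
        return False  # deny: admitting would overload at least one link
    for link in path:
        reserved[link] = reserved.get(link, 0) + demand  # reserve along the whole path
    return True


capacity = {"A-B": 10, "B-C": 5}
reserved = {}
print(admit(["A-B", "B-C"], 4, capacity, reserved))  # fits on both links
print(admit(["A-B", "B-C"], 4, capacity, reserved))  # would exceed B-C
```

The check runs over the whole path before anything is reserved, so a rejected request leaves existing reservations untouched.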

Choke Packets:

Choke packets are a closed-loop congestion control technique used in both virtual circuit and datagram networks. The technique involves sending explicit feedback to the source when congestion is detected.

1. Each router monitors its resources and output line utilization.

2. If the utilization of an output line exceeds a predefined threshold, it enters a "warning" state.

3. The router sends a choke packet back to the source of the traffic causing the congestion.

4. The original packet is tagged (a bit is set in the header) to prevent other routers along the path from generating additional choke packets for the same packet.

5. Upon receiving a choke packet, the source reduces its traffic to the specified destination by a fixed percentage (e.g., 50%).

6. If subsequent choke packets are received for the same destination, the source further reduces its traffic by a smaller percentage (e.g., 25%).

The choke packet technique provides explicit feedback to the source, allowing it to adjust its transmission rate and alleviate congestion.
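A minimal sketch of both sides of this exchange follows. It reads the example percentages literally (cut the rate by 50% on the first choke packet, then by 25% on each later one); the 0.8 utilization threshold, the class name `Source`, and the function `should_send_choke` are assumptions made for illustration.

```python
def should_send_choke(utilization, threshold=0.8):
    """Router side: enter the warning state when an output line is too busy."""
    return utilization > threshold


class Source:
    """Sender side: lowers its rate each time a choke packet arrives."""

    def __init__(self, rate):
        self.rate = rate
        self.chokes_seen = 0

    def on_choke_packet(self):
        # First choke packet: cut by 50%. Later ones: cut by a further 25%.
        cut = 0.50 if self.chokes_seen == 0 else 0.25
        self.rate *= 1 - cut
        self.chokes_seen += 1
        return self.rate


source = Source(rate=100.0)
if should_send_choke(utilization=0.9):
    print(source.on_choke_packet())  # rate after the first choke packet
    print(source.on_choke_packet())  # rate after a second choke packet
```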

Hop-by-Hop Choke Packets:

Hop-by-Hop choke packets are an advancement over the traditional choke packet technique. They are designed to address the issue of high-speed, long-distance links, where sending a choke packet all the way back to the source may not be effective, as many packets may have already been transmitted before the choke packet reaches the source.

Here's how Hop-by-Hop choke packets work:

1. When a router detects congestion, it sends a choke packet back towards the source.

2. As the choke packet traverses the path back to the source, each intermediate router along the way curtails the traffic flow between itself and the next upstream router.

3. This immediate reduction in traffic flow at each hop allows for a more rapid response to congestion, as opposed to waiting for the choke packet to reach the source.

4. Intermediate routers must dedicate additional buffers to accommodate the incoming traffic until the upstream routers reduce their transmission rates.

The Hop-by-Hop choke packet algorithm provides a faster response to congestion by gradually reducing the traffic flow at each hop, rather than waiting for the choke packet to reach the source before any action is taken.

Both the choke packet and Hop-by-Hop choke packet techniques are closed-loop congestion control mechanisms that rely on explicit feedback to the source or intermediate routers to adjust the traffic flow and alleviate congestion.

Limitations of Choke Packets and Hop-by-Hop Choke Packets:

While choke packets and Hop-by-Hop choke packets are effective congestion control techniques, they have some limitations:

1. Overhead: Sending choke packets introduces additional overhead and traffic into the network, which can further contribute to congestion, especially in cases of severe congestion.

2. Delayed Response: In large networks with high propagation delays, the time it takes for choke packets to reach the source or intermediate routers can result in a delayed response to congestion.

3. Granularity: Choke packets typically instruct sources or routers to reduce their transmission rates by a fixed percentage, which may not be optimal for all scenarios. More granular control over the rate adjustment may be desirable.

4. Synchronization: If multiple sources receive choke packets simultaneously, they may all reduce their transmission rates in synchrony, leading to oscillations in network utilization and potential instability.

5. Fairness: The choke packet mechanism does not inherently ensure fairness among different traffic flows, as sources may respond differently to choke packets based on their individual implementations.

6. Complexity: Implementing Hop-by-Hop choke packets can be more complex than traditional choke packets, as it requires coordination among multiple routers along the path.

Despite these limitations, choke packets and Hop-by-Hop choke packets remain useful tools in congestion control, especially in scenarios where explicit feedback is preferred over implicit congestion detection mechanisms.

Load Shedding:

Load shedding is a simple and effective closed-loop congestion control technique. It involves selectively dropping packets when congestion is detected in the network.

1. When a router or network device detects congestion, it starts dropping packets from the incoming traffic.

2. There are different methods for selecting which packets to drop:

○ Random Drop: Packets are randomly selected and dropped, regardless of their priority or importance.

○ Priority-based Drop: Packets are marked with different priority levels by the sender, and the router drops packets from lower priority classes first, preserving higher priority traffic.

3. By dropping packets, the router or network device reduces the overall load on the network, alleviating congestion.

Load shedding is a reactive technique that is triggered when congestion is detected. It is a simple and effective way to reduce network load, but it comes at the cost of potential data loss and reduced throughput for affected flows.
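The two drop policies can be compared with a short sketch. Packets are modeled as hypothetical `(priority, name)` tuples where a larger number means higher priority, and `capacity` stands for how many packets the router can still forward; none of these names come from a real router API.

```python
import random


def shed_load(packets, capacity, policy="priority", rng=None):
    """Keep at most `capacity` packets and drop the rest.

    packets: list of (priority, name) tuples, higher priority = more important
    policy:  "priority" drops the lowest priorities first, "random" drops at random
    Returns (kept, dropped), both in original arrival order.
    """
    if len(packets) <= capacity:
        return list(packets), []
    indices = range(len(packets))
    if policy == "priority":
        # Stable sort: among equal priorities, earlier arrivals are kept first.
        ranked = sorted(indices, key=lambda i: -packets[i][0])
        keep = set(ranked[:capacity])
    else:
        rng = rng or random.Random()
        keep = set(rng.sample(indices, capacity))
    kept = [p for i, p in enumerate(packets) if i in keep]
    dropped = [p for i, p in enumerate(packets) if i not in keep]
    return kept, dropped


queue = [(1, "a"), (3, "b"), (2, "c"), (3, "d")]
print(shed_load(queue, capacity=2))
```

With the priority policy the two priority-3 packets survive; with `policy="random"` any two packets may survive, regardless of importance.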

Flow Control vs Congestion Control:

While flow control and congestion control are related concepts, they serve different purposes and operate at different levels. Let's explore the differences between the two in detail.

Flow Control:

Flow control is a mechanism that regulates the transmission of data between two directly communicating devices or nodes in a network. It is a point-to-point mechanism that operates at the data link or transport layer of the OSI model. The primary goal of flow control is to prevent the sender from overwhelming the receiver with data, ensuring that the receiver's buffer does not overflow.

Flow control involves the following key aspects:

• It is implemented through feedback mechanisms, where the receiver informs the sender about its ability to receive data.

• It is local in nature, concerning only the sender and receiver.

• It deals with the rate of data transfer between the two nodes to prevent buffer overflow or underflow.

• Examples of flow control mechanisms include stop-and-wait, sliding window, and credit-based approaches.
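The receiver-driven feedback described above can be sketched as follows. The `Receiver` class and `send` function are hypothetical stand-ins for a receive buffer and a sender that obeys the advertised window; sizes are in bytes.

```python
class Receiver:
    """Receive buffer that advertises how much free space it has."""

    def __init__(self, buffer_size):
        self.buffer_size = buffer_size
        self.buffered = 0

    def window(self):
        """Feedback to the sender: bytes that can still be accepted."""
        return self.buffer_size - self.buffered

    def receive(self, nbytes):
        if nbytes > self.window():
            raise ValueError("sender exceeded the advertised window")
        self.buffered += nbytes

    def app_read(self, nbytes):
        """The application consuming data frees buffer space."""
        self.buffered -= min(nbytes, self.buffered)


def send(receiver, pending):
    """Send as much pending data as the advertised window allows."""
    nbytes = min(pending, receiver.window())
    receiver.receive(nbytes)
    return nbytes


rx = Receiver(buffer_size=1000)
print(send(rx, 1500))  # limited by the window
print(send(rx, 500))   # window is closed until the application reads
rx.app_read(400)
print(send(rx, 500))   # window reopened by the read
```

Only the two endpoints are involved here, which is what makes flow control local.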

Congestion Control:

Congestion control, on the other hand, is a mechanism that operates at a broader network level, addressing the issue of managing traffic flow across the entire network or internet. Its primary objective is to prevent or mitigate the occurrence of congestion, which can lead to performance degradation, packet loss, and potential network collapse.

Congestion control involves the following key aspects:

• It is a global mechanism that considers the overall network conditions and resource utilization.

• It aims to maintain the total traffic in the network below the level at which performance deteriorates significantly.

• It involves monitoring network resources and adjusting the transmission rates of sources accordingly.

• Examples of congestion control techniques include leaky bucket, token bucket, choke packets, and TCP congestion control mechanisms like Slow Start and Congestion Avoidance.

While flow control focuses on the sender-receiver relationship and buffer management, congestion control takes a broader view, considering the entire network path and resource availability. Effective congestion control mechanisms often rely on feedback from the network, such as packet loss or delay indicators, to detect and respond to congestion.

It's important to note that both flow control and congestion control are essential for ensuring reliable and efficient data communication in computer networks. Flow control operates at a local level, while congestion control operates at a global level, coordinating the overall traffic flow across the network.

In practice, flow control and congestion control mechanisms often work together to achieve optimal network performance. For example, in the Transmission Control Protocol (TCP), flow control is implemented through the advertised receive window, while congestion control is achieved through mechanisms like Slow Start, Congestion Avoidance, and Fast Retransmit/Fast Recovery.

Slow Start (a proactive technique):

Slow Start is a proactive congestion control technique used in the Transmission Control Protocol (TCP). It is designed to prevent the sudden introduction of a large amount of traffic into the network, which could aggravate existing congestion.

Here's how Slow Start works:

1. When a new TCP connection is established, the sender is initially restricted to sending only a small amount of data, typically one maximum segment size (MSS).

2. For each acknowledgment (ACK) received from the receiver, the sender is allowed to increase the amount of data it can transmit by one MSS.

3. This process continues, with the sender's congestion window (the amount of data it can transmit) roughly doubling every round-trip time (RTT), an exponential increase, until a threshold is reached or congestion is detected.

4. If congestion is detected (e.g., through packet loss or timeouts), the congestion window is reduced and the sender enters a congestion avoidance phase, where the window is increased more gradually to probe for available bandwidth.

The Slow Start algorithm ensures that new TCP connections do not abruptly introduce a large amount of traffic into the network, which could exacerbate existing congestion. Instead, it gradually increases the transmission rate until it reaches an appropriate level or encounters congestion.

Slow Start is a proactive technique because it anticipates and tries to prevent congestion from occurring in the first place, rather than reacting to it after it has already happened. It is an essential part of TCP's congestion control mechanisms and contributes to the overall stability and fairness of the Internet.

TCP congestion control

TCP congestion control refers to the mechanism that prevents congestion from happening or removes it after congestion takes place. When congestion takes place in the network, TCP handles it by reducing the size of the sender’s window. The window size of the sender is determined by the following two factors:

• Receiver window size

• Congestion window size

Receiver Window Size

It specifies how many bytes the receiver can accept before the sender must wait for an acknowledgment.

Things to remember for receiver window size:

1. The sender should not send more data than the receiver window allows.

2. If the sender sends more data than fits in the receiver’s window, the excess TCP segments are dropped and must be retransmitted.

3. Hence the sender should always keep the amount of unacknowledged data less than or equal to the size of the receiver’s window.

4. The receiver advertises its window size to the sender in the Window field of the TCP header.

Congestion Window

It is a state variable of TCP that limits the amount of data the sender can put into the network before receiving an acknowledgment.

Following are the things to remember for the congestion window:

1. To calculate the size of the congestion window, different variants of TCP and methods are used.

2. Only the sender knows the congestion window and its size and it is not sent over the link or network. The formula for determining the sender’s window size is:

Sender window size = Minimum (Receiver window size, Congestion window size)
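As a small worked example of this formula, the sketch below computes the effective window and how much more data may be sent given what is already unacknowledged. The function names and the byte values are illustrative only.

```python
def sender_window(rwnd, cwnd):
    """Effective sender window: the smaller of the two limits, in bytes."""
    return min(rwnd, cwnd)


def can_send(rwnd, cwnd, bytes_in_flight):
    """Bytes the sender may still transmit without exceeding the window."""
    return max(0, sender_window(rwnd, cwnd) - bytes_in_flight)


# The network is the bottleneck here: cwnd is smaller than rwnd.
print(sender_window(rwnd=65535, cwnd=14600))
# The receiver is the bottleneck here: rwnd is smaller than cwnd.
print(can_send(rwnd=8000, cwnd=14600, bytes_in_flight=5000))
```

Whichever limit is smaller governs, so a slow receiver and a congested path both throttle the sender through the same mechanism.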

1. Slow Start:

The Slow Start phase is the initial phase of TCP's congestion control algorithm. It is designed to gradually increase the transmission rate until it reaches an optimal level or encounters congestion. The steps involved in the Slow Start phase are:

• The sender initially sets the congestion window (cwnd) to a small value, typically one maximum segment size (MSS).

• For each acknowledgment (ACK) received from the receiver, the sender increases the cwnd by one MSS.

• This process doubles the congestion window roughly every RTT, an exponential increase that continues until cwnd reaches a threshold called the slow start threshold (ssthresh), at which point the Congestion Avoidance phase begins.

• If congestion is detected first (through packet loss or timeout), the Slow Start phase ends early and ssthresh is lowered.
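The exponential growth in these steps can be traced with a short sketch that advances one round-trip time at a time. It assumes every segment in a window is acknowledged, one ACK per full segment, and it caps cwnd at ssthresh as a simplification; the function name `slow_start` is illustrative.

```python
def slow_start(mss, ssthresh, max_rtts):
    """Return the cwnd value (in bytes) at the start of each RTT during Slow Start."""
    cwnd = mss
    history = [cwnd]
    for _ in range(max_rtts):
        if cwnd >= ssthresh:
            break                                # threshold reached: Slow Start is over
        acks = cwnd // mss                       # one ACK per full segment in flight
        cwnd = min(cwnd + acks * mss, ssthresh)  # +1 MSS per ACK doubles cwnd per RTT
        history.append(cwnd)
    return history


print(slow_start(mss=1000, ssthresh=8000, max_rtts=10))
```

Adding one MSS per ACK looks modest, but because the number of ACKs per RTT equals the number of segments sent, the window doubles each round.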

2. Congestion Avoidance:

The Congestion Avoidance phase is designed to prevent further congestion by increasing the congestion window more gradually than in the Slow Start phase. The steps involved in the Congestion Avoidance phase are:

• When congestion is detected, the ssthresh is set to half the current cwnd value.

• The cwnd is also set to the new ssthresh value. (After a retransmission timeout, cwnd is instead reset to one MSS and Slow Start runs again until cwnd reaches ssthresh.)

• For each RTT in which all segments are acknowledged, the cwnd is increased by about 1 MSS (roughly MSS × MSS / cwnd per ACK).

• This results in a linear increase in the congestion window, allowing the sender to probe for additional available bandwidth cautiously.

• If congestion is detected again, ssthresh and cwnd are reduced again and the linear increase resumes from the new values.
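A minimal sketch of this additive-increase, multiplicative-decrease behavior is shown below. It uses the common per-ACK increment of MSS × MSS / cwnd, which adds up to roughly one MSS per round-trip time, and assumes one ACK per full segment with no losses during the traced rounds. The function names are illustrative, and the floor of 2 × MSS on ssthresh is an assumption borrowed from the Fast Retransmit formula.

```python
def congestion_avoidance(cwnd, mss, rtts):
    """Trace cwnd (in bytes) over several loss-free RTTs of Congestion Avoidance."""
    history = [cwnd]
    for _ in range(rtts):
        acks = int(cwnd // mss)       # ACKs arriving during this RTT
        for _ in range(acks):
            cwnd += mss * mss / cwnd  # small per-ACK step, about 1 MSS per RTT in total
        history.append(cwnd)
    return history


def on_congestion(cwnd, mss):
    """Multiplicative decrease: halve the window, never below 2 * MSS (assumed floor)."""
    ssthresh = max(cwnd // 2, 2 * mss)
    return ssthresh, ssthresh         # (new ssthresh, new cwnd)


trace = congestion_avoidance(cwnd=8000, mss=1000, rtts=3)
print([round(value) for value in trace])
print(on_congestion(cwnd=16000, mss=1000))
```

In the same RTT, Slow Start would have added a full 8000 bytes to an 8000-byte window; here the growth is just under 1000 bytes, which is the linear probing the steps describe.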

3. Fast Retransmit:

The Fast Retransmit phase is triggered when the sender receives duplicate ACKs from the receiver, indicating that some segments have been lost. The steps involved in the Fast Retransmit phase are:

• If the sender receives three duplicate ACKs, it assumes that a segment has been lost.

• The sender retransmits the missing segment without waiting for a timeout.

• The ssthresh is set to max(FlightSize / 2, 2 * MSS), where FlightSize is the amount of outstanding data in the network.

• The cwnd is set to the new ssthresh value plus 3 * MSS.

• The Fast Recovery phase is then entered.

4. Fast Recovery:

The Fast Recovery phase is designed to quickly recover from the detected packet loss and continue data transmission without going through the Slow Start phase again. The steps involved in the Fast Recovery phase are:

• For each additional duplicate ACK received, the cwnd is increased by one MSS.

• This allows the sender to transmit additional data without waiting for a retransmission timeout.

• When the missing segment is acknowledged, the cwnd is set to the ssthresh value.

• The Congestion Avoidance phase is then entered.
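The Fast Retransmit and Fast Recovery steps can be combined into one small state machine. This is a sketch under simplifying assumptions: sizes are in bytes, the caller supplies the current FlightSize, and "retransmitting" just records the sequence number. The class name `RenoSender` and its methods are hypothetical.

```python
class RenoSender:
    """Tracks cwnd and ssthresh through Fast Retransmit and Fast Recovery."""

    DUP_ACK_THRESHOLD = 3

    def __init__(self, mss, cwnd, ssthresh):
        self.mss = mss
        self.cwnd = cwnd
        self.ssthresh = ssthresh
        self.dup_acks = 0
        self.in_fast_recovery = False
        self.retransmitted = []  # sequence numbers resent without waiting for a timeout

    def on_duplicate_ack(self, ack_no, flight_size):
        self.dup_acks += 1
        if self.in_fast_recovery:
            self.cwnd += self.mss  # each extra duplicate ACK inflates the window
        elif self.dup_acks == self.DUP_ACK_THRESHOLD:
            # Fast Retransmit: assume the segment starting at ack_no was lost.
            self.ssthresh = max(flight_size // 2, 2 * self.mss)
            self.retransmitted.append(ack_no)
            self.cwnd = self.ssthresh + 3 * self.mss
            self.in_fast_recovery = True

    def on_new_ack(self):
        if self.in_fast_recovery:
            self.cwnd = self.ssthresh  # deflate and continue in Congestion Avoidance
            self.in_fast_recovery = False
        self.dup_acks = 0


sender = RenoSender(mss=1000, cwnd=10000, ssthresh=64000)
for _ in range(3):
    sender.on_duplicate_ack(ack_no=5000, flight_size=10000)
print(sender.ssthresh, sender.cwnd, sender.retransmitted)
sender.on_new_ack()
print(sender.cwnd, sender.in_fast_recovery)
```

The window never drops back to one MSS in this path, which is what lets the sender skip Slow Start after an isolated loss.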

These four phases work together to achieve reliable data transmission while adapting to network conditions and congestion. The Slow Start phase helps to probe for available bandwidth gradually, the Congestion Avoidance phase allows for controlled growth, and the Fast Retransmit and Fast Recovery phases enable efficient recovery from packet loss.
