Probability Distributions Explained (11 pages)

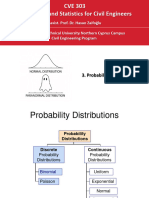

The document discusses various discrete and continuous probability distributions, including Bernoulli, Binomial, Poisson, Uniform, Exponential, and Normal distributions. It provides definitions, probability functions, expectations, variances, and examples for each distribution type. The document emphasizes the applications of these distributions in real-world scenarios, such as legal cases and sales predictions.
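The summary above notes that each distribution comes with a probability function, an expectation and a variance. As a rough companion, here is a minimal Python sketch (not taken from the document) that computes the mean and variance of the discrete cases directly from their probability mass functions, using E[X] = Σ k·p(k) and Var(X) = E[X²] − E[X]², and checks them against the standard closed forms. The helper names (`bernoulli_pmf`, `moments`, `CLOSED_FORM`, and so on) are hypothetical. The parameterizations are assumptions that may differ from the document's notation: Exponential is given by its rate λ, and Normal by its mean μ and standard deviation σ.

```python
import math

def bernoulli_pmf(k, p):
    # P(X = k) for k in {0, 1}: success with probability p
    return p if k == 1 else 1 - p

def binomial_pmf(k, n, p):
    # Number of successes in n independent Bernoulli(p) trials
    return math.comb(n, k) * p**k * (1 - p)**(n - k)

def poisson_pmf(k, lam):
    # Count of events in a fixed interval with average rate lam
    return math.exp(-lam) * lam**k / math.factorial(k)

def moments(pmf, support):
    """Mean and variance computed from the PMF: E[X] and E[X^2] - E[X]^2."""
    mean = sum(k * pmf(k) for k in support)
    second = sum(k * k * pmf(k) for k in support)
    return mean, second - mean**2

# Closed-form (mean, variance) pairs under the assumed parameterizations.
CLOSED_FORM = {
    "bernoulli": lambda p: (p, p * (1 - p)),
    "binomial": lambda n, p: (n * p, n * p * (1 - p)),
    "poisson": lambda lam: (lam, lam),
    "uniform": lambda a, b: ((a + b) / 2, (b - a) ** 2 / 12),
    "exponential": lambda lam: (1 / lam, 1 / lam**2),  # lam is the rate
    "normal": lambda mu, sigma: (mu, sigma**2),        # sigma is the std. dev.
}

if __name__ == "__main__":
    print(moments(lambda k: bernoulli_pmf(k, 0.3), [0, 1]), CLOSED_FORM["bernoulli"](0.3))
    print(moments(lambda k: binomial_pmf(k, 10, 0.4), range(11)), CLOSED_FORM["binomial"](10, 0.4))
    # The Poisson support is infinite; truncating at k < 60 leaves negligible
    # tail probability when lam = 4.
    print(moments(lambda k: poisson_pmf(k, 4.0), range(60)), CLOSED_FORM["poisson"](4.0))
```

The continuous cases (Uniform, Exponential, Normal) are listed only by their closed forms, since computing their moments numerically would require integration rather than a finite sum.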

Uploaded by oyodaanjeline. Copyright © All Rights Reserved.
