
Chapter 7

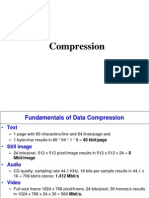

Chapter 7 discusses multimedia data compression, explaining lossless and lossy compression along with their goals and constraints. It covers methods such as Run Length Coding and Huffman Coding, emphasizing two ideas: removing redundancy from the data and exploiting the limits of human perception. It also highlights how compression reduces data volume for transmission and storage, and mentions standards such as JPEG and MPEG.
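As an illustration of the redundancy removal mentioned above, Run Length Coding can be sketched in a few lines. This is a minimal sketch, not the chapter's own implementation: the function names `rle_encode` and `rle_decode` and the (symbol, count) pair representation are assumptions chosen for the example.

```python
from itertools import groupby


def rle_encode(data: str) -> list[tuple[str, int]]:
    """Collapse each run of identical symbols into a (symbol, run_length) pair."""
    # groupby yields one group per maximal run of equal consecutive symbols.
    return [(symbol, len(list(run))) for symbol, run in groupby(data)]


def rle_decode(pairs: list[tuple[str, int]]) -> str:
    """Expand (symbol, run_length) pairs back into the original string."""
    return "".join(symbol * count for symbol, count in pairs)


if __name__ == "__main__":
    encoded = rle_encode("AAAABBBCCD")
    print(encoded)              # [('A', 4), ('B', 3), ('C', 2), ('D', 1)]
    print(rle_decode(encoded))  # AAAABBBCCD
```

Because decoding restores the input exactly, this is a lossless scheme. It only saves space when the data contains long runs; input with few repeats can grow after encoding.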

Uploaded by ENBAKOM ZAWUGA

Copyright: © All Rights Reserved

