0% found this document useful (0 votes)

48 views63 pagesChapter 2-Compression Techniques

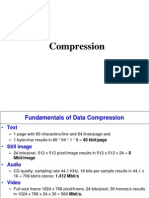

Data compression reduces the number of bits needed to represent data, which is essential for efficient storage and transmission, particularly for large files like audio and video. There are two main types of compression: lossless, which retains all original data, and lossy, which sacrifices some data for higher compression rates. Various algorithms, such as Run-Length Encoding and Huffman Coding, are used to achieve data compression by identifying and eliminating redundancy.

Uploaded by

Resika UmayanthaCopyright

© © All Rights Reserved

We take content rights seriously. If you suspect this is your content, claim it here.

Available Formats

Download as PDF, TXT or read online on Scribd

0% found this document useful (0 votes)

48 views63 pagesChapter 2-Compression Techniques

Data compression reduces the number of bits needed to represent data, which is essential for efficient storage and transmission, particularly for large files like audio and video. There are two main types of compression: lossless, which retains all original data, and lossy, which sacrifices some data for higher compression rates. Various algorithms, such as Run-Length Encoding and Huffman Coding, are used to achieve data compression by identifying and eliminating redundancy.

Uploaded by

Resika UmayanthaCopyright

© © All Rights Reserved

We take content rights seriously. If you suspect this is your content, claim it here.

Available Formats

Download as PDF, TXT or read online on Scribd