On Using Learning Automata to Model a Student's Behavior in a Tutorial-like System

Khaled Hashem¹ and B. John Oommen²

¹ School of Computer Science, Carleton University, Ottawa, Canada, K1S 5B6. khaled hashem@external.mckinsey.com

² Chancellor's Professor; Fellow: IEEE and Fellow: IAPR. School of Computer Science, Carleton University, Ottawa, Canada, K1S 5B6. Also an Adjunct Professor with Agder University College in Grimstad, Norway. oommen@scs.carleton.ca

Abstract. This paper presents a new philosophy to model the behavior of a Student in a Tutorial-like system using Learning Automata (LA). The model of the Student in our system is inferred using a higher level of LA, referred to as Meta-LA, which attempt to characterize the learning model of the Students (or Student Simulators) while the latter use the Tutorial-like system. To our knowledge, this is the first published result which attempts to infer the learning model of an LA when it is treated externally as a black box, whose outputs are the only observable quantities. Additionally, our paper represents a new class of Multi-Automata systems, where the Meta-LA communicate with the Students, also modelled using LA, in a synchronous scheme of interconnecting automata.

Keywords: Student Modelling, Tutorial-like Systems, Learning Automata.

1 Introduction

The Student is the focal point of interest in any Tutorial system. The latter is customized to maximize the learning curve for the Student. In order for the Tutorial system to achieve this, it needs to treat every student according to his¹ particular skills and abilities. The system's model for the Student permits such a customization.

The aim of this paper is to present a new philosophy to model how a Student learns while using a Tutorial-like system, one that is based on the theory of stochastic Learning Automata (LA). The LA will enable the system to determine the learning model of the Student, which, consequently, will enable the Tutorial-like system to improve the way it deals with the Student (i.e., how it presents material, teaches and evaluates) so as to customize the learning experience.

In this paper, we propose what we call a Meta-LA strategy, which can be used to examine the Student model. As the name implies, we have used LA as a learning tool/mechanism which steers the inference procedure achieved by the Meta-LA. To be more specific, we have assumed that a Student can be modelled as being one of the following types: slow learner, normal learner, or fast learner. In order for the system to determine the learning model of the Student, it monitors the consequent actions of the Student and uses the Meta-LA to unwrap the learning model.

The proposed Meta-LA scheme has been tested for numerous benchmark environments; in the simulations reported here, it inferred the correct learning model in 85% to 100% of the runs. Indeed, to our knowledge, this is the first published result which attempts to infer the learning model of an LA when it is treated externally as a black box, whose outputs are the only observable quantities. This paper is necessarily brief; more details of all the modules and experimental results are found in [4].

Using machine learning in Student modelling was the focus of a few previous studies (a more detailed review is found in [4]). Legaspi and Sison [5] modelled the tutor in ITS using reinforcement learning with the temporal difference method as the central learning procedure. Beck [3] used reinforcement learning to learn to associate superior teaching actions with certain states of the student's knowledge. Baffes and Mooney implemented ASSERT [2], which used reinforcement learning in student modelling to capture novel student errors using only correct domain knowledge. Our method is distinct from all the above.

¹ For the ease of communication, we request the permission to refer to the entities involved (i.e., the Teacher, Student, etc.) in the masculine.

H.G. Okuno and M. Ali (Eds.): IEA/AIE 2007, LNAI 4570, pp. 813-822, 2007. © Springer-Verlag Berlin Heidelberg 2007

1.1 Stochastic Learning Automaton

The stochastic automaton tries to reach a solution to a problem without any information about the optimal action. By interacting with an Environment, a stochastic automaton can be used to learn the optimal action offered by that Environment [6]. A random action is selected based on a probability vector, and then, from the observation of the Environment's response, the action probabilities are updated, and the procedure is repeated. A stochastic automaton that behaves in this way to improve its performance is called a Learning Automaton (LA).

In the first LA designs, the transition and the output functions were time invariant, and for this reason these LA were considered fixed structure stochastic automata (FSSA). Later, a class of stochastic automata known in the literature as Variable Structure Stochastic Automata (VSSA) was introduced. In the definition of a VSSA, the LA is completely defined by a set of actions (one of which is the output of the automaton), a set of inputs (which is usually the response of the Environment) and a learning algorithm, $T$. The learning algorithm [6] operates on a vector (called the Action Probability vector)

$P(t) = [p_1(t), \ldots, p_r(t)]^T$,

where $p_i(t)$ ($i = 1, \ldots, r$) is the probability that the automaton will select the action $\alpha_i$ at time $t$,

$p_i(t) = \Pr[\alpha(t) = \alpha_i], \quad i = 1, \ldots, r$,

and it satisfies $\sum_{i=1}^{r} p_i(t) = 1 \ \forall t$.

Note that the algorithm $T : [0,1]^r \times A \times B \to [0,1]^r$ is an updating scheme where $A = \{\alpha_1, \alpha_2, \ldots, \alpha_r\}$, $2 \le r < \infty$, is the set of output actions of the automaton, and $B$ is the set of responses from the Environment. Thus, the updating is such that

$P(t+1) = T(P(t), \alpha(t), \beta(t))$,

where $P(t)$ is the action probability vector, $\alpha(t)$ is the action chosen at time $t$, and $\beta(t)$ is the response it has obtained.

If the mapping $T$ is chosen in such a manner that the Markov process has absorbing states, the algorithm is referred to as an absorbing algorithm [6]. Ergodic VSSA have also been investigated [6,8]. These VSSA converge in distribution, with the asymptotic distribution being independent of the initial vector. Thus, while ergodic VSSA are suitable for non-stationary environments, automata with absorbing barriers are preferred in stationary environments.

In practice, the relatively slow rate of convergence of these algorithms constituted a limiting factor in their applicability. In order to increase their speed of convergence, the concept of discretizing the probability space was introduced [8,10]. This concept is implemented by restricting the probability of choosing an action to a finite number of values in the interval [0,1].

Pursuit and Estimator-based LA were introduced to be faster schemes, since they pursue what can be reckoned to be the current optimal action or the set of current optimal schemes [8]. The updating algorithm improves its convergence results by using the history to maintain an estimate of the probability of each action being rewarded, in what is called the reward-estimate vector.

LA have been used in systems that have incomplete knowledge about the environment in which they operate [1]. They have been used in telecommunications and telephone routing, image data compression, pattern recognition, graph partitioning, object partitioning, and vehicle path control [1].

1.2 Contributions of This Paper

This paper presents a novel approach for modelling how the Student learns in a tutoring session. We believe that successful modelling of Students will be fundamental in implementing effective Tutorial-like systems. Thus, the salient contributions of this paper (clarified extensively in [4]) are:

- The concept of Meta-LA, in Student modelling, which helps to build a higher-level learning concept that has been shown to be advantageous for customizing the learning experience of the Student in an efficient way.
- The Student Modeler, using the Meta-LA, will observe the action of the Student concerned and the characteristics of the Environment. It will use these observations to build a learning model for each Student and see whether he is a slow, normal, or fast learner.
- Unlike traditional Tutorial systems, which do not consider the psychological perspective of the Students, we model their cognitive state. This will enable the Tutorial-like system to customize its teaching strategy.

2 Intelligent Tutorial and Tutorial-like Systems

Intelligent Tutorial Systems (ITSs) are special educational software packages that involve Artificial Intelligence (AI) techniques and methods to represent the knowledge, as well as to conduct the learning interaction [7].


An ITS mainly consists of three modules: the domain model (knowledge domain), the student model, and the pedagogical model (which represents the tutor model itself). Self [9] defined these components as the tripartite architecture for an ITS: the what (domain model), the who (student model), and the how (tutoring model). More details of these differences are found in [4].

2.1 Tutorial-like Systems

Tutorial-like systems share some similarities with the well-developed field of Tutorial systems. Thus, for example, they model the Teacher, the Student, and the Domain knowledge. However, they are different from traditional Tutorial systems in the characteristics of their models, etc., as will be highlighted below.

1. Different Type of Teacher. In Tutorial systems, as they are developed today, the Teacher is assumed to have perfect information about the material to be taught. Also, built into the model of the Teacher is the knowledge of how the domain material is to be taught, and a plan of how it will communicate and interact with the Student(s). The Teacher in our Tutorial-like system possesses different features. First of all, one fundamental difference is that the Teacher is uncertain of the teaching material; he is stochastic. Secondly, the Teacher does not initially possess any knowledge about how to teach the domain subject. Rather, the Teacher himself is involved in a learning process, and he learns what teaching material has to be presented to the particular Student.
2. No Real Students. A Tutorial system is intended for the use of real-life students. Its measure of accomplishment is based on the performance of these students after using the system, and it is often quantified by comparing their progress with other students in a control group, who would use a real-life Tutor. In our Tutorial-like system, there are no real-life students who use the system. The system could be used by either:
   (a) Student Simulators, which mimic the behavior and actions of real-life students using the system.
   (b) An artificial Entity which, in itself, could be another software component that needs to learn specific domain knowledge.
3. Uncertain Course Material. Unlike the domain knowledge of traditional Tutorial systems, where the knowledge is, typically, well defined, the teaching material presented in our Tutorial-like system has some degree of uncertainty. The teaching material contains questions, each of which has a probability that indicates the certainty of whether the answer to the question is in the affirmative.
4. School of Students. Traditional Tutorial systems deal with a Teacher who teaches Students, but they do not permit the Students to interact with each other. A Tutorial-like system assumes that the Teacher is dealing with a School of Students, where each learns from the Teacher on his own and can also learn from his colleagues if he desires.

On Using Learning Automata to Model a Students Behavior

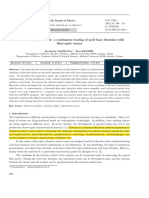

Meta-LA (Student Modeler)

817

Env. For Meta-LA

Observe

Observe

(n)

Student LA

(n)

Teaching Environment < , ,c>

Fig. 1. Modelling Using a Network of LA

3 Network Involving the LA/Meta-LA

Our Tutorial-like system incorporates multiple LA which are, indirectly, interconnected with each other. This can be perceived to be a system of interconnected automata [11]. Since such systems of interconnecting automata may be one of two models, namely, synchronous and sequential, we observe that our system represents a synchronous model. The LA in our system and their interconnections are illustrated in Figure 1.

In a traditional system of interconnected automata, the response from the Environment associated with one automaton represents the input to another automaton. However, our system has a rather unique LA interaction model. While the Student LA affects the Meta-LA, there is no direct connection between the two. The Meta-LA Environment monitors the performance of the Student LA over a period of time and, depending on that, its Environment would penalize or reward the action of the Meta-LA.

This model represents a new structure of interconnection which can be viewed as being composed of two levels: a higher-level automaton, i.e., the Meta-LA, and a lower-level automaton, which is the Student LA. The convergence of the higher-level automaton is dependent on the behavior of the lower-level automaton. Based on these differences, and additional ones listed in [4], the reader will observe the uniqueness of such an interaction of modules, as:

1. The Meta-LA has no Environment of its own.
2. The Environment for the Meta-LA consists of observations, which must be perceived as an Environment after doing some processing of the signals observed.
3. The Meta-LA has access to the Environment of the lower level, including the set of the penalty probabilities that are unknown to the lower-level LA.
4. The Meta-LA has access to the actions chosen by the lower-level LA.
5. Finally, the Meta-LA has access to the responses made by the lower-level Environment.

In conclusion, we observe that items (3)-(5) above collectively constitute the Environment for the Meta-LA. Such a modelling is unknown in the field of LA.

4 Formalization of the Student Model

To simplify issues, we assume that the learning model of the Student is one of these three types²:

1. A FSSA model, which represents a Slow Learner.
2. A VSSA model, which represents a Normal Learner.
3. A Pursuit model LA, which represents a Fast Learner.

The Student model will use three specific potential LA, each of which serves as an action for a higher-level automaton (i.e., a Meta-LA). In our present instantiation, the Meta-LA which is used to learn the best possible model for the Student Simulator is a 4-tuple, $\{\alpha, \beta, P, T\}$, where:

- $\alpha = \{\alpha_1, \alpha_2, \alpha_3\}$, in which $\alpha_1$ is the action corresponding to a FSSA model (Slow Learner), $\alpha_2$ is the action corresponding to a VSSA model (Normal Learner), and $\alpha_3$ is the action corresponding to a Pursuit model LA (Fast Learner).
- $\beta = \{0, 1\}$, in which $\beta = 0$ implies a Reward for the present action chosen, and $\beta = 1$ implies a Penalty for the present action chosen.
- $P = [p_1, p_2, p_3]^T$, where $p_i(n)$ is the current probability of the Student Simulator being represented by $\alpha_i$.
- $T$ is the probability updating rule, given as a map $T : (P, \alpha, \beta) \to P$.

Each of these will now be clarified.

1. $\beta$ is the input which the Meta-LA receives (or rather, infers). For a fixed number of queries, which we shall refer to as the Window, the Meta-LA assumes that the Student Simulator's model is $\alpha_i$. Let $\theta(t)$ be the rate of learning of the lower LA at time $t$. By inspecting the way by which the Student Simulator learns within this Window, it infers whether this current model should be rewarded or penalized. We propose to achieve this by using two thresholds, which we refer to as $\{\theta_j \mid 1 \le j \le 2\}$, where the Student is considered to be a slow learner if his learning rate is less than $\theta_1$, a fast learner if his learning rate is greater than $\theta_2$, and a normal learner if his learning rate is between $\theta_1$ and $\theta_2$.
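The two-threshold rule can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `Learner`, `classify`, and `meta_response` are hypothetical, a rate exactly equal to a threshold is treated as "normal", and the Meta-LA is assumed to be rewarded exactly when its currently assumed model matches the type implied by the thresholds (the text does not spell out this last step).

```python
from enum import Enum


class Learner(Enum):
    SLOW = 1    # action alpha_1: FSSA model
    NORMAL = 2  # action alpha_2: VSSA model
    FAST = 3    # action alpha_3: Pursuit model


def classify(rate: float, theta1: float, theta2: float) -> Learner:
    """Map an observed learning rate to a learner type using two thresholds."""
    if theta1 > theta2:
        raise ValueError("expected theta1 <= theta2")
    if rate < theta1:
        return Learner.SLOW
    if rate > theta2:
        return Learner.FAST
    return Learner.NORMAL  # assumption: boundary values count as normal


def meta_response(assumed: Learner, rate: float, theta1: float, theta2: float) -> int:
    """Return beta for the Meta-LA: 0 (Reward) if the assumed model matches,
    1 (Penalty) otherwise. The matching rule itself is an assumption."""
    return 0 if classify(rate, theta1, theta2) is assumed else 1
```

For instance, with hypothetical thresholds 0.3 and 0.6, a rate of 0.7 would be classified as a fast learner, so a Meta-LA that had assumed the Pursuit model would receive a Reward.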

² Additional details of such a modelling phenomenon are included in [4], and omitted here in the interest of brevity. Also, extending this to consider a wider spectrum of learning models is rather straightforward.


2. $P$ is the action probability vector which contains the probabilities that the Meta-LA assigns to each of its actions. At each instant $t$, the Meta-LA randomly selects an action $\alpha(t) = \alpha_i$. The probability that the automaton selects action $\alpha_i$ at time $t$ is the action probability $p_i(t) = \Pr[\alpha(t) = \alpha_i]$, where $\sum_{i=1}^{r} p_i(t) = 1 \ \forall t$, $i = 1, 2, 3$. Initially, the Meta-LA will have an equal probability for each of the three learning models, as $[1/3, 1/3, 1/3]^T$. By observing the sequence of $\{\theta(t)\}$, the Meta-LA updates $P(t)$ as per the function $T$, described presently. One of the action probabilities, $p_j$, will, hopefully, converge to a value as close to unity as we want.
3. $T$ is the updating algorithm, or the reinforcement scheme, used to update the Meta-LA's action probability vector, and is represented by $P(t+1) = T[P(t), \alpha(t), \beta(t)]$.
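As an illustration of one possible updating rule $T$, the sketch below implements a discretized reward-inaction scheme, which is the family the experimental section names for the Meta-LA (with resolution parameter N = 40). It is a minimal sketch rather than the authors' implementation: the class name is hypothetical, and the step size of 1/(rN) is an assumption. Probabilities are held as integer step counts so that they always sum exactly to one.

```python
import random


class DiscretizedRewardInaction:
    """Sketch of a discretized reward-inaction automaton over r actions.

    Probabilities are stored as integer multiples of delta = 1 / (r * N);
    this step size is an assumption made for the illustration.
    """

    def __init__(self, r: int = 3, resolution: int = 40, seed=None):
        self.r = r
        self.total = r * resolution      # number of delta-steps that make up 1.0
        self.steps = [resolution] * r    # uniform start: [1/r, ..., 1/r]
        self.rng = random.Random(seed)

    @property
    def probabilities(self):
        """Current action probability vector P(t)."""
        return [s / self.total for s in self.steps]

    def choose(self) -> int:
        """Sample an action index according to P(t)."""
        return self.rng.choices(range(self.r), weights=self.steps)[0]

    def update(self, action: int, beta: int) -> None:
        """Apply P(t+1) = T[P(t), alpha(t), beta(t)]."""
        if beta != 0:
            return  # Penalty: "inaction", P is left unchanged
        for j in range(self.r):
            if j != action and self.steps[j] > 0:
                self.steps[j] -= 1       # take one delta-step from action j ...
                self.steps[action] += 1  # ... and give it to the rewarded action
```

Because a reward can only move probability mass towards the chosen action, a unit vector is an absorbing state of this rule, which is consistent with one action probability converging towards unity.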

5 Experimental Results

This section presents the experimental results obtained by testing the prototype implementation of the Meta-LA. The simulations were performed for two different types of Environments: two 4-action Environments and two benchmark 10-action Environments. These Environments represent the teaching problem, which contains the uncertain course material of the Domain model associated with the Tutorial-like system. In all the tests performed, an algorithm was considered to have converged if the probability of choosing an action was greater than or equal to a threshold $T$ ($0 < T \le 1$). In our simulation, $T$ was set to be 0.99 and the number of experiments ($NE$) was set to be 75.

The Meta-LA has been implemented as a Discretized LA, the $DL_{RI}$ scheme, with a resolution parameter $N = 40$. The Student Simulator was implemented to mimic three typical types of Students as follows:

- Slow Learner: The Student Simulator used a FSSA, in the form of a Tsetlin $L_{N,2N}$ automaton, which had two states for each action.
- Normal Learner: The Student Simulator used a VSSA, in the form of a $L_{RI}$, with $\lambda = 0.005$.
- Fast Learner: The Student Simulator utilized the Pursuit $PL_{RI}$, with $\lambda = 0.005$.

The rate of learning associated with the Student is a quantity that is difficult to quantify. For that, the Meta-LA Environment calculates the loss-function of the Student Simulator LA. For a Window of $n$ choice-response feedback cycles of the Student Simulator, the loss-function is defined by

$\frac{1}{n} \sum_{i} n_i c_i$,

where $n_i$ is the number of instances an action $\alpha_i$ is selected inside the Window, and $c_i$ is the penalty probability associated with action $\alpha_i$ of the lower-level LA.

We consider the rate of learning for the Student to be a function of the loss-function and time, as illustrated in Figure 2. This reflects the fact that the loss function for any learning Student, typically, decreases with time (for all types of learners) as he learns more, and as his knowledge improves with time.
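The loss-function over one Window can be computed directly from the list of actions the Student Simulator chose and the penalty probabilities of the lower-level Environment. The helper below is a minimal sketch; the function name `window_loss` and the zero-based action indices are choices made for the illustration.

```python
from collections import Counter
from typing import Sequence


def window_loss(actions: Sequence[int], penalty_probs: Sequence[float]) -> float:
    """Return (1/n) * sum_i n_i * c_i for the actions chosen inside one Window.

    actions       -- zero-based indices of the actions selected in the Window
    penalty_probs -- c_i, the penalty probability of each action
    """
    n = len(actions)
    if n == 0:
        raise ValueError("the Window must contain at least one action")
    counts = Counter(actions)  # n_i: how often action i was selected
    return sum(n_i * penalty_probs[i] for i, n_i in counts.items()) / n
```

As a worked example, assume penalty probabilities of 0.3, 0.5, 0.7 and 0.8 (hypothetical values, taken as one minus a reward probability). A Window in which the first action is chosen three times and the second once gives (3 × 0.3 + 1 × 0.5) / 4 = 0.35, and a Student who has settled on the first action alone would score 0.3.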


Fig. 2. Loss Function and

(Plot of the Loss Function against Time, with one curve each for the Fast Learner, the Normal Learner, and the Slow Learner.)

5.1 Environments with 4-Actions and 10-Actions

The 4-action Environment represents a Multiple-Choice question with 4 options. The Student needs to learn the content of this question, and conclude that the answer for this question is the action which possesses the minimum penalty probability. Based on the performance of the Student, the Meta-LA is supposed to learn the learning model of the Student. The results of this simulation are presented in Table 1.
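To make the setting concrete, the following sketch simulates a multiple-choice question as a stationary random Environment and lets a linear reward-inaction ($L_{RI}$) automaton, standing in for a Normal Learner, interact with it. It is a sketch under assumptions rather than the authors' code: the class and function names are hypothetical, and the update shown is the textbook $L_{RI}$ rule, in which a Reward moves a fraction $\lambda$ of the probability mass to the chosen action and a Penalty changes nothing.

```python
import random


class Environment:
    """Stationary random Environment: action i is rewarded with probability d[i]."""

    def __init__(self, reward_probs, seed=None):
        self.reward_probs = list(reward_probs)
        self.rng = random.Random(seed)

    def respond(self, action: int) -> int:
        """Return beta = 0 (Reward) or beta = 1 (Penalty) for the chosen action."""
        return 0 if self.rng.random() < self.reward_probs[action] else 1


class LinearRewardInaction:
    """Continuous L_RI automaton, used here as a 'Normal Learner' stand-in."""

    def __init__(self, r: int, lam: float = 0.005, seed=None):
        self.p = [1.0 / r] * r  # start from the uniform distribution
        self.lam = lam
        self.rng = random.Random(seed)

    def choose(self) -> int:
        """Sample an action index according to the current probabilities."""
        return self.rng.choices(range(len(self.p)), weights=self.p)[0]

    def update(self, action: int, beta: int) -> None:
        if beta != 0:
            return  # inaction on Penalty
        # On Reward, shrink every probability by the factor (1 - lam) ...
        self.p = [(1.0 - self.lam) * p_j for p_j in self.p]
        # ... and give the freed mass lam to the rewarded action.
        self.p[action] += self.lam


def run(student, env, iterations: int):
    """Let the student interact with env; return the list of chosen actions."""
    chosen = []
    for _ in range(iterations):
        action = student.choose()
        student.update(action, env.respond(action))
        chosen.append(action)
    return chosen
```

A call such as `run(LinearRewardInaction(4), Environment([0.7, 0.5, 0.3, 0.2]), 1000)` uses the reward probabilities listed for the first 4-action Environment with Table 1; the returned action sequence is the kind of observable output from which the Meta-LA's Environment computes its statistics.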

Table 1. Convergence of Meta-LA for Different Students' Learning Models in Four-Action Environments

| Env. | $\theta_1$ | $\theta_2$ | $PL_{RI}$: # of Iter. | $PL_{RI}$: % correct convergence | $L_{RI}$: # of Iter. | $L_{RI}$: % correct convergence | Tsetlin: # of Iter. | Tsetlin: % correct convergence |
|---|---|---|---|---|---|---|---|---|
| $E_A$ | 0.50-0.31 | 0.60-0.35 | 607 | 100% | 1037 | 87% | 1273 | 91% |
| $E_B$ | 0.28-0.17 | 0.37-0.24 | 790 | 97% | 1306 | 89% | 1489 | 85% |

Reward probabilities are:
$E_A$: 0.7 0.5 0.3 0.2
$E_B$: 0.1 0.45 0.84 0.76

To be conservative, the simulation assumes that when the Student starts using the system, the results during a transient learning phase are misleading and inaccurate. Therefore, the first 200 iterations are neglected by the Meta-LA.

The results obtained were quite remarkable. Consider the case of $E_A$, where the best action was $\alpha_1$. While an FSSA converged in 1273 iterations³, the $L_{RI}$ converged in 1037 iterations and the Pursuit $PL_{RI}$ converged in 607 iterations. By using the values of $\theta_1$ and $\theta_2$ as specified in Table 1, the Meta-LA was able to characterize the true learning capabilities of the Student 100% of the time when the lower-level LA used a Pursuit $PL_{RI}$. The lowest accuracy of the Meta-LA for Environment $E_A$ was 87%. The analogous results for $E_B$ were 97% (at its best accuracy) and 85% for the Slow Learner. The power of our scheme should be obvious!

On the other hand, the 10-action Environment represents a Multiple-Choice question with 10 options. Table 2 shows the results of this simulation.

³ The figures include the 200 iterations involved in the transient phase.

Table 2. Convergence of Meta-LA for Different Students' Learning Models in Ten-Action Environments

| Env. | $\theta_1$ | $\theta_2$ | $PL_{RI}$: # of Iter. | $PL_{RI}$: % correct convergence | $L_{RI}$: # of Iter. | $L_{RI}$: % correct convergence | Tsetlin: # of Iter. | Tsetlin: % correct convergence |
|---|---|---|---|---|---|---|---|---|
| $E_A$ | 0.55-0.31 | 0.65-0.40 | 668 | 95% | 748 | 93% | 1369 | 96% |
| $E_B$ | 0.41-0.17 | 0.51-0.26 | 616 | 100% | 970 | 85% | 1140 | 96% |

Reward probabilities are:
$E_A$: 0.7 0.5 0.3 0.2 0.4 0.5 0.4 0.3 0.5 0.2
$E_B$: 0.1 0.45 0.84 0.76 0.2 0.4 0.6 0.7 0.5 0.3

Similar to the 4-action Environments, the results obtained for the 10-action Environments were encouraging. For example, we consider the case of $E_A$, where the best action was $\alpha_1$. While an FSSA converged in 1369 iterations, the $L_{RI}$ converged in 748 iterations and the Pursuit $PL_{RI}$ converged in 668 iterations. By using the values of $\theta_1$ and $\theta_2$ as specified in Table 2, the Meta-LA was able to determine the true learning capabilities of the Student 95% of the time when the lower-level LA used a Pursuit $PL_{RI}$. The lowest accuracy of the Meta-LA for Environment $E_A$ was 93%. By comparison, for $E_B$, the best accuracy was 100% for the Fast Learner and the worst accuracy was 85% for the Slow Learner. The details of more simulation results can be found in [4], and are omitted here in the interest of brevity.

We believe that the worst-case error of 15% (for the inexact inference) is within a reasonable limit. Indeed, in a typical learning environment, the Teacher may, incorrectly, assume the wrong learning model for the Student. Thus, we believe that our Meta-LA learning paradigm is remarkable, considering that these are the first reported results for inferring the nature of an LA if it is modelled as a black box.

6 Conclusion and Future Work

This paper presented a novel strategy for a Tutorial-like system inferring the model of a Student. The Student Modeler itself uses an LA as an internal mechanism to determine the learning model of the Student, so that it could be used in the Tutorial-like system to customize the learning experience for each Student. To achieve this, the Student Modeler uses a higher-level automaton, a so-called Meta-LA, which observes the Student Simulator LA's actions and the teaching Environment, and attempts to characterize the learning model of the Student (or Student Simulator) while the latter uses the Tutorial-like system.

From a conceptual perspective, the paper presents a new approach for using interconnecting automata, where the behavioral sequence of one automaton affects the other automaton. From the simulation results, we can conclude that this approach is a feasible and valid mechanism applicable for implementing a Student modelling process. The results show that the Student Modeler was successful in determining the Student learning model in a high percentage of the cases, sometimes with an accuracy of 100%.

We believe that our approach can also be ported for use in traditional Tutorial Systems. By finding a way to measure the rate of learning for real-life Students, the Student Modeler should be able to approximate the learning model for these Students. This would decrease the complexity of implementing Student models in these systems too. The incorporation of all these concepts into a Tutorial-like system is the topic of research which we are currently pursuing.

References

1. Agache, M., Oommen, B.J.: Generalized pursuit learning schemes: New families of continuous and discretized learning automata. IEEE Trans. Syst. Man. Cybern. 32(6), 738-749 (2002)
2. Baffes, P., Mooney, R.: Refinement-based student modeling and automated bug library construction. J. of AI in Education 7(1), 75-116 (1996)
3. Beck, J.: Learning to teach with a reinforcement learning agent. In: Proceedings of The Fifteenth National Conference on AI/IAAI, p. 1185, Madison, WI (1998)
4. Hashem, K., Oommen, B.J.: Modeling a student's behavior in a tutorial-like system using learning automata. Unabridged version of this paper
5. Legaspi, R.S., Sison, R.C.: Modeling the tutor using reinforcement learning. In: Proceedings of the Philippine Comp. Sc. Congress (PCSC), pp. 194-196 (2000)
6. Narendra, K.S., Thathachar, M.A.L.: Learning Automata: An Introduction. Prentice-Hall, New Jersey (1989)
7. Omar, N., Leite, A.S.: The learning process mediated by ITS and conceptual learning. In: Int. Conference On Engineering Education, Rio de Janeiro (1998)
8. Oommen, B.J., Agache, M.: Continuous and discretized pursuit learning schemes: Various algorithms and their comparison. IEEE Trans. Syst. Man. Cybern. SMC 31(B), 277-287 (2001)
9. Self, J.: The defining characteristics of intelligent tutoring systems research: ITSs care, precisely. International J. of AI in Education 10, 350-364 (1999)
10. Thathachar, M.A.L., Oommen, B.J.: Discretized reward-inaction learning automata. Journal of Cybernetics and Information Science 24-29, Spring (1979)
11. Thathachar, M.A.L., Sastry, P.S.: Varieties of learning automata: An overview. IEEE Trans. Syst. Man. Cybern. 32(6), 711-722 (2002)
