A New Two-Phase Sampling Algorithm For Discovering Association Rules

Uploaded by anand_gsoft3603


Data mining techniques are widely used in many applications. Data mining extracts novel and useful knowledge from large repositories of data and has become an effective means of analysis and decision-making in corporations. Sharing data for mining can bring many advantages for research and business collaboration, and data mining is becoming an increasingly important tool for transforming data into information.

The volume of electronically accessible data in warehouses and on the internet is growing rapidly, so the scalability of mining is a major concern. Classical mining and analysis algorithms require one or more computationally intensive passes over the entire database, which can take hours or even days to execute, and the problem will become worse in the future. Using a sample of the data as a synopsis is a popular technique for avoiding this problem, because it scales well as the data grow.

Association rule mining is a popular and well-researched data mining method for discovering relations between variables in a large database. The information can be used as the basis for decisions about marketing activities such as market basket analysis and product placement.

This project uses the Apriori, SRS (Simple Random Sampling), and FAST (Finding Associations from Sampled Transactions) algorithms to generate and discover association rules in a large database. By applying all three algorithms to a large database, the user can determine which one is best at finding the strong and weak rules of the dataset, and can compare running time and accuracy to identify the most efficient way of discovering association rules.

HARDWARE CONFIGURATION:

Processor : Pentium IV

Processor Speed : 1.7 GHz

Memory (RAM) : 256 MB

Hard Disk : 10 GB

Floppy Drive : 3.5-inch 1.44 MB Drive

Monitor : Samsung Color Monitor

Keyboard : 104 keys Intel Keyboard

Mouse : Intel Optical Mouse

SOFTWARE CONFIGURATION

Operating System : Windows XP

Front End Tool : Microsoft Visual Basic .NET 2008

Back End Tool : Microsoft SQL Server 2000

EXISTING SYSTEM: A study of the existing system revealed its limitations and so paved the way for the proposed system. Finding relationships between variables in a large database is not easy.

LIMITATION OF EXISTING SYSTEM:

- Limited amount of memory
- Needs a complete list of the database
- Data may be scattered and poorly accessible
- Requires many database scans
- Expensive
- Lossy compressed synopsis (sketch) of data
- Scalability of the mining algorithm is a major concern

PROPOSED SYSTEM: The proposed system is based on the recognized need to improve the existing system and aims to overcome its drawbacks. An important aspect of the new system is that it should be easy to change: the user should be able to make changes at any time without difficulty. The proposed system discovers association rules using the Apriori, Simple Random Sampling, FAST, and EASE algorithms. It is developed with Visual Basic .NET as the front end and MS SQL Server as the back end.

FEATURES OF PROPOSED SYSTEM:

- Uses the large itemset property
- Saves memory space
- Easily implemented
- Reduced costs
- Reduced field time
- Increased accuracy
- Provides security
- Excellent user friendliness
- Simple
- Errors can be easily measured

Modules Description : This project is based on the FAST, EASE, Apriori, and Simple Random Sampling algorithms for discovering association rules in a large database.

Apriori Algorithm : Apriori is a classic algorithm for learning association rules. It is designed to operate on databases containing transactions.
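As an illustration of how Apriori works on a transaction database, here is a minimal Python sketch. It is not the project's Visual Basic .NET code. The function names `apriori` and `rules`, the thresholds, and the reading of "strong" as "confidence at or above a chosen threshold" (and "weak" as below it) are assumptions made for this example.

```python
from itertools import combinations


def apriori(transactions, min_support):
    """Return {itemset: support} for every itemset with support >= min_support."""
    baskets = [frozenset(t) for t in transactions]
    n = len(baskets)

    def support(itemset):
        # Fraction of transactions that contain every item of the itemset.
        return sum(itemset <= b for b in baskets) / n

    # Level 1 candidates: every single item that occurs in the data.
    current = {frozenset([item]) for b in baskets for item in b}
    frequent = {}
    k = 1
    while current:
        level = {c: support(c) for c in current}
        level = {c: s for c, s in level.items() if s >= min_support}
        frequent.update(level)
        k += 1
        # Join step: merge frequent (k-1)-itemsets into k-item candidates.
        candidates = {a | b for a in level for b in level if len(a | b) == k}
        # Prune step: keep a candidate only if all its (k-1)-subsets are frequent.
        current = {c for c in candidates
                   if all(frozenset(s) in level for s in combinations(c, k - 1))}
    return frequent


def rules(frequent, min_confidence):
    """Split rules X -> Y into (strong, weak) lists by confidence (assumed criterion)."""
    strong, weak = [], []
    for itemset, sup in frequent.items():
        for r in range(1, len(itemset)):
            for lhs in combinations(itemset, r):
                lhs = frozenset(lhs)
                # confidence(X -> Y) = support(X and Y) / support(X)
                conf = sup / frequent[lhs]
                rule = (set(lhs), set(itemset - lhs), conf)
                (strong if conf >= min_confidence else weak).append(rule)
    return strong, weak
```

The prune step is what keeps the number of database passes and candidates manageable: a candidate is counted only when all of its smaller subsets were already found to be frequent.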

Simple Random Sampling : Simple Random Sampling is considered separately. It draws transactions from the database at random and checks their support and confidence in order to find the best rule. Simple Random Sampling can make sampling a viable means of attaining both high performance and acceptably accurate results.
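The sampling step can be sketched in Python as follows. This is only an illustrative sketch: the helper names `simple_random_sample` and `item_supports` and the `seed` parameter are assumptions, not part of the project.

```python
import random


def simple_random_sample(transactions, sample_size, seed=None):
    """Draw sample_size transactions uniformly at random, without replacement."""
    rng = random.Random(seed)
    return rng.sample(transactions, sample_size)


def item_supports(transactions):
    """Return {item: fraction of transactions that contain the item}."""
    n = len(transactions)
    counts = {}
    for t in transactions:
        for item in set(t):  # count each item at most once per transaction
            counts[item] = counts.get(item, 0) + 1
    return {item: c / n for item, c in counts.items()}
```

Calling `item_supports` on the sample and on the full database gives a rough picture of how much accuracy the sample gives up in exchange for the shorter running time.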

FAST Algorithm : FAST (Finding Associations from Sampled Transactions) is a refined sampling-based mining algorithm that is distinguished from prior algorithms by its novel two-phase approach to sample collection. In Phase I, a large sample is collected to quickly and accurately estimate the support of each item in the database. In Phase II, a small final sample is obtained by excluding outlier transactions in such a manner that the support of each item in the final sample is as close as possible to the estimated support of the item in the entire database. Indeed, our numerical experiments indicate that, for any fixed computing budget, FAST identifies more frequent itemsets and fewer false itemsets than other sampling-based algorithms. FAST can identify most frequent itemsets in a database at an overall cost that is much lower than that of classical algorithms.

In this project, A New Two-Phase Sampling Algorithm for Discovering Association Rules, the user can compare the running time and the variation in results between the algorithms. First, the Apriori algorithm is applied to a large dataset to compute support and confidence and so find the strong and weak rules. Next, the dataset is sampled at random and the strong and weak rules are found from the support and confidence of the sample. Finally, the FAST algorithm is applied to the large dataset to find the strong and weak rules in the same way. By applying the three algorithms, the user can measure the time and accuracy of each and identify the best algorithm from the time difference.
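The two phases described above can be sketched in Python as follows. This is a deliberately simplified illustration, not the published FAST procedure or the project's code: it assumes an L1 distance over 1-itemset supports and removes one outlier at a time by scanning the whole sample. The name `fast_sample` and its parameters are hypothetical.

```python
import random
from collections import Counter


def fast_sample(database, phase1_size, final_size, seed=None):
    """Return (final_sample, estimated_item_supports) using two-phase trimming."""
    if not 1 <= final_size <= phase1_size <= len(database):
        raise ValueError("need 1 <= final_size <= phase1_size <= len(database)")
    rng = random.Random(seed)

    # Phase I: a large simple random sample estimates each item's support.
    s0 = [frozenset(t) for t in rng.sample(database, phase1_size)]
    counts = Counter(item for t in s0 for item in t)
    target = {item: c / phase1_size for item, c in counts.items()}

    # Phase II: repeatedly drop the "outlier" transaction, i.e. the one whose
    # removal leaves the item supports closest to the Phase I estimates.
    sample = list(s0)
    while len(sample) > final_size:
        n_after = len(sample) - 1

        def distance_without(t):
            # L1 distance between the supports after removing t and the targets.
            return sum(abs((counts[item] - (item in t)) / n_after - s)
                       for item, s in target.items())

        outlier = min(range(len(sample)),
                      key=lambda i: distance_without(sample[i]))
        counts.subtract(sample.pop(outlier))
    return sample, target
```

The final sample can then be passed to any frequent-itemset miner. Scanning every transaction at each removal is quadratic in the sample size, so this form is suitable only for small illustrations.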

EASE Algorithm : In this paper we introduce a novel data-reduction method, called EASE (Epsilon Approximation: Sampling Enabled), that is especially designed for categorical count data. This algorithm is an outgrowth of earlier work by Chen et al. on the FAST data-reduction method. Both EASE and FAST start with a relatively large simple random sample of transactions and deterministically trim the sample to create a final subsample whose "distance" from the complete database is as small as possible. For reasons of computational efficiency, both algorithms treat a subsample as "close" to the original database if the high-level aggregates of the subsample, normalized by the total number of data points, are "close" to the normalized aggregates in the database. These normalized aggregates typically correspond to 1-itemset or 2-itemset supports in the association-rule setting or, in the setting of a contingency table, to relative marginal or cell frequencies.
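The closeness criterion described above can be made concrete with a small Python sketch. It covers only the comparison of normalized aggregates, not the EASE trimming procedure itself. The function names and the use of the maximum absolute deviation as the "distance" are assumptions chosen for illustration.

```python
from collections import Counter
from itertools import combinations


def normalized_aggregates(transactions, max_size=2):
    """Return {itemset tuple: support} for all itemsets of up to max_size items."""
    n = len(transactions)
    counts = Counter()
    for t in transactions:
        items = sorted(set(t))  # sort so each itemset has one canonical tuple
        for k in range(1, max_size + 1):
            counts.update(combinations(items, k))
    # Normalize the raw counts by the total number of data points.
    return {itemset: c / n for itemset, c in counts.items()}


def max_deviation(subsample, database, max_size=2):
    """Largest absolute gap between subsample and database supports."""
    sub = normalized_aggregates(subsample, max_size)
    full = normalized_aggregates(database, max_size)
    return max(abs(sub.get(itemset, 0.0) - s) for itemset, s in full.items())
```

A value near zero means that, as far as 1-itemset and 2-itemset supports are concerned, the subsample looks like the full database.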

Apply EASE Algorithm Highlight with Blue and Red Color

TABLE NAME : Dataset_master | Primary Key : DS_ID

| COLUMN NAME | DATATYPE | DESCRIPTION |
|---|---|---|
| DS_ID | Numeric | Dataset Identification |
| DS_TRANS | Text | Dataset Transaction data |

TABLE NAME : Result_analysis | Primary Key : Tran_no

| COLUMN NAME | DATATYPE | DESCRIPTION |
|---|---|---|
| TRAN_NO | Numeric | Transaction Number |
| TYPE | Text | Transaction Type |
| SNO | Numeric | Serial Number |
| STARTED_TIME | Datetime | Started Time |
| ELAPSED_TIME | Datetime | Elapsed time |
| RULES | Text | Rules |
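For readers who want to experiment with the two tables, the sketch below recreates them with Python's built-in `sqlite3` module. SQLite is used here only as a stand-in for the project's Microsoft SQL Server back end. The type mapping (Datetime stored as text) and the helper name `create_database` are assumptions.

```python
import sqlite3

# Table and column names follow the table definitions in this document.
# SQLite has no dedicated datetime type, so timestamps are stored as text.
SCHEMA = """
CREATE TABLE Dataset_master (
    DS_ID    NUMERIC PRIMARY KEY,
    DS_TRANS TEXT
);
CREATE TABLE Result_analysis (
    TRAN_NO      NUMERIC PRIMARY KEY,
    "TYPE"       TEXT,
    SNO          NUMERIC,
    STARTED_TIME TEXT,
    ELAPSED_TIME TEXT,
    RULES        TEXT
);
"""


def create_database(path=":memory:"):
    """Open (or create) a SQLite database and create both tables."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn
```

Each run of an algorithm would add one row to `Result_analysis`, so that the started and elapsed times of the runs can be compared afterwards.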

APRIORI

FINDING RULES

SIMPLE RANDOM SAMPLE

FINDING RULES

FAST TESTING

FINDING RULES

APPLY EASE ALGORITHM

RESULT ANALYSIS

You might also like

- Implementation of An Efficient Algorithm: 2. Related WorksDocument5 pagesImplementation of An Efficient Algorithm: 2. Related WorksJournal of Computer ApplicationsNo ratings yet

- Data Mining For Biological Data Analysis: Glover Eric Leo Cimi Smith CalvinDocument8 pagesData Mining For Biological Data Analysis: Glover Eric Leo Cimi Smith CalvinEric GloverNo ratings yet

- Image Content With Double Hashing Techniques: ISSN No. 2278-3091Document4 pagesImage Content With Double Hashing Techniques: ISSN No. 2278-3091WARSE JournalsNo ratings yet

- LSJ1512 - Progressive Duplicate DetectionDocument5 pagesLSJ1512 - Progressive Duplicate DetectionSwetha PattipakaNo ratings yet

- Data Mining PPT 7Document14 pagesData Mining PPT 7RUSHIKESH SAWANTNo ratings yet

- Performance Study of Association Rule Mining Algorithms For Dyeing Processing SystemDocument10 pagesPerformance Study of Association Rule Mining Algorithms For Dyeing Processing SystemiisteNo ratings yet

- Scientific Writing Parallel Computing V2Document15 pagesScientific Writing Parallel Computing V2VD MirelaNo ratings yet

- Incremental Association Rule Mining Using Promising Frequent Itemset AlgorithmDocument5 pagesIncremental Association Rule Mining Using Promising Frequent Itemset AlgorithmAmaranatha Reddy PNo ratings yet

- (IJCST-V4I2P44) :dr. K.KavithaDocument7 pages(IJCST-V4I2P44) :dr. K.KavithaEighthSenseGroupNo ratings yet

- AbstractsDocument3 pagesAbstractsManju ScmNo ratings yet

- Efficient Parallel Data Mining With The Apriori Algorithm On FpgasDocument16 pagesEfficient Parallel Data Mining With The Apriori Algorithm On FpgasKumara PrasannaNo ratings yet

- Efficient Algorithm For Mining Frequent Patterns Java ProjectDocument38 pagesEfficient Algorithm For Mining Frequent Patterns Java ProjectKavya SreeNo ratings yet

- Research Paper Apriori AlgorithmDocument7 pagesResearch Paper Apriori Algorithmxhzscbbkf100% (1)

- Self Learning Real Time Expert SystemDocument12 pagesSelf Learning Real Time Expert SystemCS & ITNo ratings yet

- Online Mining for Association Rules and Collective Anomalies in Data StreamsDocument10 pagesOnline Mining for Association Rules and Collective Anomalies in Data Streamsjaskeerat singhNo ratings yet

- Analysis of Time Series Rule Extraction Techniques: Hima Suresh, Dr. Kumudha RaimondDocument6 pagesAnalysis of Time Series Rule Extraction Techniques: Hima Suresh, Dr. Kumudha RaimondInternational Organization of Scientific Research (IOSR)No ratings yet

- New Algorithms for Fast Discovery of Association Rules Using Clustering and Single Database ScanDocument24 pagesNew Algorithms for Fast Discovery of Association Rules Using Clustering and Single Database ScanMade TokeNo ratings yet

- Data Mining Nov10Document2 pagesData Mining Nov10Harry Johal100% (1)

- Analysis and Implementation of FP & Q-FP Tree With Minimum CPU Utilization in Association Rule MiningDocument6 pagesAnalysis and Implementation of FP & Q-FP Tree With Minimum CPU Utilization in Association Rule MiningWARSE JournalsNo ratings yet

- 49 1530872658 - 06-07-2018 PDFDocument6 pages49 1530872658 - 06-07-2018 PDFrahul sharmaNo ratings yet

- Mining of Frequent Item With BSW Chunking: Pratik S. Chopade Prof. Priyanka MoreDocument4 pagesMining of Frequent Item With BSW Chunking: Pratik S. Chopade Prof. Priyanka MoreEditor IJRITCCNo ratings yet

- Compusoft, 3 (10), 1108-115 PDFDocument8 pagesCompusoft, 3 (10), 1108-115 PDFIjact EditorNo ratings yet

- Anomalous Topic Discovery in High Dimensional Discrete DataDocument4 pagesAnomalous Topic Discovery in High Dimensional Discrete DataBrightworld ProjectsNo ratings yet

- Efficient Data-Reduction Methods For On-Line Association Rule DiscoveryDocument19 pagesEfficient Data-Reduction Methods For On-Line Association Rule DiscoveryAsir DavidNo ratings yet

- A Rapid Hybird Clustring Algorithm For A Large Volumes of HighDocument77 pagesA Rapid Hybird Clustring Algorithm For A Large Volumes of HighRenowntechnologies VisakhapatnamNo ratings yet

- Apriori Based Novel Frequent Itemset Mining Mechanism: Issn NoDocument8 pagesApriori Based Novel Frequent Itemset Mining Mechanism: Issn NoWARSE JournalsNo ratings yet

- Novel and Efficient Approach For Duplicate Record DetectionDocument5 pagesNovel and Efficient Approach For Duplicate Record DetectionIJAERS JOURNALNo ratings yet

- An Optimized Distributed Association Rule Mining Algorithm in Parallel and Distributed Data Mining With XML Data For Improved Response TimeDocument14 pagesAn Optimized Distributed Association Rule Mining Algorithm in Parallel and Distributed Data Mining With XML Data For Improved Response TimeAshish PatelNo ratings yet

- Document-Oriented Database: This Database Is Known As A, It Is ADocument53 pagesDocument-Oriented Database: This Database Is Known As A, It Is Akanishka saxenaNo ratings yet

- Advanced Eclat Algorithm For Frequent Itemsets GenerationDocument19 pagesAdvanced Eclat Algorithm For Frequent Itemsets GenerationSabaniNo ratings yet

- Dynamic Oracle Performance Analytics: Using Normalized Metrics to Improve Database SpeedFrom EverandDynamic Oracle Performance Analytics: Using Normalized Metrics to Improve Database SpeedNo ratings yet

- Knowledge-Based Interactive Postmining of Association Rules Using OntologiesDocument3 pagesKnowledge-Based Interactive Postmining of Association Rules Using OntologiesMuthumanikandan HariramanNo ratings yet

- Unit 1 - 1Document3 pagesUnit 1 - 1Vijay ManeNo ratings yet

- Feature Subset Selection With Fast Algorithm ImplementationDocument5 pagesFeature Subset Selection With Fast Algorithm ImplementationseventhsensegroupNo ratings yet

- AbstracttDocument5 pagesAbstracttAlfred JeromeNo ratings yet

- Huffman CompressionDocument37 pagesHuffman CompressionMaestroanonNo ratings yet

- 25836647Document8 pages25836647Hoàng Duy ĐỗNo ratings yet

- Data Mining ReportDocument15 pagesData Mining ReportKrishna KiranNo ratings yet

- 8.research Plan-N.M MISHRADocument3 pages8.research Plan-N.M MISHRAnmmishra77No ratings yet

- ResearchDocument2 pagesResearchpostscriptNo ratings yet

- Efficient Transaction Reduction in Actionable Pattern Mining For High Voluminous Datasets Based On Bitmap and Class LabelsDocument8 pagesEfficient Transaction Reduction in Actionable Pattern Mining For High Voluminous Datasets Based On Bitmap and Class LabelsNinad SamelNo ratings yet

- Complexity Problems Handled by Big Data Prepared by Shreeya SharmaDocument9 pagesComplexity Problems Handled by Big Data Prepared by Shreeya SharmaPrincy SharmaNo ratings yet

- INFO408 DatabaseDocument6 pagesINFO408 DatabaseTanaka MatendNo ratings yet

- Anomaly Detection in LTE KPI With Machine LaerningDocument15 pagesAnomaly Detection in LTE KPI With Machine LaerningsatyajitNo ratings yet

- Logica Fuzzy y Redes NeuronalesDocument10 pagesLogica Fuzzy y Redes Neuronalesmivec32No ratings yet

- Data Mining and Database Systems: Where Is The Intersection?Document5 pagesData Mining and Database Systems: Where Is The Intersection?Juan KardNo ratings yet

- A Novel Datamining Based Approach For Remote Intrusion DetectionDocument6 pagesA Novel Datamining Based Approach For Remote Intrusion Detectionsurendiran123No ratings yet

- 1999 Ripple JoinDocument12 pages1999 Ripple JoinMacedo MaiaNo ratings yet

- 97 PPTDocument27 pages97 PPTRachel GraceNo ratings yet

- Data Mining and Database Systems Where Is The IntersectionDocument5 pagesData Mining and Database Systems Where Is The Intersectionjorge051289No ratings yet

- Data Mining Lab ReportDocument6 pagesData Mining Lab ReportRedowan Mahmud RatulNo ratings yet

- DATA MINING and MACHINE LEARNING. PREDICTIVE TECHNIQUES: ENSEMBLE METHODS, BOOSTING, BAGGING, RANDOM FOREST, DECISION TREES and REGRESSION TREES.: Examples with MATLABFrom EverandDATA MINING and MACHINE LEARNING. PREDICTIVE TECHNIQUES: ENSEMBLE METHODS, BOOSTING, BAGGING, RANDOM FOREST, DECISION TREES and REGRESSION TREES.: Examples with MATLABNo ratings yet

- A Robust and Efficient Real Time Network Intrusion Detection System Using Artificial Neural Network in Data MiningDocument10 pagesA Robust and Efficient Real Time Network Intrusion Detection System Using Artificial Neural Network in Data MiningijitcsNo ratings yet

- Rescuing Data From Decaying and Moribund Clinical Information SystemsDocument6 pagesRescuing Data From Decaying and Moribund Clinical Information SystemsMulya Nurmansyah ArdisasmitaNo ratings yet

- Autonomous Rule Creation For Intrusion Detection: 2011 IEEE Symposium On Computational Intelligence in Cyber SecurityDocument9 pagesAutonomous Rule Creation For Intrusion Detection: 2011 IEEE Symposium On Computational Intelligence in Cyber SecuritySara KhanNo ratings yet

- IEEE 2012 Titles AbstractDocument14 pagesIEEE 2012 Titles AbstractSathiya RajNo ratings yet

- E-Furniture House: Online Furniture Store ManagementDocument15 pagesE-Furniture House: Online Furniture Store Managementanand_gsoft3603No ratings yet

- Table of ContentsDocument5 pagesTable of Contentsanand_gsoft3603No ratings yet

- E-Furniture House: Online Furniture Store ManagementDocument15 pagesE-Furniture House: Online Furniture Store Managementanand_gsoft3603No ratings yet

- TD DFD ErdDocument4 pagesTD DFD Erdanand_gsoft3603No ratings yet

- E-Furniture House: Online Furniture Store ManagementDocument15 pagesE-Furniture House: Online Furniture Store Managementanand_gsoft3603No ratings yet

- First and SecondDocument3 pagesFirst and Secondanand_gsoft3603No ratings yet

- DFDDocument4 pagesDFDanand_gsoft3603No ratings yet

- A Study On Reverse Logistics Towards Retail Store ProductsDocument1 pageA Study On Reverse Logistics Towards Retail Store Productsanand_gsoft3603No ratings yet

- Barry GuardDocument14 pagesBarry Guardanand_gsoft3603No ratings yet

- E-Work Order Monitoring System: 2010 As Front End Tool and Microsoft SQL Server 2008 As Back End ToolDocument1 pageE-Work Order Monitoring System: 2010 As Front End Tool and Microsoft SQL Server 2008 As Back End Toolanand_gsoft3603No ratings yet

- Chapter - 1: 1.1 Meaning and Definition of Performance AppraisalDocument8 pagesChapter - 1: 1.1 Meaning and Definition of Performance Appraisalanand_gsoft3603No ratings yet

- Food ConsumptionDocument22 pagesFood Consumptionanand_gsoft3603No ratings yet

- Care Giver Suppliers QuestionnaireDocument5 pagesCare Giver Suppliers Questionnaireanand_gsoft3603No ratings yet

- Data Flow DiagramDocument3 pagesData Flow Diagramanand_gsoft3603No ratings yet

- Research Methodology, Data Collection and Analysis of Employee SurveyDocument3 pagesResearch Methodology, Data Collection and Analysis of Employee Surveyanand_gsoft36030% (1)

- Sony TV Brand Awareness StudyDocument9 pagesSony TV Brand Awareness Studyanand_gsoft3603No ratings yet

- Secure ATM Withdrawals with Fingerprint AuthenticationDocument2 pagesSecure ATM Withdrawals with Fingerprint Authenticationanand_gsoft3603No ratings yet

- Research Methodology, Data Collection and Analysis of Employee SurveyDocument3 pagesResearch Methodology, Data Collection and Analysis of Employee Surveyanand_gsoft36030% (1)

- Online Shopping DFDDocument5 pagesOnline Shopping DFDanand_gsoft3603No ratings yet

- Title of The ProjectDocument1 pageTitle of The Projectanand_gsoft3603No ratings yet

- Table NameDocument1 pageTable Nameanand_gsoft3603No ratings yet

- SdaDocument6 pagesSdaanand_gsoft3603No ratings yet

- System Testing and ImplementationDocument6 pagesSystem Testing and Implementationanand_gsoft3603No ratings yet

- Student Registration & File Upload Database TablesDocument2 pagesStudent Registration & File Upload Database Tablesanand_gsoft3603No ratings yet

- Index: 3.1.1 Drawbacks 3.2.1 FeaturesDocument1 pageIndex: 3.1.1 Drawbacks 3.2.1 Featuresanand_gsoft3603No ratings yet

- Vanet AlgorithmDocument1 pageVanet Algorithmanand_gsoft3603No ratings yet

- Important Telugu WordsDocument1 pageImportant Telugu Wordsanand_gsoft3603No ratings yet

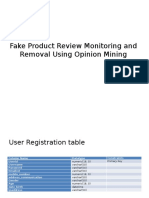

- Fake Product Review Monitoring and Removal Using Opinion MiningDocument10 pagesFake Product Review Monitoring and Removal Using Opinion Mininganand_gsoft3603No ratings yet

- User, Product, Review Database TablesDocument6 pagesUser, Product, Review Database Tablesanand_gsoft3603No ratings yet

- Backup Old CodeDocument2 pagesBackup Old Codeanand_gsoft3603No ratings yet

- Since 1977 Bonds Payable SolutionsDocument3 pagesSince 1977 Bonds Payable SolutionsNah HamzaNo ratings yet

- Parison of Dia para FerroDocument4 pagesParison of Dia para FerroMUNAZIRR FATHIMA F100% (1)

- SAP MM ReportsDocument59 pagesSAP MM Reportssaprajpal95% (21)

- A Grammar of Awa Pit (Cuaiquer) : An Indigenous Language of South-Western ColombiaDocument422 pagesA Grammar of Awa Pit (Cuaiquer) : An Indigenous Language of South-Western ColombiaJuan Felipe Hoyos García100% (1)

- Adafruit Color SensorDocument25 pagesAdafruit Color Sensorarijit_ghosh_18No ratings yet

- Ð.Ð.Á Valvoline Áóìá Áíáâáóç Ñéôóùíáó 9.6.2019: Omaäa ADocument6 pagesÐ.Ð.Á Valvoline Áóìá Áíáâáóç Ñéôóùíáó 9.6.2019: Omaäa AVagelis MoutoupasNo ratings yet

- Body Mechanics and Movement Learning Objectives:: by The End of This Lecture, The Student Will Be Able ToDocument19 pagesBody Mechanics and Movement Learning Objectives:: by The End of This Lecture, The Student Will Be Able TomahdiNo ratings yet

- NasaDocument26 pagesNasaMatei BuneaNo ratings yet

- LV 2000L AD2000 11B 16B Metric Dimension Drawing en 9820 9200 06 Ed00Document1 pageLV 2000L AD2000 11B 16B Metric Dimension Drawing en 9820 9200 06 Ed00FloydMG TecnominNo ratings yet

- MS Excel Word Powerpoint MCQsDocument64 pagesMS Excel Word Powerpoint MCQsNASAR IQBALNo ratings yet

- ISO-14001-2015 EMS RequirementsDocument17 pagesISO-14001-2015 EMS Requirementscuteboom1122No ratings yet

- All About Bearing and Lubrication A Complete GuideDocument20 pagesAll About Bearing and Lubrication A Complete GuideJitu JenaNo ratings yet

- Black Veil BridesDocument2 pagesBlack Veil BridesElyza MiradonaNo ratings yet

- Pro Ducorit UkDocument2 pagesPro Ducorit Uksreeraj1986No ratings yet

- ASTM D5895 - 2020 Tiempo SecadoDocument4 pagesASTM D5895 - 2020 Tiempo SecadoPablo OrtegaNo ratings yet

- 4 Types and Methods of Speech DeliveryDocument2 pages4 Types and Methods of Speech DeliveryKylie EralinoNo ratings yet

- Biokimia - DR - Maehan Hardjo M.biomed PHDDocument159 pagesBiokimia - DR - Maehan Hardjo M.biomed PHDHerryNo ratings yet

- Difference between Especially and SpeciallyDocument2 pagesDifference between Especially and SpeciallyCarlos ValenteNo ratings yet

- MT Series User Manual MT4YDocument28 pagesMT Series User Manual MT4YDhani Aristyawan SimangunsongNo ratings yet

- Solutions for QAT1001912Document3 pagesSolutions for QAT1001912NaveenNo ratings yet

- PREXC Operational Definition and Targets CY 2019 - 2020Document12 pagesPREXC Operational Definition and Targets CY 2019 - 2020iamaj8No ratings yet

- Kafka Netdb 06 2011 PDFDocument15 pagesKafka Netdb 06 2011 PDFaarishgNo ratings yet

- Inventory Valiuation Raw QueryDocument4 pagesInventory Valiuation Raw Querysatyanarayana NVSNo ratings yet

- Dbms PracticalDocument31 pagesDbms Practicalgautamchauhan566No ratings yet

- 4-7 The Law of Sines and The Law of Cosines PDFDocument40 pages4-7 The Law of Sines and The Law of Cosines PDFApple Vidal100% (1)

- Training Report PRASADDocument32 pagesTraining Report PRASADshekharazad_suman85% (13)

- NView NNM (V5) Operation Guide PDFDocument436 pagesNView NNM (V5) Operation Guide PDFAgoez100% (1)

- Airbag Inflation: The Airbag and Inflation System Stored in The Steering Wheel. See MoreDocument5 pagesAirbag Inflation: The Airbag and Inflation System Stored in The Steering Wheel. See MoreShivankur HingeNo ratings yet

- Vsphere Storage PDFDocument367 pagesVsphere Storage PDFNgo Van TruongNo ratings yet