Data Preprocessing

Uploaded by yeswanth chowdary nidamanuri

Managing Data with R

• One of the challenges faced while working with massive datasets involves gathering, preparing, and otherwise managing data from a variety of sources.

Saving, loading, and removing R data structures

• To save a data structure to a file that can be reloaded later or transferred to another system, use the save() function.

• The save() function writes one or more R data structures to the location specified by the file parameter.

• Suppose you have three objects named x, y, and z that you would like to save in a permanent file:

> save(x, y, z, file = "mydata.RData")

• The load() command can recreate any data structures that have been saved to an .RData file. To load the mydata.RData file we saved in the preceding code, simply type:

> load("mydata.RData")

• After working in an R session for some time, you may have accumulated a number of data structures.

• The ls() listing function returns a vector of all the data structures currently in memory:

> ls()

[1] "blood"        "flu_status"   "gender"       "m"
[5] "pt_data"      "subject_name" "subject1"     "symptoms"
[9] "temperature"

• R will automatically remove these from its memory upon quitting the session, but for large data structures, you may want to free up the memory sooner.

• The rm() remove function can be used for this purpose. For example, to eliminate the m and subject1 objects, simply type:

> rm(m, subject1)

• The rm() function can also be supplied with a character vector of object names through its list parameter. Combined with the ls() function, this clears the entire R session:

> rm(list=ls())
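The save/load/remove cycle can be tried end to end in a scratch file. The objects x, y, and z below are hypothetical example values. tempfile() is used so that nothing is written to the working directory. The sketch relies on load() invisibly returning the names of the objects it restored.

```r
# Three small example objects (hypothetical, for illustration only)
x <- 1:5
y <- c("a", "b")
z <- data.frame(id = 1:3, score = c(2.5, 3.0, 4.1))

# tempfile() gives a scratch path, so the working directory is left untouched
path <- tempfile(fileext = ".RData")
save(x, y, z, file = path)

# Remove the objects from the session, then recreate them from the file
rm(x, y, z)
print(exists("x"))      # FALSE: x is gone from the session
restored <- load(path)  # load() invisibly returns the names of the restored objects
print(restored)         # the names "x", "y" and "z"
print(x)                # x is back: 1 2 3 4 5
```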

Importing and saving data from CSV files

• The most common tabular text file format is the CSV (Comma-Separated Values) file, which, as the name suggests, uses the comma as a delimiter.

• CSV files can be imported to and exported from many common applications. A CSV file representing the medical dataset constructed previously could be stored as:

subject_name,temperature,flu_status,gender,blood_type
John Doe,98.1,FALSE,MALE,O
Jane Doe,98.6,FALSE,FEMALE,AB
Steve Graves,101.4,TRUE,MALE,A

• Given a patient data file named pt_data.csv located in the R working directory, the read.csv() function can be used as follows to load the file into R:

> pt_data <- read.csv("pt_data.csv", stringsAsFactors = FALSE)

• By default, R assumes that the CSV file includes a header line listing the names of the features in the dataset.

• If a CSV file does not have a header, specify the option header = FALSE, as shown in the following command, and R will assign default feature names of the form V1, V2, and so on:

> mydata <- read.csv("mydata.csv", stringsAsFactors = FALSE, header = FALSE)

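• To save a data frame to a CSV file, use the write.csv() function. If your data frame is named pt_data, simply enter the command below. Setting row.names = FALSE stops R from writing the row names as an extra, unnamed first column:

> write.csv(pt_data, file = "pt_data.csv", row.names = FALSE)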
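This sketch runs a round trip through a temporary CSV file. It rebuilds the three-patient table shown above, writes it with write.csv(), and reads it back with read.csv(). It then reads the same file with header = FALSE to show the default V1, V2, ... names. The temporary path is an assumption standing in for pt_data.csv in the working directory.

```r
# Rebuild the small patient dataset listed above
pt_data <- data.frame(
  subject_name = c("John Doe", "Jane Doe", "Steve Graves"),
  temperature  = c(98.1, 98.6, 101.4),
  flu_status   = c(FALSE, FALSE, TRUE),
  gender       = c("MALE", "FEMALE", "MALE"),
  blood_type   = c("O", "AB", "A"),
  stringsAsFactors = FALSE
)

path <- tempfile(fileext = ".csv")
write.csv(pt_data, file = path, row.names = FALSE)

# Read it back; the header line supplies the column names
pt_copy <- read.csv(path, stringsAsFactors = FALSE)
str(pt_copy)

# With header = FALSE the header line becomes an ordinary data row
# and R assigns the default names V1, V2, ...
raw <- read.csv(path, stringsAsFactors = FALSE, header = FALSE)
print(names(raw))   # "V1" "V2" "V3" "V4" "V5"
print(nrow(raw))    # 4: three patients plus the former header line
```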

Exploring and understanding data

• After collecting data and loading it into R's data structures, the next step in the machine learning process involves examining the data in detail.

• We will explore the usedcars.csv dataset, which contains actual data about used cars.

• Since the dataset is stored in CSV form, we can use the read.csv() function to load the data into an R data frame:

> usedcars <- read.csv("usedcars.csv", stringsAsFactors = FALSE)

Exploring the structure of data

• One of the first questions to ask is how the dataset is organized.

• The str() function provides a method to display the structure of R data structures such as data frames, vectors, or lists. It can be used to create the basic outline for our data dictionary:

> str(usedcars)

• Using such a simple command, we learn a wealth of information about the dataset: the number of observations and features, and the name, type, and first few values of each feature.

Exploring numeric variables

• To investigate the numeric variables in the used car data, we will employ a common set of measurements for describing values, known as summary statistics.

• The summary() function displays several common summary statistics. Let's take a look at a single feature, year:

> summary(usedcars$year)

• We can also use the summary() function to obtain summary statistics for several numeric variables at the same time:

> summary(usedcars[c("price", "mileage")])
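usedcars.csv is not reproduced here, so this sketch uses a tiny hypothetical stand-in with the same kind of columns. Every value is invented for illustration. It shows what str() and summary() report on a data frame.

```r
# Hypothetical toy values in the shape of usedcars.csv (not the real data)
cars <- data.frame(
  year    = c(2008L, 2010L, 2011L, 2009L, 2010L),
  model   = c("SE", "SEL", "SE", "SES", "SE"),
  price   = c(10000, 14500, 13000, 11200, 12600),
  mileage = c(60000, 32000, 28000, 51000, 40000),
  stringsAsFactors = FALSE
)

str(cars)                             # observations, variables, and each column's type
year_stats <- summary(cars$year)      # Min., 1st Qu., Median, Mean, 3rd Qu., Max.
print(year_stats)
summary(cars[c("price", "mileage")])  # one column of statistics per feature
```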

Measuring the central tendency – mean and median

• Measures of central tendency are a class of statistics used to identify a value that falls in the middle of a set of data.

• You are most likely already familiar with one common measure of center: the average. In common use, when something is deemed average, it falls somewhere between the extreme ends of the scale.

• R provides a mean() function, which calculates the mean of a vector of numbers. For example, for three salaries of $36,000, $44,000, and $56,000:

> mean(c(36000, 44000, 56000))

[1] 45333.33

• The summary() output listed mean values for the price and mileage variables. The means suggest that the typical used car in this dataset was listed at a price of $12,962 and had a mileage of 44,261.

• Another commonly used measure of central tendency is the median, which is the value that occurs halfway through an ordered list of values.

• As with the mean, R provides a median() function, which we can apply to the same salary data, as shown in the following example:

> median(c(36000, 44000, 56000))

[1] 44000
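Both statistics can be computed by hand from their definitions and checked against mean() and median(). The last two lines replace one salary with a hypothetical extreme value. They show that a single outlier moves the mean much more than the median.

```r
salaries <- c(36000, 44000, 56000)

# Mean: sum of the values divided by how many there are
manual_mean <- sum(salaries) / length(salaries)

# Median: middle element of the sorted vector (this vector has an odd length)
sorted <- sort(salaries)
manual_median <- sorted[(length(sorted) + 1) / 2]

print(c(manual_mean, mean(salaries)))      # both 45333.33
print(c(manual_median, median(salaries)))  # both 44000

# One extreme (hypothetical) value pulls the mean far more than the median
skewed <- c(36000, 44000, 560000)
print(mean(skewed))    # 213333.3
print(median(skewed))  # 44000
```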

Measuring spread – quartiles and the five-number summary

• To measure diversity, we need to employ another type of summary statistic, one that is concerned with the spread of data, that is, how tightly or loosely the values are spaced.

• The five-number summary is a set of five statistics that roughly depict the spread of a feature's values:

1. Minimum (Min.)

2. First quartile, or Q1 (1st Qu.)

3. Median, or Q2 (Median)

4. Third quartile, or Q3 (3rd Qu.)

5. Maximum (Max.)

• The minimum and maximum are the most extreme feature values, indicating the smallest and largest values, respectively.

• R provides the min() and max() functions to calculate these values on a vector of data.

• In R, the range() function returns both the minimum and the maximum value:

> range(usedcars$price)

• Combining range() with the diff() difference function gives the range of the data as a single number, the maximum minus the minimum:

> diff(range(usedcars$price))

• The quartiles divide a dataset into four portions, each containing roughly a quarter of the values. By default, the quantile() function returns the five-number summary.

• The seq() function generates vectors of evenly spaced values. Passed to quantile(), it makes it easy to obtain other slices of data, such as the quintiles (five groups), as shown in the following command:

> quantile(usedcars$price, seq(from = 0, to = 1, by = 0.20))
     0%     20%     40%     60%     80%    100%
 3800.0 10759.4 12993.8 13992.0 14999.0 21992.0
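Here the spread functions are applied to a small hypothetical price vector instead of usedcars$price. The values are invented, with the minimum and maximum borrowed from the output above. IQR(), which the slides do not mention, is a standard base R function that returns Q3 minus Q1.

```r
# Hypothetical prices standing in for usedcars$price
prices <- c(3800, 9000, 11000, 12500, 13000, 14000, 15000, 21992)

min(prices)
max(prices)
range(prices)         # c(min, max): 3800 21992
diff(range(prices))   # max - min: 18192
quantile(prices)      # default probs 0, 0.25, 0.5, 0.75, 1: the five-number summary
quantile(prices, seq(from = 0, to = 1, by = 0.20))  # quintile cut points
IQR(prices)           # interquartile range, Q3 - Q1
```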

Exploring categorical variables

• The used car dataset has three categorical variables: model, color, and transmission.

• Additionally, we might consider treating the year variable as categorical; although it has been loaded as a numeric (int) type vector, each year is a category that could apply to multiple cars.

• A table that presents a single categorical variable is known as a one-way table.

• The table() function can be used to generate one-way tables for our used car data:

> table(usedcars$year)

> table(usedcars$model)

> table(usedcars$color)

• The table() output lists the categories of the nominal variable and a count of the number of values falling into each category.

• R can also calculate table proportions directly, using the prop.table() command on a table produced by the table() function:

> model_table <- table(usedcars$model)
> prop.table(model_table)

• The results of prop.table() can be combined with other R functions to transform the output. For example, the following converts the color proportions to percentages rounded to one decimal place:

> color_pct <- table(usedcars$color)

> color_pct <- prop.table(color_pct) * 100

> round(color_pct, digits = 1)
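The counts-to-percentages pipeline can be checked on a small hypothetical colour vector whose proportions are easy to verify by hand. The eight values are invented. table() sorts the categories alphabetically.

```r
# Hypothetical colours standing in for usedcars$color
colors <- c("Black", "Silver", "Black", "Red", "White", "Black", "Silver", "Blue")

color_table <- table(colors)                  # counts per category
color_pct   <- prop.table(color_table) * 100  # proportions as percentages
round(color_pct, digits = 1)                  # Black 37.5, Blue 12.5, Red 12.5, Silver 25, White 12.5
```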

Exploring relationships between variables

• So far, we have examined variables one at a time, calculating only univariate statistics.

• The next step is to examine bivariate relationships, which consider the relationship between two variables.

• Relationships of more than two variables are called multivariate relationships.
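The slides stop at the definitions. As a hedged illustration, here are two common base R ways to look at a bivariate relationship:

- A two-way table via table() for two categorical variables.
- A correlation coefficient via cor() for two numeric variables.

The vectors are hypothetical toy values, not the used car data.

```r
# Hypothetical toy values standing in for columns of usedcars.csv
model        <- c("SE", "SEL", "SE", "SES", "SE")
transmission <- c("AUTO", "AUTO", "MANUAL", "AUTO", "AUTO")
price        <- c(10000, 14500, 13000, 11200, 12600)
mileage      <- c(60000, 32000, 28000, 51000, 40000)

# Categorical x categorical: a two-way table counts each combination of values
model_by_trans <- table(model, transmission)
print(model_by_trans)

# Numeric x numeric: Pearson correlation, always between -1 and 1
r <- cor(price, mileage)
print(r)   # negative here: higher mileage goes with lower price in these toy values
```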

Data preprocessing

• Data preprocessing is the initial phase of machine learning, in which data is prepared for machine learning models.

Steps in Data Preprocessing

• Step 1: Importing the dataset

• Step 2: Handling the missing data

• Step 3: Encoding categorical data

• Step 4: Splitting the dataset into the training set and test set

• Step 5: Feature scaling of the training_set and test_set

Step 1: Importing the dataset

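• Here is how to achieve this with the read.csv() function used earlier:

Dataset = read.csv('data.csv')

• This code imports our data stored in CSV format. (The readr package provides a similar read_csv() function, which requires library(readr) to be loaded first.)

• We can have a look at our data using the View() function (note the capital V):

View(Dataset)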

Step 2: Handling the missing data

• Real-world datasets often contain missing values (NA in R). This problem needs to be solved before implementing our machine learning models; otherwise it will cause serious problems, because many models cannot handle NA values. It is therefore our responsibility to deal with the missing data using the most appropriate technique.

• Here are two techniques we can use to handle missing data:

1. Delete the observations that contain missing data: this technique is suitable for big datasets with very few missing values. For example, deleting one row from a dataset with thousands of observations is unlikely to affect the quality of the data.

When the dataset contains many missing values, this technique can be very dangerous. Deleting many rows from a dataset can lead to the loss of crucial information contained in the data.

To avoid this, we can instead use a technique that keeps every observation:

2. Replace the missing data with the mean of the feature (column) in which the data is missing.

• This technique is widely used and is often preferred over the first one because no observations are lost. Keep in mind, however, that it is a simple approach: filling every gap with the same value understates how much the feature actually varies.

Dataset$Age = ifelse(is.na(Dataset$Age),
                     ave(Dataset$Age, FUN = function(x) mean(x, na.rm = TRUE)),
                     Dataset$Age)

• What does the code above do?

• Dataset$Age: selects the Age column from our dataset.

• In the Age column we have just selected, we need to replace the missing data while keeping the data that is not missing.

• This is achieved with the ifelse() function, which takes three parameters:

• The first parameter is the condition to test.

• The second parameter is the value returned where the condition is true.

• The third parameter is the value returned where the condition is false.

Our condition is is.na(Dataset$Age).

• This tells us whether each value in Dataset$Age is missing. It returns a logical vector: TRUE where a value is missing and FALSE where it is not.

• The second parameter, the ave() function, computes the mean of the Age column. Called without grouping variables, ave() returns that single mean repeated for every row, so it lines up element by element with Dataset$Age.

• Because this column contains NA values, we need to exclude them from the calculation of the mean; otherwise the mean itself would be NA.

• This is why we pass na.rm = TRUE to the mean() function: it tells mean() to drop the NA values and average only the values that are present in the Age vector.

• The third parameter is the value returned where the value in the Age column is not missing, namely the original Age value itself.

• After this step, the missing value in the Age column of our dataset has been replaced with the mean of the same column.

• We do the same for the Salary column.
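Both techniques appear side by side in this small sketch on a hypothetical stand-in for data.csv. The column names follow the text (Country, Age, Salary, Purchased), but every value is invented. na.omit() performs the row deletion of technique 1. The ifelse()/ave() lines are the mean-imputation code above, applied to Age and Salary.

```r
# Hypothetical stand-in for data.csv (values invented for illustration)
Dataset <- data.frame(
  Country   = c("France", "Spain", "Germany", "Spain", "Germany", "France"),
  Age       = c(44, 27, 30, 38, NA, 35),
  Salary    = c(72000, 48000, 54000, 61000, 58000, NA),
  Purchased = c("NO", "YES", "NO", "NO", "YES", "YES"),
  stringsAsFactors = FALSE
)

colSums(is.na(Dataset))   # number of NAs per column

# Technique 1: drop every row that has at least one NA
complete_rows <- na.omit(Dataset)
print(nrow(complete_rows))  # 4 of the 6 rows survive

# Technique 2: replace each NA with the mean of its column
Dataset$Age = ifelse(is.na(Dataset$Age),
                     ave(Dataset$Age, FUN = function(x) mean(x, na.rm = TRUE)),
                     Dataset$Age)
Dataset$Salary = ifelse(is.na(Dataset$Salary),
                        ave(Dataset$Salary, FUN = function(x) mean(x, na.rm = TRUE)),
                        Dataset$Salary)
print(Dataset)  # Age[5] is now 34.8 and Salary[6] is now 58600
```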

Step 3: Encoding categorical data

• Encoding refers to transforming text data into numeric data. Encoding categorical data simply means transforming data that fall into categories into numeric codes.

• In our dataset, the Country column is categorical data with 3 levels, i.e. France, Spain, and Germany. The Purchased column is categorical data as well, with 2 categories, i.e. YES and NO.

• The machine learning models we build on our dataset are based on mathematical equations, and those equations only accept numbers.

• To transform a categorical variable into numeric codes, we use the factor() function, which stores the variable as a factor whose levels are given numeric labels.

• Let's start by encoding the Country column:

Dataset$Country = factor(Dataset$Country,
                         levels = c('France', 'Spain', 'Germany'),
                         labels = c(1, 2, 3))

We do the same for the Purchased column.
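The sketch below applies the same factor() call to Country and Purchased, using a hypothetical four-row data frame. The Purchased labels 0 and 1 are an assumption, not taken from the slides. The result is still a factor with labels "1", "2", "3" rather than a numeric column. This is why the later scaling step has to skip the encoded columns.

```r
# Hypothetical four-row stand-in for the dataset
Dataset <- data.frame(
  Country   = c("France", "Spain", "Germany", "Spain"),
  Purchased = c("NO", "YES", "NO", "YES"),
  stringsAsFactors = FALSE
)

Dataset$Country = factor(Dataset$Country,
                         levels = c('France', 'Spain', 'Germany'),
                         labels = c(1, 2, 3))
# Assumed labels: NO -> 0, YES -> 1
Dataset$Purchased = factor(Dataset$Purchased,
                           levels = c('NO', 'YES'),
                           labels = c(0, 1))

print(levels(Dataset$Country))      # "1" "2" "3"
print(as.integer(Dataset$Country))  # internal codes: 1 2 3 2
print(is.numeric(Dataset$Country))  # FALSE: still a factor, not a numeric column
```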

Step 4: Splitting the dataset into the training and test sets

• In machine learning, we split data into two parts:

• Training set: the part of the data on which we train our machine learning model.

• Test set: the part of the data on which we evaluate the performance of our machine learning model.

library(caTools)  # provides sample.split(); install with install.packages('caTools') if needed
set.seed(123)
# split is TRUE if an observation goes to the training set and FALSE if it goes to the test set
split = sample.split(Dataset$Purchased, SplitRatio = 0.8)
# Create the training set and test set separately
training_set = subset(Dataset, split == TRUE)
test_set = subset(Dataset, split == FALSE)
training_set
test_set
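caTools may not be installed everywhere. This is a base R sketch of the same idea: within each class of Purchased, roughly 80% of the rows are sampled into the training set. It is an assumption-labelled approximation, not caTools' implementation, and the exact rows it picks will differ from sample.split(). The ten-row dataset is hypothetical.

```r
set.seed(123)
# Hypothetical labelled data: five NO and five YES rows
Dataset <- data.frame(
  Age       = c(44, 27, 30, 38, 40, 35, 48, 50, 37, 29),
  Purchased = c("NO", "YES", "NO", "NO", "YES", "YES", "NO", "YES", "NO", "YES"),
  stringsAsFactors = FALSE
)

# Stratified split: sample 80% of the rows within each class
split_ratio <- 0.8
split <- rep(FALSE, nrow(Dataset))
for (cls in unique(Dataset$Purchased)) {
  idx <- which(Dataset$Purchased == cls)
  n_train <- round(split_ratio * length(idx))
  split[idx[sample.int(length(idx), n_train)]] <- TRUE
}

training_set <- subset(Dataset, split == TRUE)
test_set     <- subset(Dataset, split == FALSE)
print(table(training_set$Purchased))  # 4 NO and 4 YES, so the class balance is kept
```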

Step 5: Feature scaling

• In most datasets, the features (also known as inputs) are not on the same scale. Many machine learning models, such as k-nearest neighbors, are based on Euclidean distance.

• In the calculation of the Euclidean distance, features with large units dominate those with small units, and it is as if the features with small units did not exist.

• To prevent this, we rescale the features so that they are on comparable scales. There are several ways to scale features; the most commonly used are standardization and normalization. Standardization gives each feature a mean of 0 and a standard deviation of 1, so most values fall roughly between -3 and 3. Normalization (min-max scaling) maps each feature into the range 0 to 1.

• As a rule of thumb, standardization is often preferred when a feature is approximately normally distributed, whereas normalization makes no assumption about the distribution but is sensitive to outliers.

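• The formulas for these two techniques are:

Standardization: x_stand = (x - mean(x)) / sd(x)

Normalization: x_norm = (x - min(x)) / (max(x) - min(x))

• In R, the scale() function applies standardization column by column. Here it is applied to the numeric columns 2 and 3 of each set: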

training_set[, 2:3] = scale(training_set[, 2:3])
test_set[, 2:3] = scale(test_set[, 2:3])
training_set
test_set

If we instead try to scale the whole data frame, R returns an error:

training_set = scale(training_set)  # returns an error

• The reason is that our encoded columns are factors rather than numeric columns, so scale() cannot compute their means and standard deviations.
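This sketch checks the standardization formula against scale() and implements min-max normalization by hand. The data frames and the helper name normalize are hypothetical. It also shows one refinement, hedged as a common recommendation rather than the author's method. The code above scales the test set with its own mean and standard deviation. Many practitioners instead reuse the training set's values, which scale() stores in the "scaled:center" and "scaled:scale" attributes, so that both sets go through the same transformation.

```r
# Hypothetical numeric features on very different scales
train <- data.frame(Age = c(44, 27, 30, 38, 35),
                    Salary = c(72000, 48000, 54000, 61000, 58000))
test  <- data.frame(Age = c(40, 50), Salary = c(63000, 83000))

# Standardization by hand, (x - mean) / sd, compared with scale()
manual <- sapply(train, function(x) (x - mean(x)) / sd(x))
scaled_train <- scale(train)
print(all.equal(manual, scaled_train, check.attributes = FALSE))  # TRUE

# Min-max normalization: (x - min) / (max - min) maps each column to [0, 1]
normalize <- function(x) (x - min(x)) / (max(x) - min(x))
norm_train <- as.data.frame(lapply(train, normalize))

# Scale the test set with the training set's mean and sd
centers <- attr(scaled_train, "scaled:center")
scales  <- attr(scaled_train, "scaled:scale")
scaled_test <- scale(test, center = centers, scale = scales)
print(scaled_test)
```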

You might also like

- Introduction To Data Science With R ProgrammingDocument91 pagesIntroduction To Data Science With R ProgrammingVimal KumarNo ratings yet

- Introduction To RDocument36 pagesIntroduction To RRefael LavNo ratings yet

- Basics of R Programming and Data Structures PDFDocument80 pagesBasics of R Programming and Data Structures PDFAsmatullah KhanNo ratings yet

- Data Manipulation and Visualization in RDocument58 pagesData Manipulation and Visualization in RKundan VanamaNo ratings yet

- R LecturesDocument10 pagesR LecturesApam BenjaminNo ratings yet

- Unit-1 (Part-2) : Loading and Handling Data in RDocument78 pagesUnit-1 (Part-2) : Loading and Handling Data in RAshok ReddyNo ratings yet

- R TutorialDocument39 pagesR TutorialASClabISBNo ratings yet

- R Programming SlidesDocument73 pagesR Programming SlidesYan Jun HoNo ratings yet

- Managing and Understanding DataDocument42 pagesManaging and Understanding DataBlue WhaleNo ratings yet

- R - Lecture 4Document37 pagesR - Lecture 4mxmlan21No ratings yet

- UntitledDocument59 pagesUntitledSylvin GopayNo ratings yet

- Introduction to Data Science and Basic Data Analytics using RDocument32 pagesIntroduction to Data Science and Basic Data Analytics using RAkash Varma JampanaNo ratings yet

- Managing and Understanding DataDocument20 pagesManaging and Understanding DataBlue WhaleNo ratings yet

- Pandas For Machine Learning: AcadviewDocument18 pagesPandas For Machine Learning: AcadviewYash BansalNo ratings yet

- STATS LAB Basics of R PDFDocument77 pagesSTATS LAB Basics of R PDFAnanthu SajithNo ratings yet

- Functions and PackagesDocument7 pagesFunctions and PackagesNur SyazlianaNo ratings yet

- MIT 302 - Statistical Computing II - Tutorial 02Document5 pagesMIT 302 - Statistical Computing II - Tutorial 02evansojoshuzNo ratings yet

- Introduction To R: Arin Basu MD MPH DataanalyticsDocument33 pagesIntroduction To R: Arin Basu MD MPH DataanalyticsANo ratings yet

- ML FileDocument12 pagesML Filehdofficial2003No ratings yet

- R ProgrammingDocument35 pagesR Programmingharshit rajNo ratings yet

- Introduction To RDocument20 pagesIntroduction To Rseptian_bbyNo ratings yet

- DM File - MergedDocument37 pagesDM File - MergedCSD70 Vinay SelwalNo ratings yet

- Modul 04Document31 pagesModul 04J Warneck GultømNo ratings yet

- ST 540: An Introduction To RDocument6 pagesST 540: An Introduction To RVeru ReenaNo ratings yet

- Ai - Phase 3Document9 pagesAi - Phase 3Manikandan NNo ratings yet

- Tidyverse: Core Packages in TidyverseDocument8 pagesTidyverse: Core Packages in TidyverseAbhishekNo ratings yet

- R GettingstartedDocument7 pagesR GettingstartedChandra Prakash KhatriNo ratings yet

- Introduction To R Programming 1691124649Document79 pagesIntroduction To R Programming 1691124649puneetbdNo ratings yet

- What Is A Data Structure?: Data Structures in Data ScienceDocument24 pagesWhat Is A Data Structure?: Data Structures in Data ScienceMeghna ChoudharyNo ratings yet

- ECONOMY OF DIFFERENT COUNTRIESDocument24 pagesECONOMY OF DIFFERENT COUNTRIESrs5370No ratings yet

- DatapreprocessingDocument8 pagesDatapreprocessingNAVYA TadisettyNo ratings yet

- 2 UndefinedDocument86 pages2 Undefinedjefoli1651No ratings yet

- What Is Compiler in Datastage - Compilation Process in DatastageDocument14 pagesWhat Is Compiler in Datastage - Compilation Process in DatastageshivnatNo ratings yet

- Vectors, Factors, Lists, Arrays and Dataframes: Introduction To ProgrammingDocument87 pagesVectors, Factors, Lists, Arrays and Dataframes: Introduction To ProgrammingAYUSH RAVINo ratings yet

- Talk 2-Data Structures in R-UnlockedDocument52 pagesTalk 2-Data Structures in R-UnlockedAYUSH RAVINo ratings yet

- R Programming For NGS Data AnalysisDocument5 pagesR Programming For NGS Data AnalysisAbcdNo ratings yet

- Stock Data Pivoted into DataFrames for AnalysisDocument4 pagesStock Data Pivoted into DataFrames for Analysisyogesh patil100% (1)

- #Create Vector of Numeric Values #Display Class of VectorDocument10 pages#Create Vector of Numeric Values #Display Class of VectorAnooj SrivastavaNo ratings yet

- R Lecture#2Document56 pagesR Lecture#2Muhammad HamdanNo ratings yet

- Data Structures Lec PDFDocument156 pagesData Structures Lec PDFTigabu YayaNo ratings yet

- BIG DATA ANALYTICS INTRODUCTIONDocument65 pagesBIG DATA ANALYTICS INTRODUCTIONMurtaza VasanwalaNo ratings yet

- Bdo Co1 Session 4Document43 pagesBdo Co1 Session 4s.m.pasha0709No ratings yet

- Introduction To R Installation: Data Types Value ExamplesDocument9 pagesIntroduction To R Installation: Data Types Value ExamplesDenis ShpekaNo ratings yet

- RANDOM FOREST (Binary Classification)Document5 pagesRANDOM FOREST (Binary Classification)Noor Ul HaqNo ratings yet

- R Short TutorialDocument5 pagesR Short TutorialPratiush TyagiNo ratings yet

- Time Series Analysis With R - Part IDocument23 pagesTime Series Analysis With R - Part Ithcm2011No ratings yet

- Advanced C Concepts and Programming: First EditionFrom EverandAdvanced C Concepts and Programming: First EditionRating: 3 out of 5 stars3/5 (1)

- Sort Pandas DataFrameDocument37 pagesSort Pandas DataFrameB. Jennifer100% (1)

- Data Exploration With R I PDFDocument21 pagesData Exploration With R I PDFatiqah ariffNo ratings yet

- Mini Project - Factor Hair Analysis: Sravanthi.MDocument24 pagesMini Project - Factor Hair Analysis: Sravanthi.MSweety SekharNo ratings yet

- Part I: Introductory Materials: Introduction To RDocument25 pagesPart I: Introductory Materials: Introduction To Rpratiksha patilNo ratings yet

- An Introduction To R: 1 BackgroundDocument17 pagesAn Introduction To R: 1 BackgroundDeepak GuptaNo ratings yet

- Implementing Machine Learning AlgorithmsDocument43 pagesImplementing Machine Learning AlgorithmsPankaj Singh100% (1)

- Differentiate Between Data Type and Data StructuresDocument11 pagesDifferentiate Between Data Type and Data StructureskrishnakumarNo ratings yet

- FP Unit 3Document105 pagesFP Unit 3Dimple GullaNo ratings yet

- Tutorial 03 - Introduction To DataDocument7 pagesTutorial 03 - Introduction To DataHuzaima QuddusNo ratings yet

- Aindump.70 452.v2010!11!12.byDocument71 pagesAindump.70 452.v2010!11!12.byvikas4cat09No ratings yet

- Data Structure NotesDocument171 pagesData Structure NoteskavirajeeNo ratings yet

- Unit 3 (MCQ)Document9 pagesUnit 3 (MCQ)yeswanth chowdary nidamanuriNo ratings yet

- Classify Mobile Phone Spam with Naive BayesDocument35 pagesClassify Mobile Phone Spam with Naive BayesGaganvir kaurNo ratings yet

- R VectorsDocument7 pagesR Vectorsyeswanth chowdary nidamanuriNo ratings yet

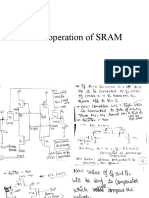

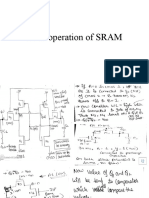

- Write Operation of SRAMDocument2 pagesWrite Operation of SRAMyeswanth chowdary nidamanuriNo ratings yet

- Synchronous Sequential Logic: UNIT-4Document52 pagesSynchronous Sequential Logic: UNIT-4yeswanth chowdary nidamanuriNo ratings yet

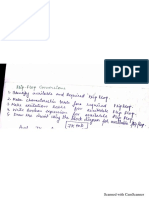

- Flip Flop COnversionsDocument3 pagesFlip Flop COnversionsyeswanth chowdary nidamanuriNo ratings yet

- SRAM Read Operation ExplainedDocument4 pagesSRAM Read Operation Explainedyeswanth chowdary nidamanuriNo ratings yet

- Read Operation of SRAMDocument4 pagesRead Operation of SRAMyeswanth chowdary nidamanuriNo ratings yet

- Memories CompleteDocument51 pagesMemories Completeyeswanth chowdary nidamanuriNo ratings yet

- Software Configuration ManagementDocument33 pagesSoftware Configuration Managementyeswanth chowdary nidamanuriNo ratings yet

- Flip Flops (SR, JK, D)Document8 pagesFlip Flops (SR, JK, D)yeswanth chowdary nidamanuriNo ratings yet

- Flip Flop COnversionsDocument3 pagesFlip Flop COnversionsyeswanth chowdary nidamanuriNo ratings yet

- Software MaintainenceDocument29 pagesSoftware Maintainenceyeswanth chowdary nidamanuriNo ratings yet

- Medicinal Plant Identification Using Machine Learning".InDocument27 pagesMedicinal Plant Identification Using Machine Learning".Inrajesh mechNo ratings yet

- Change in Mangrove SoilDocument16 pagesChange in Mangrove Soilkarthika gopiNo ratings yet

- Air-Writing Recognition Using Deep Convolutional and Recurrent Neural Network ArchitecturesDocument6 pagesAir-Writing Recognition Using Deep Convolutional and Recurrent Neural Network ArchitecturesVeda GorrepatiNo ratings yet

- Topic 4Document32 pagesTopic 4hmood966No ratings yet

- 05 Deep LearningDocument53 pages05 Deep LearningAgus SugihartoNo ratings yet

- Designing a 2-in-1 weighing scale and egg sorting machineDocument20 pagesDesigning a 2-in-1 weighing scale and egg sorting machineANCHETA, Yuri Mark Christian N.No ratings yet

- A Machine Learning Project ReportDocument12 pagesA Machine Learning Project ReportSparsh DhamaNo ratings yet

- Journal PublicationsDocument13 pagesJournal PublicationsSaritaNo ratings yet

- Proposal TemplateDocument15 pagesProposal Templatezafararham233No ratings yet

- Lyzhov 20 ADocument10 pagesLyzhov 20 AammuaratiNo ratings yet

- Data Science Projects For Final YearDocument1 pageData Science Projects For Final YearNageswar MakalaNo ratings yet

- Artificial Neural Network PHD ThesisDocument5 pagesArtificial Neural Network PHD Thesisimddtsief100% (2)

- Application of Soft Computing Techniques (KCS 056Document38 pagesApplication of Soft Computing Techniques (KCS 056you • were • trolledNo ratings yet

- LLaMA 2Document77 pagesLLaMA 2권오민 / 학생 / 전기·정보공학부No ratings yet

- LogiQA: A Challenge Dataset For Machine Reading Comprehension With Logical ReasoningDocument7 pagesLogiQA: A Challenge Dataset For Machine Reading Comprehension With Logical Reasoningyvonnewjy97No ratings yet

- Automated Seismic InterpretationDocument38 pagesAutomated Seismic InterpretationAhmedNo ratings yet

- Detecting Fake News with NLP and BlockchainDocument83 pagesDetecting Fake News with NLP and BlockchainKeerthana MurugesanNo ratings yet

- State of Charge SoC Estimation of Battery Energy Storage System BESS Using Artificial Neural Network ANN Based On IoT - Enabled Embedded SystemDocument7 pagesState of Charge SoC Estimation of Battery Energy Storage System BESS Using Artificial Neural Network ANN Based On IoT - Enabled Embedded SystemSarath SanthoshNo ratings yet

- 02 -Bharghav Fake News DetectionDocument49 pages02 -Bharghav Fake News DetectionDileep Kumar UllamparthiNo ratings yet

- Introduction To Machine Learning, Neural Networks, and Deep LearningDocument12 pagesIntroduction To Machine Learning, Neural Networks, and Deep LearningAdit SanurNo ratings yet

- 1.1 Deep Structural Enhanced Network For Document ClusteringDocument16 pages1.1 Deep Structural Enhanced Network For Document ClusteringAli Ahmed ShaikhNo ratings yet

- AIML Final Cpy WordDocument15 pagesAIML Final Cpy WordSachin ChavanNo ratings yet

- People Identification Via Tongue Print Using Fine-Tuning Deep LearningDocument9 pagesPeople Identification Via Tongue Print Using Fine-Tuning Deep LearningIJRES teamNo ratings yet

- Lecture - 5 - ValidationDocument30 pagesLecture - 5 - ValidationbberkcanNo ratings yet

- Mini Proj RCT 222 PDFDocument34 pagesMini Proj RCT 222 PDF4073 kolakaluru mounishaNo ratings yet

- An Overview of Backdoor Attacks Against Deep Neural Networks and Possible DefencesDocument27 pagesAn Overview of Backdoor Attacks Against Deep Neural Networks and Possible DefencesAnwar ShahNo ratings yet

- Paper 38-Inspection System For Glass Bottle Defect ClassificationDocument10 pagesPaper 38-Inspection System For Glass Bottle Defect Classificationporix86No ratings yet

- Plant Leaf Disease PredictionDocument14 pagesPlant Leaf Disease PredictionSUSHANT KUMAR PANDEYNo ratings yet

- Related WorkedDocument10 pagesRelated WorkedZinNo ratings yet

- Masters in Data Science BrochureDocument20 pagesMasters in Data Science BrochureHeisenberg FredNo ratings yet