AI for Custom Documents

Team Name: DocSpeak

Team Members: 1) Apurv Jadhav

2) Jayaram Iyer

3) Vedant Kesarkar

4) Soham Jadhav

Affiliated Institute: SIES GST

Problem Statement

Some existing problems with LLMs:

• Limited amount of text that can be input at once

• Outdated knowledge

• Difficulty comparing information from multiple sources

Motivation

• As a student, I find it very difficult to go through the textbooks prescribed by MU for the subjects I am studying.

• In such cases, it would be much easier to provide a document to an LLM and let it do the work.

• Our project lets users provide custom knowledge (their own documents) to an LLM and converse with it about that content.

• It also lets them provide multiple documents on the same topic and compare them.

Methodology

1. Data Ingestion: Extract the text from each PDF document (PDFs are a binary format, so the text must be extracted before it can be processed), then split the text into manageable chunks.

2. Text Embedding: Use an OpenAI embedding model to convert the text chunks into embeddings, numerical vector representations of their content.

3. Vector Store: Store these embeddings in a vector store, a database optimized for similarity search, organized into namespaces that correspond to different documents or years.

4. Query Processing:

• For single-document queries: Identify the relevant namespace from the query, retrieve the most similar chunks from that namespace, and pass them to GPT-4 as context to generate the response.

• For multi-document queries: Extract the years mentioned in the query, map them to their corresponding namespaces, retrieve relevant chunks from each of those namespaces, and combine them as context for GPT-4 to generate a single response.
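
The methodology above can be sketched end to end in a few lines. This is a minimal, self-contained illustration under stated assumptions, not the team's implementation: the names `chunk_text`, `embed`, `cosine` and `NamespacedStore` are hypothetical, PDF text extraction is omitted (the input is assumed to be already-extracted text), the bag-of-words `embed` stands in for an OpenAI embedding model, and the in-memory store stands in for a vector database such as Pinecone, so it runs without API keys. The GPT-4 generation step is left out.

```python
import math
import re
from collections import Counter, defaultdict


def chunk_text(text, chunk_size=200, overlap=50):
    """Step 1: split extracted text into overlapping character windows."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    if not text:
        return []
    step = chunk_size - overlap
    # Window starts are spaced `step` apart; the last window reaches the end of the text.
    return [text[i:i + chunk_size] for i in range(0, max(len(text) - overlap, 1), step)]


def embed(text):
    """Step 2 stand-in: a bag-of-words count vector, NOT a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class NamespacedStore:
    """Step 3 stand-in: an in-memory vector store partitioned by namespace."""

    def __init__(self):
        self._data = defaultdict(list)  # namespace -> list of (vector, chunk)

    def upsert(self, namespace, chunks):
        for chunk in chunks:
            self._data[namespace].append((embed(chunk), chunk))

    def query(self, namespaces, question, top_k=3):
        """Step 4: rank chunks from the given namespaces by similarity to the question.

        Pass one namespace for a single-document query, several for a multi-document query.
        Returns (score, namespace, chunk) tuples, best first.
        """
        q = embed(question)
        hits = [
            (cosine(q, vec), ns, chunk)
            for ns in namespaces
            for vec, chunk in self._data.get(ns, [])
        ]
        hits.sort(key=lambda hit: hit[0], reverse=True)
        return hits[:top_k]


# Usage: two "documents" stored under separate namespaces, queried together.
store = NamespacedStore()
store.upsert("report-2022", chunk_text("Revenue grew 10 percent in 2022. Costs were flat."))
store.upsert("report-2023", chunk_text("Revenue grew 15 percent in 2023. Costs rose slightly."))
for score, ns, chunk in store.query(["report-2022", "report-2023"], "How did revenue grow?", top_k=2):
    # In the described system, these chunks would be passed to GPT-4 as context.
    print(ns, round(score, 2), chunk)
```

The overlap between chunks is a common design choice so that a sentence split at a chunk boundary still appears whole in at least one chunk; the sizes used here are arbitrary.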

Innovation

• Multi-Document Querying: Users can query across multiple PDFs simultaneously, enabling trend analysis and insight extraction.

• Dynamic Namespace Extraction: Automatically identifying document identifiers in the query streamlines querying across multiple documents.

• Intelligent Contextual Analysis: Leveraging GPT-3.5, our system provides responses tailored to the query and grounded in the document context.

• Source Attribution: Displaying the sources used to generate each response improves transparency and supports informed decision-making.
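
Source attribution can be illustrated by labelling each retrieved chunk before it goes into the prompt and returning the same labels for display. The sketch below is a hypothetical helper, not the project's code: it assumes retrieval returns `(score, namespace, chunk)` tuples ordered best first, and the prompt wording is an illustrative choice.

```python
def build_prompt_with_sources(question, hits):
    """Assemble a grounded prompt plus the list of sources to show the user.

    `hits` is assumed to be (score, namespace, chunk) tuples ordered best first,
    as produced by a retrieval step.
    """
    context_lines = []
    sources = []
    for i, (score, namespace, chunk) in enumerate(hits, start=1):
        label = f"[{i}]"
        context_lines.append(f"{label} ({namespace}) {chunk}")
        # Keep a short excerpt so the UI can display where each answer came from.
        sources.append({"label": label, "namespace": namespace,
                        "score": round(score, 3), "excerpt": chunk[:80]})
    prompt = (
        "Answer the question using only the numbered context below, "
        "and cite the labels of the passages you rely on.\n\n"
        + "\n".join(context_lines)
        + f"\n\nQuestion: {question}"
    )
    return prompt, sources


# Usage with hypothetical retrieval results from two namespaces.
hits = [
    (0.82, "report-2023", "Revenue grew 15 percent in 2023."),
    (0.41, "report-2022", "Revenue grew 10 percent in 2022."),
]
prompt, sources = build_prompt_with_sources("How did revenue change?", hits)
print(prompt)
for source in sources:
    print(source["label"], source["namespace"], source["score"])
```

Because the labels in the prompt and in `sources` come from the same loop, any label the model cites in its answer can be mapped back to a specific document and excerpt.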

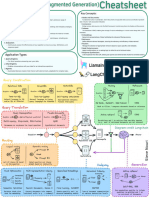

Flowchart

Implementation

• Data Preparation: Load the PDF documents, convert them to text, split the text into chunks, and assign each document a namespace (year + document identifier).

• Vector Storage: Use a vector database service such as Pinecone to store the embeddings, organized by namespace.

• Query Handling: Extract years from the query, map them to namespaces, retrieve the relevant chunks, and generate responses using GPT-4.
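
The year-to-namespace mapping in the Query Handling step might look like the following sketch. It assumes a hypothetical naming scheme of `<year>-<document identifier>` and that years are four-digit numbers between 1900 and 2099; both `make_namespace` and `namespaces_for_query` are illustrative names, not part of any library.

```python
import re

# Whole-word four-digit years from 1900 to 2099 (an assumption about the documents).
YEAR_PATTERN = re.compile(r"\b(?:19|20)\d{2}\b")


def make_namespace(year, doc_id):
    """Hypothetical naming scheme: '<year>-<document identifier>'."""
    return f"{year}-{doc_id}"


def namespaces_for_query(query, available):
    """Map years mentioned in `query` to existing namespaces.

    Namespaces are returned in the order their years first appear in the query;
    years with no matching namespace are skipped.
    """
    years = []
    for year in YEAR_PATTERN.findall(query):
        if year not in years:
            years.append(year)
    selected = []
    for year in years:
        selected.extend(ns for ns in available if ns.startswith(f"{year}-"))
    return selected


available = [make_namespace(y, "annual-report") for y in (2021, 2022, 2023)]
print(namespaces_for_query("Compare profits in 2023 and 2021", available))
# prints ['2023-annual-report', '2021-annual-report']
```

An empty result means the query mentioned no known year; a reasonable fallback in that case would be to search a default namespace or all of them, though the slides do not say which approach the project takes.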

Future Scope

1. Advanced Image Extraction:

Apply image processing techniques such as OCR to extract text and relevant information from images, so users can query and interact with both textual and visual content.

2. Visual Data Analytics:

Develop visual data analytics tools, such as interactive visualizations and data exploration features, so users can derive insights from visual content.

3. Generative Image Query:

Integrate generative image models such as DALL-E to generate images from user queries, allowing visually rich and diverse results.

Conclusion

Our project simplifies document exploration by combining generative AI with retrieval over the user's own documents. With a focus on user experience and customization, it offers intuitive features that support informed decision-making. This approach helps users work more productively, understand material more deeply, and act with confidence.

