2 Probabilistic Robot

City College of New York

Dr. Jizhong Xiao

Department of Electrical Engineering

City College of New York

jxiao@ccny.cuny.edu

Probabilistic Robotics

Advanced Mobile Robotics

Robot Navigation

Fundamental problems in providing a mobile robot with autonomous capabilities:

Where am I going? (Mission Planning)

What's the best way there? (Path Planning)

Where have I been? How to create an environmental map with imperfect sensors? (Mapping)

Where am I? How can a robot tell where it is on a map? (Localization)

What if you're lost and don't have a map? (Robot SLAM)

Representation of the Environment

Environment Representation

Continuous Metric

x, y, θ

Discrete Metric

metric grid

Discrete Topological

topological grid

Localization, Where am I?

Odometry, Dead Reckoning

Localization based on external sensors, beacons or landmarks

Probabilistic Map Based Localization

[Figure: map-based localization loop. Encoder -> Prediction of Position (e.g. odometry) -> predicted position -> Matching (against the map database and the Observation from Perception: raw sensor data or extracted features) -> matched observations -> Position Update (Estimation) -> position.]

Localization Methods

Mathematical Background, Bayes Filters

Markov Localization:

Central idea: represent the robot's belief by a probability distribution over possible positions, and use Bayes' rule and convolution to update the belief whenever the robot senses or moves

Markov Assumption: past and future data are independent if one knows the current state

Kalman Filtering

Central idea: pose the localization problem as a sensor fusion problem

Assumption: Gaussian distribution function

Particle Filtering

Central idea: Sample-based, nonparametric Filter

Monte-Carlo method

SLAM (simultaneous localization and mapping)

Multi-robot localization

Markov Localization

Applying probability theory to robot localization

Markov localization uses an explicit, discrete representation for the probability of all positions in the state space.

This is usually done by representing the environment by a grid or a topological graph with a finite number of possible states (positions).

During each update, the probability for each state (element) of the entire space is updated.

Markov Localization Example

Assume the robot position is one-dimensional

The robot is placed somewhere in the environment but it is not told its location

The robot queries its sensors and finds out it is next to a door

Markov Localization Example

The robot moves one meter forward. To account for inherent noise in robot motion, the new belief is smoother

The robot queries its sensors and again it finds itself next to a door
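The door example can be sketched as a small grid-based filter. This is only an illustration under stated assumptions: the corridor layout `world`, the sensor probabilities `p_hit` and `p_miss`, the motion kernel, and the cyclic (wrap-around) corridor are all hypothetical choices, not values from the slides.

```python
def sense(belief, world, measurement, p_hit=0.6, p_miss=0.2):
    """Measurement update: weight each cell by the sensor likelihood, then normalize."""
    weighted = [b * (p_hit if cell == measurement else p_miss)
                for b, cell in zip(belief, world)]
    eta = 1.0 / sum(weighted)
    return [eta * w for w in weighted]


def move(belief, kernel):
    """Motion update: convolve the belief with a noisy motion kernel.

    kernel maps a step size (in cells) to its probability; the corridor is
    treated as cyclic, which is an assumption made to keep the sketch short.
    """
    n = len(belief)
    return [sum(p * belief[(i - step) % n] for step, p in kernel.items())
            for i in range(n)]


# Hypothetical corridor: 1 marks a cell next to a door, 0 a plain wall.
world = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]

# The robot is not told its location: start from a uniform belief.
belief = [1.0 / len(world)] * len(world)

belief = sense(belief, world, 1)                 # "I am next to a door"
belief = move(belief, {0: 0.1, 1: 0.8, 2: 0.1})  # noisy move of about one cell
belief = sense(belief, world, 1)                 # "I am next to a door again"

best_cell = max(range(len(belief)), key=belief.__getitem__)
print(best_cell, [round(b, 3) for b in belief])
```

In this layout only a start at cell 0 explains seeing a door, moving one cell, and seeing a door again, so the belief concentrates on cell 1 after the second measurement.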

Probabilistic Robotics

Falls in between model-based and behavior-based techniques

There are models, and sensor measurements, but they are assumed to be incomplete and insufficient for control

Statistics provides the mathematical glue to integrate models and sensor measurements

Basic Mathematics

Probabilities

Bayes rule

Bayes filters

The next slides are provided by the authors of the book "Probabilistic Robotics"; you can download them from the website:

http://www.probabilistic-robotics.org/

Probabilistic Robotics

Mathematical Background

Probabilities

Bayes rule

Bayes filters

Probabilistic Robotics

Key idea:

Explicit representation of uncertainty using

the calculus of probability theory

Perception = state estimation

Action = utility optimization

Pr(A) denotes probability that proposition A is true.

Axioms of Probability Theory

$$0 \le \Pr(A) \le 1$$

$$\Pr(\mathit{True}) = 1, \qquad \Pr(\mathit{False}) = 0$$

$$\Pr(A \lor B) = \Pr(A) + \Pr(B) - \Pr(A \land B)$$

A Closer Look at Axiom 3

[Figure: Venn diagram showing the regions A, B, and their overlap A ∧ B inside the universe True.]

$$\Pr(A \lor B) = \Pr(A) + \Pr(B) - \Pr(A \land B)$$

Using the Axioms

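$$
\begin{aligned}
\Pr(A \lor \lnot A) &= \Pr(A) + \Pr(\lnot A) - \Pr(A \land \lnot A) \\
\Pr(\mathit{True}) &= \Pr(A) + \Pr(\lnot A) - \Pr(\mathit{False}) \\
1 &= \Pr(A) + \Pr(\lnot A) - 0 \\
\Pr(\lnot A) &= 1 - \Pr(A)
\end{aligned}
$$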

Discrete Random Variables

X denotes a random variable.

X can take on a countable number of values in $\{x_1, x_2, \ldots, x_n\}$.

$P(X = x_i)$, or $P(x_i)$, is the probability that the random variable X takes on value $x_i$.

$P(\cdot)$ is called the probability mass function.

E.g. $P(\mathit{Room}) = \langle 0.7, 0.2, 0.08, 0.02 \rangle$

Continuous Random Variables

X takes on values in the continuum.

p(X=x), or p(x), is a probability density function.

$$\Pr(x \in (a, b)) = \int_a^b p(x)\,dx$$

E.g. [Figure: plot of a density p(x) over x.]

Joint and Conditional Probability

P(X=x and Y=y) = P(x,y)

If X and Y are independent then

P(x,y) = P(x) P(y)

P(x | y) is the probability of x given y

P(x | y) = P(x,y) / P(y)

P(x,y) = P(x | y) P(y)

If X and Y are independent then

P(x | y) = P(x)

Law of Total Probability, Marginals

Discrete case

$$\sum_x P(x) = 1$$

$$P(x) = \sum_y P(x, y)$$

$$P(x) = \sum_y P(x \mid y)\,P(y)$$

Continuous case

$$\int p(x)\,dx = 1$$

$$p(x) = \int p(x, y)\,dy$$

$$p(x) = \int p(x \mid y)\,p(y)\,dy$$

Bayes Formula

$$P(x, y) = P(x \mid y)\,P(y) = P(y \mid x)\,P(x)$$

$$\Rightarrow \quad P(x \mid y) = \frac{P(y \mid x)\,P(x)}{P(y)} = \frac{\text{likelihood} \cdot \text{prior}}{\text{evidence}}$$

If y is a new sensor reading:

$p(x)$: Prior probability distribution

$p(x \mid y)$: Posterior probability distribution

$p(y \mid x)$: Generative model, characteristics of the sensor

$p(y)$: Does not depend on x

Normalization

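$$P(x \mid y) = \frac{P(y \mid x)\,P(x)}{P(y)} = \eta\, P(y \mid x)\,P(x)$$

$$\eta = P(y)^{-1} = \frac{1}{\sum_x P(y \mid x)\,P(x)}$$

Algorithm:

$$\forall x: \ \mathrm{aux}_{x \mid y} = P(y \mid x)\,P(x)$$

$$\eta = \frac{1}{\sum_x \mathrm{aux}_{x \mid y}}$$

$$\forall x: \ P(x \mid y) = \eta\, \mathrm{aux}_{x \mid y}$$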
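The normalization algorithm can be sketched in a few lines. This is a minimal illustration, assuming a discrete state space stored in dictionaries; the state names `x1`, `x2`, `x3` and all numbers are hypothetical.

```python
def normalize_posterior(prior, likelihood):
    """Compute P(x | y) from P(x) and P(y | x) without evaluating P(y) separately."""
    # Step 1: aux_{x|y} = P(y | x) * P(x) for every state x.
    aux = {x: likelihood[x] * prior[x] for x in prior}
    # Step 2: eta = 1 / sum_x aux_{x|y}.
    eta = 1.0 / sum(aux.values())
    # Step 3: P(x | y) = eta * aux_{x|y}.
    return {x: eta * a for x, a in aux.items()}


# Hypothetical prior P(x) and sensor likelihood P(y | x) for one reading y.
prior = {"x1": 0.5, "x2": 0.3, "x3": 0.2}
likelihood = {"x1": 0.1, "x2": 0.4, "x3": 0.8}

posterior = normalize_posterior(prior, likelihood)
print(posterior)
```

The evidence P(y) never has to be modeled on its own: it is recovered as the sum of the unnormalized terms.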

Bayes Rule with Background Knowledge

$$P(x \mid y, z) = \frac{P(y \mid x, z)\,P(x \mid z)}{P(y \mid z)}$$

Conditioning

Total probability:

$$P(x) = \int P(x, z)\,dz$$

$$P(x) = \int P(x \mid z)\,P(z)\,dz$$

$$P(x \mid y) = \int P(x \mid y, z)\,P(z \mid y)\,dz$$

Conditional Independence

$$P(x, y \mid z) = P(x \mid z)\,P(y \mid z)$$

equivalent to

$$P(x \mid z) = P(x \mid z, y)$$

and

$$P(y \mid z) = P(y \mid z, x)$$

Simple Example of State Estimation

Suppose a robot obtains measurement z

What is P(open|z)?

Causal vs. Diagnostic Reasoning

P(open|z) is diagnostic.

P(z|open) is causal.

Often causal knowledge is easier to obtain (count frequencies!).

Bayes rule allows us to use causal knowledge:

$$P(\mathit{open} \mid z) = \frac{P(z \mid \mathit{open})\,P(\mathit{open})}{P(z)}$$

Example

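$P(z \mid \mathit{open}) = 0.6 \qquad P(z \mid \lnot \mathit{open}) = 0.3$

$P(\mathit{open}) = P(\lnot \mathit{open}) = 0.5$

$$P(\mathit{open} \mid z) = \frac{P(z \mid \mathit{open})\,P(\mathit{open})}{P(z \mid \mathit{open})\,P(\mathit{open}) + P(z \mid \lnot \mathit{open})\,P(\lnot \mathit{open})}$$

$$P(\mathit{open} \mid z) = \frac{0.6 \cdot 0.5}{0.6 \cdot 0.5 + 0.3 \cdot 0.5} = \frac{2}{3} \approx 0.67$$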

z raises the probability that the door is open.
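The arithmetic of this example can be checked with a short sketch. The function name `posterior_open` is a hypothetical helper, and exact fractions are used only to avoid rounding; the probabilities are the ones given on the slide.

```python
from fractions import Fraction


def posterior_open(p_z_given_open, p_z_given_not_open, p_open):
    """Bayes rule for a binary state: returns P(open | z)."""
    numerator = p_z_given_open * p_open
    # Evidence P(z) via the law of total probability over {open, not open}.
    evidence = numerator + p_z_given_not_open * (1 - p_open)
    return numerator / evidence


p_open_given_z = posterior_open(Fraction(6, 10), Fraction(3, 10), Fraction(1, 2))
print(p_open_given_z, float(p_open_given_z))
```

The result is 2/3, above the prior of 1/2, which matches the statement that z raises the probability of an open door.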

Combining Evidence

Suppose our robot obtains another observation $z_2$.

How can we integrate this new information?

More generally, how can we estimate $P(x \mid z_1, \ldots, z_n)$?

Recursive Bayesian Updating

$$P(x \mid z_1, \ldots, z_n) = \frac{P(z_n \mid x, z_1, \ldots, z_{n-1})\,P(x \mid z_1, \ldots, z_{n-1})}{P(z_n \mid z_1, \ldots, z_{n-1})}$$

Markov assumption: $z_n$ is independent of $z_1, \ldots, z_{n-1}$ if we know x.

$$
\begin{aligned}
P(x \mid z_1, \ldots, z_n) &= \frac{P(z_n \mid x)\,P(x \mid z_1, \ldots, z_{n-1})}{P(z_n \mid z_1, \ldots, z_{n-1})} \\
&= \eta\, P(z_n \mid x)\,P(x \mid z_1, \ldots, z_{n-1}) \\
&= \eta_{1 \ldots n} \left[ \prod_{i=1 \ldots n} P(z_i \mid x) \right] P(x)
\end{aligned}
$$

Example: Second Measurement

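$P(z_2 \mid \mathit{open}) = 0.5 \qquad P(z_2 \mid \lnot \mathit{open}) = 0.6$

$P(\mathit{open} \mid z_1) = 2/3$

$$P(\mathit{open} \mid z_2, z_1) = \frac{P(z_2 \mid \mathit{open})\,P(\mathit{open} \mid z_1)}{P(z_2 \mid \mathit{open})\,P(\mathit{open} \mid z_1) + P(z_2 \mid \lnot \mathit{open})\,P(\lnot \mathit{open} \mid z_1)}$$

$$P(\mathit{open} \mid z_2, z_1) = \frac{\frac{1}{2} \cdot \frac{2}{3}}{\frac{1}{2} \cdot \frac{2}{3} + \frac{3}{5} \cdot \frac{1}{3}} = \frac{5}{8} = 0.625$$

$z_2$ lowers the probability that the door is open.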
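Recursive Bayesian updating amounts to feeding each posterior back in as the next prior. The sketch below is one possible way to write that loop for the binary door state; the function name `recursive_update` and the list-of-pairs input format are assumptions made for illustration, while the numbers are the ones from the two measurement examples.

```python
from fractions import Fraction


def recursive_update(prior_open, measurements):
    """Fold a sequence of measurements into P(open), one Bayes update at a time.

    Each measurement is a pair (P(z_i | open), P(z_i | not open)). Under the
    Markov assumption the previous posterior is all that must be remembered.
    """
    belief = prior_open
    for p_z_open, p_z_not_open in measurements:
        numerator = p_z_open * belief
        belief = numerator / (numerator + p_z_not_open * (1 - belief))
    return belief


measurements = [
    (Fraction(6, 10), Fraction(3, 10)),  # z_1
    (Fraction(5, 10), Fraction(6, 10)),  # z_2
]
p_open = recursive_update(Fraction(1, 2), measurements)
print(p_open, float(p_open))
```

After both measurements the belief is 5/8, down from 2/3 after the first measurement alone.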

Actions

Often the world is dynamic since

actions carried out by the robot,

actions carried out by other agents,

or just the time passing by

change the world.

How can we incorporate such actions?

Typical Actions

The robot turns its wheels to move

The robot uses its manipulator to grasp an

object

Plants grow over time

Actions are never carried out with absolute

certainty.

In contrast to measurements, actions generally

increase the uncertainty.

Modeling Actions

To incorporate the outcome of an action u into the current belief, we use the conditional pdf

$P(x \mid u, x')$

This term specifies the pdf that executing u changes the state from x' to x.

Integrating the Outcome of Actions

Continuous case:

$$P(x \mid u) = \int P(x \mid u, x')\,P(x')\,dx'$$

Discrete case:

$$P(x \mid u) = \sum_{x'} P(x \mid u, x')\,P(x')$$

Example: Closing the door

State Transitions

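$P(x \mid u, x')$ for u = "close door":

If the door is open, the action "close door" succeeds in 90% of all cases.

[Figure: state transition diagram. open -> closed: 0.9; open -> open: 0.1; closed -> closed: 1; closed -> open: 0.]

P(open) = 5/8, P(closed) = 3/8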

Example: The Resulting Belief

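$$
\begin{aligned}
P(\mathit{closed} \mid u) &= \sum_{x'} P(\mathit{closed} \mid u, x')\,P(x') \\
&= P(\mathit{closed} \mid u, \mathit{open})\,P(\mathit{open}) + P(\mathit{closed} \mid u, \mathit{closed})\,P(\mathit{closed}) \\
&= \frac{9}{10} \cdot \frac{5}{8} + \frac{1}{1} \cdot \frac{3}{8} = \frac{15}{16}
\end{aligned}
$$

$$
\begin{aligned}
P(\mathit{open} \mid u) &= \sum_{x'} P(\mathit{open} \mid u, x')\,P(x') \\
&= P(\mathit{open} \mid u, \mathit{open})\,P(\mathit{open}) + P(\mathit{open} \mid u, \mathit{closed})\,P(\mathit{closed}) \\
&= \frac{1}{10} \cdot \frac{5}{8} + \frac{0}{1} \cdot \frac{3}{8} = \frac{1}{16} \\
&= 1 - P(\mathit{closed} \mid u)
\end{aligned}
$$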
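The discrete action update for the "close door" example can be reproduced with a short sketch. The nested-dictionary layout of the transition model and the function name `predict` are assumptions made for illustration; the probabilities come from the state transition slide.

```python
from fractions import Fraction


def predict(belief, transition):
    """Discrete action update: P(x | u) = sum over x' of P(x | u, x') * P(x').

    transition[x_prev][x_next] holds P(x_next | u, x_prev) for one fixed action u.
    """
    return {x: sum(transition[x_prev][x] * belief[x_prev] for x_prev in belief)
            for x in belief}


# Action model for u = "close door".
close_door = {
    "open": {"open": Fraction(1, 10), "closed": Fraction(9, 10)},
    "closed": {"open": Fraction(0), "closed": Fraction(1)},
}

belief = {"open": Fraction(5, 8), "closed": Fraction(3, 8)}
belief_after = predict(belief, close_door)
print(belief_after)
```

The result is P(open | u) = 1/16 and P(closed | u) = 15/16, and the two values still sum to one without any explicit normalization because each row of the transition model sums to one.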

Bayes Filters: Framework

Given:

Stream of observations z and action data u:

$$d_t = \{u_1, z_1, \ldots, u_t, z_t\}$$

Sensor model $P(z \mid x)$.

Action model $P(x \mid u, x')$.

Prior probability of the system state $P(x)$.

Wanted:

Estimate of the state X of a dynamical system.

The posterior of the state is also called Belief:

$$Bel(x_t) = P(x_t \mid u_1, z_1, \ldots, u_t, z_t)$$

Markov Assumption

Markov Assumption: past and future data are independent if one knows the current state

Measurement probability:

$$p(z_t \mid x_{0:t}, z_{1:t-1}, u_{1:t}) = p(z_t \mid x_t)$$

State transition probability:

$$p(x_t \mid x_{1:t-1}, z_{1:t-1}, u_{1:t}) = p(x_t \mid x_{t-1}, u_t)$$

Underlying Assumptions

Static world, Independent noise

Perfect model, no approximation errors

Bayes Filters

z = observation

u = action

x = state

$$
\begin{aligned}
Bel(x_t) &= P(x_t \mid u_1, z_1, \ldots, u_t, z_t) \\
&= \eta\, P(z_t \mid x_t, u_1, z_1, \ldots, u_t)\, P(x_t \mid u_1, z_1, \ldots, u_t) && \text{(Bayes)} \\
&= \eta\, P(z_t \mid x_t)\, P(x_t \mid u_1, z_1, \ldots, u_t) && \text{(Markov)} \\
&= \eta\, P(z_t \mid x_t) \int P(x_t \mid u_1, z_1, \ldots, u_t, x_{t-1})\, P(x_{t-1} \mid u_1, z_1, \ldots, u_t)\, dx_{t-1} && \text{(Total prob.)} \\
&= \eta\, P(z_t \mid x_t) \int P(x_t \mid u_t, x_{t-1})\, P(x_{t-1} \mid u_1, z_1, \ldots, u_t)\, dx_{t-1} && \text{(Markov)} \\
&= \eta\, P(z_t \mid x_t) \int P(x_t \mid u_t, x_{t-1})\, P(x_{t-1} \mid u_1, z_1, \ldots, z_{t-1})\, dx_{t-1} && \text{(Markov)} \\
&= \eta\, P(z_t \mid x_t) \int P(x_t \mid u_t, x_{t-1})\, Bel(x_{t-1})\, dx_{t-1}
\end{aligned}
$$

Bayes Filter Algorithm

$$Bel(x_t) = \eta\, P(z_t \mid x_t) \int P(x_t \mid u_t, x_{t-1})\, Bel(x_{t-1})\, dx_{t-1}$$

Algorithm Bayes_filter( Bel(x), d ):

    η = 0

    If d is a perceptual data item z then

        For all x do

            Bel'(x) = P(z | x) Bel(x)

            η = η + Bel'(x)

        For all x do

            Bel'(x) = η⁻¹ Bel'(x)

    Else if d is an action data item u then

        For all x do

            Bel'(x) = ∫ P(x | u, x') Bel(x') dx'

    Return Bel'(x)
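A discrete version of the algorithm can be sketched as follows. This is only an illustration under stated assumptions: the state space is finite (so the integral becomes a sum), a data item is encoded as a hypothetical pair `("z", name)` or `("u", name)`, and the models are plain nested dictionaries. The numbers reuse the door example.

```python
def bayes_filter(belief, d, sensor_model, action_model):
    """One step of a discrete Bayes filter.

    belief:       dict state -> Bel(x)
    d:            ("z", name) for a perceptual item, ("u", name) for an action item
    sensor_model: sensor_model[z][x] = P(z | x)
    action_model: action_model[u][x_prev][x] = P(x | u, x_prev)
    """
    kind, name = d
    if kind == "z":
        # Measurement update: Bel'(x) = P(z | x) Bel(x), then normalize by eta.
        new_belief = {x: sensor_model[name][x] * b for x, b in belief.items()}
        eta = sum(new_belief.values())
        return {x: b / eta for x, b in new_belief.items()}
    if kind == "u":
        # Action update: Bel'(x) = sum over x' of P(x | u, x') Bel(x').
        return {x: sum(action_model[name][x_prev][x] * belief[x_prev]
                       for x_prev in belief)
                for x in belief}
    raise ValueError("d must be a ('z', name) or ('u', name) pair")


# Door example; "closed" plays the role of "not open".
sensor_model = {
    "z1": {"open": 0.6, "closed": 0.3},
    "z2": {"open": 0.5, "closed": 0.6},
}
action_model = {
    "close": {"open": {"open": 0.1, "closed": 0.9},
              "closed": {"open": 0.0, "closed": 1.0}},
}

belief = {"open": 0.5, "closed": 0.5}
for d in [("z", "z1"), ("z", "z2"), ("u", "close")]:
    belief = bayes_filter(belief, d, sensor_model, action_model)
print(belief)
```

Running the two measurements and then the "close" action reproduces the earlier hand calculations: the belief passes through P(open) = 2/3 and 5/8 and ends at P(open) = 1/16.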

Bayes Filters are Familiar!

Kalman filters

Particle filters

Hidden Markov models

Dynamic Bayesian networks

Partially Observable Markov Decision Processes

(POMDPs)

$$Bel(x_t) = \eta\, P(z_t \mid x_t) \int P(x_t \mid u_t, x_{t-1})\, Bel(x_{t-1})\, dx_{t-1}$$

Summary

Bayes rule allows us to compute

probabilities that are hard to assess

otherwise.

Under the Markov assumption, recursive

Bayesian updating can be used to

efficiently combine evidence.

Bayes filters are a probabilistic tool for

estimating the state of dynamic systems.

Thank You

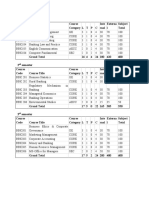

Homework 3: Exercises 1, 2 and 3 on pp. 36-37 of the textbook. Due date: March 5

Next class: Feb 21, 2012

Homework 2: Due date: Feb 27
