Available online at www.ijcae.org

IJCAE, Vol. 4 Issue 3, September 2013, 365-373

ISSN NO: 0988-0382E

RESEARCH ARTICLE

OPTIMUM VSSLMS ALGORITHM FOR ACOUSTIC ECHO CANCELLATION

Mallikarjun Talwar

Department of Instrumentation Technology, BKIT Bhalki: 585328. KARNATAKA, INDIA

Mallikarjun Sarsamba

Department of Electronics and Communication, BKIT Bhalki: 585328. KARNATAKA

Prashant Sangulagi

Department of Electronics and Communication, BKIT Bhalki: 585328. KARNATAKA

Raj Reddy

Department of Mechanical Engineering, BKIT Bhalki: 585328. KARNATAKA, INDIA

ABSTRACT

Acoustic echo is a common occurrence in today's telecommunication systems. It occurs when an audio source and sink operate in full duplex mode; an example of this is a hands-free loudspeaker telephone. In this situation the received signal is output through the telephone loudspeaker (audio source); this audio signal is then reverberated through the physical environment and picked up by the system's microphone (audio sink). The effect is the return to the distant user of time-delayed and attenuated images of their original speech signal. The signal interference caused by acoustic echo is distracting to both users and causes a reduction in the quality of the communication. This paper focuses on the use of the OPTIMUM VSSLMS algorithm, which shows better performance compared to the LMS and NLMS algorithms.

Keywords: Adaptive filters, LMS algorithm, NLMS algorithm, Acoustic echo cancellation.

Mallikarjun Talwar et. al / International Journal of Communications And Engineering Vol. 4 Issue 3, Sept. 2013

I. INTRODUCTION

Acoustic echo occurs when an audio signal is reverberated in a real environment, resulting in the original intended signal plus attenuated, time-delayed images of this signal. This paper focuses on the occurrence of acoustic echo in telecommunication systems. Such a system consists of coupled acoustic input and output devices, both of which are active concurrently. An example of this is a hands-free telephone system. In this scenario the system has both an active loudspeaker and microphone input operating simultaneously. The system then acts as both a receiver and transmitter in full duplex mode. When a signal is received by the system, it is output through the loudspeaker into an acoustic environment. This signal is reverberated within the environment and returned to the system via the microphone input. These reverberated signals contain time-delayed images of the original signal, which are then returned to the original sender (Figure 1.1 shows the attenuation and time delay of each echo path). The occurrence of acoustic echo in speech transmission causes signal interference and reduced quality of communication. The method used to cancel the echo signal is known as adaptive filtering.

Adaptive filters are dynamic filters which iteratively alter their characteristics in order to achieve an optimal desired output. An adaptive filter algorithmically alters its parameters in order to minimize a function of the difference between the desired output d(n) and its actual output y(n). This function is known as the cost function of the adaptive algorithm. Figure 1.2 shows a block diagram of the adaptive echo cancellation system implemented throughout this paper.

Fig 1.1: Origin of acoustic echo

In Figure 1.2, one filter represents the impulse response of the acoustic environment and the other is the adaptive filter used to cancel the echo signal. The adaptive filter aims to equate its output y(n) to the desired output d(n) (the signal reverberated within the acoustic environment). At each iteration the error signal, e(n) = d(n) - y(n), is fed back into the filter, where the filter characteristics are altered accordingly [2,3].

Fig 1.2: Block diagram of an adaptive echo cancellation system

The aim of an adaptive filter is to calculate the difference between the desired signal and the adaptive filter output, e(n). This error signal is fed back into the adaptive filter and its coefficients are changed algorithmically in order to minimize a function of this difference, known as the cost function. In the case of acoustic echo cancellation, the optimal output of the adaptive filter is equal in value to the unwanted echoed signal. When the adaptive filter output is equal to the desired signal, the error signal goes to zero. In this situation the echoed signal would be completely cancelled and the far user would not hear any of their original speech returned to them.
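The echo model described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `simulate_echo`, the delay and attenuation values in `taps`, and the random far-end signal are all hypothetical and are not taken from the paper.

```python
import random

def simulate_echo(far_end, taps):
    """Return the echo as a sum of attenuated, time-delayed copies of far_end.

    taps maps a delay (in samples) to an attenuation factor (assumed values).
    """
    echo = [0.0] * len(far_end)
    for delay, gain in taps.items():
        for n in range(delay, len(far_end)):
            echo[n] += gain * far_end[n - delay]
    return echo

random.seed(0)
far_end = [random.uniform(-1.0, 1.0) for _ in range(200)]
taps = {5: 0.6, 12: 0.3, 30: 0.1}   # hypothetical echo paths: delay -> attenuation
d = simulate_echo(far_end, taps)     # desired signal d(n) picked up by the microphone

# If the adaptive filter output y(n) equals d(n), the error e(n) = d(n) - y(n) is zero.
y_perfect = simulate_echo(far_end, taps)
e = [dn - yn for dn, yn in zip(d, y_perfect)]
print(max(abs(v) for v in e))
```

A real canceller does not know `taps` in advance; it has to learn an equivalent filter from the error signal, which is the task of the adaptive algorithms in the following sections.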

II. LMS ALGORITHM

The least-mean-square (LMS) algorithm, introduced by Widrow [1,2], is widely used in adaptive signal processing for its simplicity, low computational cost and ease of hardware implementation. The LMS algorithm is described by the equations:

$w(k+1) = w(k) + \mu(k)\, e(k)\, x(k)$ ...(2.1)

$e(k) = d(k) - w^{T}(k)\, x(k)$ ...(2.2)

where $w(k)$ is the coefficient vector at time k, and $\mu(k)$, $e(k)$ and $x(k)$ are the step size, adaptation error and input vector, respectively, at time k. From equation (2.1), it can be seen that the LMS algorithm uses the adaptation error and the step size to update the adaptive filter coefficients. In the conventional LMS algorithm the step size is held constant, $\mu(k) = \mu$. The step size is of great importance to the convergence and stability of the algorithm. The stability margin of the LMS algorithm is

$0 < \mu < \frac{2}{\mathrm{tr}(R)} \le \frac{2}{\lambda_{\max}}$

where tr(R) denotes the trace of R, R is the autocorrelation matrix of x, and $\lambda_{\max}$ is the maximum eigenvalue of R.
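As a rough illustration of the LMS recursion, the sketch below identifies a short FIR echo path with a fixed step size. It assumes the update form w(k+1) = w(k) + mu e(k) x(k); the impulse response `h`, the white Gaussian input and the value mu = 0.05 are hypothetical choices picked to sit well inside the stable range for this input, not values from the paper.

```python
import random

def lms(x, d, num_taps, mu):
    """Fixed-step LMS, assuming the update w(k+1) = w(k) + mu * e(k) * x(k)."""
    w = [0.0] * num_taps
    buf = [0.0] * num_taps            # input vector x(k) = [x[k], x[k-1], ...]
    errors = []
    for xk, dk in zip(x, d):
        buf = [xk] + buf[:-1]         # shift the newest sample into the delay line
        y = sum(wi * xi for wi, xi in zip(w, buf))            # filter output y(k)
        e = dk - y                                            # adaptation error e(k)
        w = [wi + mu * e * xi for wi, xi in zip(w, buf)]      # coefficient update
        errors.append(e)
    return w, errors

random.seed(1)
h = [0.5, -0.3, 0.2, 0.1]             # hypothetical echo-path impulse response
x = [random.gauss(0.0, 1.0) for _ in range(5000)]
d = [sum(h[i] * x[n - i] for i in range(len(h)) if n - i >= 0)
     for n in range(len(x))]

w, errors = lms(x, d, num_taps=len(h), mu=0.05)
print([round(wi, 4) for wi in w])
```

In this noise-free setup the estimated coefficients should approach `h`. With real speech the input power varies over time, which is why a single fixed `mu` is hard to choose.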

III. NLMS ALGORITHM

One of the primary disadvantages of the LMS algorithm is having a fixed step size parameter for every

iteration. This requires an understanding of the statistics of the input signal prior to commencing the adaptive

filtering operation. In practice this is rarely achievable. Even if we assume the only signal to be input to the

adaptive echo cancellation system is speech, there are still many factors such as signal input power and

amplitude which will affect its performance[3].

The normalized least mean square (NLMS) algorithm is an extension of the LMS algorithm which bypasses this issue by selecting a different step size value, $\mu(n)$, for each iteration of the algorithm. This step size is proportional to the inverse of the total expected energy of the instantaneous values of the coefficients of the input vector x(n). This sum of the expected energies of the input samples is also equivalent to the dot product of the input vector with itself, and to the trace of the input vector's autocorrelation matrix, R. The normalized LMS algorithm utilizes a variable convergence factor that minimizes the instantaneous error. Such a convergence factor usually reduces the convergence time but increases the misadjustment. The updating equation of the LMS algorithm can employ a variable convergence factor $\mu_k$ in order to improve the convergence rate. In this case, the updating formula is expressed as

$w(k+1) = w(k) + \mu_k\, e(k)\, x(k)$

where $\mu_k$ must be chosen with the objective of achieving a faster convergence. The value of $\mu_k$ is given by

$\mu_k = \frac{1}{x^{T}(k)\, x(k)}$
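The normalization can be sketched as follows, assuming the update w(k+1) = w(k) + mu_k e(k) x(k) with mu_k inversely proportional to x^T(k) x(k). The relaxation factor `mu_n` and the small regularizer `eps` (which avoids division by zero for silent input) are common practical additions and are assumptions here, as are the test signal and the echo path `h`.

```python
import random

def nlms(x, d, num_taps, mu_n=1.0, eps=1e-8):
    """NLMS sketch: the step size is normalized by the input energy x^T(k) x(k)."""
    w = [0.0] * num_taps
    buf = [0.0] * num_taps
    errors = []
    for xk, dk in zip(x, d):
        buf = [xk] + buf[:-1]
        e = dk - sum(wi * xi for wi, xi in zip(w, buf))
        energy = sum(xi * xi for xi in buf)      # dot product of x(k) with itself
        step = mu_n / (eps + energy)             # variable convergence factor mu_k
        w = [wi + step * e * xi for wi, xi in zip(w, buf)]
        errors.append(e)
    return w, errors

random.seed(3)
h = [0.5, -0.3, 0.2, 0.1]                        # hypothetical echo path
results = {}
for scale in (0.1, 10.0):                        # very quiet and very loud input
    x = [scale * random.gauss(0.0, 1.0) for _ in range(2000)]
    d = [sum(h[i] * x[n - i] for i in range(len(h)) if n - i >= 0)
         for n in range(len(x))]
    results[scale], _ = nlms(x, d, num_taps=len(h))
    print(scale, [round(wi, 4) for wi in results[scale]])
```

Both runs use the same `mu_n` even though the input power differs by a factor of 10^4, which illustrates why no prior knowledge of the input statistics is needed to set the step size.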

IV. OPTIMUM VSSLMS ALGORITHM

A number of time-varying step-size algorithms have been proposed to enhance the performance of the conventional LMS algorithm. Experimentation with these algorithms indicates that their performance is highly sensitive to the noise disturbance. The present work discusses a new variable step-size LMS-type algorithm providing fast convergence at early stages of adaptation while ensuring small final misadjustment. The performance of the algorithm is not affected by existing uncorrelated noise disturbances. Simulation results comparing the proposed algorithm to current variable step-size algorithms clearly indicate its superior performance.

Since its introduction, the LMS algorithm has been the focus of much study due to its simplicity and robustness, leading to its implementation in many applications. It is well known that the final excess mean square error (MSE) is directly proportional to the adaptation step size of the LMS, while the convergence time increases as the step size decreases. This inherent limitation of the LMS necessitates a compromise between the opposing fundamental requirements of fast convergence rate and small misadjustment demanded in most adaptive filtering applications. As a result, researchers have constantly looked for alternative means to improve its performance. One popular approach is to employ a time-varying step size in the standard LMS weight update recursion. This is based on using large step-size values when the algorithm is far from the optimal solution, thus speeding up the convergence rate. When the algorithm is near the optimum, small step-size values are used to achieve a low level of misadjustment, thus achieving better overall performance. This can be obtained by adjusting the step-size value in accordance with some criterion that can provide an approximate measure of the adaptation process state.

Several criteria have been used:

o Squared instantaneous error

o Sign changes of successive samples of the gradient

o Attempting to reduce the squared error at each instant

o Cross correlation of input and error

Experimental results show that the performance of existing variable step size (VSS) algorithms is quite sensitive to the noise disturbance. Their advantageous performance over the LMS algorithm is generally attained only in a high signal-to-noise environment. This follows from noting that the criteria controlling the step-size update of these algorithms are directly obtained from the instantaneous error, which is contaminated by the disturbance noise. Since measurement noise is a reality in any practical system, the usefulness of any adaptive algorithm is judged by its performance in the presence of this noise. The performance of the VSS algorithm deteriorates in the presence of measurement noise. Hence a new VSS LMS algorithm is proposed, where the step size of the algorithm is adjusted according to the square of the time-averaged estimate of the autocorrelation of e(n) and e(n-1). As a result, the algorithm can effectively adjust the step size as in existing VSS algorithms while maintaining the immunity against independent noise disturbance. The new VSS LMS algorithm allows more flexible control of misadjustment and convergence time without the need to compromise one for the other [4].

A. Algorithm Formulation:

In the existing VSS LMS algorithm, the adaptation step size is adjusted using the energy of the instantaneous error. The weight update recursion is given by

$w(n+1) = w(n) + \mu(n)\, e(n)\, x(n)$ ...(4.1)

and the step-size update expression is

$\mu(n+1) = \alpha\, \mu(n) + \gamma\, e^{2}(n)$ ...(4.2)

where $0 < \alpha < 1$, $\gamma > 0$, and $\mu(n+1)$ is set to $\mu_{\min}$ or $\mu_{\max}$ when it falls below or above these lower and upper bounds, respectively. The constant $\mu_{\max}$ is normally selected near the point of instability of the conventional LMS to provide the maximum possible convergence speed. The value of $\mu_{\min}$ is chosen as a compromise between the desired level of steady-state misadjustment and the required tracking capabilities of the algorithm. The parameter $\gamma$ controls the convergence time as well as the level of misadjustment of the algorithm.

This algorithm has preferable performance over the fixed step-size LMS: at early stages of adaptation, the error is large, causing the step size to increase, thus providing faster convergence speed. When the error decreases, the step size decreases, thus yielding smaller misadjustment near the optimum. However, using the instantaneous error energy as a measure to sense the state of the adaptation process does not perform as well as expected in the presence of measurement noise. This can be seen from (4.3) and (4.4). The output error of the identification system is

$e(n) = d(n) - x^{T}(n)\, w(n)$ ...(4.3)

where the desired signal is given by

$d(n) = x^{T}(n)\, w^{*}(n) + \xi(n)$ ...(4.4)

Here $\xi(n)$ is a zero-mean independent disturbance, and $w^{*}(n)$ is the time-varying optimal weight vector.

Substituting (4.3) and (4.4) in the step-size recursion (4.2), we get

$\mu(n+1) = \alpha\, \mu(n) + \gamma \left[ V^{T}(n)\, x(n)\, x^{T}(n)\, V(n) + \xi^{2}(n) - 2\, \xi(n)\, V^{T}(n)\, x(n) \right]$ ...(4.5)

where $V(n) = w(n) - w^{*}(n)$ is the weight error vector. The input signal autocorrelation matrix, which is defined as $R = E\{x(n)\, x^{T}(n)\}$, can be expressed as $R = Q \Lambda Q^{T}$, where $\Lambda$ is the diagonal matrix of the eigenvalues of R and Q is the modal matrix of R. Using $V'(n) = Q^{T} V(n)$ and $x'(n) = Q^{T} x(n)$, and taking expectations,

$E\{\mu(n+1)\} = \alpha\, E\{\mu(n)\} + \gamma \left( E\{\xi^{2}(n)\} + E\{V'^{T}(n)\, \Lambda\, V'(n)\} \right)$ ...(4.6)

where the common independence assumption of V(n) and x(n) has been used. Clearly, the term $E\{V'^{T}(n)\, \Lambda\, V'(n)\}$ reflects the proximity of the adaptive system to the optimal solution, and $\mu(n+1)$ is adjusted accordingly. However, due to the presence of $E\{\xi^{2}(n)\}$, the step-size update is not an accurate reflection of the state of adaptation before or after convergence. This reduces the efficiency of the algorithm significantly. More specifically, close to the optimum, $\mu(n)$ will still be large due to the presence of the noise term $E\{\xi^{2}(n)\}$. This results in large misadjustment due to the large fluctuations around the optimum. Therefore, a different approach is proposed to control step-size adaptation. The objective is to ensure large $\mu(n)$ when the algorithm is far from the optimum, with $\mu(n)$ decreasing as we approach the optimum even in the presence of this noise. The proposed algorithm achieves this objective by using an estimate of the autocorrelation between e(n) and e(n-1) to control the step-size update. The estimate is a time average of e(n)e(n-1) that is described as

$p(n) = \beta\, p(n-1) + (1 - \beta)\, e(n)\, e(n-1)$ ...(4.7)

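The use of the time-averaged estimate $p(n)$ of (4.7) in the update of $\mu(n)$ serves two objectives. First, the error autocorrelation is generally a good measure of the proximity to the optimum. Second, it rejects the effect of the uncorrelated noise sequence on the step-size update. In the early stages of adaptation, the error autocorrelation estimate $p^{2}(n)$ is large, resulting in a large $\mu(n)$. As the algorithm approaches the optimum, the error autocorrelation approaches zero, resulting in a smaller step size. Thus, the proposed step size update is given by

$\mu(n+1) = \alpha\, \mu(n) + \gamma\, p^{2}(n)$ ...(4.8)

The positive constant $\beta$ ($0 < \beta < 1$) is an exponential weighting parameter that governs the averaging time constant, i.e., the quality of the estimation. In stationary environments, previous samples contain information that is relevant to determining an accurate measure of adaptation state, i.e., the proximity of the adaptive filter coefficients to the optimal ones. Therefore, $\beta$ should be close to 1. In nonstationary environments, the time averaging window should be small enough to allow for forgetting of the deep past and adapting to the current statistics.

The step size in (4.8) can be rewritten as

$\mu(n+1) = \alpha\, \mu(n) + \gamma \left[ E\{ V^{T}(n)\, x(n)\, x^{T}(n-1)\, V(n-1) \} \right]^{2}$ ...(4.9)

Assuming perfect estimation of the autocorrelation of e(n) and e(n-1), we note that as a result of the averaging operation, the instantaneous behaviour of the step size will be smoother. It is also clear from (4.9) that the update of $\mu(n)$ depends on how far the algorithm is from the optimum and is not affected by independent disturbance noise. Finally, the proposed algorithm involves two additional update equations, (4.7) and (4.8), compared with the standard LMS algorithm. Therefore, the added complexity is six multiplications per iteration. Compared with the VSS LMS algorithm, the proposed algorithm adds one new equation, (4.7), and its corresponding multiplications.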
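The noise argument of this section can be checked numerically with a small sketch. It assumes the two step-size rules take the forms mu(n+1) = alpha mu(n) + gamma e^2(n) and mu(n+1) = alpha mu(n) + gamma p^2(n), with p(n) = beta p(n-1) + (1 - beta) e(n) e(n-1), and it models the situation at the optimum, where the error is pure white noise. The parameter values are hypothetical and the clamping to the step-size bounds is left out so that the raw behaviour is visible.

```python
import random

def steady_state_steps(noise, alpha=0.97, gamma=1e-3, beta=0.99):
    """Run both step-size recursions on an error that is pure noise, e(n) = xi(n)."""
    mu_e2, mu_p2, p, e_prev = 0.0, 0.0, 0.0, 0.0
    trace_e2, trace_p2 = [], []
    for e in noise:
        mu_e2 = alpha * mu_e2 + gamma * e * e         # driven by instantaneous error energy
        p = beta * p + (1.0 - beta) * e * e_prev      # time-averaged error autocorrelation
        mu_p2 = alpha * mu_p2 + gamma * p * p         # driven by squared autocorrelation
        e_prev = e
        trace_e2.append(mu_e2)
        trace_p2.append(mu_p2)
    return trace_e2, trace_p2

random.seed(2)
xi = [random.gauss(0.0, 1.0) for _ in range(20000)]   # unit-variance white disturbance
trace_e2, trace_p2 = steady_state_steps(xi)

tail = 10000                                          # average over the second half only
mean_e2 = sum(trace_e2[-tail:]) / tail
mean_p2 = sum(trace_p2[-tail:]) / tail
print(mean_e2, mean_p2)
```

Under these assumptions the energy-driven step size settles near gamma times the noise power divided by (1 - alpha), while the autocorrelation-driven step size stays far smaller because successive samples of white noise are uncorrelated.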

B. Implementation of the OPTIMUM VSS LMS algorithm:

1. The output of the FIR filter, y(n), is calculated as

$y(n) = w^{T}(n)\, x(n)$

2. The value of the error estimation is calculated using the equation

$e(n) = d(n) - y(n)$

3. The step size is updated as

$\mu(n+1) = \alpha\, \mu(n) + \gamma\, p^{2}(n)$

where p(n) is

$p(n) = \beta\, p(n-1) + (1 - \beta)\, e(n)\, e(n-1)$

and

$\mu(n+1) = \begin{cases} \mu_{\max}, & \text{if } \mu(n+1) > \mu_{\max} \\ \mu_{\min}, & \text{if } \mu(n+1) < \mu_{\min} \\ \mu(n+1), & \text{otherwise} \end{cases}$

where $0 < \mu_{\min} < \mu_{\max}$.

4. The tap weights of the FIR vector are updated in preparation for the next iteration, by the equation

$w(n+1) = w(n) + \mu(n)\, e(n)\, x(n)$
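The four steps above can be sketched in Python as follows. This is a minimal illustration, not the authors' simulation code: it assumes the step-size rule mu(n+1) = alpha mu(n) + gamma p^2(n) with p(n) = beta p(n-1) + (1 - beta) e(n) e(n-1), and every numeric value (alpha, gamma, beta, the step-size bounds, the echo path `h` and the noise level) is a hypothetical choice.

```python
import random

def optimum_vss_lms(x, d, num_taps, alpha=0.99, gamma=0.05, beta=0.99,
                    mu_min=1e-4, mu_max=0.1):
    """Variable step-size LMS driven by the time-averaged error autocorrelation."""
    w = [0.0] * num_taps
    buf = [0.0] * num_taps
    mu, p, e_prev = mu_max, 0.0, 0.0      # start with the largest allowed step size
    errors, steps = [], []
    for xn, dn in zip(x, d):
        buf = [xn] + buf[:-1]
        y = sum(wi * xi for wi, xi in zip(w, buf))          # step 1: filter output
        e = dn - y                                          # step 2: error
        w = [wi + mu * e * xi for wi, xi in zip(w, buf)]    # step 4: uses mu(n)
        p = beta * p + (1.0 - beta) * e * e_prev            # step 3: p(n)
        mu = alpha * mu + gamma * p * p                     #         mu(n+1)
        mu = min(mu_max, max(mu_min, mu))                   #         clamp to bounds
        e_prev = e
        errors.append(e)
        steps.append(mu)
    return w, errors, steps

random.seed(4)
h = [0.5, -0.3, 0.2, 0.1]                                   # hypothetical echo path
x = [random.gauss(0.0, 1.0) for _ in range(5000)]
d = [sum(h[i] * x[n - i] for i in range(len(h)) if n - i >= 0)
     + 0.05 * random.gauss(0.0, 1.0)                        # uncorrelated disturbance
     for n in range(len(x))]

w, errors, steps = optimum_vss_lms(x, d, num_taps=len(h))
print([round(wi, 3) for wi in w], steps[0], steps[-1])
```

The weight update is applied before the step size is refreshed so that iteration n uses mu(n), and the new value mu(n+1) only takes effect on the next sample.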

V. SIMULATION RESULTS

A. Comparison table

Algorithm         Average Attenuation (dB)   Average Excess MSE (dB)   Multiplication Operations
LMS               -21.32                     -67.26                    2N+1
NLMS              -48.18                     -126.49                   3N+1
OPTIMUM VSSLMS    -50.62                     -134.33                   3N+8
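To put the multiplication counts in the table into perspective, the short sketch below evaluates them for one filter length. The formulas are those listed in the table; the value N = 1024 and the helper name `multiplications` are assumptions made only for this example.

```python
def multiplications(n_taps):
    """Per-iteration multiplication counts, using the formulas from the table."""
    return {
        "LMS": 2 * n_taps + 1,
        "NLMS": 3 * n_taps + 1,
        "OPTIMUM VSSLMS": 3 * n_taps + 8,
    }

counts = multiplications(1024)        # N = 1024 is a hypothetical filter length
for name, count in counts.items():
    overhead = 100.0 * (count - counts["LMS"]) / counts["LMS"]
    print(f"{name}: {count} multiplications ({overhead:.1f}% more than LMS)")
```

For long filters the constant term is negligible, so the cost is dominated by the coefficient of N.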

Fig 5.1: Input signal

Fig 5.2: Plot of filter output signal

Fig 5.3: Plot of estimated error

Fig 5.4: Plot of mean square error signal

Fig 5.5: Plot of attenuation in dB

VI. CONCLUSION

A number of time-varying step-size algorithms have been proposed to enhance the performance of the conventional LMS algorithm. Experimentation with these algorithms indicates that their performance is highly sensitive to the noise disturbance. The present work discusses an optimum variable step-size LMS-type algorithm providing fast convergence at early stages of adaptation while ensuring small final misadjustment. The performance of the algorithm is not affected by existing uncorrelated noise disturbances. Simulation results comparing the proposed algorithm with the existing algorithms indicate that the OPTIMUM VSS LMS algorithm achieves an average attenuation of -50.62 dB and requires 4N+8 multiplications. It has the greatest attenuation of the above algorithms, and converges much faster than the LMS algorithm. This performance comes at the cost of computational complexity.

REFERENCES

[1] B. Widrow (1976), Stationary and nonstationary learning characteristics of the LMS adaptive filter, Proc. IEEE, 64:1151-1162.

[2] B. Widrow, S.D. Stearns (1985), Adaptive Signal Processing, New York: Prentice-Hall.

[3] S. Haykin (2002), Adaptive Filter Theory, Third Edition, New York: Prentice-Hall.

[4] Hongbing Li, Hailin Tian (2009), A New VSS-LMS Adaptive Filtering Algorithm and Its Application in Adaptive Noise Jamming Cancellation System.

[5] Tyseer Aboulnasr, K. Mayyas, A robust variable step size LMS type algorithm: Analysis and Simulations, IEEE Trans. on Signal Processing.

[6] R. Harris (1986), A variable step size algorithm, IEEE Trans. Acoust., Speech, Signal Processing, vol. ASSP-34, pp. 499-510.

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5814)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1092)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (844)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (590)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (897)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (540)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (348)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (822)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (122)

- Sorrentino: Mosby's Textbook For Nursing Assistants, 9th EditionDocument10 pagesSorrentino: Mosby's Textbook For Nursing Assistants, 9th EditionMariam33% (6)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (401)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- Coa Memo 88-569 Guidelines For AppraisalDocument14 pagesCoa Memo 88-569 Guidelines For Appraisalrubydelacruz100% (7)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- By Michael Hailu Advisor: Bahran Asrat (PHD)Document88 pagesBy Michael Hailu Advisor: Bahran Asrat (PHD)Micheale HailuNo ratings yet

- OFC Assignment QuestionsDocument9 pagesOFC Assignment QuestionsPrashant SangulagiNo ratings yet

- Fog Paper PrashantDocument6 pagesFog Paper PrashantPrashant SangulagiNo ratings yet

- First International Conference On Recent Innovations in Engineering and Technology-2016Document7 pagesFirst International Conference On Recent Innovations in Engineering and Technology-2016Prashant SangulagiNo ratings yet

- Introduction ... 01 2. What Is Cloud Computing? ....................................................................... 02 3. Types of Clouds ..03Document5 pagesIntroduction ... 01 2. What Is Cloud Computing? ....................................................................... 02 3. Types of Clouds ..03Prashant SangulagiNo ratings yet

- Cascade Approach of DWT-SVD Digital Image WatermarkingDocument5 pagesCascade Approach of DWT-SVD Digital Image WatermarkingPrashant SangulagiNo ratings yet

- DSP Viva QuestionsDocument2 pagesDSP Viva QuestionsPrashant Sangulagi0% (1)

- The Load Monitoring and Protection On Electricity Power Lines Using GSM NetworkDocument6 pagesThe Load Monitoring and Protection On Electricity Power Lines Using GSM NetworkPrashant Sangulagi100% (1)

- Kinetic Evaluation of Ethyl Acetate Production For Local Alimentary Solvents ProductionDocument7 pagesKinetic Evaluation of Ethyl Acetate Production For Local Alimentary Solvents ProductionDiego Nicolas ManceraNo ratings yet

- Netbiter Concept Brochure - WebDocument7 pagesNetbiter Concept Brochure - WebVidian Prakasa AriantoNo ratings yet

- Generator Type Eco 3-1Sn/4: Electrical CharacteristicsDocument5 pagesGenerator Type Eco 3-1Sn/4: Electrical CharacteristicsFaridh AmroullohNo ratings yet

- Cr976a - Westlake TyreDocument1 pageCr976a - Westlake TyreLuis Eduardo PuertoNo ratings yet

- Midterm QM MATERIALSDocument28 pagesMidterm QM MATERIALSTrần Thanh TrúcNo ratings yet

- OpenDSS Circuit Interface PDFDocument6 pagesOpenDSS Circuit Interface PDFLucas GodoiNo ratings yet

- The Scientific Contributions of Paul D. Maclean (1913-2007) : John D. Newman, PHD, and James C. Harris, MDDocument3 pagesThe Scientific Contributions of Paul D. Maclean (1913-2007) : John D. Newman, PHD, and James C. Harris, MDJosé C. Maguiña. Neurociencias.No ratings yet

- Compatibility of ENTP With INFP in Relationships TruityDocument1 pageCompatibility of ENTP With INFP in Relationships TruityJill WuNo ratings yet

- Revised Iesco Book of Financial PowersDocument75 pagesRevised Iesco Book of Financial PowersMuhammad IbrarNo ratings yet

- DIFFERENT TYPE OF DRAWING Based On MediaDocument23 pagesDIFFERENT TYPE OF DRAWING Based On MediaLeslyn Bangibang DiazNo ratings yet

- Aits-2021-Open Test-Jeea-Paper-2Document13 pagesAits-2021-Open Test-Jeea-Paper-2Ayush SrivastavNo ratings yet

- Ground Attack - Special EditionDocument32 pagesGround Attack - Special EditionElisa Mardones100% (1)

- Choroid Plexus CystsDocument2 pagesChoroid Plexus Cystsvalerius83No ratings yet

- Notes On Static and Dynamic FrictionDocument2 pagesNotes On Static and Dynamic FrictiondrhillNo ratings yet

- STRAIGHT LINES (Cet)Document11 pagesSTRAIGHT LINES (Cet)Pratheek KeshavNo ratings yet

- CA3162Document8 pagesCA3162marcelo riveraNo ratings yet

- Theorems On Triangle Inequalities and Parallel Lines Cut by A TransversalDocument2 pagesTheorems On Triangle Inequalities and Parallel Lines Cut by A Transversal20100576No ratings yet

- Grade-5-International Kangaroo Mathematics Contest-Benjamin Level-PkDocument9 pagesGrade-5-International Kangaroo Mathematics Contest-Benjamin Level-PkLaiba ManzoorNo ratings yet

- DC 211261Document3 pagesDC 211261debu beraNo ratings yet

- FL WrightDocument20 pagesFL Wrightarpitvyas67100% (1)

- Partnering With Indonesia For 40 YearsDocument8 pagesPartnering With Indonesia For 40 YearsasfsndNo ratings yet

- WAV252 Quick Reference Guide in English Part 2Document2 pagesWAV252 Quick Reference Guide in English Part 2JACKNo ratings yet

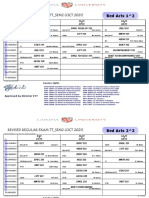

- Revised Regular Exam TT - Sem2 (Oct 2021) : Bed Arts 1 2Document78 pagesRevised Regular Exam TT - Sem2 (Oct 2021) : Bed Arts 1 2kelvinNo ratings yet

- The Seven Deadly SinsDocument47 pagesThe Seven Deadly SinsSilva TNo ratings yet

- BurnerDocument2 pagesBurnerVishnu PatidarNo ratings yet

- Progress Report #1: CO-OP Training in YASREFDocument9 pagesProgress Report #1: CO-OP Training in YASREFMuhammed ajmalNo ratings yet

- Overhaul InfoDocument1 pageOverhaul Infomohamed hamedNo ratings yet