GATE CAT - Diagnostic Test Accuracy Studies

Uploaded by medianaadrianne

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

GATE CAT - Diagnostic Test Accuracy Studies

GATE CAT - Diagnostic Test Accuracy Studies

Uploaded by

medianaadrianneCopyright:

Available Formats

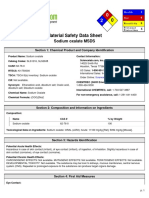

GATE: a Graphic Approach To Evidence based practice

GATE CAT Diagnostic Test Accuracy Studies

Critically Appraised Topic (CAT): Applying the 5 steps of Evidence Based Practice

Using evidence from Diagnostic test accuracy studies

Assessed by:

Date:

Problem: Antibiotics for otitis media

Step 1: Ask a focused 5-part question using PECOT framework

Population / patient / client: Describe relevant patient/client/population group (be specific about: symptoms, signs, medical condition, age group, sex, etc.) that you are considering testing

Exposure (Target disorder): Antibiotics

Comparison (no Target disorder): Placebo

Outcome (Test): Describe the test, including levels/categories if relevant, that you are considering doing (note the outcome in a diagnostic test accuracy study is the test result).

Time: Time is not usually considered explicitly in a diagnostic test accuracy question

Step 2: Access (Search) for the best evidence using the PECOT framework

| PECOT item | Primary Search Term | | Synonym 1 | | Synonym 2 | |
| --- | --- | --- | --- | --- | --- | --- |
| Population / Participants / patients / clients | Enter your key search terms for P, E & O. C & T seldom useful for searching. Add MeSH terms (e.g. sensitivity & specificity) +/or diagnostic filter to refine. Use MeSH terms (from PubMed) if available, then text words. | OR | Include relevant synonym | OR | Include relevant synonym | AND |
| Exposure (Target disorder) | As above | OR | As above | OR | As above | AND |
| Comparison (no Target disorder) | As above | OR | As above | OR | As above | AND |
| Outcomes (Test) | As above | OR | As above | OR | As above | AND |
| (Time) | As above | AND | As above | AND | As above | |

Limits & Filters: PubMed has Limits (e.g. age, English language, years) & PubMed Clinical Queries has Filters (e.g. study type) to help focus your search. List those used.
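The search grid above combines synonyms with OR inside each PECOT item and joins the items with AND. A minimal sketch of that logic follows; the function name `build_query` and the example search terms are hypothetical illustrations, not part of the GATE form, and the output is a plain Boolean string rather than a database-specific syntax.

```python
def build_query(concepts):
    """Join synonyms with OR inside each concept, then join concepts with AND.

    `concepts` is a list of lists: one inner list of search terms per PECOT item.
    Empty items (e.g. C and T, which are seldom useful for searching) are skipped.
    """
    groups = []
    for terms in concepts:
        cleaned = [t.strip() for t in terms if t and t.strip()]
        if not cleaned:
            continue
        # Quote multi-word terms so they are treated as phrases.
        quoted = ['"%s"' % t if " " in t else t for t in cleaned]
        group = " OR ".join(quoted)
        groups.append("(" + group + ")" if len(quoted) > 1 else group)
    return " AND ".join(groups)


# Hypothetical example terms, chosen only to show the shape of the result.
query = build_query([
    ["otitis media", "middle ear infection"],  # P / E: primary term + synonym
    [],                                        # C: left empty
    ["tympanometry"],                          # O: the test
])
print(query)
```

Limits and filters are applied in the database interface itself, so they are deliberately left out of this sketch.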

Databases searched:

| Database | Number of publications (Hits) |
| --- | --- |
| Cochrane SRs | Enter number of hits from Cochrane database search for Systematic Reviews (SR). |
| Other Secondary Sources | Enter number of hits from other secondary sources (specify source) |
| PubMed / Ovid Medline | Enter number of hits from PubMed / Ovid / etc (specify database) |
| Other | Enter number of hits from other sources (e.g. Google Scholar, Google) |

Evidence Selected: Enter the full citation of the publication you have selected to evaluate.

Justification for selection: State the main objectives of the study. Explain why you chose this publication for evaluation.

V3: 2013: Please contribute your comments and suggestions on this form to: rt.jackson@auckland.ac.nz

Diagnostic test accuracy studies

Step 3: Appraise Study

3a. Describe study by hanging it on the GATE frame (also enter study numbers into the separate Excel GATE calculator)

[GATE frame diagram: Setting; Eligible population; Participants; Exposure Group (EG, RS +ve) and Comparison Group (CG, RS -ve); Outcomes (O) with cells TP, FP, FN and TN; Time (T)]

Population

Study Setting: Describe when & from where participants were recruited (e.g. what year(s), which country, urban / rural / hospital / community)

Eligible Population: Define eligible population / main eligibility (inclusion and exclusion) criteria (e.g. was eligibility based on presenting symptoms / signs, results of previous tests, or participants who had received the test or reference standard?)

Recruitment process: Describe recruitment process (e.g. were eligibles recruited from hospital admissions / electoral / birth register, etc). How were they recruited (e.g. consecutive eligibles)? What percentage of the invited eligibles participated? What reasons were given for non-participation among those otherwise eligible?

Exposure & Comparison

Allocation method: Allocated by measurement of Target disorder into those with disorder (Ref standard +ve) & those without disorder (Ref standard -ve)

Exposure (Ref Std. +ve): Describe reference standard positive disorder: what, how defined, how measured, when, by whom (level of expertise?). Include description of categories if more than yes/no

Comparison (Ref Std. -ve): Describe reference standard negative disorder (as above)

Outcomes

Outcome(s) (Test): Describe the diagnostic test: what, how defined, how measured, when, by whom (level of expertise?). Include description of categories if more than yes/no

Time: State when test was done in relation to when the reference standard was done.

Reported Results: Enter the main reported results

| Outcome | Risk estimate | Confidence Interval |
| --- | --- | --- |
| Sensitivity | | |
| Specificity | | |
| +ve LR | | |
| -ve LR | | |
| PPV | | |
| NPV | | |

Complete the Numbers on the separate GATE Calculator for Diagnostic Studies
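As a cross-check on the calculator, the six measures in the results table can be derived from the four cells of the 2x2 table using their standard definitions. The sketch below is not the GATE calculator itself; the function name `accuracy_measures` and the example counts are hypothetical.

```python
def accuracy_measures(tp, fp, fn, tn):
    """Standard diagnostic accuracy measures from a 2x2 table.

    tp, fn: reference standard positive, test positive / negative
    fp, tn: reference standard negative, test positive / negative
    """
    if min(tp + fn, fp + tn, tp + fp, fn + tn) == 0:
        raise ValueError("every row and column total must be non-zero")
    sens = tp / (tp + fn)   # proportion of diseased who test positive
    spec = tn / (fp + tn)   # proportion of non-diseased who test negative
    return {
        "sensitivity": sens,
        "specificity": spec,
        # Likelihood ratios are undefined (infinite) at the extremes.
        "lr_pos": sens / (1 - spec) if spec < 1 else float("inf"),
        "lr_neg": (1 - sens) / spec if spec > 0 else float("inf"),
        "ppv": tp / (tp + fp),  # depends on prevalence in the study
        "npv": tn / (fn + tn),  # depends on prevalence in the study
    }


# Hypothetical counts, for illustration only.
for name, value in accuracy_measures(tp=90, fp=20, fn=10, tn=80).items():
    print(f"{name}: {value:.3f}")
```

With these example counts, sensitivity is 0.90 and specificity is 0.80, giving a positive likelihood ratio of about 4.5.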

3b. Assess risk of errors using RAMboMAN

Table columns: Appraisal questions (RAMboMAN); Risk of errors (+, x, ?, na); Notes

Recruitment/Applicability errors: questions on risks to application of results in practice are in blue boxes

Internal study design errors: questions on risk of errors within study (design & conduct) are in pink boxes

Analyses errors: questions on errors in analyses are in orange boxes

Random error: questions on risk of errors due to chance are in the green box

Key for scoring risk of errors: + = low; x = of concern; ? = unclear; na = not applicable

Recruitment appropriate to study objectives? Is it possible to define groups/populations that the findings are applicable to?

Score risk of error as: +, x, ? or na (see key above)

Participant Population

| Appraisal question | Risk of errors | Notes |
| --- | --- | --- |
| Study Setting appropriate? | | Does the study setting (e.g. what year(s), which country, urban / rural, hospital / community) support the applicability of the study results? |
| Study planned before reference standard and tests done? | | Was the study done prospectively or was it a retrospective use of available data? If retrospective, was the participant population chosen primarily because of available test data or target disorder data? |
| Eligible population appropriate? | | Was the eligible population from which participants were identified relevant to the study objective? Were inclusion & exclusion criteria well defined & applied similarly to all potential eligibles? |
| Participants similar to all eligibles? | | Did the recruitment process identify participants likely to be similar to all eligibles? Was sufficient information given about eligibles who did not participate? |
| Key personal (risk/prognostic) characteristics of participants reported? | | Was there sufficient information about baseline characteristics of participants to determine the applicability of the study results? Was any important information missing? |
| Appropriate spectrum of participants? | | Was there an appropriate spectrum of people similar to those in whom the test would be used in practice? |

Allocation to EG & CG done well?

Exposures & Comparisons

| Appraisal question | Risk of errors | Notes |
| --- | --- | --- |
| Reference standard sufficiently well defined and well measured so participants allocated to correct Target disorder groups? | | Were reference standard definitions described in sufficient detail for the measurements to be replicated? Were the measurements done accurately? Were criteria / cut-off levels of categories well justified? |
| Reference standard measured prior to Test? If not, was it measured blind to Test result? | | Was reference standard administered whatever the test result and interpreted without knowledge of the test result? If not, was it likely to cause bias? |
| Prevalence (pre-test probability) of Target disorder typical of usual practice? | | Note: If prevalence (pre-test probability) of target disorder is similar to usual practice, these data can be used to help determine post-test probabilities in practice (also need LRs) |
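The note on prevalence refers to combining a pre-test probability with a likelihood ratio. The standard route is to convert the probability to odds, multiply by the LR, and convert back. The sketch below illustrates that arithmetic; the function name and example values are hypothetical.

```python
def post_test_probability(pre_test_prob, likelihood_ratio):
    """Convert a pre-test probability to a post-test probability via odds."""
    if not 0 < pre_test_prob < 1:
        raise ValueError("pre_test_prob must be strictly between 0 and 1")
    if likelihood_ratio < 0:
        raise ValueError("likelihood_ratio must be non-negative")
    pre_odds = pre_test_prob / (1 - pre_test_prob)
    post_odds = pre_odds * likelihood_ratio
    return post_odds / (1 + post_odds)


# Hypothetical values: 20% prevalence, positive test with +ve LR of 4.5.
print(round(post_test_probability(0.20, 4.5), 3))
```

Passing the +ve LR gives the probability of disease after a positive test; passing the -ve LR gives the probability of disease after a negative test.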

Maintenance in allocated groups and throughout study sufficient?

| Appraisal question | Risk of errors | Notes |
| --- | --- | --- |
| Proportion of intended participants receiving both Test and Reference Standard sufficiently high? | | Was there a particular subgroup of the eligible participants not given either the Test or the Reference Standard? Was this sufficient to cause important errors? |
| Change in Target disorder/Test status in period between Test and Reference Standard being administered | | If there was a considerable delay between Test and Reference Standard, could some new events have occurred or treatment have been started that could influence the results of the Test/Ref Standard? If so, was this sufficient to cause important bias? |

blind or objective Measurement of Outcomes: were they done accurately?

Outcomes

| Appraisal question | Risk of errors | Notes |
| --- | --- | --- |
| Test measured blind to Reference Standard status? | | Were Testers aware of whether participants were Reference Standard positive or negative? If yes, was this likely to lead to biased measurement? |
| Test measured objectively? | | How objective were the Test measurements (e.g. automatic test, strict criteria)? Where significant judgment was required, were independent adjudicators used? Was reliability of measures relevant (inter-rater & intra-rater), & if so, reported? |
| Test safe, available, affordable & acceptable in usual practice? | | Would it be practical to implement this Test in usual practice? How safe, available, affordable & acceptable might it be? |
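Where a study reports inter-rater reliability for a yes/no test, one commonly used statistic is Cohen's kappa, which corrects observed agreement for agreement expected by chance. The form does not name a specific statistic, so treat this as one possible illustration; the function name and counts are hypothetical.

```python
def cohens_kappa(both_pos, a_pos_b_neg, a_neg_b_pos, both_neg):
    """Cohen's kappa for two raters classifying the same cases as +ve or -ve."""
    n = both_pos + a_pos_b_neg + a_neg_b_pos + both_neg
    if n == 0:
        raise ValueError("no observations")
    observed = (both_pos + both_neg) / n
    a_pos = (both_pos + a_pos_b_neg) / n   # rater A's positive rate
    b_pos = (both_pos + a_neg_b_pos) / n   # rater B's positive rate
    expected = a_pos * b_pos + (1 - a_pos) * (1 - b_pos)
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)


# Hypothetical counts: the raters agree on 85 of 100 cases.
print(round(cohens_kappa(both_pos=40, a_pos_b_neg=10, a_neg_b_pos=5, both_neg=45), 2))
```

A kappa near 1 indicates agreement well beyond chance, and a value near 0 indicates agreement no better than chance.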

ANalyses: were they done appropriately?

Results

| Appraisal question | Risk of errors | Notes |
| --- | --- | --- |
| If Ref Standard +ve & -ve groups not similar at baseline was this adjusted for in the analyses? | | Some factors that differ between those with & without the target disorder could affect test accuracy (e.g. age, obesity, co-morbidities), although these are typically not reported. |
| Estimates of Test sensitivity/specificity etc given or calculable? Were they calculated correctly? | | Were raw data reported in enough detail to allow 2x2 tables to be constructed (i.e. TP, FP, FN & TN) in GATE frame & to calculate estimates of test specificity and sensitivity if entered into GATE calculator? Were GATE results similar to reported results? |
| Measures of the amount of random error in estimates given or calculable? Were they calculated correctly? | | Were confidence intervals &/or p-values for study results given or possible to calculate? If they could be entered into GATE calculator, were GATE results similar to reported results? |
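When a paper reports only counts, a confidence interval for sensitivity or specificity can be calculated directly, since each is a simple proportion. The sketch below uses the Wilson score interval, which is one common choice. The method used by the GATE calculator is not stated in the form, so small differences from its output are possible. The function name and counts are hypothetical.

```python
import math


def wilson_interval(successes, total, z=1.96):
    """Wilson score interval for a proportion (z=1.96 gives roughly 95%)."""
    if total <= 0 or not 0 <= successes <= total:
        raise ValueError("need 0 <= successes <= total and total > 0")
    p = successes / total
    denom = 1 + z ** 2 / total
    centre = (p + z ** 2 / (2 * total)) / denom
    half_width = z * math.sqrt(p * (1 - p) / total + z ** 2 / (4 * total ** 2)) / denom
    return centre - half_width, centre + half_width


# Hypothetical example: sensitivity of 90/100 (TP = 90, FN = 10).
low, high = wilson_interval(90, 100)
print(f"{low:.3f} to {high:.3f}")
```

For sensitivity, pass TP and TP + FN; for specificity, pass TN and TN + FP.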

Summary of Study Appraisal

| Summary question | Risk of errors | Notes |
| --- | --- | --- |
| Was the risk of error due to internal study design & conduct low enough for the results to be reasonably unbiased? | | Use responses to questions in pink boxes above |
| Was the risk of errors due to study analyses sufficiently low for the results to be reasonably unbiased? | | Use responses from the orange boxes above |
| Was the amount of random error in study results low enough for the results to be meaningful? | | Use responses to questions in green box above. Would you make a different decision if the true effect was close to the upper confidence limit rather than close to the lower confidence limit? |
| What is your level of concern about the applicability of these findings to your problem? | | Use responses to questions in blue boxes above |

Step 4: Apply. Consider/weigh up all factors & make (shared) decision to act

[Figure: The X-factor. Labels: Epidemiological Evidence; Case Circumstances; System features; Values & preferences; Decision; Patient & Family; Practitioner; Community; Economic; Legal; Political]

Epidemiological evidence: summarise the quality of the study appraised, the magnitude and precision of the measure(s) estimated and the applicability of the evidence. Also summarise its consistency with other studies (ideally systematic reviews) relevant to the decision.

Case circumstances: what circumstances (e.g. disease process / co-morbidities [mechanistic evidence], social situation) specifically related to the problem you are investigating may impact on the decision?

System features: were there any system constraints or enablers that may impact on the decision?

Values & preferences: what values & preferences may need to be considered in making the decision?

Decision: Taking into account all the factors above what is the best decision in this case?

Step 5: Audit usual practice (For Quality Improvement)

Is there likely to be a gap between your usual practice and best practice for the problem?
