Named Entity Recognition in the Domain of Polish Stock Exchange Reports

Michał Marcińczuk and Maciej Piasecki

Wrocław University of Technology

June 7, 2010

Project NEKST (Natively Enhanced Knowledge Sharing Technologies), co-financed by the Innovative Economy Programme, project POIG.01.01.02-14-013/09

Introduction

Overview:

- the problem of Named Entity Recognition,
- recognition of PERSON and COMPANY annotations,
- two corpora: Stock Exchange Reports from the economic domain and Police Reports from the security domain,
- combination of a machine learning approach with manually created rules,
- application of Hidden Markov Models.

The corpus was published at http://nlp.pwr.wroc.pl/gpw/download

Task Definition

We defined NEs as language expressions referring to extra-linguistic real or abstract objects of the preselected kinds. We limited the Named Entity Recognition task to identifying expressions consisting of proper names referring to PERSON and COMPANY entities.

Examples of correct and incorrect expressions of the PERSON type:

- correct: R. Dolea, Marek Wiak, Luis Manuel Conceicao do Amaral,
- incorrect: person names that are part of a company name (Moore Stephens Trzemżalski, Krynicki i Partnerzy Kancelaria Biegłych Rewidentów Sp. z o.o.) or of a location name (pl. Jana Pawła II).

Corpora of Economic Domain

Corpus of Stock Exchange Reports (CSER)

- 1215 documents,
- 282 376 tokens,
- 670 PERSON and 3 238 COMPANY annotations,
- source: http://gpwinfostrefa.pl.

Characteristics

- a set of economic reports published by companies,
- very formal style of writing,
- many expressions that start with an upper-case letter but are not proper names,
- many names of institutions, organizations, companies, people and locations.

Corpora of Security Domain

Corpus of Police Reports (CPR)

- 12 documents,
- 29 569 tokens,
- 555 PERSON and 121 COMPANY annotations,
- source: [Graliński et al., 2009].

Characteristics

- a set of statements produced by witnesses and suspects,
- rather informal style of writing,
- many pseudonyms and common words used as proper names,
- many one-word person names.

Corpus Development

To annotate the corpora we developed and used the Inforex system.

System features:

- web-based: does not require installation (only a Firefox browser with JavaScript enabled),
- remote: corpora are stored on a server,
- shared: corpora can be annotated simultaneously by many users.

Baselines

- Heuristic: matches a sequence of words starting with an upper-case letter. For COMPANY, the name must end with an activity form, i.e. SA, LLC, Spółka, AG, S.A., Sp., B.V.
- Gazetteers: matches a sequence of words present in a dictionary of first names and last names (63 555 entries) or company names (6 200 entries) [Piskorski, 2004].
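
The heuristic baseline can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the whitespace tokenization, the function names and the example sentence are hypothetical, and the slides do not say how the original implementation handled sentence-initial words or punctuation.

```python
# Activity forms listed on the slide; a COMPANY candidate must end with one of them.
ACTIVITY_FORMS = {"SA", "LLC", "Spółka", "AG", "S.A.", "Sp.", "B.V."}


def capitalized_runs(tokens):
    """Yield (start, end) index pairs (end exclusive) of maximal runs of
    tokens whose first character is an upper-case letter."""
    start = None
    for i, tok in enumerate(tokens):
        if tok[:1].isupper():
            if start is None:
                start = i
        elif start is not None:
            yield (start, i)
            start = None
    if start is not None:
        yield (start, len(tokens))


def person_candidates(tokens):
    """Every capitalized run is a PERSON candidate (assumed reading of the slide)."""
    return list(capitalized_runs(tokens))


def company_candidates(tokens):
    """Capitalized runs whose last token is an activity form."""
    return [(s, e) for s, e in capitalized_runs(tokens)
            if tokens[e - 1] in ACTIVITY_FORMS]


# Hypothetical example sentence, tokenized on whitespace.
tokens = "Pan Jan Nowak został prezesem Alfa SA .".split()
print(person_candidates(tokens))   # [(0, 3), (5, 7)]
print(company_candidates(tokens))  # [(5, 7)]
```

Note that the first PERSON candidate also covers the title "Pan" and that the company name is matched as a PERSON candidate too, which gives an intuition for why such a heuristic reaches high recall at very low precision.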

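| Baseline | Measure | CSER PERSON | CSER COMPANY | CPR PERSON | CPR COMPANY |
|---|---|---|---|---|---|
| Heuristic | Precision | 0.89 % | 0.76 % | 19.35 % | 0.19 % |
| Heuristic | Recall | 42.45 % | 4.42 % | 93.87 % | 4.13 % |
| Heuristic | F1-measure | 1.75 % | 1.29 % | 32.09 % | 0.36 % |
| Gazetteers | Precision | 9.61 % | 37.01 % | 46.79 % | 21.05 % |
| Gazetteers | Recall | 41.19 % | 40.54 % | 9.02 % | 3.31 % |
| Gazetteers | F1-measure | 15.59 % | 38.69 % | 15.12 % | 5.71 % |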
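
The F1-measure reported throughout is the harmonic mean of precision and recall, F1 = 2PR / (P + R). A minimal sketch (the function name is an assumption) that recomputes one of the reported values, the heuristic baseline for PERSON on CPR:

```python
def f1_measure(precision, recall):
    """Harmonic mean of precision and recall; both given in percent."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Heuristic baseline, PERSON on CPR: P = 19.35 %, R = 93.87 %.
print(round(f1_measure(19.35, 93.87), 2))  # 32.09
```

Because the slides report precision and recall rounded to two decimals, values recomputed this way can differ from the reported F1 in the last digit.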

Recognition Based on HMM

LingPipe [Alias-i, 2008] implementation of HMM

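- 7 hidden states for every annotation type,
- 3 additional states (BOS, EOS, middle token),
- Witten-Bell smoothing,
- first-best decoder based on the Viterbi algorithm.

Example: Pan Jan Nowak został nominowany na stanowisko prezesa. (Mr. Jan Nowak was nominated for the chairman position.)

| Token | State |
|---|---|
| (sentence start) | BOS |
| Pan | E-O-PER |
| Jan | B-PER |
| Nowak | E-PER |
| został | B-O-PER |
| nominowany | W-O |
| na | W-O |
| stanowisko | W-O |
| prezesa | W-O |
| . | W-O |
| (sentence end) | EOS |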
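
To make the state labelling in the example concrete, the sketch below encodes a tokenized sentence with annotated spans into such a state sequence. It is inferred from the single example on this slide and is not LingPipe's implementation: the labels `M-<type>` and `W-<type>` (middle token and one-token annotation) are assumptions made by analogy, a token lying directly between two annotations is simply labelled as preceding the next one, and the seventh state per type is not shown on the slide and is not modelled here.

```python
def encode_states(tokens, annotations):
    """Map tokens to state labels.

    annotations: non-overlapping (start, end, type) spans, end exclusive.
    """
    n = len(tokens)
    states = ["W-O"] * n          # default: token away from any annotation
    inside = [False] * n
    for start, end, kind in annotations:
        for i in range(start, end):
            inside[i] = True
        if end - start == 1:
            states[start] = f"W-{kind}"          # assumed label
        else:
            states[start] = f"B-{kind}"
            states[end - 1] = f"E-{kind}"
            for i in range(start + 1, end - 1):
                states[i] = f"M-{kind}"          # assumed label
    for start, end, kind in annotations:
        # Token directly after an annotation.
        if end < n and not inside[end]:
            states[end] = f"B-O-{kind}"
    for start, end, kind in annotations:
        # Token directly before an annotation (takes precedence, by assumption).
        if start > 0 and not inside[start - 1]:
            states[start - 1] = f"E-O-{kind}"
    return ["BOS"] + states + ["EOS"]


tokens = "Pan Jan Nowak został nominowany na stanowisko prezesa .".split()
print(encode_states(tokens, [(1, 3, "PER")]))
```

For the sentence from the slide this reproduces the sequence BOS, E-O-PER, B-PER, E-PER, B-O-PER, five times W-O, EOS.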

Single Domain Evaluation (PERSON)

We performed a 10-fold Cross Validation on the Stock Exchange Corpus for PERSON annotations.

| Method | Precision | Recall | F1-measure |
|---|---|---|---|
| Heuristic | 0.89 % | 42.45 % | 1.75 % |
| Gazetteers | 9.61 % | 41.19 % | 15.59 % |
| HMM | 64.74 % | 93.73 % | 76.59 % |
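
A 10-fold cross-validation split can be sketched as follows. The slides do not say whether the folds were built per document or per sentence, nor whether the data was shuffled; splitting shuffled documents with a fixed seed is an assumption of this sketch, as is the function name.

```python
import random


def k_fold_splits(documents, k=10, seed=0):
    """Yield (train, test) lists; every document is in exactly one test fold."""
    docs = list(documents)
    random.Random(seed).shuffle(docs)
    folds = [docs[i::k] for i in range(k)]   # k folds of near-equal size
    for i in range(k):
        test = folds[i]
        train = [d for j, fold in enumerate(folds) if j != i for d in fold]
        yield train, test


# 1215 document identifiers, matching the size of the Stock Exchange corpus.
for train, test in k_fold_splits(range(1215)):
    pass  # train a model on `train`, evaluate it on `test`
```

The reported scores would then be aggregated over the ten test folds.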

Error Analysis

We have identified 10 types of errors.

| No. | Error type | Full matches | Partial matches | Total |
|---|---|---|---|---|
| 1 | Name of institution, company, etc. | 38 | 91 | 129 |
| 2 | Name of location (street, place, etc.) | 30 | 16 | 46 |
| 3 | Other proper names | 2 | 10 | 12 |
| 4 | Phrases in English | | 21 | 21 |
| 5 | Incorrect annotation boundary | | 35 | 35 |
| 6 | Common word starting with an upper-case character | | 6 | 6 |
| 7 | Common word starting with a lower-case character | | 26 | 26 |
| 8 | Single character | | 6 | 6 |
| 9 | Common word with a spelling error | | 3 | 3 |
| 10 | Other | | 46 | 46 |

The error types were grouped by the remedy they call for:

- A) types 1, 2, 3 (incorrect type of annotation): recognition of COMPANY and LOCATION,
- B) types 4, 7, 8, 9 (lower-case and non-alphabetic expressions): rule filtering,
- C) type 5 (incorrect annotation boundary): annotation merging and trimming.

Single Domain Evaluation (PERSON & COMPANY)

Referring to group A of errors, we re-annotated the CSER with COMPANY annotations and repeated the 10-fold CV.

| Measure | PERSON (REV) | PERSON (COMB) | COMPANY |
|---|---|---|---|
| Precision | 64.74 %* | 78.63 % | 76.56 % |
| Recall | 93.73 %* | 94.62 % | 83.14 % |
| F1-measure | 76.59 %* | 85.89 % | 79.71 % |

* results from the previous 10-fold CV

Post-filtering

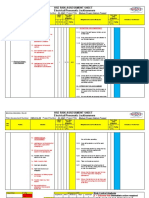

Referring to groups B and C of errors, we applied two types of post-processing: annotation filtering and merging.

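| Annotation | Measure | HMM | +filtering | +trimming | +both |
|---|---|---|---|---|---|
| PERSON (REV) | Precision | 64.74 %* | 76.27 % | 64.85 % | 75.82 % |
| PERSON (REV) | Recall | 93.73 %* | 91.64 % | 93.88 % | 91.77 % |
| PERSON (REV) | F1-measure | 76.59 %* | 83.25 % | 76.71 % | 83.03 % |
| PERSON (COMB) | Precision | 78.63 %* | 87.16 % | 78.76 % | 86.69 % |
| PERSON (COMB) | Recall | 94.62 %* | 91.33 % | 94.77 % | 91.48 % |
| PERSON (COMB) | F1-measure | 85.89 %* | 89.20 % | 86.02 % | 89.02 % |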

* results from the previous 10-fold CV
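
A rule-based post-processing step of this kind might look like the sketch below. The actual rules are not given on the slides; the ones used here (drop single characters, expressions without letters, and expressions that do not start with an upper-case letter; trim boundary tokens that contain no letters) are hypothetical and only loosely follow error groups B and C.

```python
def has_letter(token):
    """True if the token contains at least one alphabetic character."""
    return any(ch.isalpha() for ch in token)


def keep_annotation(tokens):
    """Return True if a PERSON candidate passes the (hypothetical) filter rules."""
    text = " ".join(tokens)
    if len(text) <= 1:                 # single character
        return False
    if not has_letter(text):           # non-alphabetic expression
        return False
    if not tokens[0][:1].isupper():    # does not start with an upper-case letter
        return False
    return True


def trim_annotation(tokens):
    """Drop leading and trailing tokens that contain no letters (e.g. punctuation)."""
    start, end = 0, len(tokens)
    while start < end and not has_letter(tokens[start]):
        start += 1
    while end > start and not has_letter(tokens[end - 1]):
        end -= 1
    return tokens[start:end]


def post_process(candidates):
    """Trim every candidate, then keep only those that pass the filter."""
    trimmed = (trim_annotation(c) for c in candidates)
    return [c for c in trimmed if c and keep_annotation(c)]


candidates = [["Jan", "Nowak"], ["zarząd"], ["A"],
              ["12", "/", "2010"], [",", "Marek", "Nowak", "."]]
print(post_process(candidates))  # [['Jan', 'Nowak'], ['Marek', 'Nowak']]
```

Filtering of this kind can only remove candidates, which is consistent with the table: precision rises while recall drops slightly.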

Cross Domain Evaluation

The system was trained on the Corpus of Stock Exchange and tested on the Corpus of Police Reports.

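| Annotation | Measure | HMM | +filtering | +trimming | +both |
|---|---|---|---|---|---|
| PERSON (REV) | Precision | 27.73 % | 62.91 % | 32.16 % | 53.71 % |
| PERSON (REV) | Recall | 48.47 % | 48.29 % | 56.22 % | 56.04 % |
| PERSON (REV) | F1-measure | 35.28 % | 54.64 % | 40.92 % | 54.85 % |
| PERSON (COMB) | Precision | 29.81 % | 69.75 % | 37.13 % | 58.33 % |
| PERSON (COMB) | Recall | 39.64 % | 39.46 % | 49.37 % | 49.19 % |
| PERSON (COMB) | F1-measure | 34.03 % | 50.40 % | 42.38 % | 53.37 % |
| COMPANY | Precision | 12.30 % | - | - | - |
| COMPANY | Recall | 56.20 % | - | - | - |
| COMPANY | F1-measure | 20.18 % | - | - | - |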
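
Scores like these are obtained by comparing predicted annotations with the gold annotations of the test corpus. The slides do not state the matching criterion; the sketch below assumes that a prediction counts as correct only if document, boundaries and type all match exactly, and the tuple layout `(doc_id, start, end, type)` is hypothetical.

```python
def evaluate(gold, predicted):
    """Exact-match precision, recall and F1 (in percent) over annotation sets."""
    gold, predicted = set(gold), set(predicted)
    true_positives = len(gold & predicted)
    precision = 100.0 * true_positives / len(predicted) if predicted else 0.0
    recall = 100.0 * true_positives / len(gold) if gold else 0.0
    if true_positives == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Toy data: one of two predictions matches one of three gold annotations.
gold = {(0, 1, 3, "PERSON"), (0, 5, 7, "COMPANY"), (1, 0, 2, "PERSON")}
predicted = {(0, 1, 3, "PERSON"), (0, 4, 7, "COMPANY")}
print(evaluate(gold, predicted))
```

In the cross-domain setting the model is trained on one corpus and `gold` comes from the other, so the same function applies unchanged.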

Conclusion & Plans

Conclusion

- results of the single-domain evaluation are promising,
- simple rule-based post-processing of the HMM output can improve the final results,
- low domain portability: better utilization of gazetteers and rules is needed.

Plans

- to extend the annotation schema with LOCATION and ORGANIZATION,
- to collect a new corpus for cross-domain evaluation,
- to develop new rules for post-processing (for example, to fix a problem with sentence segmentation),
- to incorporate other sources of knowledge (rules and gazetteers for majority voting, plWordNet for generalization),
- to try new learning models: HMM including morphology, other ML methods with context features (preceding verbs, prepositions, etc.).

References

- Alias-i: LingPipe 3.9.0. http://alias-i.com/lingpipe (October 1, 2008)
- Graliński, F., Jassem, K., Marcińczuk, M., Wawrzyniak, P.: Named Entity Recognition in Machine Anonymization. In: Kłopotek, M. A., Przepiórkowski, A., Wierzchoń, A. T., Trojanowski, K. (eds.) Recent Advances in Intelligent Information Systems, pp. 247-260. Academic Publishing House Exit (2009)
- Piskorski, J.: Extraction of Polish named entities. In: Proceedings of the Fourth International Conference on Language Resources and Evaluation, LREC 2004, pp. 313-316. ACL, Prague, Czech Republic (2004)
