7 1 Convolutional Encoding

Uploaded by

Hoàng Phi Hải

7.1 CONVOLUTIONAL ENCODING

In Figure 1.2 we presented a typical block diagram of a digital communication system. A version of this functional diagram, focusing primarily on the convolutional encode/decode and modulate/demodulate portions of the communication link, is shown in Figure 7.1. The input message source is denoted by the sequence m = m_1, m_2, . . . , m_i, . . . , where each m_i represents a binary digit (bit), and i is a time index. To be precise, one should denote the elements of m with an index for class membership (e.g., for binary codes, 1 or 0) and an index for time. However, in this chapter, for simplicity, indexing is only used to indicate time (or location within a sequence). We shall assume that each m_i is equally likely to be a one or a zero, and independent from digit to digit. Being independent, the bit sequence lacks any redundancy; that is, knowledge about bit m_i gives no information about m_j (i ≠ j). The encoder transforms each sequence m into a unique codeword sequence U = G(m). Even though the sequence m uniquely defines the sequence U, a key feature of convolutional codes is that a given k-tuple within m does not uniquely define its associated n-tuple within U, since the encoding of each k-tuple is not only a function of that k-tuple but is also a function of the K - 1 input k-tuples that precede it. The sequence U can be partitioned into a sequence of branch words: U = U_1, U_2, . . . , U_i, . . . . Each branch word U_i is made up of binary code symbols, often called channel symbols, channel bits, or code bits; unlike the input message bits, the code symbols are not independent.

In a typical communication application, the codeword sequence U modulates a waveform s(t). During transmission, the waveform s(t) is corrupted by noise, resulting in a received waveform ŝ(t) and a demodulated sequence Z = Z_1, Z_2, . . . , Z_i, . . . , as indicated in Figure 7.1. The task of the decoder is to produce an estimate m̂ = m̂_1, m̂_2, . . . , m̂_i, . . . of the original message sequence, using the received sequence Z together with a priori knowledge of the encoding procedure.

A general convolutional encoder, shown in Figure 7.2, is mechanized with a kK-stage shift register and n modulo-2 adders, where K is the constraint length.

The constraint length represents the number of k-bit shifts over which a single information bit can influence the encoder output. At each unit of time, k bits are shifted into the first k stages of the register; all bits in the register are shifted k stages to the right, and the outputs of the n adders are sequentially sampled to yield the binary code symbols or code bits. These code symbols are then used by the modulator to specify the waveforms to be transmitted over the channel. Since there are n code bits for each input group of k message bits, the code rate is k/n message bit per code bit, where k < n.

We shall consider only the most commonly used binary convolutional encoders for which k = 1, that is, those encoders in which the message bits are shifted into the encoder one bit at a time, although generalization to higher order alphabets is straightforward [1, 2]. For the k = 1 encoder, at the ith unit of time, message bit m_i is shifted into the first shift register stage; all previous bits in the register are shifted one stage to the right, and as in the more general case, the outputs of the n adders are sequentially sampled and transmitted. Since there are n code bits for each message bit, the code rate is 1/n. The n code symbols occurring at time t_i comprise the ith branch word, U_i = u_1i, u_2i, . . . , u_ni, where u_ji (j = 1, 2, . . . , n) is the jth code symbol belonging to the ith branch word. Note that for the rate 1/n encoder, the kK-stage shift register can be referred to simply as a K-stage register, and the constraint length K, which was expressed in units of k-tuple stages, can be referred to as constraint length in units of bits.

7.2 CONVOLUTIONAL ENCODER REPRESENTATION

To describe a convolutional code, one needs to characterize the encoding function G(m), so that given an input sequence m, one can readily compute the output sequence U. Several methods are used for representing a convolutional encoder, the most popular being the connection pictorial, connection vectors or polynomials, the state diagram, the tree diagram, and the trellis diagram. They are each described below.

7.2.1 Connection Representation

We shall use the convolutional encoder, shown in Figure 7.3, as a model for discussing convolutional encoders. The figure illustrates a (2, 1) convolutional encoder with constraint length K = 3. There are n = 2 modulo-2 adders; thus the code rate k/n is 1/2. At each input bit time, a bit is shifted into the leftmost stage and the bits in the register are shifted one position to the right. Next, the output switch samples the output of each modulo-2 adder (i.e., first the upper adder, then the lower adder), thus forming the code symbol pair making up the branch word associated with the bit just inputted. The sampling is repeated for each inputted bit. The choice of connections between the adders and the stages of the register gives rise to the characteristics of the code. Any change in the choice of connections results in a different code. The connections are, of course, not chosen or changed arbitrarily. The problem of choosing connections to yield good distance properties is complicated and has not been solved in general; however, good codes have been found by computer search for all constraint lengths less than about 20 [3-5].
Unlike a block code that has a fixed word length n, a convolutional code has no particular block size. However, convolutional codes are often forced into a block structure by periodic truncation. This requires a number of zero bits to be appended to the end of the input data sequence, for the purpose of clearing or flushing the encoding shift register of the data bits. Since the added zeros carry no information, the effective code rate falls below k/n. To keep the code rate close to k/n, the truncation period is generally made as long as practical.
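The flushing procedure and its effect on the code rate can be sketched in a few lines of Python. This is a minimal illustration for k = 1 encoders, not a definitive implementation: the function names `conv_encode` and `effective_rate` are hypothetical, and the default connection vectors (1 1 1 and 1 0 1) are the ones the text assigns to the Figure 7.3 encoder in the next paragraph.

```python
from fractions import Fraction


def conv_encode(message, generators=((1, 1, 1), (1, 0, 1)), flush=True):
    """Encode a list of bits with a rate 1/n shift-register encoder.

    generators: one connection vector per modulo-2 adder, each of length K;
    position 0 is the leftmost (input) stage. The defaults are assumed to be
    the Figure 7.3 connections.
    """
    K = len(generators[0])
    register = [0] * K                      # all-zeros initial contents
    bits = list(message) + ([0] * (K - 1) if flush else [])
    output = []
    for bit in bits:
        register = [bit] + register[:-1]    # shift one stage to the right
        for g in generators:                # sample each adder in turn
            output.append(sum(r & c for r, c in zip(register, g)) % 2)
    return output


def effective_rate(num_message_bits, K, n):
    """Message bits per code bit when K - 1 flush zeros are appended (k = 1)."""
    return Fraction(num_message_bits, n * (num_message_bits + K - 1))


codeword = conv_encode([1, 0, 1])
print(codeword)                       # [1, 1, 1, 0, 0, 0, 1, 0, 1, 1]
print(effective_rate(3, K=3, n=2))    # 3/10
print(effective_rate(300, K=3, n=2))  # 75/151, i.e. 300/604
```

With only three message bits the two flush zeros cost a large share of the rate; with 300 message bits the rate is already close to 1/2, which is why the truncation period is made long.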

One way to represent the encoder is to specify a set of n connection vectors, one for each of the n modulo-2 adders. Each vector has dimension K and describes the connection of the encoding shift register to that modulo-2 adder. A one in the ith position of the vector indicates that the corresponding stage in the shift register is connected to the modulo-2 adder, and a zero in a given position indicates that no connection exists between the stage and the modulo-2 adder. For the encoder example in Figure 7.3, we can write the connection vector g_1 for the upper connections and g_2 for the lower connections as follows:

g_1 = 1 1 1
g_2 = 1 0 1

Figure 7.3 Convolutional encoder (rate 1/2, K = 3). The upper adder output u_1 is the first code symbol and the lower adder output u_2 is the second code symbol of each output branch word.

Now consider that a message vector m = 1 0 1 is convolutionally encoded with the encoder shown in Figure 7.3. The three message bits are inputted, one at a time, at times t_1, t_2, and t_3, as shown in Figure 7.4. Subsequently, (K - 1) = 2 zeros are inputted at times t_4 and t_5 to flush the register and thus ensure that the tail end of the message is shifted the full length of the register. The output sequence is seen to be 1 1 1 0 0 0 1 0 1 1, where the leftmost symbol represents the earliest transmission. The entire output sequence, including the code symbols produced by flushing, is needed to decode the message. To flush the message from the encoder requires one less zero than the number of stages in the register, or K - 1 flush bits. Another zero input is shown at time t_6, for the reader to verify that the flushing is completed at time t_5. Thus, a new message can be entered at time t_6.

7.2.1.1 Impulse Response of the Encoder

We can approach the encoder in terms of its impulse response, that is, the response of the encoder to a single "one" bit that moves through it. Consider the contents of the register in Figure 7.3 as a one moves through it:

Register      Branch word
contents      u_1   u_2
1 0 0          1     1
0 1 0          1     0
0 0 1          1     1

Input sequence:   1 0 0
Output sequence:  11 10 11

The output sequence for the input "one" is called the impulse response of the encoder. Then, for the input sequence m = 1 0 1, the output may be found by the superposition or the linear addition of the time-shifted input impulses as follows:

Input m          Output
1                11 10 11
0                   00 00 00
1                      11 10 11
Modulo-2 sum:    11 10 00 10 11

Observe that this is the same output as that obtained in Figure 7.4, demonstrating that convolutional codes are linear, just like the linear block codes of Chapter 6. It is from this property of generating the output by the linear addition of time-shifted impulses, or the convolution of the input sequence with the impulse response of the encoder, that we derive the name convolutional encoder. Often, this encoder characterization is presented in terms of an infinite-order generator matrix [6].

Figure 7.4 Convolutionally encoding a message sequence with a rate 1/2, K = 3 encoder. Output sequence: 11 10 00 10 11.

Notice that the effective code rate for the foregoing example with 3-bit input sequence and 10-bit output sequence is k/n = 3/10, quite a bit less than the rate 1/2 that might have been expected from the knowledge that each input data bit yields a pair of output channel bits. The reason for the disparity is that the final data bit into the encoder needs to be shifted through the encoder. All of the output channel bits are needed in the decoding process. If the message had been longer, say 300 bits, the output codeword sequence would contain 604 bits, resulting in a code rate of 300/604, much closer to 1/2.

7.2.1.2 Polynomial Representation

Sometimes, the encoder connections are characterized by generator polynomials, similar to those used in Chapter 6 for describing the feedback shift register implementation of cyclic codes. We can represent a convolutional encoder with a set of n generator polynomials, one for each of the n modulo-2 adders. Each polynomial is of degree K - 1 or less and describes the connection of the encoding shift register to that modulo-2 adder, much the same way that a connection vector does. The coefficient of each term in the (K - 1)-degree polynomial is either 1 or 0, depending on whether a connection exists or does not exist between the shift register and the modulo-2 adder in question. For the encoder example in Figure 7.3, we can write the generator polynomial g_1(X) for the upper connections and g_2(X) for the lower connections as follows:

g_1(X) = 1 + X + X^2
g_2(X) = 1 + X^2

where the lowest-order term in the polynomial corresponds to the input stage of the register. The output sequence is found as follows:

U(X) = m(X) g_1(X) interlaced with m(X) g_2(X)
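The interlacing rule just stated can be sketched as polynomial multiplication over GF(2). This is only an illustrative sketch; the helper names `gf2_poly_mul` and `interlace` are hypothetical, and polynomials are stored as coefficient lists with the lowest-order term first, matching the convention that the lowest-order term corresponds to the input stage.

```python
def gf2_poly_mul(a, b):
    """Multiply two polynomials over GF(2); coefficient lists, lowest order first."""
    product = [0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            product[i + j] ^= ai & bj       # modulo-2 accumulation
    return product


def interlace(*streams):
    """Take one coefficient from each stream in turn, term by term."""
    return [bit for group in zip(*streams) for bit in group]


m = [1, 0, 1]        # m(X) = 1 + X^2
g1 = [1, 1, 1]       # g1(X) = 1 + X + X^2
g2 = [1, 0, 1]       # g2(X) = 1 + X^2

u1 = gf2_poly_mul(m, g1)   # [1, 1, 0, 1, 1]
u2 = gf2_poly_mul(m, g2)   # [1, 0, 0, 0, 1]
print(interlace(u1, u2))   # [1, 1, 1, 0, 0, 0, 1, 0, 1, 1]
```

Multiplying coefficient lists modulo 2 is the same operation as convolving the message with each adder's impulse response, so the result agrees with the output sequence of Figure 7.4.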

First, express the message vector m = 1 0 1 as a polynomial, that is, m(X) = 1 + X^2. We shall again assume the use of zeros following the message bits, to flush the register. Then the output polynomial U(X), or the output sequence U, of the Figure 7.3 encoder can be found for the input message m as follows:

m(X) g_1(X) = (1 + X^2)(1 + X + X^2) = 1 + X + X^3 + X^4
m(X) g_2(X) = (1 + X^2)(1 + X^2) = 1 + X^4

m(X) g_1(X) = 1 + X + 0X^2 + X^3 + X^4
m(X) g_2(X) = 1 + 0X + 0X^2 + 0X^3 + X^4

U(X) = (1, 1) + (1, 0)X + (0, 0)X^2 + (1, 0)X^3 + (1, 1)X^4
U = 1 1   1 0   0 0   1 0   1 1

In this example we started with another point of view, namely, that the convolutional encoder can be treated as a set of cyclic code shift registers. We represented the encoder with polynomial generators as used for describing cyclic codes. However, we arrived at the same output sequence as in Figure 7.4 and at the same output sequence as the impulse response treatment of the preceding section. (For a good presentation of convolutional code structure in the context of linear sequential circuits, see Reference [7].)

7.2.2 State Representation and the State Diagram

A convolutional encoder belongs to a class of devices known as finite-state machines, which is the general name given to machines that have a memory of past signals. The adjective finite refers to the fact that there are only a finite number of unique states that the machine can encounter. What is meant by the state of a finite-state machine? In the most general sense, the state consists of the smallest amount of information that, together with a current input to the machine, can predict the output of the machine. The state provides some knowledge of the past signaling events and the restricted set of possible outputs in the future. A future state is restricted by the past state. For a rate 1/n convolutional encoder, the state is represented by the contents of the rightmost K - 1 stages (see Figure 7.3). Knowledge of the state together with knowledge of the next input is necessary and sufficient to determine the next output. Let the state of the encoder at time t_i be defined as X_i = m_{i-1}, m_{i-2}, . . . , m_{i-K+1}. The ith codeword branch U_i is completely determined by state X_i and the present input bit m_i; thus the state X_i represents the past history of the encoder in determining the encoder output. The encoder state is said to be Markov, in the sense that the probability P(X_{i+1} | X_i, X_{i-1}, . . . , X_0) of being in state X_{i+1}, given all previous states, depends only on the most recent state X_i; that is, the probability is equal to P(X_{i+1} | X_i).

One way to represent simple encoders is with a state diagram; such a representation for the encoder in Figure 7.3 is shown in Figure 7.5. The states, shown in the boxes of the diagram, represent the possible contents of the rightmost K - 1 stages of the register, and the paths between the states represent the output branch words resulting from such state transitions. The states of the register are designated a = 00, b = 10, c = 01, and d = 11; the diagram shown in Figure 7.5 illustrates all the state transitions that are possible for the encoder in Figure 7.3. There are only two transitions emanating from each state, corresponding to the two possible input bits. Next to each path between states is written the output branch word associated with the state transition. In drawing the path, we use the convention that a solid line denotes a path associated with an input bit, zero, and a dashed line denotes a path associated with an input bit, one. Notice that it is not possible in a single transition to move from a given state to any arbitrary state.
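The state-transition description above can be tabulated mechanically. The following sketch assumes the Figure 7.3 connections (g_1 = 1 1 1, g_2 = 1 0 1) and uses hypothetical names (`step`, `transitions`, `NAMES`); it enumerates every state and input bit and lists the resulting next state and output branch word.

```python
from itertools import product

G = ((1, 1, 1), (1, 0, 1))    # assumed Figure 7.3 connections
K = len(G[0])
NAMES = {(0, 0): "a", (1, 0): "b", (0, 1): "c", (1, 1): "d"}


def step(state, bit):
    """Return (next_state, branch_word) for one input bit.

    state holds the rightmost K - 1 register stages, most recent bit first.
    """
    register = (bit,) + tuple(state)
    branch = tuple(sum(r & c for r, c in zip(register, g)) % 2 for g in G)
    return register[:-1], branch   # leftmost K - 1 stages become the new state


transitions = {
    (state, bit): step(state, bit)
    for state in product((0, 1), repeat=K - 1)
    for bit in (0, 1)
}

for (state, bit), (nxt, branch) in sorted(transitions.items()):
    print(f"{NAMES[state]} --input {bit} / output {branch[0]}{branch[1]}--> {NAMES[nxt]}")
```

Each state appears with exactly two outgoing transitions, one per input bit, and the next state is always the current state shifted right with the new bit in front.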
As a consequence of shifting in one bit at a time, there are only two possible state transitions that the register can make at each bit time. For example, if the present encoder state is 00, the only possibilities for the state at the next shift are 00 or 10.

Figure 7.5 Encoder state diagram (rate 1/2, K = 3). Boxes show the encoder state and branch labels show the output branch word; solid lines denote input bit 0 and dashed lines denote input bit 1.

Example 7.1 Convolutional Encoding

For the encoder shown in Figure 7.3, show the state changes and the resulting output codeword sequence U for the message sequence m = 1 1 0 1 1, followed by K - 1 = 2 zeros to flush the register. Assume that the initial contents of the register are all zeros.

Solution

Input     Register    State at    State at        Branch word at time t_i
bit m_i   contents    time t_i    time t_{i+1}    u_1   u_2
-         0 0 0       0 0         0 0             -     -
1         1 0 0       0 0         1 0             1     1
1         1 1 0       1 0         1 1             0     1
0         0 1 1       1 1         0 1             0     1
1         1 0 1       0 1         1 0             0     0
1         1 1 0       1 0         1 1             0     1
0         0 1 1       1 1         0 1             0     1
0         0 0 1       0 1         0 0             1     1

Output sequence: U = 11 01 01 00 01 01 11

Example 7.2 Convolutional Encoding

In Example 7.1 the initial contents of the register are all zeros. This is equivalent to the condition that the given input sequence is preceded by two zero bits (the encoding is a function of the present bit and the K - 1 prior bits). Repeat Example 7.1 with the assumption that the given input sequence is preceded by two one bits, and verify that now the codeword sequence U for input sequence m = 1 1 0 1 1 is different than the codeword found in Example 7.1.

Solution

The entry "x" signifies "don't know."

Input     Register    State at    State at        Branch word at time t_i
bit m_i   contents    time t_i    time t_{i+1}    u_1   u_2
-         1 1 x       1 x         1 1             -     -
1         1 1 1       1 1         1 1             1     0
1         1 1 1       1 1         1 1             1     0
0         0 1 1       1 1         0 1             0     1
1         1 0 1       0 1         1 0             0     0
1         1 1 0       1 0         1 1             0     1
0         0 1 1       1 1         0 1             0     1
0         0 0 1       0 1         0 0             1     1

Output sequence: U = 10 10 01 00 01 01 11

By comparing this result with that of Example 7.1, we can see that each branch word of the output sequence U is not only a function of the input bit, but is also a function of the K - 1 prior bits.

7.2.3 The Tree Diagram

Although the state diagram completely characterizes the encoder, one cannot easily use it for tracking the encoder transitions as a function of time since the diagram cannot represent time history. The tree diagram adds the dimension of time to the state diagram. The tree diagram for the convolutional encoder shown in Figure 7.3 is illustrated in Figure 7.6. At each successive input bit time the encoding procedure can be described by traversing the diagram from left to right, each tree branch describing an output branch word. The branching rule for finding a codeword sequence is as follows: If the input bit is a zero, its associated branch word is found by moving to the next rightmost branch in the upward direction. If the input bit is a one, its branch word is found by moving to the next rightmost branch in the downward direction. Assuming that the initial contents of the encoder are all zeros, the diagram shows that if the first input bit is a zero, the output branch word is 00 and, if the first input bit is a one, the output branch word is 11. Similarly, if the first input bit is a one and the second input bit is a zero, the second output branch word is 10. Or, if the first input bit is a one and the second input bit is a one, the second output branch word is 01. Following this procedure we see that the input sequence 1 1 0 1 1 traces the heavy line drawn on the tree diagram in Figure 7.6. This path corresponds to the output codeword sequence 1 1 0 1 0 1 0 0 0 1.

Figure 7.6 Tree representation of encoder (rate 1/2, K = 3).

The added dimension of time in the tree diagram (compared to the state diagram) allows one to dynamically describe the encoder as a function of a particular input sequence. However, can you see one problem in trying to use a tree diagram for describing a sequence of any length? The number of branches increases as a function of 2^L, where L is the number of branch words in the sequence. You would quickly run out of paper, and patience.

7.2.4 The Trellis Diagram

Observation of the Figure 7.6 tree diagram shows that for this example, the structure repeats itself at time t_4, after the third branching (in general, the tree structure repeats after K branchings, where K is the constraint length). We label each node in the tree of Figure 7.6 to correspond to the four possible states in the shift register, as follows: a = 00, b = 10, c = 01, and d = 11. The first branching of the tree structure, at time t_1, produces a pair of nodes labeled a and b. At each successive branching the number of nodes doubles. The second branching, at time t_2, results in four nodes labeled a, b, c, and d. After the third branching, there are a total of eight nodes: two are labeled a, two are labeled b, two are labeled c, and two are labeled d. We can see that all branches emanating from two nodes of the same state generate identical branch word sequences. From this point on, the upper and the lower halves of the tree are identical. The reason for this should be obvious from examination of the encoder in Figure 7.3. As the fourth input bit enters the encoder on the left, the first input bit is ejected on the right and no longer influences the output branch words. Consequently, the input sequences 1 0 0 x y . . . and 0 0 0 x y . . . , where the leftmost bit is the earliest bit, generate the same branch words after the (K = 3)rd branching. This means that any two nodes having the same state label at the same time t_i can be merged, since all succeeding paths will be indistinguishable.
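The merging argument can be checked numerically. The sketch below assumes the Figure 7.3 connections and an all-zeros starting state; the function name `branch_words` is hypothetical. It follows the two input sequences 0 0 0 x y and 1 0 0 x y through the tree for every choice of x and y, and compares their branch words before and after the Kth branching.

```python
from itertools import product

G = ((1, 1, 1), (1, 0, 1))    # assumed Figure 7.3 connections


def branch_words(bits, initial=(0, 0)):
    """Follow one path through the tree and return its list of branch words."""
    state, words = tuple(initial), []
    for bit in bits:
        register = (bit,) + state
        words.append(tuple(sum(r & c for r, c in zip(register, g)) % 2 for g in G))
        state = register[:-1]
    return words


K = 3
for tail in product((0, 1), repeat=2):           # every choice of x, y
    upper = branch_words((0, 0, 0) + tail)
    lower = branch_words((1, 0, 0) + tail)
    assert upper[:K] != lower[:K]                # paths differ while the first bit is in the register
    assert upper[K:] == lower[K:]                # identical after the Kth branching

for L in (3, 5, 10, 20):
    print(L, "tree paths:", 2 ** L, " states per time step:", 2 ** (K - 1))
```

The same function reproduces the worked examples: with the default all-zeros start and the input 1 1 0 1 1 0 0 it gives the branch words of Example 7.1, and with `initial=(1, 1)` it gives those of Example 7.2. The printed counts show why merging matters: the number of tree paths doubles with every branching, while the number of distinct states stays fixed.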
If we do this to the tree structure of Figure 7.6, we obtain another diagram, called the trellis diagram. The trellis diagram, by exploiting the repetitive structure, provides a more manageable encoder description than does the tree diagram. The trellis diagram for the convolutional encoder of Figure 7.3 is shown in Figure 7.7.

In drawing the trellis diagram, we use the same convention that we introduced with the state diagram: a solid line denotes the output generated by an input bit zero, and a dashed line denotes the output generated by an input bit one. The nodes of the trellis characterize the encoder states; the first row nodes correspond to the state a = 00, the second and subsequent rows correspond to the states b = 10, c = 01, and d = 11. At each unit of time, the trellis requires 2^(K-1) nodes to represent the 2^(K-1) possible encoder states. The trellis in our example assumes a fixed periodic structure after trellis depth 3 is reached (at time t_4). In the general case, the fixed structure prevails after depth K is reached. At this point and thereafter, each of the states can be entered from either of two preceding states. Also, each of the states can transition to one of two states. Of the two outgoing branches, one corresponds to an input bit zero and the other corresponds to an input bit one. On Figure 7.7 the output branch words corresponding to the state transitions appear as labels on the trellis branches.

Figure 7.7 Encoder trellis diagram (rate 1/2, K = 3). Legend: solid line, input bit 0; dashed line, input bit 1.

One time-interval section of a fully formed encoding trellis structure completely defines the code. The only reason for showing several sections is for viewing a code symbol sequence as a function of time. The state of the convolutional encoder is represented by the contents of the rightmost K - 1 stages in the encoder register. Some authors describe the state as the contents of the leftmost K - 1 stages. Which description is correct? They are both correct in the following sense. Every transition has a starting state and a terminating state. The rightmost K - 1 stages describe the starting state for the current input, which is in the leftmost stage (assuming a rate 1/n encoder). The leftmost K - 1 stages represent the terminating state for that transition. A code symbol sequence is characterized by N branches (representing N data bits) occupying N intervals of time and associated with a particular state at each of N + 1 times (from start to finish). Thus, we launch bits at times t_1, t_2, . . . , t_N, and are interested in state metrics at times t_1, t_2, . . . , t_{N+1}. The convention used here is that the current bit is located in the leftmost stage (not on a wire leading to that stage), and the rightmost K - 1 stages start in the all-zeros state. We refer to this time as the start time and label it t_1. We refer to the concluding time of the last transition as the terminating time and label it t_{N+1}.

7.3 FORMULATION OF THE CONVOLUTIONAL DECODING PROBLEM

7.3.1 Maximum Likelihood Decoding

If all input message sequences are equally likely, a decoder that achieves the minimum probability of error is one that compares the conditional probabilities, also called the likelihood functions P(Z | U^(m)), where Z is the received sequence and U^(m) is one of the possible transmitted sequences, and chooses the maximum. The decoder chooses U^(m') if

$$P(Z \mid U^{(m')}) = \max_{\text{over all } U^{(m)}} P(Z \mid U^{(m)}) \qquad (7.1)$$

The maximum likelihood concept, as stated in Equation (7.1), is a fundamental development of decision theory (see Appendix B); it is the formalization of a "common-sense" way to make decisions when there is statistical knowledge of the possibilities. In the binary demodulation treatment in Chapters 3 and 4 there were only two equally likely possible signals, s_1(t) or s_2(t), that might have been transmitted. Therefore, to make the binary maximum likelihood decision, given a received signal, meant only to decide that s_1(t) was transmitted if

$$p(z \mid s_1) > p(z \mid s_2)$$

otherwise, to decide that s_2(t) was transmitted. The parameter z represents z(T), the receiver predetection value at the end of each symbol duration time t = T. However, when applying maximum likelihood to the convolutional decoding problem, we observe that the convolutional code has memory (the received sequence represents the superposition of current bits and prior bits). Thus, applying maximum likelihood to the decoding of convolutionally encoded bits is performed in the context of choosing the most likely sequence, as shown in Equation (7.1). There are typically a multitude of possible codeword sequences that might have been transmitted. To be specific, for a binary code, a sequence of L branch words is a member of a set of 2^L possible sequences. Therefore, in the maximum likelihood context, we can say that the decoder chooses a particular U^(m') as the transmitted sequence if the likelihood P(Z | U^(m')) is greater than the likelihoods of all the other possible transmitted sequences. Such an optimal decoder, which minimizes the error probability (for the case where all transmitted sequences are equally likely), is known as a maximum likelihood decoder. The likelihood functions are given or computed from the specifications of the channel.

We will assume that the noise is additive white Gaussian with zero mean and the channel is memoryless, which means that the noise affects each code symbol independently of all the other symbols. For a convolutional code of rate 1/n, we can therefore express the likelihood as

$$P(Z \mid U^{(m)}) = \prod_{i=1}^{\infty} P(Z_i \mid U_i^{(m)}) = \prod_{i=1}^{\infty} \prod_{j=1}^{n} P(z_{ji} \mid u_{ji}^{(m)}) \qquad (7.2)$$
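To make the sequence-level decision rule concrete, here is a brute-force sketch that evaluates the logarithm of the likelihood for every candidate message and keeps the largest. Several things are assumptions for illustration only and do not come from the text: antipodal signaling (code bit 1 sent as +1, code bit 0 as -1), unit noise standard deviation, a short terminated message, the Figure 7.3 connections, and the names `encode`, `log_likelihood`, and `ml_decode`.

```python
import math
from itertools import product

G = ((1, 1, 1), (1, 0, 1))    # assumed Figure 7.3 connections
K = 3


def encode(message):
    """Rate 1/2 encoder with K - 1 flush zeros; returns a flat list of code bits."""
    state, out = (0,) * (K - 1), []
    for bit in tuple(message) + (0,) * (K - 1):
        register = (bit,) + state
        out.extend(sum(r & c for r, c in zip(register, g)) % 2 for g in G)
        state = register[:-1]
    return out


def log_likelihood(received, code_bits, sigma=1.0):
    """ln P(Z | U): sum of per-symbol Gaussian log-densities (memoryless channel).

    Assumes antipodal signaling: code bit 1 -> +1, code bit 0 -> -1.
    """
    total = 0.0
    for z, bit in zip(received, code_bits):
        s = 1.0 if bit else -1.0
        total += -((z - s) ** 2) / (2 * sigma ** 2) - 0.5 * math.log(2 * math.pi * sigma ** 2)
    return total


def ml_decode(received, num_message_bits):
    """Exhaustively compare all 2^L candidate messages and keep the most likely."""
    candidates = product((0, 1), repeat=num_message_bits)
    return max(candidates, key=lambda m: log_likelihood(received, encode(m)))


# Noisy observation of the codeword for m = 1 0 1; the third sample has the wrong sign.
Z = [0.9, 1.1, -0.2, -1.0, -0.8, -1.2, 1.0, -0.9, 1.1, 0.7]
print(ml_decode(Z, 3))    # (1, 0, 1)
```

Because the channel is memoryless, the product of per-symbol likelihoods becomes a sum of logarithms, and the comparison picks the same sequence either way. The exhaustive search visits all 2^L candidates, so it is practical only for very short messages.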


- 4.ASK, FSK and PSKDocument13 pages4.ASK, FSK and PSKSai raghavendraNo ratings yet

- QP CODE 21103239 DE December 2021Document8 pagesQP CODE 21103239 DE December 2021AsjathNo ratings yet

- Bhise2008 Paper in IeeeDocument6 pagesBhise2008 Paper in IeeeHarith NawfelNo ratings yet

- Design A Full Adder by Using Two Half Adders. (7M, May-19)Document10 pagesDesign A Full Adder by Using Two Half Adders. (7M, May-19)Sri Silpa Padmanabhuni100% (1)

- Maximum Likelihood Decoding of Convolutional CodesDocument45 pagesMaximum Likelihood Decoding of Convolutional CodesShathis KumarNo ratings yet

- 4a Convolution CodeDocument22 pages4a Convolution Codeتُحف العقولNo ratings yet

- CPM DemodulationDocument3 pagesCPM Demodulationakkarthik0072933No ratings yet

- Line CodesDocument18 pagesLine Codesu-rush100% (1)

- ConvolutionalcodesDocument30 pagesConvolutionalcodesprakash_oxfordNo ratings yet

- Fu-Hua Huang Chapter 3. Turbo Code EncoderDocument14 pagesFu-Hua Huang Chapter 3. Turbo Code EncoderAmiel ZalduaNo ratings yet

- Spread - Spectrum Modulation: Chapter-7Document22 pagesSpread - Spectrum Modulation: Chapter-7Deepika kNo ratings yet

- Turbo Codes 1. Concatenated Coding System: RS Encoder Algebraic Decoder Layer 2Document27 pagesTurbo Codes 1. Concatenated Coding System: RS Encoder Algebraic Decoder Layer 2Shathis KumarNo ratings yet

- Analysis and Design of Multicell DC/DC Converters Using Vectorized ModelsFrom EverandAnalysis and Design of Multicell DC/DC Converters Using Vectorized ModelsNo ratings yet

- Leadership Training NarrativeDocument2 pagesLeadership Training NarrativeJenifer PaduaNo ratings yet

- Chapte 2Document58 pagesChapte 2Rabaa DooriiNo ratings yet

- Writing Part-FormatDocument15 pagesWriting Part-FormatHappyGreg360No ratings yet

- Full Download Test Bank For Introduction To Econometrics 4th Edition James H Stock PDF Full ChapterDocument36 pagesFull Download Test Bank For Introduction To Econometrics 4th Edition James H Stock PDF Full Chaptersingsong.curlyu2r8bw100% (18)

- Possible Effects of Potential Lahars From Cotopaxi Volcano On Housing Market PricesDocument12 pagesPossible Effects of Potential Lahars From Cotopaxi Volcano On Housing Market Pricesdarwin chapiNo ratings yet

- Method Validation NotesDocument15 pagesMethod Validation NotesRamling PatrakarNo ratings yet

- Chapter IIDocument21 pagesChapter IIJubert IlosoNo ratings yet

- Opem 311Document6 pagesOpem 311MARITONI MEDALLANo ratings yet

- 2204 MaterialityDocument8 pages2204 MaterialitydeeptimanneyNo ratings yet

- Countering Criticism of The Warren Report (Clayton P. Nurnad and Ned Bennett), CIA File Number 201-289248 (Psyop Against 'Conspiracy Theorists') (1967)Document3 pagesCountering Criticism of The Warren Report (Clayton P. Nurnad and Ned Bennett), CIA File Number 201-289248 (Psyop Against 'Conspiracy Theorists') (1967)Quibus_Licet100% (1)

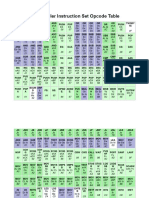

- Intel x86 Assembler Instruction Set Opcode TableDocument9 pagesIntel x86 Assembler Instruction Set Opcode TableMariaKristinaMarasiganLevisteNo ratings yet

- Full Text Anti Graf It TiDocument7 pagesFull Text Anti Graf It TiOlivia AgridulceNo ratings yet

- My Ios TutorialDocument185 pagesMy Ios TutorialBhargav EmnickNo ratings yet

- AbacusDocument13 pagesAbacusdj_jovicaNo ratings yet

- Discrepancies and DivergencesDocument5 pagesDiscrepancies and DivergencesZinck Hansen100% (1)

- Infosys Leadership Development Strategies PDFDocument3 pagesInfosys Leadership Development Strategies PDFanuj.arora02001021No ratings yet

- DLL - Mapeh 4 - Q2 - W6Document6 pagesDLL - Mapeh 4 - Q2 - W6Alexis De LeonNo ratings yet

- Physics ProjectDocument21 pagesPhysics ProjectCoReDevNo ratings yet

- Ayele TilahunDocument81 pagesAyele TilahunJanet Magno De OliveiraNo ratings yet

- JSAloading Test Works ContohDocument2 pagesJSAloading Test Works Contoh6984No ratings yet

- 3RV10314HA10 Datasheet enDocument4 pages3RV10314HA10 Datasheet enGaurav PratapNo ratings yet

- DLL - Mapeh 5 - Q3 - W2Document6 pagesDLL - Mapeh 5 - Q3 - W2Laine Agustin SalemNo ratings yet

- What Is Neo-Liberalism FINALDocument21 pagesWhat Is Neo-Liberalism FINALValentino ArisNo ratings yet

- Seyedeh Fashandi Executive Resume FINALDocument2 pagesSeyedeh Fashandi Executive Resume FINALsfashandi123No ratings yet

- Reservoir EngineeringDocument18 pagesReservoir EngineeringshanecarlNo ratings yet

- Mutual Inhibition, Competition, and Periodicity in A Two Species Chemostat-Like SystemDocument10 pagesMutual Inhibition, Competition, and Periodicity in A Two Species Chemostat-Like Systemmoustafa.mehanna7564No ratings yet

- 11 Grade Instructional Pacing Guide: High School Georgia Standards of Excellence American Literature Curriculum MapDocument12 pages11 Grade Instructional Pacing Guide: High School Georgia Standards of Excellence American Literature Curriculum Mapapi-262173560No ratings yet