Informatica Ques

Uploaded by Ashok Kumar

Normalizer: what exactly are Occurs and Levels in the Normalizer?

In the following scenarios, what will the Occurs and Level values be?

1. Source: Id, Name, Age, Marks1, Marks2, Marks3, Marks4
   Output should be: Id, Name, Age, Marks (normalized)

2. Source:

   Id  Name    Marks
   1   Rahul   50
   1   Rahul   20
   2   Sachin  40
   2   Sachin  60
   3   Rohit   80

   Output should be:

   Id  Name    Marks1  Marks2
   1   Rahul   50      20
   2   Sachin  40      60
   3   Rohit   80      <NULL>

To achieve the second result, use a Sorter, an Expression and a Transaction Control transformation, in that order. Sort the incoming data by Id and Name so that all the records for a single person are in sequence. In the Expression, check whether Id and Name have changed compared with the previous record. If they have not changed, add a new port for the new mark and pass the record to the Transaction Control, marked as not changed. If they have changed, flag the record as changed and pass it to the Transaction Control. In the Transaction Control, check the change flag: if it says changed, commit up to the previous record.

You need a Normalizer transformation if you have to achieve the following type of requirement:

Source records:

id  m1   m2   m3
1   100  200  300
2   400  500  600

Target records:

id  m
1   100
1   200
1   300
2   400
2   500
2   600
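Both directions discussed in this thread can be modelled in plain Python to check the expected output. This is a rough sketch, not the Informatica implementation: `normalize` plays the role of a Normalizer whose marks column has Occurs = 3 (one output row per occurrence; the generated key and GCID ports are left out), and `denormalize` mimics the Sorter + Expression approach of scenario 2 by grouping sorted rows on (Id, Name) and padding missing marks with None (<NULL>). The function names and tuple layouts are assumptions for illustration.

```python
from itertools import groupby
from operator import itemgetter


def normalize(rows, occurs):
    """Columns -> rows: (id, m1, ..., mN) becomes N rows of (id, mark).

    Rough model of a Normalizer with the marks column set to Occurs = occurs.
    """
    out = []
    for row in rows:
        key, marks = row[0], row[1:1 + occurs]
        for mark in marks:
            out.append((key, mark))
    return out


def denormalize(rows, width):
    """Rows -> columns: (id, name, mark) rows become (id, name, mark1, ..., markN).

    Rows are sorted on (id, name) first, like the Sorter; Python's sort is
    stable, so each person's marks keep their original order. Missing slots
    become None, the equivalent of <NULL>.
    """
    out = []
    by_person = itemgetter(0, 1)
    for (pid, name), group in groupby(sorted(rows, key=by_person), key=by_person):
        marks = [r[2] for r in group][:width]
        marks += [None] * (width - len(marks))
        out.append((pid, name, *marks))
    return out


print(normalize([(1, 100, 200, 300), (2, 400, 500, 600)], occurs=3))
# [(1, 100), (1, 200), (1, 300), (2, 400), (2, 500), (2, 600)]

scores = [(1, "Rahul", 50), (1, "Rahul", 20), (2, "Sachin", 40),
          (2, "Sachin", 60), (3, "Rohit", 80)]
print(denormalize(scores, width=2))
# [(1, 'Rahul', 50, 20), (2, 'Sachin', 40, 60), (3, 'Rohit', 80, None)]
```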

The Normalizer example above is essentially the reverse of your requirement.
------------------------------------------------------------
How do you send an email if the source file does not exist?

You can achieve this with a combination of Event-Wait, Timer and Assignment tasks.

1) Create a workflow variable $$Count = 0.
2) In the Event-Wait task, give the path of your source file.
3) Create the workflow with two flows:

Start ---> Event-Wait ---> Assignment task ($$Count = 1) ---> Sessions
Start ---> Timer ---> link condition ($Timer.Status = SUCCEEDED AND $$Count = 0) ---> Email task

Let me know if you need any other info.

Here the Timer task (one hour) is connected in parallel to the Event-Wait task, both starting from Start. So your workflow waits up to one hour for the file. If you don't get the file within one hour, the Email task runs and sends an email to the third-party vendor and the support team, saying to abort the workflow, etc. If you get the file within the hour, the workflow automatically moves on to the next task; there is no need for an Event-Raise task. For more info, see the Informatica help PDF file.
------------------------------------------------------------
Transaction Control transformation?

Can anyone shed some light on the Transaction Control transformation? I'm expecting one simple example. Also, what are effective and ineffective Transaction Control transformations?

If you want to commit to the target based on some condition, you go for a Transaction Control transformation. Example: take the EMP table that comes with the standard Oracle installation as your source. Your output will be a set of flat files, with a separate file generated for each department: account.txt, marketing.txt, etc.

A Transaction Control transformation becomes ineffective when a transformation between it and the target, such as an Aggregator with the All Input transformation scope, drops the transaction boundaries it defines; this is what can happen when you place it in the middle of your mapping. Basically, it is used just before the records get loaded into the target table, to control the records entering the target table. When you find a record that is not supposed to enter the target table, for example when a column that is not a primary key is null, you can roll back all the records loaded up to that point.
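To make the EMP example concrete, here is a minimal Python model of Sorter ---> Transaction Control ---> flat-file target, assuming the target has a FileName port so that each commit starts a new file. The `(dept_name, employee_name)` row layout and the `<dept>.txt` naming are hypothetical. In the mapping itself, the condition would be something like `IIF(DEPT_CHANGED, TC_COMMIT_BEFORE, TC_CONTINUE_TRANSACTION)`, where `DEPT_CHANGED` is a hypothetical flag port computed in an upstream Expression.

```python
from typing import Dict, Iterable, List, Optional, Tuple

Row = Tuple[str, str]  # hypothetical layout: (dept_name, employee_name)


def split_by_dept(rows: Iterable[Row]) -> Dict[str, List[str]]:
    """Model of one output file per department.

    Rows must already be sorted by department, as a Sorter would do. When the
    department changes, the previous "file" is committed and a new one starts,
    which is what TC_COMMIT_BEFORE does for the first row of a new group.
    """
    files: Dict[str, List[str]] = {}
    prev_dept: Optional[str] = None
    current = ""
    for dept, ename in rows:
        if dept != prev_dept:
            # Department changed: commit and start a new file named after it.
            # With unsorted input, a repeated department would restart its file.
            current = f"{dept.lower()}.txt"
            files[current] = []
            prev_dept = dept
        files[current].append(ename)
    return files


emp = [("SALES", "ALLEN"), ("ACCOUNTING", "KING"), ("ACCOUNTING", "CLARK")]
print(split_by_dept(sorted(emp)))
# {'accounting.txt': ['CLARK', 'KING'], 'sales.txt': ['ALLEN']}
```

The sort in the usage line stands in for the Sorter; without it, the commit-on-change logic would split a department across several transactions.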
------------------------------------------------------------
How do you change a parameter when you move it from development to production?
How do you retain a variable's value when you move it from development to production?
How do you reset a Sequence Generator value when you move it from development to production?
How do you delete duplicate values in UNIX?
How do you find the number of rows committed to the target when a session fails?
How do you remove duplicate records from a flat file (other than by using a Sorter transformation and mapping variables)?
How do you generate a sequence of values when the target has more than 2 billion records? (With a Sequence Generator we can generate only up to 2 billion values.)
I have to generate a target field in Informatica that doesn't exist in the source table: a batch number. There are 1000 rows altogether. The first 100 rows should have the same batch number, 100, the next 100 should have batch number 101, and so on. How can we do this using Informatica?
Let's say we have a flat file in the source system and it is in the correct path, but when we run the workflow we get the error "File Not Found". What might be the reason?
How do you load 3 unstructured flat files into a single target file?
There are 4 columns in the table: Store_id, Item, Qty, Price.

Store_id  Item     Qty  Price
101       battery  3    2.99
101       battery  1    3.19
101       battery  2    2.59

I want the output to be like this:

Store_id  Item     Qty  Price
101       battery  3    2.99
101       battery  1    3.19
101       battery  2    2.59
101       battery  2    17.34

How can we do this using an Aggregator?

Number of rows committed when a session fails: check the session log file.

Removing duplicate records from a flat file: use a Dynamic Lookup, Sorter and Aggregator.

Generating more than 2 billion sequence values: create a stored procedure at the database level and call it.

Batch number: Source > Sorter > Sequence Generator (generates the numbers) > Expression (batch number, using DECODE) > Target. Expression:

DECODE(TRUE,
       NEXTVAL <= 100, 100,
       NEXTVAL > 100 AND NEXTVAL <= 200, 101,
       NEXTVAL > 200 AND NEXTVAL <= 300, 102,
       ...
       NEXTVAL > 900 AND NEXTVAL <= 1000, 109)
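A quick way to check the batch-number logic outside Informatica is to model it in Python. The sketch below computes the same values as the DECODE for NEXTVAL = 1 to 1000, once with the range-by-range chain and once with the closed form `100 + (NEXTVAL - 1) // 100`. The matching Informatica expression would presumably be `100 + TRUNC((NEXTVAL - 1) / 100)`, which avoids listing every range; treat that as an assumption to test in your own environment. The function and parameter names are illustrative.

```python
def batch_number(nextval: int, first_batch: int = 100, batch_size: int = 100) -> int:
    """Rows 1-100 -> 100, rows 101-200 -> 101, ..., rows 901-1000 -> 109."""
    if nextval < 1:
        raise ValueError("NEXTVAL is expected to start at 1")
    return first_batch + (nextval - 1) // batch_size


def batch_number_decode(nextval: int) -> int:
    """Same result written like the DECODE(TRUE, ...) chain of ranges."""
    for upper, batch in zip(range(100, 1001, 100), range(100, 110)):
        if nextval <= upper:
            return batch
    raise ValueError("only 1000 rows are expected")


batches = [batch_number(n) for n in range(1, 1001)]
# Both formulations agree for every row
assert batches == [batch_number_decode(n) for n in range(1, 1001)]
print(batches[0], batches[99], batches[100], batches[999])  # 100 100 101 109
```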

Flat file in the correct path, but the workflow fails with "File Not Found": most likely the source file name was not entered properly at the session level.

Loading 3 unstructured flat files into a single target file: use the Indirect source file type option.

Store totals with an Aggregator (the Store_id / Item / Qty / Price question): Source > Aggregator (group by Store_id, Item, Qty) > Target. Tip: the Aggregator will sort the data in descending order if you don't use sorted input.

Here are the answers to the first 4 questions.

Changing parameters from development to production: which parameters are you talking about? Relational connection parameters, workflow parameters, or something else? As a general concept, we can also move the parameter files to the production box.

Retaining variable values from development to production: while migrating, select the Advanced option when importing the mapping. After that, it will prompt you with an option to retain variable values; just check the box and proceed.

Resetting Sequence Generator values from development to production: in a similar way, you will get an option to retain the Sequence Generator values.

Deleting duplicate values in UNIX: what does that mean? Do you mean scripts? UNIX is a file system, so what do duplicate values mean?

In the first question, I am asking about mapping parameters and variables. As for the last one: how do you delete duplicate records in UNIX? (This question was asked in an interview.)

I guess we can write a shell script to do this. Is there any other way?

OK. For mapping variables, while migrating it will prompt you with a "retain mapping variable" option; just check that option, so the values already in the target repository are kept.

Generally, parameters should be generated through a mapping; this is one of the best practices of development. If this is done, you will not face such issues while migrating. Carrying sequence numbers over is conceptually possible, but I have never moved sequence numbers from Dev to Prod; nobody uses the Dev sequence numbers in Prod. If you still want to use the same values, you can do as Kumara said.

For removing duplicates, the optimized way is to use an Expression, which never needs to build a cache. Using a UNIX script, we can use diff on the files, which is the fastest way of all.

1) Can we update data in the target without using an Update Strategy transformation?
2) How will you populate the time dimension table?
3) Suppose one flat file takes 3 hours to load. How can you reduce that loading time to 1 hour?
------------------------------------------------------------
p://manudwhtech.blogspot.com
------------------------------------------------------------
ID  NAME
10  SACHIN TENDULKAR
20  APJ ABDUL KALAM
30  SANIA

OUTPUT --> TARGET:

ID  NAME
10  SACHIN TENDULKAR
20  APJ ABDUL KALAM
30  SANIA
------------------------------------------------------------
How do I keep track of how many rows have passed through an Expression transformation?

Suppose I have 10 rows in my source and they all pass through an Expression transformation. I need to keep a count variable that updates itself as each row passes through; that's my understanding of how to implement it. Please propose a solution: how can we achieve this?
------------------------------------------------------------
How do I find out the size of a flat file loaded from the source? I mean, is there anywhere in the repository tables where I can find it?

Use ls -s (size in blocks) or ls -l (size in bytes) to find the size of the flat files. The Informatica metadata table REP_SESS_LOG stores only the number of successful rows (SUCCESSFUL_ROWS), and OPB_FILE_DESC stores the file properties (delimiter, rows to skip, code page, etc.). The Informatica metadata tables never store the size of a flat file, because the size is dynamic.
------------------------------------------------------------
Suppose I have 2 records per ticket:

Ticket  CURR  VALUE
123456  GBP   200
123456  EUR   400

I need to implement the scenario so that the currency in the final column is GBP and the value 400 is converted to GBP (say, 250). Then 200 + 250 is the final value, and the currency populated for this particular ticket is GBP:

Ticket  CURR  VALUE
123456  GBP   450

Source (flat file) ---> SQ ---> Expression (using a DECODE statement) ---> Target (flat file). Let me know if you need any other information.
------------------------------------------------------------
I am using mapping variables, and at the end of the session run I don't want the values to be saved to the repository. Each time the session starts, it should take the initial value 0 and run the mapping. Can this be accomplished without using a parameter file? PS: The help file says that we can reset all variable values in the Workflow Manager. I am not sure how to accomplish this.

Hi, in the Workflow Manager, right-click the particular session and click View Persistent Values. You will see the current value of the mapping variable.
You can reset the values of the particular variable there.
------------------------------------------------------------
http://prdeepakbabu.wordpress.com/
------------------------------------------------------------
About pre-SQL and post-SQL in the Source Qualifier

Hi, can anyone explain pre-SQL and post-SQL in the Source Qualifier, and in which situations we use them?

You can specify pre- and post-session SQL in the Source Qualifier transformation and in the target instance when you create a mapping. When you create a Session task in the Workflow Manager, you can override the SQL commands on the Mapping tab. You might want to use these commands to drop indexes on the target before the session runs, and then recreate them when the session completes. The Integration Service runs pre-session SQL commands before it reads the source, and post-session SQL commands after it writes to the target.
------------------------------------------------------------
What exactly are you looking for in the SQL transformation? Here is a little overview of the SQL transformation.

Two modes:

1) Script mode: runs scripts that are located externally. You pass the script name to the transformation.
   - It outputs one row for each input row.
   - Ports:
     ScriptName (input)
     ScriptResult (output): returns PASSED or FAILED
     ScriptError (output): returns the error

2) Query mode: runs queries that you define in the editor. You can pass strings or parameters to the query (dynamic query). You can output multiple rows when the query has a SELECT statement.
   - Static query
   - Dynamic query:
     Full query: put ~Query_Port~ in the query to pass the full query to the transformation. You can pass more than one query statement. If the query has a SELECT, you have to configure the output ports.
     Partial query: create a port, write the SQL, and use ~Table_Port~ to substitute the value.

Connection type: specify a connection object, or pass the database connection to the transformation at run time.

The number of output ports should match the number of columns in the SELECT.

Default ports: SQLError, NumRowsAffected
------------------------------------------------------------
Field value insertion using a parameter file

I am facing a problem with parameterization. I have about 5-10 mappings, and all of their target tables have a common field, PROID. I need to insert the value ID123 into PROID in all the tables using a parameter, without hard coding. Can anyone tell me how this can be achieved with a single parameter file for all 10 mappings?

Create one common parameter file for all your 10 mappings and use the same parameter in all of them. In each mapping, create one output port and assign the parameter value to it.
------------------------------------------------------------
PMREP command

Hi all,

I have exported my file definition using the pmrep ObjectExport command. After making some modifications, when I tried to import it with the ObjectImport command, it asked me for a control file. What is the control file, and where is it stored in the Informatica installation directories?
------------------------------------------------------------
Exclude the last 5 rows from a flat file

Hi, can anyone help with the logic to exclude the last 5 rows from a flat file when the number of source rows varies?

Use an Expression transformation: add a new row_counter column to the stream and populate it. It's a simple variable port with logic along the lines of row_counter = row_counter + 1. Once you have a row number for each row, use a Filter to filter out the last 5 rows. Note that this needs the final value of row_counter (the total row count): only the rows numbered up to (total - 5) should pass.

SRC -> SQ -> SEQGEN1 -> EXP1 -> SORTER -> SEQGEN2 -> EXP2 -> FILTER -> TARGET

1. Pull all the ports from the Source Qualifier into EXP1, and create an SNO port in EXP1.
2. Connect the NEXTVAL port of SEQGEN1 to the SNO port in EXP1. Check the Reset option in the SEQGEN1 properties.
3. Drag all the ports from EXP1 to the SORTER. Select SNO as the key (descending).
4. Pull all the ports from the SORTER into EXP2, and create a SEQNO port in EXP2.
5. Connect the NEXTVAL port of SEQGEN2 to the SEQNO port in EXP2. Check the Reset option in the SEQGEN2 properties.
6. Drag all the ports from EXP2 to the FILTER. Set the filter condition to SEQNO > 5.
7. Connect the required ports to the target.
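The seven steps can be modelled in Python to see why they work: number the rows, reverse them, number them again, and drop the first five of the reversed stream. The function name is hypothetical, and the final re-sort is an extra step that is not in the recipe: as written, the mapping would send the remaining rows to the target in descending SNO order, so a second Sorter on SNO ascending is presumably needed if the original order matters.

```python
def drop_last_n(rows, n=5):
    """Model of SRC->SQ->SEQGEN1->EXP1->SORTER->SEQGEN2->EXP2->FILTER->TARGET."""
    # SEQGEN1 + EXP1: SNO = 1, 2, 3, ... in source order
    numbered = list(enumerate(rows, start=1))
    # SORTER: SNO descending, so the last source row comes first
    numbered.sort(key=lambda pair: pair[0], reverse=True)
    # SEQGEN2 + EXP2: SEQNO = 1, 2, 3, ... in the reversed order
    renumbered = [(seqno, sno, row) for seqno, (sno, row) in enumerate(numbered, start=1)]
    # FILTER: SEQNO > n keeps everything except the last n source rows
    kept = [(sno, row) for seqno, sno, row in renumbered if seqno > n]
    # Extra step, not in the original recipe: restore source order by SNO
    kept.sort(key=lambda pair: pair[0])
    return [row for _, row in kept]


lines = [f"row{i}" for i in range(1, 9)]
print(drop_last_n(lines))  # ['row1', 'row2', 'row3']
```

Because the row count is only known once the whole file has been read, the Sorter acts as the blocking step here, which is the same role the "total row count" plays in the row_counter approach.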

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5814)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1092)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (844)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (590)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (897)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (540)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (348)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (822)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (122)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (401)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- The Hunger Games: Catching Fire: Using Digital and Social Media For Brand StorytellingDocument9 pagesThe Hunger Games: Catching Fire: Using Digital and Social Media For Brand StorytellingMo MoNo ratings yet

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- Amanda NG Zixuan: Education and TrainingDocument2 pagesAmanda NG Zixuan: Education and Trainingapi-272697715No ratings yet

- Piping Class: PROJ: 2963 REV: 1 DOC: PPAG-100-ET-C-009Document5 pagesPiping Class: PROJ: 2963 REV: 1 DOC: PPAG-100-ET-C-009Santiago GarciaNo ratings yet

- Stoicism and Anti-Stoicism in European Philosophy and Political Thought, 1640-1795Document163 pagesStoicism and Anti-Stoicism in European Philosophy and Political Thought, 1640-1795HadaixNo ratings yet

- Extensive and IntensiveDocument16 pagesExtensive and IntensiveWaleed GujjarNo ratings yet

- Vespa GTV250 Workshop ManualDocument308 pagesVespa GTV250 Workshop Manuallynhaven1No ratings yet

- CT705UN (G) : Commercial Flushometer Floor-Mounted Toilet - 1.0/1.28/1.6 GPFDocument2 pagesCT705UN (G) : Commercial Flushometer Floor-Mounted Toilet - 1.0/1.28/1.6 GPFroberto carlos ArceNo ratings yet

- Introduction 1 FaircloughDocument8 pagesIntroduction 1 FaircloughKashif MehmoodNo ratings yet

- Example Checklist For Piping and HVAC Drawings in Interiour Design ProjectsDocument2 pagesExample Checklist For Piping and HVAC Drawings in Interiour Design Projectskhanh123ctmNo ratings yet

- Basic Calculus Module PDFDocument31 pagesBasic Calculus Module PDFJesse Camille Ballesta ValloNo ratings yet

- ITS665dm Topic2-DataUnderstandingDocument53 pagesITS665dm Topic2-DataUnderstandingMuhammad FadzreenNo ratings yet

- Cowper & Hall (2012) Aspects of Individuation.Document27 pagesCowper & Hall (2012) Aspects of Individuation.Alan MottaNo ratings yet

- CNC PDFDocument99 pagesCNC PDFAdrianNo ratings yet

- MGT162 Group Project - OCTOBER 2022Document3 pagesMGT162 Group Project - OCTOBER 2022mobile hairisNo ratings yet

- Aspect: Euro Modular Frontplates Accessory ModuleDocument1 pageAspect: Euro Modular Frontplates Accessory ModuleNouh RaslanNo ratings yet

- Project 3Document65 pagesProject 3Soham DalviNo ratings yet

- NTSE Stage 1 Delhi Solved Paper 2014Document37 pagesNTSE Stage 1 Delhi Solved Paper 2014ramar.r.k9256No ratings yet

- Assessment Practices - TutorDocument38 pagesAssessment Practices - Tutorszulkifli_14No ratings yet

- Ergonomics - Posture: ChairDocument3 pagesErgonomics - Posture: ChairZegera MgendiNo ratings yet

- Von Roll Annual Report 2012 EDocument103 pagesVon Roll Annual Report 2012 Eraul_beronNo ratings yet

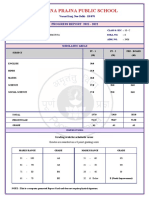

- Poorna Prajna Public School: Progress Report 2021 - 2022Document1 pagePoorna Prajna Public School: Progress Report 2021 - 2022SwagBeast SKJJNo ratings yet

- SOP For Operation and Maintenance of Water Purification SystemDocument2 pagesSOP For Operation and Maintenance of Water Purification SystemSan ThisaNo ratings yet

- International Standard: Iso/Ieee 11073-20601Document15 pagesInternational Standard: Iso/Ieee 11073-20601Sean TinsleyNo ratings yet

- Privacy and Security in AadharDocument5 pagesPrivacy and Security in Aadhar046-ESHWARAN SNo ratings yet

- The Simple Present of The Verb To BeDocument4 pagesThe Simple Present of The Verb To Beultraline1500No ratings yet

- Sor - WRD Gob - 01 - 10 - 12Document383 pagesSor - WRD Gob - 01 - 10 - 12Abhishek sNo ratings yet

- Rab Type 45Document64 pagesRab Type 45Ichaa Cullen-way WilliamsNo ratings yet

- CAPgemini SAPDocument64 pagesCAPgemini SAPFree LancerNo ratings yet

- Maintaining Privacy in Medical Imaging With Federated Learning Deep Learning Differential Privacy and Encrypted ComputationDocument6 pagesMaintaining Privacy in Medical Imaging With Federated Learning Deep Learning Differential Privacy and Encrypted ComputationDr. V. Padmavathi Associate ProfessorNo ratings yet

- TajidDocument4 pagesTajidIrfan Ullah100% (1)