VOLUME 88, NUMBER 4

PHYSICAL REVIEW LETTERS

28 JANUARY 2002

Language Trees and Zipping

Dario Benedetto,1,* Emanuele Caglioti,1 and Vittorio Loreto2,3

1 La Sapienza University, Mathematics Department, Piazzale Aldo Moro 5, 00185 Rome, Italy
2 La Sapienza University, Physics Department, Piazzale Aldo Moro 5, 00185 Rome, Italy
3 INFM, Center for Statistical Mechanics and Complexity, Rome, Italy

(Received 29 August 2001; revised manuscript received 13 September 2001; published 8 January 2002)

In this Letter we present a very general method for extracting information from a generic string of characters, e.g., a text, a DNA sequence, or a time series. Based on data-compression techniques, its key point is the computation of a suitable measure of the remoteness of two bodies of knowledge. We present the application of the method to linguistically motivated problems, featuring highly accurate results for language recognition, authorship attribution, and language classification.

DOI: 10.1103/PhysRevLett.88.048702
PACS numbers: 89.70.+c, 01.20.+x, 05.20.-y, 05.45.Tp

Many systems and phenomena in nature are often represented in terms of sequences or strings of characters. In experimental investigations of physical processes, for instance, one typically has access to the system only through a measuring device which produces a time record of a certain observable, i.e., a sequence of data. On the other hand, other systems are intrinsically described by a string of characters, e.g., DNA and protein sequences, language.

When analyzing a string of characters the main question is how to extract the information it carries. For a DNA sequence this would correspond to the identification of the subsequences codifying the genes and their specific functions. For a written text, on the other hand, one is interested in understanding it, i.e., recognizing the language in which the text is written, its author, the subject treated, and possibly the historical background.

The problem being cast in such a way, one would be tempted to approach it from a very interesting point of view: that of information theory [1,2]. In this context the word information acquires a very precise meaning, namely that of the entropy of the string, a measure of the surprise the source emitting the sequences can reserve to us. As is evident, the word information is used with different meanings in different contexts.

Suppose now for a while that we have the ability to measure the entropy of a given sequence (e.g., a text). Is it possible to obtain from this measure the information (in the semantic sense) we were trying to extract from the sequence? This is the question we address in this paper. In particular, we define in a very general way a concept of remoteness (or similarity) between pairs of sequences based on their relative informational content. We devise, without loss of generality with respect to the nature of the strings of characters, a method to measure this distance based on data-compression techniques.
The specific question we address is whether this informational distance between pairs of sequences is representative of the real semantic difference between the sequences. It turns out that the answer is yes, at least in the framework of the examples on which we have implemented the method. We have chosen for our tests some textual corpora and we have evaluated our method on the basis of the results obtained on some linguistically motivated problems. Is it possible to automatically recognize the language in which a given text is written? Is it possible to automatically guess the author of a given text? Last but not least, is it possible to identify the subject treated in a text in a way that permits its automatic classification among many other texts in a given corpus? In all the cases the answer is positive, as we shall show in the following.

Before discussing the details of our method let us briefly recall the definition of entropy, which is closely related to a very old problem, that of transmitting a message without losing information, i.e., the problem of efficient encoding [3].

The problem of the optimal coding for a text (or an image or any other kind of information) has been studied enormously in the last century. In particular Shannon [1] discovered that there is a limit to the possibility of encoding a given sequence. This limit is the entropy of the sequence. There are many equivalent definitions of entropy, but probably the best definition in this context is the Chaitin-Kolmogorov entropy [4–7]: the entropy of a string of characters is the length (in bits) of the smallest program which produces the string as output. This definition is really abstract. In particular it is impossible, even in principle, to find such a program. Nevertheless there are algorithms explicitly conceived to approach this theoretical limit. These are the file compressors or zippers. A zipper takes a file and tries to transform it into the shortest possible file. Obviously this is not the best way to encode the file, but it represents a good approximation of it. One of the first compression algorithms is the Lempel and Ziv algorithm (LZ77) [8,9] (used for instance by gzip, zip, and Stacker). It is interesting to briefly recall how it works. The LZ77 algorithm finds duplicated strings in the input data.
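
As a rough illustration of the zipper idea, the sketch below uses Python's standard `zlib` module, whose DEFLATE format combines LZ77-style matching with Huffman coding, as a stand-in for the zippers named above. The helper name `zipped_bits_per_byte` and the two test inputs are illustrative assumptions, not part of the original work. It compares a highly repetitive byte string with random bytes of the same length.

```python
import random
import zlib


def zipped_bits_per_byte(data: bytes) -> float:
    """Length of the zlib-compressed data, in bits, per input byte."""
    return 8 * len(zlib.compress(data, 9))  / len(data)


rng = random.Random(0)  # fixed seed so the run is repeatable

# Highly repetitive input: the same phrase over and over, so almost
# everything can be encoded as a pointer to an earlier copy.
repetitive = b"language trees and zipping " * 400

# Same length, but every byte drawn uniformly at random: there are
# essentially no duplicated strings for the matcher to point back to.
noise = bytes(rng.getrandbits(8) for _ in range(len(repetitive)))

print(f"repetitive: {zipped_bits_per_byte(repetitive):.3f} bits per byte")
print(f"noise:      {zipped_bits_per_byte(noise):.3f} bits per byte")
```

The repetitive input should shrink to a small fraction of a bit per byte, while the random input should stay close to 8 bits per byte.
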
More precisely, the algorithm looks for the longest match with the beginning of the lookahead buffer and outputs a pointer to that match given by two numbers: a distance, representing how far back the match starts, and a length, representing the number of matching characters. For example, the match of the sequence $s_1 \ldots s_n$ will be represented by the pointer $(d, n)$, where $d$ is the distance at which the match starts. The matching sequence will then be encoded with a number of bits equal to $\log_2 d + \log_2 n$, i.e., the number of bits necessary to encode $d$ and $n$. Roughly speaking, the average distance between two consecutive occurrences of $s_1 \ldots s_n$ is of the order of the inverse of its occurrence probability. Therefore the zipper will encode more frequent sequences with few bytes and will spend more bytes only for rare sequences.

The LZ77 zipper has the following remarkable property: if it encodes a sequence of length $L$ emitted by an ergodic source whose entropy per character is $s$, then the length of the zipped file divided by the length of the original file tends to $s$ when the length of the text tends to $\infty$ (see [8,10], and references therein). In other words, it does not encode the file in the best way, but it does it better and better as the length of the file increases.

The compression algorithms then provide a powerful tool for the measure of the entropy, and the fields of application are innumerable, ranging from the theory of dynamical systems [11] to linguistics and genetics [12]. Therefore the first conclusion one can draw is about the possibility of measuring the entropy of a sequence simply by zipping it.

In this paper we exploit this kind of algorithm to define a concept of remoteness between pairs of sequences. An easy way to understand where our definitions come from is to recall the notion of relative entropy, whose essence can be easily grasped with the following example.

Let us consider two ergodic sources $\mathcal{A}$ and $\mathcal{B}$ emitting sequences of 0 and 1: $\mathcal{A}$ emits a 0 with probability $p$ and a 1 with probability $1 - p$, while $\mathcal{B}$ emits a 0 with probability $q$ and a 1 with probability $1 - q$. As already described, the compression algorithm applied to a sequence emitted by $\mathcal{A}$ will be able to encode the sequence almost optimally, i.e., coding a 0 with $-\log_2 p$ bits and a 1 with $-\log_2 (1 - p)$ bits.
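
The ideal code lengths $-\log_2 p$ and $-\log_2 (1 - p)$, and the claim that a zipper approaches the entropy, can be probed numerically. The following is a minimal sketch, assuming Python's `zlib` (DEFLATE) as the zipper and a pseudo-random generator as the source; the function names, sequence length, and seed are hypothetical choices. DEFLATE has a 32 KiB window and caps the match length at 258, so its output is expected to stay somewhat above the entropy rather than converge to it.

```python
import math
import random
import zlib


def entropy_bits(p: float) -> float:
    """Entropy per character of a source emitting 0 with probability p.

    This is the average cost of the ideal code: -log2(p) bits for a 0
    and -log2(1 - p) bits for a 1.
    """
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def zipped_bits_per_char(p: float, length: int = 100_000, seed: int = 0) -> float:
    """Emit `length` characters from the source, zip them, return bits per character."""
    rng = random.Random(seed)
    text = "".join("0" if rng.random() < p else "1" for _ in range(length))
    return 8 * len(zlib.compress(text.encode("ascii"), 9)) / length


for p in (0.5, 0.2, 0.1):
    print(f"p = {p}: entropy {entropy_bits(p):.3f}, "
          f"zipped {zipped_bits_per_char(p):.3f} bits per character")
```

Skewed sources (small `p`) should compress to fewer bits per character than the balanced one, mirroring the ordering of the entropies.
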
The coding that is optimal for $\mathcal{A}$ will not be the optimal one for the sequence emitted by $\mathcal{B}$. In particular, the entropy per character of the sequence emitted by $\mathcal{B}$ in the coding optimal for $\mathcal{A}$ will be $-q \log_2 p - (1 - q) \log_2 (1 - p)$, while the entropy per character of the sequence emitted by $\mathcal{B}$ in its optimal coding is $-q \log_2 q - (1 - q) \log_2 (1 - q)$. The number of bits per character wasted to encode the sequence emitted by $\mathcal{B}$ with the coding optimal for $\mathcal{A}$ is the relative entropy (see Kullback-Leibler [13]) of $\mathcal{A}$ and $\mathcal{B}$,

$$S_{AB} = -q \log_2 \frac{p}{q} - (1 - q) \log_2 \frac{1 - p}{1 - q}.$$

There exist several ways to measure the relative entropy (see, for instance, [10,14]). One possibility is of course to follow the recipe described in the previous example: using the optimal coding for a given source to encode the messages of another source. The path we follow is along this stream. In order to define the relative entropy between two sources $\mathcal{A}$ and $\mathcal{B}$ we extract a long sequence $A$ from the source $\mathcal{A}$ and a long sequence $B$ as well as a small sequence $b$ from the source $\mathcal{B}$. We create a new sequence $A + b$ by simply appending $b$ after $A$. The sequence $A + b$ is now zipped, for example using gzip, and the measure of the length of $b$ in the coding optimized for $A$ will be $\Delta_{Ab} = L_{A+b} - L_A$, where $L_X$ indicates the length in bits of the zipped file $X$. The relative entropy $S_{AB}$ per character between $\mathcal{A}$ and $\mathcal{B}$ will be estimated by

$$S_{AB} = \frac{\Delta_{Ab} - \Delta_{Bb}}{|b|}, \tag{1}$$

where $|b|$ is the number of characters of the sequence $b$ and $\Delta_{Bb}/|b| = (L_{B+b} - L_B)/|b|$ is an estimate of the entropy of the source $\mathcal{B}$.

Translated into a linguistic framework, if $A$ and $B$ are texts written in different languages, $\Delta_{Ab}$ is a measure of the difficulty for a generic person of mother tongue $A$ to understand the text written in the language $B$. Let us consider a concrete example where $A$ and $B$ are two texts written, for instance, in English and Italian. We take a long English text and we append to it an Italian text. The zipper begins reading the file starting from the English text, so after a while it is able to encode the English file optimally. When the Italian part begins, the zipper starts encoding it in a way which is optimal for English, i.e., it finds most of the matches in the English part. So the first part of the Italian file is encoded with the English code. After a while the zipper "learns" Italian, i.e., it tends progressively to find most of the matches in the Italian part rather than in the English one, and changes its rules. Therefore if the length of the Italian file is small enough [15], i.e., if most of the matches occur in the English part, the expression (1) will give a measure of the relative entropy. We have checked this method on sequences for which the relative entropy is known, obtaining an excellent agreement between the theoretical value of the relative entropy and the computed value [15]. The results of our experiments on linguistic corpora turned out to be very robust with respect to large variations in the size of the file $b$ [typically 1–15 kilobytes (kbyte) for a typical size of file $A$ of the order of 32–64 kbyte].

These considerations open the way to many possible applications.
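
The estimate of Eq. (1) can be sketched in a few lines. This is only an illustration under stated assumptions: Python's `zlib` plays the role of the zipper (the text mentions gzip, which uses the same DEFLATE format), the function names are hypothetical, and the two "sources" are toy sentence pools rather than real corpora. The long sequences are kept below zlib's 32 KiB window so that all of $A$ is still visible to the matcher while $b$ is being encoded.

```python
import random
import zlib


def zipped_bits(data: bytes) -> int:
    """L_X: length in bits of the zipped sequence X (zlib as the zipper)."""
    return 8 * len(zlib.compress(data, 9))


def delta_bits(long_seq: bytes, short_seq: bytes) -> int:
    """Delta_Ab = L_{A+b} - L_A: cost of b in the coding optimized for A."""
    return zipped_bits(long_seq + short_seq) - zipped_bits(long_seq)


def relative_entropy(a_long: bytes, b_long: bytes, b_short: bytes) -> float:
    """Eq. (1): S_AB = (Delta_Ab - Delta_Bb) / |b|, in bits per character."""
    return (delta_bits(a_long, b_short) - delta_bits(b_long, b_short)) / len(b_short)


# Toy "sources": random concatenations of a few fixed sentences.
# Hypothetical data, far smaller and more regular than real corpora.
ENGLISH = [
    "the cat sleeps on the warm roof. ",
    "we walk to the market every morning. ",
    "the river runs under the old bridge. ",
    "she reads a long book by the window. ",
    "the children play in the small garden. ",
]
ITALIAN = [
    "il gatto dorme sul tetto caldo. ",
    "andiamo al mercato ogni mattina. ",
    "il fiume scorre sotto il vecchio ponte. ",
    "lei legge un lungo libro alla finestra. ",
    "i bambini giocano nel piccolo giardino. ",
]


def emit(sentences, count, seed):
    """Draw `count` sentences at random and return them as bytes."""
    rng = random.Random(seed)
    return "".join(rng.choice(sentences) for _ in range(count)).encode("utf-8")


A = emit(ENGLISH, 300, seed=1)  # long sequence from source A
B = emit(ITALIAN, 300, seed=2)  # long sequence from source B
b = emit(ITALIAN, 10, seed=3)   # short sequence from source B

print("Delta_Ab:", delta_bits(A, b), "bits")
print("Delta_Bb:", delta_bits(B, b), "bits")
print("S_AB:    ", relative_entropy(A, B, b), "bits per character")
```

Because $b$ comes from the Italian pool, encoding it after the English text should cost more than encoding it after the Italian text, which makes the estimated relative entropy positive.
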
Though our method is very general [16], in this paper we focus in particular on sequences of characters representing texts, and we shall discuss in particular two problems of computational linguistics: context recognition and the classication of sequences corpora. Language recognition. Suppose we are interested in the automatic recognition of the language in which a given text X is written. The procedure we use considers a collection of long texts (a corpus) in as many different (known) languages as possible: English, French, Italian, Tagalog, . . . . We simply consider all the possible les obtained appending a portion x of the unknown le X to all the possible other les Ai and we measure the differences LAi 1x 2 LAi . The le for which this difference is minimal will select the language closest to the one of the X le, or exactly its language, if the collection of languages contained this language. We have considered in particular a corpus of texts in 10 ofcial languages of the European Union (UE) [17]: Danish, Dutch, English, Finnish, French, 048702-2

VOLUME 88, NUMBER 4

PHYSICAL REVIEW LETTERS

28 JANUARY 2002

German, Italian, Portuguese, Spanish, and Swedish. Each text of the corpus played in turn the role of the text X and all the others the role of the Ai . Using in particular 10 texts per language (giving a total corpus of 100 texts) we have obtained that for any single text the method has recognized the language: this means that for any text X the text Ai for which the difference LAi 1x 2 LAi was minimum was a text written in the same language. Moreover it turned out that, ranking for each X all the texts Ai as a function of the difference LAi 1x 2 LAi , all the texts written in the same language were in the rst positions. The recognition of the language works quite well for length of the X le as small as 20 characters. Authorship attribution. Suppose in this case we are interested in the automatic recognition of the author of a given text X . We shall consider as before a collection, as large as possible, of texts of several (known) authors all written in the same language of the unknown text and we shall look for the text Ai for which the difference LAi 1x 2 LAi is minimum. In order to collect a certain statistics we have performed the experiment using a corpus of 90 different texts [18], using for each run one of the texts in the corpus as unknown text. The results, shown in Table I, feature a rate of success of 93.3%. This rate is the ratio between the number of texts whose author has been recognized (another text of the same author was ranked as rst) and the total number of texts considered. The rate of success increases by considering more rened procedures (performing, for instance, weighted averages over the rst m ranked texts of a given text). There are, of course, uctuations in the success rate for each author and this has to be expected since the writing style is something difcult to grasp and dene; moreover, it can vary a lot in the production of a single author. Classication of sequences. 
Suppose we have a collection of texts, for instance a corpus containing several

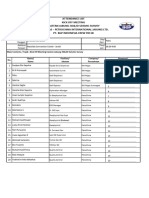

TABLE I. Authorship attribution: For each author depicted we report the number of different texts considered and two measures of success. Number of successes 1 and 2 are the numbers of times another text of the same author was ranked in the rst position or in one of the rst two positions, respectively. Author Alighieri DAnnunzio Deledda Fogazzaro Guicciardini Macchiavelli Manzoni Pirandello Salgari Svevo Verga Total No. of texts 8 4 15 5 6 12 4 11 11 5 9 90 No. of successes 1 8 4 15 4 5 12 3 11 10 5 7 84 No. of successes 2 8 4 15 5 6 12 4 11 10 5 9 89

versions of the same text in different languages, and that we are interested in a classication of this corpus. One has to face two kinds of problems: the availability of large collections of long texts in many different languages and, related to it, the need of a uniform coding for the characters in different languages. In order to solve the second problem we used, for all the texts, the UNICODE [19] standard coding. In order to have the largest possible corpus of texts in different languages we used The Universal Declaration of Human Rights [20], which is considered to be the most often translated document in the world; see [21]. Our method, mutuated by the phylogenetic analysis of biological sequences [22 24], considers the construction of a distance matrix, i.e., a matrix whose elements are the distances between pairs of texts. We dene the distance by SAB DAb 2 DBb DBb 1 DBa 2 DAa DAa , (2) where A and B are indices running on the corpus elements and the normalization factors are chosen in order to be independent of the coding of the original les. Moreover, since the relative entropy is not a distance in the mathematical sense, we make the matrix elements satisfying the triangular inequality. It is important to remark that a rigorous denition of distance between two bodies of knowledge has been proposed by Li and Vitnyi [12]. Starting from the distance matrix one can build a tree representation: phylogenetic trees [24], spanning trees, etc. In our example, we have used the Fitch-Margoliash method [25] of the package PhylIP (phylogeny inference package) [26] which basically constructs a tree by minimizing the net disagreement between the matrix pairwise distances and the distances measured on the tree. Similar results have been obtained with the neighbor algorithm [26]. In Fig. 1 we show the results for over 50 languages widespread on the Euro-Asiatic continent. 
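
Both the ranking used for language recognition and authorship attribution and the distance of Eq. (2) reduce to a few calls to a zipper. The sketch below is a minimal illustration, not the authors' implementation: it assumes Python's `zlib` as the zipper, takes the last `sample` bytes of each text as the short sequences $a$ and $b$ (a hypothetical convention), and uses toy sentence pools in place of real corpora. It neither enforces the triangular inequality nor builds the tree, since the text does not spell out the first step and delegates the second to PHYLIP.

```python
import random
import zlib


def zipped_bits(data: bytes) -> int:
    """L_X: length in bits of the zipped sequence X."""
    return 8 * len(zlib.compress(data, 9))


def delta_bits(long_seq: bytes, short_seq: bytes) -> int:
    """L_{A+x} - L_A: cost of the short sequence in the coding learned on A."""
    return zipped_bits(long_seq + short_seq) - zipped_bits(long_seq)


def rank_by_delta(candidates: dict, x: bytes) -> list:
    """Candidate names sorted by L_{A_i+x} - L_{A_i}, smallest first."""
    return sorted(candidates, key=lambda name: delta_bits(candidates[name], x))


def distance(text_a: bytes, text_b: bytes, sample: int) -> float:
    """Eq. (2), with the tail of each text used as the short sequence."""
    A, a = text_a[:-sample], text_a[-sample:]
    B, b = text_b[:-sample], text_b[-sample:]
    d_ab, d_bb = delta_bits(A, b), delta_bits(B, b)
    d_ba, d_aa = delta_bits(B, a), delta_bits(A, a)
    return (d_ab - d_bb) / d_bb + (d_ba - d_aa) / d_aa


def distance_matrix(texts: list, sample: int) -> list:
    """Symmetric matrix of pairwise Eq. (2) distances, zero on the diagonal."""
    n = len(texts)
    matrix = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            matrix[i][j] = matrix[j][i] = distance(texts[i], texts[j], sample)
    return matrix


# Toy sentence pools (hypothetical data standing in for real corpora).
ENGLISH = [
    "the cat sleeps on the warm roof. ",
    "we walk to the market every morning. ",
    "the river runs under the old bridge. ",
    "she reads a long book by the window. ",
    "the children play in the small garden. ",
]
ITALIAN = [
    "il gatto dorme sul tetto caldo. ",
    "andiamo al mercato ogni mattina. ",
    "il fiume scorre sotto il vecchio ponte. ",
    "lei legge un lungo libro alla finestra. ",
    "i bambini giocano nel piccolo giardino. ",
]


def emit(sentences, count, seed):
    """Draw `count` sentences at random and return them as bytes."""
    rng = random.Random(seed)
    return "".join(rng.choice(sentences) for _ in range(count)).encode("utf-8")


# Each text stays below zlib's 32 KiB window.
corpus = {
    "en_1": emit(ENGLISH, 300, seed=1),
    "en_2": emit(ENGLISH, 300, seed=2),
    "it_1": emit(ITALIAN, 300, seed=3),
    "it_2": emit(ITALIAN, 300, seed=4),
}

unknown = emit(ITALIAN, 5, seed=5)  # short text whose "language" is sought
ranking = rank_by_delta(corpus, unknown)
print("closest texts first:", ranking)

names = list(corpus)
D = distance_matrix([corpus[name] for name in names], sample=1000)
for name, row in zip(names, D):
    print(name, [round(value, 2) for value in row])
```

On this toy corpus the two Italian texts should head the ranking, and the matrix entries between texts drawn from the same pool should be much smaller than those across pools. A matrix of this kind is what a tree-building program would take as input.
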
In the tree of Fig. 1, essentially all the main linguistic groups (Ethnologue source [27]) are recognized: Romance, Celtic, Germanic, Ugro-Finnic, Slavic, Baltic, Altaic. On the other hand, one has isolated languages such as Maltese, which is typically considered an Afro-Asiatic language, and Basque, which is classified as a non-Indo-European language and whose origins and relationships with other languages are uncertain. Needless to say, a careful investigation of specific linguistic features is not our purpose. In this framework we are interested only in presenting the potential of the method for several disciplines.

Figure 1 (image not reproduced): unrooted language tree. Group labels: ROMANCE, CELTIC, GERMANIC, UGROFINNIC, ALTAIC, SLAVIC, BALTIC. Leaf labels, in the order drawn: Romani Balkan [East Europe], Occitan Auvergnat [France], Walloon [Belgique], Corsican [France], Italian [Italy], Sammarinese [Italy], Rhaeto Romance [Switzerland], Friulian [Italy], French [France], Catalan [Spain], Occitan [France], Asturian [Spain], Spanish [Spain], Galician [Spain], Portuguese [Portugal], Sardinian [Italy], Romanian [Romania], Romani Vlach [Macedonia], English [UK], Maltese [Malta], Welsh [UK], Irish Gaelic [Eire], Scottish Gaelic [UK], Breton [France], Faroese [Denmark], Icelandic [Iceland], Swedish [Sweden], Danish [Denmark], Norwegian Bokmål [Norway], Norwegian Nynorsk [Norway], Luxembourgish [Luxembourg], German [Germany], Frisian [Netherlands], Afrikaans, Dutch [Netherlands], Finnish [Finland], Estonian [Estonia], Turkish [Turkey], Uzbek [Uzbekistan], Hungarian [Hungary], Basque [Spain], Slovak [Slovakia], Czech [Czech Rep.], Bosnian [Bosnia], Serbian [Serbia], Croatian [Croatia], Slovenian [Slovenia], Polish [Poland], Sorbian [Germany], Lithuanian [Lithuania], Latvian [Latvia], Albanian [Albania].

FIG. 1. Language Tree: This figure illustrates the phylogenetic-like tree constructed on the basis of more than 50 different versions of The Universal Declaration of Human Rights. The tree is obtained using the Fitch-Margoliash method applied to a distance matrix whose elements are computed in terms of the relative entropy between pairs of texts. The tree features essentially all the main linguistic groups of the Euro-Asiatic continent (Romance, Celtic, Germanic, Ugro-Finnic, Slavic, Baltic, Altaic), as well as a few isolated languages such as Maltese, typically considered an Afro-Asiatic language, and Basque, classified as a non-Indo-European language, and whose origins and relationships with other languages are uncertain. Notice that the tree is unrooted, i.e., it does not require any hypothesis about common ancestors for the languages. What is important is the relative positions between pairs of languages. The branch lengths do not correspond to the actual distances in the distance matrix.

In conclusion, we have presented here a general method to recognize and classify automatically sequences of characters. We have discussed in particular the application to textual corpora in several languages. We have shown how a suitable definition of remoteness between texts, based on the concept of relative entropy, allows us to extract from a text much important information: the language in which it is written, the subject treated, as well as its author; on the other hand, the method allows us to classify sets of sequences (a corpus) on the basis of the relative distances among the elements of the corpus itself and organize them in a hierarchical structure (graph, tree, etc.). The method is highly versatile and general. It applies to any kind of corpora of character strings independently of the type of coding behind them: time sequences, language, genetic sequences (DNA, proteins, etc.). It does not require any a priori knowledge of the corpus under investigation (alphabet, grammar, syntax) nor about its statistics. These features are potentially very important for fields where human intuition can fail: DNA and protein sequences, geological time series, stock market data, medical monitoring, etc.

The authors are grateful to Piero Cammarano, Giuseppe Di Carlo, and Anna Lo Piano for many enlightening discussions. This work has been partially supported by the European Network-Fractals under Contract No. FMRXCT980183, GNFM (INDAM).

*Electronic address: benedetto@mat.uniroma1.it
Electronic address: caglioti@mat.uniroma1.it
Electronic address: loreto@roma1.infn.it

[1] C. E. Shannon, Bell Syst. Tech. J. 27, 379 (1948); 27, 623 (1948).
[2] Complexity, Entropy and Physics of Information, edited by W. H. Zurek (Addison-Wesley, Redwood City, CA, 1990).
[3] D. Welsh, Codes and Cryptography (Clarendon Press, Oxford, 1989).
[4] A. N. Kolmogorov, Probl. Inf. Transm. 1, 1 (1965).
[5] G. J. Chaitin, J. Assoc. Comput. Mach. 13, 547 (1966).
[6] G. J. Chaitin, Information Randomness and Incompleteness (World Scientific, Singapore, 1990), 2nd ed.
[7] R. J. Solomonoff, Inf. Control 7, 1 (1964); 7, 224 (1964).
[8] A. Lempel and J. Ziv, IEEE Trans. Inf. Theory 23, 337–343 (1977).
[9] J. Ziv and A. Lempel, IEEE Trans. Inf. Theory 24, 530 (1978).
[10] A. D. Wyner, Typical Sequences and All That: Entropy, Pattern Matching and Data Compression (1994 Shannon Lecture), IEEE Information Theory Society Newsletter, July 1995.
[11] F. Argenti et al., Information and Dynamical Systems: A Concrete Measurement on Sporadic Dynamics (to be published).
[12] M. Li and P. Vitányi, An Introduction to Kolmogorov Complexity and Its Applications (Springer, New York, 1997), 2nd ed.
[13] S. Kullback and R. A. Leibler, Ann. Math. Stat. 22, 79–86 (1951).
[14] N. Merhav and J. Ziv, IEEE Trans. Inf. Theory 39, 1280 (1993).
[15] D. Benedetto, E. Caglioti, and V. Loreto (to be published).
[16] Patent pending CCIAA Roma N. RM2001A000399.
[17] europa.eu.int
[18] www.liberliber.it
[19] See for details: www.unicode.org
[20] www.unhchr.ch/udhr/navigate/alpha.htm
[21] Guinness World Records 2002 (Guinness World Records, Ltd., Enfield, United Kingdom, 2001).
[22] L. L. Cavalli-Sforza and A. W. F. Edwards, Evolution 32, 550–570 (1967); Am. J. Human Genet. 19, 233–257 (1967).
[23] J. S. Farris, Advances in Cladistics: Proceedings of the First Meeting of the Willi Hennig Society, edited by V. A. Funk and D. R. Brooks (New York Botanical Garden, Bronx, New York, 1981), pp. 3–23.
[24] J. Felsenstein, Evolution 38, 16–24 (1984).
[25] W. M. Fitch and E. Margoliash, Science 155, 279–284 (1967).
[26] J. Felsenstein, Cladistics 5, 164–166 (1989).
[27] www.sil.org/ethnologue


- I/A Series Hardware: ® Product SpecificationsDocument20 pagesI/A Series Hardware: ® Product Specificationsrasim_m1146No ratings yet

- Data Sheet Led 5mm RGBDocument3 pagesData Sheet Led 5mm RGBMuhammad Nuzul Nur مسلمNo ratings yet

- 12 Chemistry CBSE Exam Papers 2016 Foreign Set 1 AnswerDocument10 pages12 Chemistry CBSE Exam Papers 2016 Foreign Set 1 AnswerAlagu MurugesanNo ratings yet

- Laboratory Tests On Compensation Grouting, The Influence of Grout BleedingDocument6 pagesLaboratory Tests On Compensation Grouting, The Influence of Grout BleedingChristian SchembriNo ratings yet

- MATH2408/3408 Computational Methods and Differential Equations With Applications 2014-2015Document4 pagesMATH2408/3408 Computational Methods and Differential Equations With Applications 2014-2015Heng ChocNo ratings yet

- Easily control your TV with one remoteDocument4 pagesEasily control your TV with one remoteJeradin_01No ratings yet

- Scanning Electron Microscopy (SEM) : A Review: January 2019Document10 pagesScanning Electron Microscopy (SEM) : A Review: January 2019Abhishek GaurNo ratings yet

- Activated Sludge Rheology A Critical Review On Data Collection and ModellingDocument20 pagesActivated Sludge Rheology A Critical Review On Data Collection and ModellingZohaib Ur RehmanNo ratings yet

- Cubic EOS MATLAB function estimates compressibility factor and molar volumeDocument6 pagesCubic EOS MATLAB function estimates compressibility factor and molar volumeDaniel García100% (1)

- CHAYANIKA MTech 2013-15Document62 pagesCHAYANIKA MTech 2013-15Maher TolbaNo ratings yet

- How Physics Shapes the Game of Bocce BallDocument2 pagesHow Physics Shapes the Game of Bocce BallWill IsWas YangNo ratings yet

- Millison Indonesia Floating SolarDocument29 pagesMillison Indonesia Floating SolarChandNo ratings yet

- Inc Germanium Phosphide: Features.Document4 pagesInc Germanium Phosphide: Features.MatNo ratings yet

- Non-Metallic Inclusions in Steel PropertiesDocument68 pagesNon-Metallic Inclusions in Steel PropertiesDeepak PatelNo ratings yet

- Draft - Daftar Hadir KoM - Update 19.00Document3 pagesDraft - Daftar Hadir KoM - Update 19.00sowongNo ratings yet

- Study of The Degradation of Typical HVAC Materials, Filters and Components Irradiated by UVC Energy - Part I: Literature Search.Document13 pagesStudy of The Degradation of Typical HVAC Materials, Filters and Components Irradiated by UVC Energy - Part I: Literature Search.1moldNo ratings yet

- Physics Investigatory ProjectDocument14 pagesPhysics Investigatory ProjectAbhishek Raja88% (16)

- Che 2164 L1Document34 pagesChe 2164 L1Blue JunNo ratings yet

- Chapter 1 - StabilityDocument9 pagesChapter 1 - StabilityRobeam SolomonNo ratings yet

- Basics of Paint FormulationDocument21 pagesBasics of Paint FormulationTORA75% (4)

- Correcting Astigmatism with Toric IOLsDocument28 pagesCorrecting Astigmatism with Toric IOLsArif MohammadNo ratings yet

- Molecular DeviceDocument26 pagesMolecular Device7semNo ratings yet

- CalculusDocument566 pagesCalculuscustomerx100% (8)

- Surge Arrester General (IN) English PDFDocument16 pagesSurge Arrester General (IN) English PDFBalan PalaniappanNo ratings yet

- Ultimate Strength DesignDocument25 pagesUltimate Strength DesignBryle Steven Newton100% (2)

- Mech 499 Project Virtual and Physical Prototyping of Mechanical Systems Web-Controlled Dog Ball LauncherDocument26 pagesMech 499 Project Virtual and Physical Prototyping of Mechanical Systems Web-Controlled Dog Ball LauncherParesh GoelNo ratings yet