Video Denoising Using Sparse and Redundant Representations: (Ijartet) Vol. 1, Issue 3, November 2014

International Journal of Advanced Research Trends in Engineering and Technology (IJARTET)

Vol. 1, Issue 3, November 2014

Video Denoising Using Sparse and Redundant Representations

B. Anandhaprabakaran1, S. David2

1,2Assistant Professor, Department of Electronics and Communication Engineering,

1Sri Ramakrishna Engineering College, 2Sri Eashwar Engineering College, Coimbatore, Tamil Nadu, India

Abstract: The quality of video sequences is often reduced by noise, usually assumed to be white and Gaussian, superimposed on the sequence. When denoising image sequences rather than a single image, the temporal dimension can be exploited to improve both the denoising performance and the speed of the algorithm. The temporal correlations are further strengthened by a motion compensation process, for which a Fourier-domain noise-robust cross correlation algorithm is proposed for motion estimation. The denoising algorithm relies on sparse and redundant representations of small patches in the images. Three different extensions are offered, and all are tested and found to lead to substantial benefits both in denoising quality and algorithm complexity, compared to running the single image algorithm sequentially.

Keywords: Cross Correlation (CC), Motion estimation, K-SVD, OMP, Sparse representations, Video denoising.

I. INTRODUCTION

Video signals are often contaminated by noise during acquisition and transmission. Removing or reducing noise in video signals (video denoising) is highly desirable, as it can enhance perceived image quality, increase compression effectiveness, facilitate transmission bandwidth reduction, and improve the accuracy of possible subsequent processes such as feature extraction, object detection, motion tracking and pattern classification. Video denoising algorithms may be roughly classified based on two different criteria: whether they are implemented in the spatial domain or the transform domain, and whether motion information is directly incorporated. The high degree of correlation between adjacent frames is a mixed blessing for signal restoration. On the one hand, since additional information is available from nearby frames, a better estimate of the original signal is expected. On the other hand, the process is complicated by the presence of motion between frames. Motion estimation itself is a complex problem, and it is further complicated by the presence of noise.

One suggested approach that utilizes the temporal redundancy is motion estimation [4]. The estimated trajectories are used to filter along the temporal dimension, either in the wavelet domain [5] or the signal domain [4]. Spatial filtering may also be used, with stronger emphasis in areas in which the motion estimation is not as reliable. A similar approach described in [6] detects, for each pixel, whether it has undergone motion or not. Spatial filtering is applied to each image, and for each pixel with no motion detected, the results of the spatial filtering are recursively averaged with results from previous frames.

A different approach to video denoising treats the image sequence as a 3-D volume and applies various transforms to this volume in order to attenuate the noise. Such transforms can be a Fourier-wavelet transform, an adaptive wavelet transform [8], or a combination of 2-D and 3-D dual-tree complex wavelet transforms [7].

A third approach towards video denoising employs spatiotemporal adaptive average filtering for each pixel. The method described in [9] uses averaging of pixels in the neighborhood of the processed pixel, both in the current frame and the previous one. The weights in this averaging are determined according to the similarity of 3 × 3 patches around the two pixels matched. The method in [10] extends this approach by considering a full 3-D neighborhood around the processed pixel (which could be as large as the entire sequence), with the weights being computed using larger patches and, thus, producing more accurate weights. The current state-of-the-art reported in [11] also finds similar patches in the neighborhood of each pixel; however, instead of a weighted averaging of the centers of these patches, noise attenuation is performed in the transform domain.

In this paper, we explore a method that utilizes sparse and redundant representations for image sequence denoising. In the single image setting [1], the K-SVD algorithm is used to train a sparsifying dictionary for the corrupted image, forcing each patch in the image to have a sparse representation describing its content. Put in a maximum a posteriori probability (MAP) framework, the algorithm developed in [1] is simple and achieves state-of-the-art performance for the single image denoising application.

In this paper, a video denoising method based on a spatiotemporal model of motion compensated frames is proposed. It considers 3-D (spatio-temporal) patches, a propagation of the dictionary over time, and averaging that is done on motion compensated neighboring patches both in space and time. As the dictionaries of adjacent frames (belonging to the same scene) are expected to be nearly identical, the number of required iterations per frame can be significantly reduced. It is also important to be aware that video signals are more than simple 3-D extensions of 2-D static image signals, where the major distinction is the capability of representing motion information [12]. If the motion between frames is properly compensated, then the temporal correlations between the patches can be enhanced. Effective motion compensation relies on reliable motion estimation. We opt to use global motion estimation methods based on cross correlation (CC) [13]-[15]. The challenge here is that the motion information must be estimated from noisy video signals rather than the noise-free original signals. To address this issue, another important aspect of our paper is the incorporation of a novel noise-robust motion estimation algorithm, which has not been carefully examined in previous video denoising algorithms. All of these modifications lead to substantial benefits both in complexity and denoising performance.

The structure of the paper is as follows. In Section II, we describe the principles of sparse and redundant representations and their deployment to single image denoising. Section III discusses the generalization to video, covering various options for using the temporal dimension with their expected benefits and drawbacks. Details on the motion estimation algorithm are given in Section IV. Each proposed extension is experimentally validated in Section V, where a performance comparison between other methods and the one introduced in this paper is also given. Section VI summarizes and concludes the paper.

II. IMAGE DENOISING USING SPARSITY AND REDUNDANCY

A method of denoising images based on sparse and redundant representations is developed and reported in [1] and [2]. In this section, we provide a brief description of this algorithm, as it serves as the foundation for the video denoising we develop in Section III.

A noisy image $Y$ results from noise $V$ superimposed on an original image $X$. We assume the noise to be white, zero-mean Gaussian noise with a known standard deviation $\sigma$:

$$Y = X + V, \quad V \sim \mathcal{N}(0, \sigma^2 I) \qquad (1)$$
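As a small illustration of the noise model in (1), the following sketch corrupts a synthetic frame with white Gaussian noise and measures the damage with PSNR, the quality measure used later in Section V (10 log10 of the squared dynamic range over the MSE). The function names, the random stand-in frame and the choice not to clip the noisy values to [0, 255] are assumptions made for this example only.

```python
import numpy as np

def add_white_gaussian_noise(x, sigma, rng):
    """Return Y = X + V with V ~ N(0, sigma^2 I), following the model in (1)."""
    return x.astype(np.float64) + rng.normal(0.0, sigma, size=x.shape)

def psnr(reference, test, dynamic_range=255.0):
    """PSNR in dB: 10 * log10(L^2 / MSE), with L the dynamic range."""
    diff = reference.astype(np.float64) - test.astype(np.float64)
    mse = np.mean(diff ** 2)
    return 10.0 * np.log10(dynamic_range ** 2 / mse)

# Hypothetical stand-in for one luma frame (random content, not a real sequence).
rng = np.random.default_rng(0)
clean = rng.integers(0, 256, size=(256, 256)).astype(np.float64)
noisy = add_white_gaussian_noise(clean, sigma=20.0, rng=rng)
print(f"PSNR of the noisy frame: {psnr(clean, noisy):.2f} dB")
```

Because the noisy frame is not clipped here, its MSE is close to $\sigma^2$, so for $\sigma = 20$ the PSNR of the input is close to $20\log_{10}(255/20) \approx 22.1$ dB; any denoiser has to improve on that baseline.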

The basic assumption of the denoising method developed in [1] and [2] is that each image patch (of a fixed size) can be represented as a linear combination of a small subset of patches (atoms) taken from a fixed dictionary. Using this assumption, the denoising task can be described as an energy minimization procedure. The following functional describes a combination of three penalties to be minimized:

$$f\left(X, D, \{\alpha_{ij}\}_{ij}\right) = \lambda \|X - Y\|_2^2 + \sum_{ij} \|D\alpha_{ij} - R_{ij}X\|_2^2 + \sum_{ij} \mu_{ij} \|\alpha_{ij}\|_0 \qquad (2)$$

The first term, weighted by $\lambda$, demands proximity between the measured image $Y$ and its denoised (and unknown) version $X$. The second term demands that each patch from the reconstructed image (denoted by $R_{ij}X$) can be represented up to a bounded error by a dictionary $D$, with coefficients $\alpha_{ij}$. The third term demands that the number of coefficients required to represent any patch is small. The values $\mu_{ij}$ are patch-specific weights. Minimizing this functional with respect to its unknowns yields the denoising algorithm.
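For a single patch with the dictionary held fixed, the sparsity-penalized fit described above becomes a sparse approximation problem, for which a greedy pursuit such as orthogonal matching pursuit (OMP, listed in the keywords) is a common choice. The following is a minimal NumPy sketch of OMP, not the implementation used in [1]: the function name `omp`, the unit-norm-atom assumption, and the stopping rule (a cap on the number of atoms plus a residual tolerance) are assumptions of this example.

```python
import numpy as np

def omp(D, y, max_atoms, tol=1e-6):
    """Greedy sparse coding of y over dictionary D (columns are unit-norm atoms).

    Stops after max_atoms atoms or once the residual norm drops below tol.
    """
    alpha = np.zeros(D.shape[1])
    support = []
    coeffs = np.zeros(0)
    residual = y.astype(np.float64).copy()
    for _ in range(max_atoms):
        if np.linalg.norm(residual) <= tol:
            break
        # Select the atom most correlated with the current residual.
        k = int(np.argmax(np.abs(D.T @ residual)))
        if k in support:
            break
        support.append(k)
        # Re-fit all selected atoms jointly by least squares.
        coeffs, *_ = np.linalg.lstsq(D[:, support], y, rcond=None)
        residual = y - D[:, support] @ coeffs
    if support:
        alpha[support] = coeffs
    return alpha

# Toy usage: a random redundant dictionary (32 atoms of length 16) and a random patch.
rng = np.random.default_rng(0)
D = rng.normal(size=(16, 32))
D /= np.linalg.norm(D, axis=0)
patch = rng.normal(size=16)
code = omp(D, patch, max_atoms=4)
print("non-zeros:", np.count_nonzero(code), "residual:", np.linalg.norm(patch - D @ code))
```

In a denoising setting the tolerance would normally be tied to the noise level, so that the pursuit stops once the residual is explained by noise; the fixed `tol` above is only a placeholder for that idea.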

The choice of $D$ is of high importance to the performance of the algorithm. In [1] it is shown that training can be done by minimizing (2) with respect to $D$ as well (in addition to $X$ and $\alpha_{ij}$). The algorithm proposed in [1] is an iterative block-coordinate relaxation method that fixes all the unknowns apart from the one to be updated, and alternates between the following update stages.

1) Update of the sparse representations $\{\alpha_{ij}\}$: Assuming that $D$ and $X$ are fixed, we solve a set of problems of the form

$$\hat{\alpha}_{ij} = \arg\min_{\alpha} \; \|D\alpha - R_{ij}X\|_2^2 + \mu_{ij}\|\alpha\|_0 \qquad (3)$$

for each location $[i,j]$. This means that we seek, for each patch in the image, the sparsest vector that describes it using atoms from $D$. In [1], the orthogonal matching pursuit (OMP) algorithm is used for this task.

2) Update of the dictionary $D$: In this stage, we assume that $X$ is fixed, and we update one atom at a time in $D$, while also updating the coefficients in $\{\alpha_{ij}\}_{ij}$ that use it. This is done via a rank-one approximation of a residual matrix, as described in [16].

3) Update of the estimated image $X$: After several rounds of updates of $\{\alpha_{ij}\}_{ij}$ and $D$, the final output image is computed by fixing these unknowns and minimizing (2) with respect to $X$. This leads to the quadratic problem

$$\hat{X} = \arg\min_{X} \; \lambda\|X - Y\|_2^2 + \sum_{ij}\|D\alpha_{ij} - R_{ij}X\|_2^2 \qquad (4)$$

where $\lambda$ is the weight of the data-fidelity term in (2). This problem is solved by a simple weighting of the represented patches with overlaps, and the original image $Y$.

The improved results obtained by training a dictionary based on the noisy image itself stem from the dictionary adapting to the content of the actual image to be denoised. An added benefit is that the K-SVD algorithm has noise averaging built into it, by taking a large set of noisy patches and creating a small, relatively clean representative set.

III. EXTENSION TO VIDEO DENOISING

In this section, we describe, step by step, the proposed extensions to handling image sequences. Training a single dictionary for the entire sequence is problematic: the scene is expected to change rapidly, and objects that appear in one frame might not be there five or ten frames later. This either means that the dictionary will suit some images more than others, or that it would suit all of the images but only moderately so. Obtaining state-of-the-art denoising results requires better adaptation. An alternative approach is to define a locally temporal penalty term that, on the one hand, allows the dictionary to adapt to the scene and, on the other hand, exploits the benefits of the temporal redundancy:

(5)

defined for t = 1, 2, ..., T.

The temporal repetitiveness of the video sequence can be further used to improve the algorithm. As consecutive images $X_t$ and $X_{t-1}$ are similar, their corresponding dictionaries are also expected to be similar. This temporal coherence can help speed up the algorithm. Fewer training iterations are necessary if the initialization for the dictionary $D_t$ is the one trained for the previous image. The number of training iterations should not be constant, but rather depend on the noise level. It appears that the less noisy the sequence, the more training iterations are needed for each image. At higher noise levels, adding more iterations hardly results in any denoising improvement.

Only patches centered in the current image are used for training the dictionary and cleaning the image. A compromise between temporal locality and exploiting the temporal redundancy is again called for. This compromise is achieved by using motion compensated patches of the image currently denoised, both in training and cleaning. Introducing this into the penalty term in (5) leads to the modified version

(6)

defined for t = 1, 2, ..., T (i.e., the patches are taken from three frames).
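The extended patch set taken from three frames can be sketched as follows. This is only an illustration under stated assumptions: motion is modeled as a single global integer displacement per frame, neighbouring frames are aligned with a circular shift (`np.roll`, which wraps around at the borders), and the names `extract_patches`, `extended_patch_set` and the `shifts` dictionary are hypothetical helpers introduced for this example.

```python
import numpy as np

def extract_patches(frame, size, stride=1):
    """Return all size x size patches of a 2-D frame as rows of a matrix."""
    h, w = frame.shape
    return np.array([frame[r:r + size, c:c + size].ravel()
                     for r in range(0, h - size + 1, stride)
                     for c in range(0, w - size + 1, stride)])

def extended_patch_set(frames, t, shifts, size, stride=1):
    """Collect patches from frames t-1, t and t+1 after aligning them to frame t.

    shifts[k] is the assumed global (dy, dx) displacement of frame k relative
    to frame t; frames without an entry are treated as already aligned.
    """
    patches = []
    for k in (t - 1, t, t + 1):
        if 0 <= k < len(frames):
            dy, dx = shifts.get(k, (0, 0))
            # Undo the global displacement (circular shift, borders wrap around).
            aligned = np.roll(frames[k], shift=(-dy, -dx), axis=(0, 1))
            patches.append(extract_patches(aligned, size, stride))
    return np.vstack(patches)

# Toy usage: three 16 x 16 frames, the last one displaced by (2, -1) pixels.
rng = np.random.default_rng(0)
base = rng.uniform(0, 255, size=(16, 16))
frames = [base, base, np.roll(base, shift=(2, -1), axis=(0, 1))]
P = extended_patch_set(frames, t=1, shifts={2: (2, -1)}, size=8)
print(P.shape)
```

The resulting matrix can feed both the sparse coding and the dictionary update stages for frame t; patches from the neighbouring frames only help if the displacement estimate is accurate, which is why the motion estimation of Section IV matters.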

To gauge the possible speed-up and improvement achieved by a temporally adaptive and propagated dictionary, we test the number of iterations required to obtain results similar to the non-propagation alternative. Several options for the number of training iterations that follow the dictionary propagation are compared to the non-propagation option (using 15 training iterations per image). A dictionary is trained after propagating the dictionary (from image #10), using 4 training iterations for each image. This comparison shows that propagation of the dictionary leads to a cleaner version with clearer and sharper texture atoms. These benefits are attributed to the memory induced by the propagation. Indeed, when handling longer sequences, we expect this memory feature of our algorithm to further benefit the denoising performance.

At high noise levels, the redundancy factor (the ratio between the number of atoms in the dictionary and the size of an atom) should be smaller. At high noise levels, obtaining a clean dictionary requires averaging a large number of patches for each atom. This is why only a relatively small number of atoms is used. At low noise levels, many details in the image need to be represented by the dictionary. Noise averaging takes a more minor role in this case. This calls for a large number of atoms, so they can represent the wealth of details in the image.

IV. NOISE-ROBUST MOTION ESTIMATION

One of the challenges in the implementation of the above algorithm is to estimate motion in the presence of noise. Here, we propose a simple but reliable noise-robust CC method [3] for global motion estimation at integer pixel precision. The limitation of using global motion estimation is that it cannot account for rotation, zooming and local motion. Let $F_1(v)$ and $F_2(v)$ represent two image frames, where $v$ is a spatial integer index vector for the underlying 2-D rectangular image lattice. A classical approach to estimating a global motion vector between the two frames is the cross correlation method, which is based on the observation that when $F_2(v)$ is a shifted version of $F_1(v)$, the position of the peak in the CC function between $F_1(v)$ and $F_2(v)$ corresponds to the motion vector. Despite the simplicity of the idea, the computation of the CC function is often costly. An equivalent but more efficient approach is to use the Fourier transform method: let $F_i(\omega) = \mathcal{F}\{F_i(v)\}$ represent the 2-D Fourier transform of image frame $F_i(v)$, where $\mathcal{F}$ denotes the Fourier transform operator. Then, the CC function can be computed as

$$k_{CC}(v) = \mathcal{F}^{-1}\{Y(\omega)\} \qquad (7)$$

where

$$Y(\omega) = F_1^{*}(\omega)\, F_2(\omega) \qquad (8)$$

The estimated motion vector is given by

$$\hat{v} = \arg\max_{v} \; k_{CC}(v) \qquad (9)$$

An interesting variation of this approach is the phase correlation (PC) method, where the Fourier spectrum is normalized in the frequency domain to have unit energy across all frequencies. The phase correlation function is given by

$$k_{PC}(v) = \mathcal{F}^{-1}\left\{\frac{Y(\omega)}{|Y(\omega)|}\right\} \qquad (10)$$

To have a close look, let us assume that $F_2(v)$ is simply a shifted version of $F_1(v)$, i.e., $F_2(v) = F_1(v - \Delta v)$. Based on the shifting property of the Fourier transform, we have $F_2(\omega) = F_1(\omega)\exp\{-j\omega^T \Delta v\}$ and $Y(\omega) = |F_1(\omega)|^2 \exp\{-j\omega^T \Delta v\}$, and thus

$$k_{PC}(v) = \mathcal{F}^{-1}\left\{\exp\{-j\omega^T \Delta v\}\right\} = \delta(v - \Delta v) \qquad (11)$$

which creates an impulse at the true motion vector position and is zero everywhere else. Both the CC and PC methods were designed with the assumption that there is no noise in the images. In the presence of noise, their performance degrades. Our noise-robust cross correlation (NRCC) function is defined as

$$k_{NRCC}(v) = \mathcal{F}^{-1}\left\{\left(|Y(\omega)| - |N(\omega)|^2\right)\frac{Y(\omega)}{|Y(\omega)|}\right\} \qquad (12)$$

where $|N(\omega)|^2$ is the noise power spectrum (in the case of white noise, $|N(\omega)|^2$ is a constant). To better understand this, it is useful to formulate the three approaches (PC, CC, and NRCC) using a unified framework. In particular, each method can be viewed as a specific weighting scheme in the Fourier domain

$$k(v) = \mathcal{F}^{-1}\left\{W(\omega)\,\frac{Y(\omega)}{|Y(\omega)|}\right\} \qquad (13)$$

where the differences lie in the definition of the weighting function $W(\omega)$:

$$W(\omega) = \begin{cases} 1 & \text{(PC)} \\ |Y(\omega)| & \text{(CC)} \\ |Y(\omega)| - |N(\omega)|^2 & \text{(NRCC)} \end{cases} \qquad (14)$$

The PC method assigns uniform weights to all frequencies, the CC method assigns the weights based on the total signal power (which is the sum of signal and noise power), while the NRCC method assigns the weights proportional to the signal power only (by removing the noise power part). It converges to the CC method when the images are noise-free.
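The unified weighting view of PC, CC and NRCC described above can be sketched with NumPy's FFT. This is a hedged illustration rather than the authors' implementation: the function name `estimate_global_shift`, the choice of conjugating the first frame's spectrum, the clamping of negative NRCC weights to zero, and the white-noise power level `sigma**2 * f1.size` (which follows from NumPy's unnormalized forward FFT) are all assumptions of this example. Shifts are treated as circular.

```python
import numpy as np

def estimate_global_shift(f1, f2, method="nrcc", sigma=0.0):
    """Estimate the integer (dy, dx) by which f2 is displaced relative to f1."""
    F1 = np.fft.fft2(f1)
    F2 = np.fft.fft2(f2)
    Y = np.conj(F1) * F2                      # cross-power spectrum
    mag = np.abs(Y)
    phase = Y / np.maximum(mag, 1e-12)        # unit-magnitude phase term
    if method == "pc":                        # uniform weights
        W = np.ones_like(mag)
    elif method == "cc":                      # weights = total power
        W = mag
    elif method == "nrcc":                    # weights = power minus noise power
        noise_power = sigma ** 2 * f1.size    # assumed white-noise level per frequency
        W = np.maximum(mag - noise_power, 0.0)
    else:
        raise ValueError(f"unknown method: {method}")
    k = np.real(np.fft.ifft2(W * phase))      # weighted correlation surface
    peak = np.unravel_index(np.argmax(k), k.shape)
    # Peak indices above half the frame size correspond to negative shifts.
    return tuple(int(p) if p <= n // 2 else int(p) - n
                 for p, n in zip(peak, k.shape))

# Toy usage: a random frame, circularly shifted by (3, -5), both copies noisy.
rng = np.random.default_rng(0)
frame1 = rng.uniform(0, 255, size=(64, 64))
frame2 = np.roll(frame1, shift=(3, -5), axis=(0, 1))
noisy1 = frame1 + rng.normal(0, 20, frame1.shape)
noisy2 = frame2 + rng.normal(0, 20, frame2.shape)
print(estimate_global_shift(noisy1, noisy2, method="nrcc", sigma=20.0))
```

With `sigma=0` the NRCC weights reduce to the CC weights, mirroring the remark that NRCC converges to CC for noise-free images. On this toy input the noise is weak relative to the signal, so all three weightings should recover the (3, -5) displacement; the differences between them only become visible at much lower signal-to-noise ratios.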

used

for

denoising

process.

Applying

motion

estimation/motion compensation is effective in enhancing

V. VALIDATION

the correlations and thus, improving the performance of the

The simulation results of the paper have been denoising process. Three extensions were used: the use of

discussed here. All video sequences are in YUV 4:2:0 spatio-temporal (3-D) atoms, dictionary propagation coupled

format, but only the denoising results of the luma channel with fewer training iterations, and an extended patch-set for

are reported here for algorithm validation. Two objective dictionary training and image cleaning. The experimental

criteria, namely the PSNR and the SSIM, were employed to results show the effectiveness of this method.

provide quantitative quality evaluations of the denoising

results.

PSNR is defined as

(15)

here L is the dynamic range of the image (for 8 bits/pixel

images, L = 255) and MSE is the mean squared error

between the original and distorted images.

SSIM is first calculated within local windows using

(16)

here x and y are the image patches extracted from the local

window from the original and distorted images, respectively.

x, x2, and xy are the mean, variance, and cross-correlation

computed within the local window, respectively. The overall

SSIM score of a video frame is computed as the average

local SSIM scores.

Besides the running time of this method can fill the

requirements of the real time processing. To testify the

advantage of our method, the comparison between present

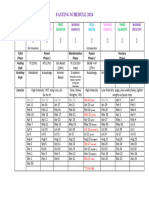

and K_SVD method is shown in Fig. 1.

(a1)

Fig.1 Denoising results of Frame 10 in Salesman sequence corrupted

with noise standard deviation = 20. (a1)(a4) Image frames in the

original, noisy, and K_SVD[1],Proposed one denoised sequences.

TABLE I

PSNR COMPARISONS WITH LATEST VIDEO DENOISING ALGORITHMS

REFERENCES

[1].

Noise

std()

K_SVD[1]

K_SVD[2]

VBM3D

Proposed

10

15

20

50

35.89

37.95

38.33

38.01

33.30

35.17

36.6

35.98

31.80

33.33

35.12

34.23

24.12

26.03

28.49

27.31

VI. CONCLUSION

(a2)

M. Elad and M. Aharon, Image denoising via sparse and

redundant representation over learned dictionaries, IEEE Trans.

Image Process., vol. 15, no. 12, pp. 37363745, Dec. 2006.

[2]. M. Protter and M. Elad, "Image sequence denoising via sparse and redundant representations," IEEE Trans. Image Process., vol. 18, no. 1, pp. 27-36, Jan. 2009.

[3]. Gijesh Varghese and Zhou Wang, "Video denoising based on a spatiotemporal Gaussian scale mixture model," in Proc. IEEE Int. Sym. Circuits Syst., vol. 20, July 2010.

[4]. S. M. M. Rahman, M. O. Ahmad, and M. N. S. Swamy, "Video denoising based on inter-frame statistical modeling of wavelet coefficients," IEEE Trans. Circuits Syst. Video Tech., vol. 17, no. 2, pp. 187-198, Feb. 2007.

[5]. V. Zlokolica, A. Pizurica, and W. Philips, "Wavelet-domain video denoising based on reliability measures," IEEE Trans. Circuits Syst. Video Tech., vol. 16, no. 8, pp. 993-1007, Aug. 2006.

[6]. A. Pizurica, V. Zlokolica, and W. Philips, "Combined wavelet domain and temporal video denoising," in Proc. IEEE Conf. Adv. Video Signal-Based Surveillance, Jul. 2003, pp. 334-341.

[7]. I. Selesnick and K. Y. Li, "Video denoising using 2D and 3D dual-tree complex wavelet transforms," presented at the Wavelet Applications in Signal and Image Processing X (SPIE), San Diego, CA, Aug. 2003.

[8]. N. M. Rajpoot, Z. Yao, and R. G. Wilson, "Adaptive wavelet restoration of noisy video sequences," presented at the Proc. Int. Conf. Image Processing, Singapore, Oct. 2004.

[9]. V. Zlokolica, A. Pizurica, and W. Philips, "Video denoising using multiple class averaging with multiresolution," in Proc. Lecture Notes in Computer Science (VLBV03), 2003, vol. 2849, pp. 172-179.

[10]. A. Buades, B. Coll, and J. M. Morel, "Denoising image sequences does not require motion estimation," in Proc. IEEE Conf. Advanced Video and Signal Based Surveillance, Sep. 2005, pp. 70-74.

[11]. K. Dabov, A. Foi, and K. Egiazarian, "Video denoising by sparse 3D transform-domain collaborative filtering," presented at the Eur. Signal Processing Conf., Poznan, Poland, Sep. 2007.

[12]. Z. Wang and Q. Li, "Video quality assessment using a statistical model of human visual speed perception," J. Opt. Soc. Am. A, vol. 24, pp. B61-B69, Dec. 2007.

[13]. T. S. Huang and R. Y. Tsai, "Motion estimation," in Image Sequence Analysis, T. S. Huang, Ed. Berlin, Germany: Springer-Verlag, 1981, pp. 1-18.

[14]. R. Manduchi and G. A. Mian, "Accuracy analysis for correlation-based image registration algorithms," in Proc. IEEE Int. Sym. Circuits Syst., 1993, pp. 834-837.

[15]. A. M. Tekalp, Digital Video Processing. Upper Saddle River, NJ: Prentice Hall, 1995.

All Rights Reserved 2014 IJARTET

23

You might also like

- Spline and Spline Wavelet Methods with Applications to Signal and Image Processing: Volume III: Selected TopicsFrom EverandSpline and Spline Wavelet Methods with Applications to Signal and Image Processing: Volume III: Selected TopicsNo ratings yet

- GPU-Based Elasticity Imaging Algorithms: Nishikant Deshmukh, Hassan Rivaz, Emad BoctorDocument10 pagesGPU-Based Elasticity Imaging Algorithms: Nishikant Deshmukh, Hassan Rivaz, Emad BoctorBrandy WeeksNo ratings yet

- A Novel Approach For Patch-Based Image Denoising Based On Optimized Pixel-Wise WeightingDocument6 pagesA Novel Approach For Patch-Based Image Denoising Based On Optimized Pixel-Wise WeightingVijay KumarNo ratings yet

- A Proposed Hybrid Algorithm For Video Denoisng Using Multiple Dimensions of Fast Discrete Wavelet TransformDocument8 pagesA Proposed Hybrid Algorithm For Video Denoisng Using Multiple Dimensions of Fast Discrete Wavelet TransformInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Convergence of ART in Few ProjectionsDocument9 pagesConvergence of ART in Few ProjectionsLak IhsanNo ratings yet

- Adaptive Mixed Norm Optical Flow Estimation Vcip 2005Document8 pagesAdaptive Mixed Norm Optical Flow Estimation Vcip 2005ShadowNo ratings yet

- Video Denoising Based On Riemannian Manifold Similarity and Rank-One ProjectionDocument4 pagesVideo Denoising Based On Riemannian Manifold Similarity and Rank-One ProjectionSEP-PublisherNo ratings yet

- Image Processing by Digital Filter Using MatlabDocument7 pagesImage Processing by Digital Filter Using MatlabInternational Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- A Novel Background Extraction and Updating Algorithm For Vehicle Detection and TrackingDocument5 pagesA Novel Background Extraction and Updating Algorithm For Vehicle Detection and TrackingSam KarthikNo ratings yet

- A Novel Statistical Fusion Rule For Image Fusion and Its Comparison in Non Subsampled Contourlet Transform Domain and Wavelet DomainDocument19 pagesA Novel Statistical Fusion Rule For Image Fusion and Its Comparison in Non Subsampled Contourlet Transform Domain and Wavelet DomainIJMAJournalNo ratings yet

- VLSI-Assisted Nonrigid Registration Using Modified Demons AlgorithmDocument9 pagesVLSI-Assisted Nonrigid Registration Using Modified Demons AlgorithmAnonymous ZWEHxV57xNo ratings yet

- Video Compression by Memetic AlgorithmDocument4 pagesVideo Compression by Memetic AlgorithmEditor IJACSANo ratings yet

- Full Text 01Document7 pagesFull Text 01Voskula ShivakrishnaNo ratings yet

- An Optimized Pixel-Wise Weighting Approach For Patch-Based Image DenoisingDocument5 pagesAn Optimized Pixel-Wise Weighting Approach For Patch-Based Image DenoisingVijay KumarNo ratings yet

- Multi-Frame Alignment of Planes: Lihi Zelnik-Manor Michal IraniDocument6 pagesMulti-Frame Alignment of Planes: Lihi Zelnik-Manor Michal IraniQuách Thế XuânNo ratings yet

- Wavelet Based Noise Reduction by Identification of CorrelationsDocument10 pagesWavelet Based Noise Reduction by Identification of CorrelationsAmita GetNo ratings yet

- Image Compression Using Back Propagation Neural Network: S .S. Panda, M.S.R.S Prasad, MNM Prasad, Ch. SKVR NaiduDocument5 pagesImage Compression Using Back Propagation Neural Network: S .S. Panda, M.S.R.S Prasad, MNM Prasad, Ch. SKVR NaiduIjesat JournalNo ratings yet

- Underwater Image Restoration Using Fusion and Wavelet Transform StrategyDocument8 pagesUnderwater Image Restoration Using Fusion and Wavelet Transform StrategyMega DigitalNo ratings yet

- Table 22Document5 pagesTable 22nirmala periasamyNo ratings yet

- Ijarece Vol 2 Issue 3 314 319Document6 pagesIjarece Vol 2 Issue 3 314 319rnagu1969No ratings yet

- Wedel Dagm06Document10 pagesWedel Dagm06Kishan SachdevaNo ratings yet

- Novel Wavelet Thresholding for Image DenoisingDocument4 pagesNovel Wavelet Thresholding for Image DenoisingjebileeNo ratings yet

- Expectation-Maximization Technique and Spatial - Adaptation Applied To Pel-Recursive Motion Estimation Sci2004Document6 pagesExpectation-Maximization Technique and Spatial - Adaptation Applied To Pel-Recursive Motion Estimation Sci2004ShadowNo ratings yet

- Image Denoising Using Ica Technique: IPASJ International Journal of Electronics & Communication (IIJEC)Document5 pagesImage Denoising Using Ica Technique: IPASJ International Journal of Electronics & Communication (IIJEC)International Journal of Application or Innovation in Engineering & ManagementNo ratings yet

- Video Coding SchemeDocument12 pagesVideo Coding SchemezfzmkaNo ratings yet

- A Prospective Study On Algorithms Adapted To The Spatial Frequency in TomographyDocument6 pagesA Prospective Study On Algorithms Adapted To The Spatial Frequency in TomographySChouetNo ratings yet

- Image Reconstruction 2Document14 pagesImage Reconstruction 2Bilge MiniskerNo ratings yet

- Blur Parameter Identification Using Support Vector Machine: Ratnakar Dash, Pankaj Kumar Sa, and Banshidhar MajhiDocument4 pagesBlur Parameter Identification Using Support Vector Machine: Ratnakar Dash, Pankaj Kumar Sa, and Banshidhar MajhiidesajithNo ratings yet

- Amiri Et Al - 2019 - Fine Tuning U-Net For Ultrasound Image SegmentationDocument7 pagesAmiri Et Al - 2019 - Fine Tuning U-Net For Ultrasound Image SegmentationA BCNo ratings yet

- Rescue AsdDocument8 pagesRescue Asdkoti_naidu69No ratings yet

- IP2000Document6 pagesIP2000jimakosjpNo ratings yet

- A Multi-Feature Visibility Processing Algorithm For Radio Interferometric Imaging On Next-Generation TelescopesDocument14 pagesA Multi-Feature Visibility Processing Algorithm For Radio Interferometric Imaging On Next-Generation TelescopesAnonymous FGY7goNo ratings yet

- A New Approach To Remove Gaussian Noise in Digital Images by Immerkaer Fast MethodDocument5 pagesA New Approach To Remove Gaussian Noise in Digital Images by Immerkaer Fast MethodGeerthikDotSNo ratings yet

- Segmentation of Range and Intensity Image Sequences by ClusteringDocument5 pagesSegmentation of Range and Intensity Image Sequences by ClusteringSeetha ChinnaNo ratings yet

- Bayesian Stochastic Mesh Optimisation For 3D Reconstruction: George Vogiatzis Philip Torr Roberto CipollaDocument12 pagesBayesian Stochastic Mesh Optimisation For 3D Reconstruction: George Vogiatzis Philip Torr Roberto CipollaneilwuNo ratings yet

- Improved Nonlocal Means Based On Pre Classification and Invariant Block MatchingDocument7 pagesImproved Nonlocal Means Based On Pre Classification and Invariant Block MatchingIAEME PublicationNo ratings yet

- Simultaneous Fusion, Compression, and Encryption of Multiple ImagesDocument7 pagesSimultaneous Fusion, Compression, and Encryption of Multiple ImagessrisairampolyNo ratings yet

- (X) Small Object Detection and Tracking - Algorithm, Analysis and Application (2005)Document10 pages(X) Small Object Detection and Tracking - Algorithm, Analysis and Application (2005)Hira RasabNo ratings yet

- Discrete Wavelet Transform Based Image Fusion and De-Noising in FpgaDocument10 pagesDiscrete Wavelet Transform Based Image Fusion and De-Noising in FpgaRudresh RakeshNo ratings yet

- Vision Por ComputadoraDocument8 pagesVision Por ComputadoraMatthew DorseyNo ratings yet

- Real Time Ultrasound Image DenoisingDocument14 pagesReal Time Ultrasound Image DenoisingjebileeNo ratings yet

- Evaluating popular non-linear image filters for CT reconstructionDocument2 pagesEvaluating popular non-linear image filters for CT reconstructionmyertenNo ratings yet

- Artur Loza Et Al. 2014Document4 pagesArtur Loza Et Al. 2014Kadhim1990No ratings yet
