Improved fast Gauss transform

User manual

VIKAS CHANDRAKANT RAYKAR, CHANGJIANG YANG, and RAMANI DURAISWAMI

Department of Computer Science, University of Maryland, College Park, MD 20783

{vikas,ramani}@cs.umd.edu

The code was written by Vikas C. Raykar and Changjiang Yang and is copyrighted under the Lesser GPL.

Categories and Subject Descriptors: [IFGT User Manual]: October 18, 2006

1. OKAY, WHAT IS THE GAUSS TRANSFORM?

In the scientific computing literature, the sum of multivariate Gaussian kernels is called the discrete Gauss transform. More formally, for each target point $y_j \in \mathbb{R}^d$ the discrete Gauss transform is defined as

$$G(y_j) = \sum_{i=1}^{N} q_i \, e^{-\|y_j - x_i\|^2 / h^2}, \qquad (1)$$

where

$\{q_i \in \mathbb{R}\}_{i=1,\ldots,N}$ are the source weights,

$\{x_i \in \mathbb{R}^d\}_{i=1,\ldots,N}$ are the source points, i.e., the centers of the Gaussians,

$\{y_j \in \mathbb{R}^d\}_{j=1,\ldots,M}$ are the target points,

and $h \in \mathbb{R}^+$ is the source scale or bandwidth.

In other words, $G(y_j)$ is the total contribution at $y_j$ of $N$ Gaussians centered at $x_i$, each with bandwidth $h$. Each Gaussian is weighted by the term $q_i$.
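As a concrete reading of Equation 1, the following is a minimal sketch of the direct evaluation using only base MATLAB/Octave functions. It follows the d x N and d x M layout used later in this manual; the data values and variable names are made up for illustration, and `bsxfun` is used only so that the snippet also runs on older MATLAB releases.

```matlab
% Direct O(M*N) evaluation of Equation 1 (illustrative sketch).
d = 2; N = 5; M = 3; h = 0.7;
X = [0.1 0.4 0.5 0.8 0.9;      % d x N source points
     0.2 0.9 0.5 0.1 0.7];
Y = [0.0 0.5 1.0;              % d x M target points
     0.0 0.5 1.0];
q = [1 2 -1 0.5 3];            % 1 x N source weights

% Squared distances: D2(j,i) = ||y_j - x_i||^2, an M x N matrix.
D2 = bsxfun(@plus, sum(Y.^2,1)', sum(X.^2,1)) - 2*(Y'*X);
D2 = max(D2, 0);               % guard against tiny negative round-off

% 1 x M result, G(j) = sum_i q_i * exp(-||y_j - x_i||^2 / h^2).
G = (exp(-D2/h^2) * q')';
```

Every one of the M*N kernel values is formed explicitly here, which is the cost that the fast algorithm is meant to avoid.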

2. HOW RELEVANT IS THIS TO MACHINE LEARNING?

In most kernel-based machine learning algorithms and in non-parametric statistics, the key computational task is to compute a linear combination of local kernel functions centered on the training data, i.e., $f(x) = \sum_{i=1}^{N} q_i k(x, x_i)$, which is the discrete Gauss transform for the Gaussian kernel. $f$ is the regression/classification function in the case of regularized least squares, Gaussian process regression, support vector machines, kernel regression, and radial basis function neural networks. For non-parametric density estimation it is the kernel density estimate. Also, many kernel methods, such as kernel principal component analysis and spectral clustering, involve computing the eigenvalues of the Gram matrix. Training Gaussian process machines involves the solution of a linear system of equations. Solutions to such problems can be obtained using iterative methods, where the dominant computation is the evaluation of $f(x)$.
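To illustrate one of these cases, the sketch below writes a one-dimensional kernel density estimate as a discrete Gauss transform. It assumes the kernel convention of Equation 1, $e^{-t^2/h^2}$, whose integral over the real line is $\sqrt{\pi}\,h$, so constant weights $q_i = 1/(N\sqrt{\pi}\,h)$ give an estimate that integrates to one. The sample values and variable names are invented for the example.

```matlab
% Kernel density estimate written as a discrete Gauss transform (1-D sketch).
x = [0.20 0.25 0.40 0.55 0.60 0.62 0.80];   % N sample points (sources)
N = numel(x);
h = 0.1;                                     % bandwidth
y = linspace(-1, 2, 3001);                   % evaluation grid (targets)

% exp(-t^2/h^2) integrates to sqrt(pi)*h, so these weights
% make the estimate integrate to one.
q = ones(1, N) / (N * sqrt(pi) * h);

% Direct Gauss transform: p_hat(j) = sum_i q_i * exp(-(y_j - x_i)^2 / h^2).
D2 = bsxfun(@minus, y', x).^2;               % M x N squared distances
p_hat = (exp(-D2 / h^2) * q')';

area = trapz(y, p_hat);                      % numerical integral, close to 1
```

Only the weights change between applications; the sum itself has the same form in each case.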

IFGT User Manual

Raykar and Duraiswami

3. WHAT IS THE IMPROVED FAST GAUSS TRANSFORM?

The computational complexity of evaluating the discrete Gauss transform (Equation 1) at $M$ target points is $O(MN)$. This makes the computation prohibitively expensive for large-scale problems. The improved fast Gauss transform (IFGT) is an $\epsilon$-exact approximation algorithm that reduces the computational complexity to $O(M + N)$, at the expense of reduced precision, which however can be arbitrary. The constant depends on the desired precision, the dimensionality of the problem, and the bandwidth. Given any $\epsilon > 0$, it computes an approximation $\hat{G}(y_j)$ to $G(y_j)$ such that the maximum absolute error relative to the total weight $Q = \sum_{i=1}^{N} |q_i|$ is upper bounded by $\epsilon$, i.e.,

$$\max_{y_j} \left[ \frac{|\hat{G}(y_j) - G(y_j)|}{Q} \right] \le \epsilon. \qquad (2)$$
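To make the error criterion in Equation 2 concrete, the sketch below measures it for a deliberately simple approximation: every source farther than a cutoff radius from the target is dropped. This is not the IFGT itself, and it is not the rule the distributed code uses to pick its cutoff radius; the names `rc`, `G_hat`, and `rel_err` are illustrative. Each dropped term is smaller than $|q_i| e^{-r_c^2/h^2}$ in magnitude, so choosing $r_c = h\sqrt{\ln(1/\epsilon)}$ keeps the left-hand side of Equation 2 below $\epsilon$.

```matlab
% Measure the error criterion of Equation 2 for a cutoff-based approximation.
N = 200; M = 150; h = 0.3; epsil = 1e-3;
x = linspace(0, 1, N);                 % 1-D sources (deterministic for repeatability)
y = linspace(0, 1, M);                 % 1-D targets
q = cos(7 * (1:N));                    % weights of mixed sign

D2 = bsxfun(@minus, y', x).^2;         % M x N squared distances
Kmat = exp(-D2 / h^2);
G = (Kmat * q')';                      % exact transform, 1 x M

rc = h * sqrt(log(1 / epsil));         % cutoff radius with exp(-rc^2/h^2) = epsil
Kcut = Kmat .* (D2 <= rc^2);           % zero out sources beyond the cutoff
G_hat = (Kcut * q')';                  % approximate transform

Q = sum(abs(q));                       % total weight, note the absolute value
rel_err = max(abs(G_hat - G)) / Q;     % left-hand side of Equation 2
```

Because the weights here have mixed signs, normalizing by `sum(abs(q))` rather than `sum(q)` is what matches the definition of $Q$.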

4. WHY IMPROVED?

The IFGT [Raykar et al. 2005; Yang et al. 2005] is an improved version of the

Fast Gauss Transform (FGT) which belongs to the more general fast multipole

methods [Greengard and Rokhlin 1987]. The FGT was first proposed in [Greengard

and Strain 1991] and applied successfully to a few lower dimensional applications

in mathematics and physics. However, the algorithm has not been widely used in statistics, pattern recognition, and machine learning applications, where higher dimensions occur commonly. An important reason for the lack of use of the algorithm in these areas is that the performance of the proposed FGT degrades exponentially with increasing dimensionality. The FGT is practical up to three dimensions. For the FGT the constant factor in $O(M + N)$ grows exponentially with increasing dimensionality $d$. For the IFGT the constant factor is reduced to asymptotically polynomial order. The reduction is based on a new multivariate Taylor series expansion scheme combined with efficient space subdivision using the k-center algorithm.
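The factorization behind the Taylor series scheme can be sketched in one dimension with a single expansion center $c$. Because $(y - x)^2 = (y - c)^2 + (x - c)^2 - 2(y - c)(x - c)$, the kernel splits as $e^{-(y-x)^2/h^2} = e^{-(y-c)^2/h^2}\, e^{-(x-c)^2/h^2}\, e^{2(y-c)(x-c)/h^2}$, and only the last factor couples source and target. Truncating its Taylor series after `p` terms separates the sum over sources from the evaluation at targets. The code below is an illustrative sketch under these assumptions (one cluster, d = 1, a hand-picked `p`), not the distributed implementation, and all variable names are hypothetical.

```matlab
% 1-D, single-center truncated Taylor expansion of the Gauss transform.
N = 400; M = 300; h = 0.5; p = 10;
x = linspace(0.3, 0.7, N);             % sources clustered around c
y = linspace(0.0, 1.0, M);             % targets
q = sin(3 * (1:N));                    % source weights
c = 0.5;                               % expansion center

dx = (x - c) / h;                      % scaled source offsets, 1 x N
dy = (y - c) / h;                      % scaled target offsets, 1 x M

% Coefficients C(k+1) = (2^k / k!) * sum_i q_i exp(-dx_i^2) dx_i^k : O(p*N) work.
C = zeros(1, p);
for k = 0:p-1
    C(k+1) = (2^k / factorial(k)) * sum(q .* exp(-dx.^2) .* dx.^k);
end

% Evaluation G_hat(j) = exp(-dy_j^2) * sum_k C(k+1) dy_j^k : O(p*M) work.
G_hat = zeros(1, M);
for k = 0:p-1
    G_hat = G_hat + C(k+1) * dy.^k;
end
G_hat = G_hat .* exp(-dy.^2);

% Direct O(M*N) reference and the error measure of Equation 2.
G = (exp(-bsxfun(@minus, y', x).^2 / h^2) * q')';
rel_err = max(abs(G_hat - G)) / sum(abs(q));
```

In $d$ dimensions the same truncation keeps the monomials of total degree below $p$, of which there are $\binom{p-1+d}{d}$. For fixed $p$ this count grows polynomially in $d$, whereas a tensor-product expansion with $p$ terms per dimension needs $p^d$ terms.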

5. CAN YOU BRIEFLY DESCRIBE THE ALGORITHM?

A complete, detailed description of the algorithm can be found in our technical report [Raykar et al. 2005]. The algorithm has four stages.

(1) Determine the parameters of the algorithm based on the specified error bound, kernel bandwidth, and data distribution.

(2) Subdivide the d-dimensional space using a k-center clustering based geometric data structure.

(3) Build a p-truncated representation of the kernels inside each cluster using a set of decaying basis functions.

(4) Collect the influence of all the data in a neighborhood using the coefficients at the cluster centers and evaluate.
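Stage (2) can be illustrated with the greedy farthest-point heuristic for k-center clustering (section 7 refers to the space subdivision as farthest point clustering). The sketch below is a plain base-MATLAB version that follows this manual's conventions (d x N data, cluster labels from 0 to K-1). It is a stand-in written for illustration, not the `KCenterClustering` routine shipped with the code, and the choice of the first center is arbitrary.

```matlab
% Greedy farthest-point clustering: each new center is the point
% farthest from all centers chosen so far.
d = 2; N = 12; K = 3;
X = [0.05 0.10 0.12 0.08  0.90 0.95 0.88 0.92  0.50 0.45 0.55 0.52;
     0.10 0.05 0.12 0.15  0.10 0.15 0.05 0.12  0.90 0.95 0.92 0.88];   % d x N

centerIdx = zeros(1, K);
centerIdx(1) = 1;                               % arbitrary first center
dist = inf(1, N);                               % distance to nearest center so far
ClusterIndex = zeros(1, N);                     % labels in 0..K-1

for k = 1:K
    diffs = bsxfun(@minus, X, X(:, centerIdx(k)));
    dk = sqrt(sum(diffs.^2, 1));                % distance of every point to center k
    closer = dk < dist;
    dist(closer) = dk(closer);
    ClusterIndex(closer) = k - 1;
    if k < K
        [~, centerIdx(k+1)] = max(dist);        % farthest point becomes next center
    end
end

ClusterCenter = X(:, centerIdx);                % d x K cluster centers
rx = max(dist);                                 % maximum cluster radius
```

The maximum cluster radius `rx` is the quantity that later drives the choice of truncation number, which is why it is worth returning from the clustering step.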

6. HOW DIFFERENT IS IT FROM THE NIPS 2005 PAPER YOU HAD?

The core IFGT algorithm was first presented in [Yang et al. 2005]. The error bound

proposed in the original paper was not tight enough to be useful in practice. Also, the paper


did not suggest any strategy for choosing the parameters to achieve the desired

bound. Users found the selection of parameters hard. Incorrect choice of algorithm parameters by some authors sometimes led to poor reported performance of the IFGT.

This version provides a method for automatically choosing the parameters.

7. ALL RIGHT, TELL ME HOW TO USE THE CODE?

The IFGT consists of three phases: parameter selection, space subdivision using

farthest point clustering, and the core IFGT algorithm. Refer to example.m in the

distribution.

--------------------------------------------------------------------
Gather the input data and the following parameters
--------------------------------------------------------------------
% the data dimensionality
d=2;
----------------------------
% the number of sources
N=1000;
----------------------------
% the number of targets
M=1000;
----------------------------
% d x N matrix of N source points in d dimensions.
X=rand(d,N);
----------------------------
% d x M matrix of M target points in d dimensions.
Y=rand(d,M);
----------------------------
% 1 x N vector of source weights (random weights assumed for this example).
q=rand(1,N);
----------------------------
% the bandwidth
h=0.7;
----------------------------
IF YOU HAVE NOT SCALED THE DATA TO LIE IN A UNIT HYPERCUBE DO IT NOW.
REMEMBER TO SCALE THE BANDWIDTH ALSO.
----------------------------
% the desired error. For many tasks I found the error in the range
% 1e-3 to 1e-6 to be sufficient. However epsil can be arbitrary.
epsil=1e-3;
----------------------------
% The upper limit on the number of clusters. If the number of clusters
% chosen is equal to Klimit then increase this value.
Klimit=round(0.2*sqrt(d)*100/h);
--------------------------------------------------------------------


--------------------------------------------------------------------
Step 1 Choose the parameters required by the IFGT
% K     -- number of clusters
% p_max -- maximum truncation number
% r     -- cutoff radius
[K,p_max,r]=ImprovedFastGaussTransformChooseParameters(d,h,epsil,Klimit);
--------------------------------------------------------------------
Step 2 Run the k-center clustering
% rx            -- maximum radius of the clusters
% ClusterIndex  -- ClusterIndex[i] varies between 0 and K-1.
% ClusterCenter -- d x K matrix of K cluster centers
% NumPoints     -- number of points in each cluster
% ClusterRadii  -- radius of each cluster
[rx,ClusterIndex,ClusterCenter,NumPoints,ClusterRadii]=...
KCenterClustering(d,N,X,double(K));
--------------------------------------------------------------------
Step 3 Update the truncation number
% Initially the truncation number was chosen based on an estimate
% of the maximum cluster radius. But now since we have already run
% the clustering algorithm we know the actual maximum cluster radius.
[p_max]=ImprovedFastGaussTransformChooseTruncationNumber(d,h,epsil,rx);
--------------------------------------------------------------------
Step 4 Compute the IFGT
% G_IFGT -- 1 x M vector of the Gauss Transform evaluated.
[G_IFGT]=ImprovedFastGaussTransform(d,N,M,X,h,q,Y,double(p_max),...
double(K),ClusterIndex,ClusterCenter,ClusterRadii,r,epsil);

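--------------------------------------------------------------------
Direct Computation
[G_direct]=GaussTransform(d,N,M,X,h,q,Y);
% relative error as in Equation 2, with Q = sum(abs(q))
IFGT_err=max(abs((G_direct-G_IFGT)))/sum(abs(q));
--------------------------------------------------------------------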
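The reminder in the listing to scale the data and the bandwidth can be carried out as sketched below. The sketch assumes a single common scale factor for all dimensions, which preserves the isotropic Gaussian: if every point is mapped to $(x - x_{\min})/s$, then using $h/s$ leaves every kernel value, and hence $G(y_j)$, unchanged. The variable names (`lo`, `s`, `Xs`, `Ys`, `hs`, `gt`) are illustrative and not part of the distributed code.

```matlab
% Scale sources and targets jointly into the unit hypercube and scale h to match.
X = [2 4 10 6; -3 1 0 5];            % d x N sources in original units
Y = [3 8; 4 -1];                     % d x M targets in original units
q = [1 -2 0.5 3];                    % 1 x N source weights
h = 2.5;                             % bandwidth in original units

lo = min([X Y], [], 2);              % per-dimension minimum over all points
s  = max(max([X Y], [], 2) - lo);    % largest extent over all dimensions

Xs = bsxfun(@minus, X, lo) / s;      % scaled sources, all entries in [0,1]
Ys = bsxfun(@minus, Y, lo) / s;      % scaled targets, all entries in [0,1]
hs = h / s;                          % bandwidth scaled by the same factor

% The transform is unchanged by the joint scaling (direct evaluation shown).
gt = @(A, B, w, bw) (exp(-max(bsxfun(@plus, sum(B.^2,1)', sum(A.^2,1)) ...
                     - 2*(B'*A), 0) / bw^2) * w')';
G_orig   = gt(X,  Y,  q, h);
G_scaled = gt(Xs, Ys, q, hs);
```

Sources and targets are scaled with the same offset and factor; scaling them separately, or scaling each dimension by its own range, would change the distances between points and therefore the result.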

8. WHY DON'T YOU GIVE ME A SINGLE DLL?

I have provided separate programs for parameter selection, space subdivision, and

the core IFGT algorithm. This is because when using the IFGT multiple times

with different q the parameter selection and k-center clustering have to be done

only once. Also anyone interested can try different space sub-division schemes.

There is also IFGT.m, a wrapper function which combines all of the above. Just provide the accuracy $\epsilon$.
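The benefit of separating the stages shows up in iterative solvers, where the same points and bandwidth are reused with a new weight vector in every iteration. The sketch below solves a regularized linear system $(K + \lambda I)\,a = t$ by conjugate gradients, calling a Gauss transform handle `gt` once per iteration. Here `gt` is a direct evaluation through a precomputed kernel matrix, used as a stand-in for a fast transform whose parameters and clustering would be computed once up front; `lambda`, `t`, and the loop itself are illustrative assumptions, not part of the distributed code.

```matlab
% Conjugate gradients for (K + lambda*I) a = t, where each iteration
% needs one Gauss transform with a different weight vector.
N = 50; h = 0.2; lambda = 0.1;
x = linspace(0, 1, N);                          % 1-D points (sources = targets)
t = sin(2*pi*x)';                               % right-hand side, N x 1

Kmat = exp(-bsxfun(@minus, x', x).^2 / h^2);    % computed once and reused
gt = @(w) Kmat * w;                             % stand-in for the fast transform

a = zeros(N, 1);
r = t - (gt(a) + lambda*a);                     % initial residual
pdir = r;                                       % initial search direction
rs = r' * r;
for it = 1:200
    Ap = gt(pdir) + lambda*pdir;                % one transform per iteration
    alpha = rs / (pdir' * Ap);
    a = a + alpha * pdir;
    r = r - alpha * Ap;
    rs_new = r' * r;
    if sqrt(rs_new) < 1e-10
        break;
    end
    pdir = r + (rs_new / rs) * pdir;
    rs = rs_new;
end

res = norm(Kmat*a + lambda*a - t);              % final residual norm
```

Only the argument of `gt` changes between iterations, so any setup that depends on the points and the bandwidth alone is paid for once.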

9. WHAT IS THIS DATA ADAPTIVE VERSION?

Another novel idea is that a different truncation number can be chosen for each

data point depending on its distance from the cluster center. A good consequence

of this strategy is that only a few points at the boundary of the clusters have

high truncation numbers. Theoretically we expect to get a much better speed up


since for many points $p_i < p_{\max}$. However some computation resources are used in

determining the truncation numbers based on the distribution of the data points.

As a result this scheme sometimes gives only a slight improvement especially when

there is structure in the data. The actual error is much closer to the target error.

--------------------------------------------------------------------
% G_DAIFGT -- 1 x M vector of the Gauss Transform evaluated.
% T        -- 1 x N vector truncation number used for each source.
--------------------------------------------------------------------
[G_DAIFGT,T]=DataAdaptiveImprovedFastGaussTransform(d,N,M,X,h,q,Y,...
double(p_max),double(K),ClusterIndex,ClusterCenter,ClusterRadii,r,epsil);
--------------------------------------------------------------------

10. HOW GOOD ARE THE PARAMETERS CHOSEN?

We have tried to make the error bounds as tight as possible. However, it is still a bound, and the actual error is much less than the target error, typically three orders of magnitude lower. So my suggestion is to go easy on the target error. Say, if you want an error of $10^{-6}$ you can safely set $\epsilon = 10^{-3}$. A good test is to try it out on a smaller sample so that you can compare the actual error with the target error. Empirically we also observed that the error does not vary significantly with the size of the dataset. Also, if you wish, you can experiment with different $K$ and $p$.

11. CAN YOU HANDLE VERY SMALL BANDWIDTHS?

For data scaled to a unit hypercube I got good speedups when the bandwidths scaled as $h = c\sqrt{d}$ (note that $\sqrt{d}$ is the length of the maximum diagonal of a unit hypercube) for some constant $c$. For very small kernel bandwidths, where each training point only influences its immediate vicinity, the speedup is poor. More importantly, the cutoff point (i.e., the problem size beyond which the IFGT takes less time than the direct method) increases, so the IFGT ceases to be useful for moderately sized datasets. It is not advisable to use the IFGT when the truncation number $p_{\max}$ is very high. For very small bandwidths you can use the dual-tree methods [Gray and Moore 2003], which rely only on data structures and not on series expansions. The dual-tree methods give good speedup for small bandwidths, while series-based methods such as the IFGT give good speedup for large bandwidths. For many machine learning tasks in large dimensions the bandwidth has to be moderately large to get good generalization. See Table I for a recommendation of which method to use.

12. WHAT ABOUT THE SPARSE DATA-SET REPRESENTATION METHODS?

There are many strategies for specific problems which try to reduce this computational complexity by searching for a sparse representation of the data. All these try

to find a reduced subset of the original data-set using either random selection or

greedy approximation. In these methods there is no guarantee on the approximation of the kernel matrix in a deterministic sense. The IFGT uses all the available

data. Also the IFGT can be combined with these methods to obtain much better

speedups.


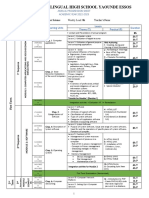

Table I. Summary of the better performing algorithms for different settings of dimension-

ality d and bandwidth h (assuming data is scaled to a unit hypercube). The bandwidth

ranges are approximate.

Small dimensions

d3

Moderate dimensions

3 < d < 10

Large dimensions

d 10

Dual tree

[kd-tree]

Dual tree

[kd-tree]

Dual tree

[Anchors]

Moderate bandwidth

0.1 h 0.5 d

FGT, IFGT

IFGT

Large bandwidth

h 0.5 d

FGT, IFGT

IFGT

Small bandwidth

h 0.1

IFGT

13. SO, WHAT NEXT?

So scale your data, use the code, mail me if you have any questions, brood over

things, and remember to have fun.

REFERENCES

Gray, A. G. and Moore, A. W. 2003. Nonparametric density estimation: Toward computational tractability. In SIAM International Conference on Data Mining.

Greengard, L. and Rokhlin, V. 1987. A fast algorithm for particle simulations. J. of Comp. Physics 73, 2, 325-348.

Greengard, L. and Strain, J. 1991. The fast Gauss transform. SIAM Journal of Scientific and Statistical Computing 12, 1, 79-94.

Raykar, V. C., Yang, C., Duraiswami, R., and Gumerov, N. 2005. Fast computation of sums of Gaussians in high dimensions. Tech. Rep. CS-TR-4767, Department of Computer Science, University of Maryland, College Park.

Yang, C., Duraiswami, R., and Davis, L. 2005. Efficient kernel machines using the improved fast Gauss transform. In Advances in Neural Information Processing Systems 17, L. K. Saul, Y. Weiss, and L. Bottou, Eds. MIT Press, Cambridge, MA, 1561-1568.
