Neuron

Previews

How to Perfect a Chocolate Soufflé and Other Important Problems

Timothy E.J. Behrens1,2,* and Gerhard Jocham1,*

1FMRIB Centre, University of Oxford, John Radcliffe Hospital, Oxford OX3 9DU, UK

2Wellcome Trust Centre for Neuroimaging, 12 Queen Square, London WC1N 3BG, UK

*Correspondence: behrens@fmrib.ox.ac.uk (T.E.J.B.), gjocham@fmrib.ox.ac.uk (G.J.)

DOI 10.1016/j.neuron.2011.07.004

When learning to achieve a goal through a complex series of actions, humans often group several actions into a subroutine and evaluate whether the subroutine achieved a specific subgoal. A new study reports brain responses consistent with such hierarchical reinforcement learning.

To culinary novices like ourselves, it seems something of a miracle that the chocolate soufflé came into existence. Baking a good soufflé requires so many complex steps and processes (http://www.bbcgoodfood.com/recipes/2922/hot-chocolate-souffl-) that, at first glance, it would seem to be an impossible art to perfect. When the first soufflé failed to rise, how did the chef know, for example, whether the ganache was under-velvety, or the crème patisserie over-floury? Current theories of how the brain learns from its successes and failures offer scant advice to the budding soufflist. However, in this issue of Neuron, Ribas-Fernandes and colleagues (2011) demonstrate neural correlates of a learning strategy that dramatically simplifies not only this important problem, but also nearly every real-world example of human learning.

Reinforcement learning (RL) is a central feature of human and animal behavior. Actions that result in good outcomes (termed rewards or reinforcers) are repeated more often than those that do not, increasing the likely number of future rewards. This simplistic form of learning can be ameliorated by keeping an estimate of precisely how much reward can be expected from any given action (an action's value). Now, high-value actions may be repeated more frequently than low-value ones, and, when outcomes are different from what was expected, action values may be updated to drive future behavior. This difference between received and expected reward is termed the reward prediction error (RPE) and is thought to be a major neural substrate for learning and behavioral control. Dopamine neurons in the primate and rodent midbrain show firing rate changes that appear remarkably consistent with prediction error signaling: firing rates increase when a reward is better than expected and decrease when worse than expected (Schultz, 2007). In rodents, causal interference with these neurons induces artificial learning (Tsai et al., 2009). In human imaging studies, it is also possible to find midbrain prediction-error signals (D'Ardenne et al., 2008), but, for technical reasons, such signals are more commonly found in dopaminoceptive regions in the striatum (O'Doherty, 2004) and prefrontal cortex (Rushworth and Behrens, 2008).

RL has had a tremendous impact on cognitive neuroscience due to its power in explaining behavioral and neural data. However, in the real world, simple actions rarely lead directly to rewards. Instead, the pursuit of reward (or soufflé) often requires many actions to be taken, each depending on the last. In such a world, it is a complex problem to understand how learning should occur when an outcome is different from expected (the soufflé won't rise), as it is not clear which actions or combinations of actions should be held responsible for a prediction error, and therefore which should be adjusted for the next attempt. Solving this problem using a standard RL approach becomes exponentially more difficult as the number of actions increases. Learning to cook a soufflé would seem an intractable problem!

In a complex world, then, standard RL approaches suffer because it is difficult to evaluate intermediate actions with respect to the final outcome, because they cannot distinguish one type of error from another, and because the number of possible actions they might choose from is immense. It is clear, however, that humans have more sophisticated strategies in their learning armory. One such strategy, well known to both computer scientists and chefs, is termed hierarchical reinforcement learning (HRL; Botvinick et al., 2009). Here, sequences of actions may be grouped together into subroutines (make a ganache or whip some egg whites). Each of these subroutines may be evaluated according to its own subgoals, and if these subgoals are not met, they will generate their own prediction errors. These pseudo-reward prediction errors (PPEs) are distinct from reward prediction errors because they are not associated with eventual reward, but with an internally set subgoal that is a stepping stone toward the eventual outcome. Hence, in a hierarchical framework, RPEs are used to learn which combinations of subroutines lead to rewarding outcomes, whereas PPEs are used to learn which combinations of actions (and sub-subroutines!) lead to a subgoal. Because they may only be attributed to the small number of actions in the subroutine, PPEs substantially reduce the complexity of learning (Figure 1): if the egg whites are droopy, it cannot be the chocolate's fault!

It is the neural correlates of these PPEs that form the focus of Ribas-Fernandes et al. (2011). Here, we suspect mainly for practical reasons, subjects were not asked to bake soufflés in the MRI scanner. Instead, they performed a task devised in the world of robotics to probe HRL. Using a joystick, participants navigated a lorry to collect a package and

Neuron 71, July 28, 2011 ©2011 Elsevier Inc. 203


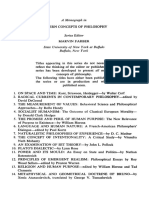

Figure 1. Conventional Chef Is Confused and Has No Soufflé, but Fortunately Hierarchical Chef Has Enough Soufflé for Everybody

In conventional reinforcement learning (A), the agent goes through all steps until the final goal is reached. If the soufflé is worse than expected, any of the actions may be to blame. The learning problem can be drastically simplified by hierarchical reinforcement learning (HRL, B). In this example, the agent learns three subroutines (SR1-SR3). Each of these subroutines leads to its associated subgoal (SG1-SG3). If one of the subgoals is not achieved, only the three candidate actions of the corresponding subroutine need to be evaluated.
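The contrast drawn in Figure 1 can be made concrete with a small sketch. It is an illustration only, not the model used by Ribas-Fernandes et al. (2011): the action names, the starting values of 1.0, the learning rate of 0.5, and the simple delta-rule update are all assumptions chosen for readability.

```python
# Hypothetical recipe: three subroutines (SR1-SR3), three actions each.
# The action names are invented for illustration.
SUBROUTINES = {
    "ganache": ["melt chocolate", "warm cream", "stir"],
    "creme patisserie": ["heat milk", "add flour", "thicken"],
    "egg whites": ["separate eggs", "whisk", "fold"],
}


def prediction_error(received, expected):
    """Received minus expected outcome (positive means better than expected)."""
    return received - expected


def blame_flat(subroutines):
    """Conventional RL: every action taken is a candidate for the final error."""
    return [a for actions in subroutines.values() for a in actions]


def blame_hierarchical(subroutines, subgoal_met):
    """HRL: only actions inside a subroutine whose subgoal failed are candidates."""
    return [
        a
        for name, actions in subroutines.items()
        if not subgoal_met[name]
        for a in actions
    ]


def update_values(values, blamed, error, learning_rate=0.5):
    """Delta-rule update (an assumed rule) applied only to the blamed actions."""
    return {
        a: v + learning_rate * error if a in blamed else v
        for a, v in values.items()
    }


# Every action starts with the same expected value (an arbitrary choice).
values = {a: 1.0 for a in blame_flat(SUBROUTINES)}

# The egg whites are droopy: that subgoal fails, the other two are met.
subgoal_met = {"ganache": True, "creme patisserie": True, "egg whites": False}

# Pseudo-reward prediction error for the failed subgoal (expected 1, got 0).
ppe = prediction_error(received=0.0, expected=1.0)

flat = blame_flat(SUBROUTINES)
hier = blame_hierarchical(SUBROUTINES, subgoal_met)
new_values = update_values(values, hier, ppe)

print(len(flat), "candidate actions without subgoals")
print(len(hier), "candidate actions with subgoals:", hier)
```

Without subgoals, all nine actions remain candidates for the failure. With subgoals, the negative PPE lowers the values of only the three egg-white actions and leaves the ganache untouched.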

deliver it to a target location. In this task, there is one final goal (delivery of the package to the target), which can be split into two subroutines (driving to collect the package and transporting the package to the target). Ingeniously, in some trials the experimenter moves the package such that the distance to the subgoal (the package) will change but the overall distance to the eventual target will remain the same. This causes a PPE with no associated RPE (as the subject may be further from the package but is equally far from eventual reward). In other trials, the experimenter again moves the package, but now to a spot selected such that distances to both subgoal and target remain the same, eliciting neither type of prediction error. Hence, by comparing neural activity between these trial types, the authors are able to isolate responses caused by PPEs.

How, then, would the brain respond to a pseudo-reward prediction error? A number of possibilities seemed reasonable. Hierarchical organization is already thought to exist in the lateral prefrontal cortex, with more rostral regions representing more abstract and temporally extended plans (make ganache) and more caudal regions executing more concrete and immediate actions (snap chocolate bar) (Koechlin et al., 2003). Might hierarchical PPE mechanisms utilize this existing hierarchy? Alternatively, representations of specific goals and outcomes can be found in the ventromedial prefrontal and orbitofrontal (Burke et al., 2008) cortices. Might these same regions update subgoal representations? In a series of three experiments, the authors demonstrate activity that is instead consistent with a third hypothesis: neural responses to pseudo-reward prediction errors show remarkable similarity to familiar RPE responses.

Using EEG, previous studies have shown RPE correlations in a characteristic midline voltage wave termed the feedback-related negativity (FRN; Holroyd and Krigolson, 2007). In the current study, this same negative deflection can be seen in response to a PPE. The source of the FRN is often assumed to lie in the dorsal anterior cingulate cortex (ACC), and, when the hierarchical task is taken into the MRI scanner, PPE-related activity is indeed found in the ACC BOLD signal (Ribas-Fernandes et al., 2011). While reward prediction errors can be found in single-unit activity in the ACC (Matsumoto et al., 2007), the current observation by Ribas-Fernandes et al. (2011) that pseudo-rewards, as well as fictive rewards (Hayden et al., 2009), cause similar activity requires a theory of ACC processing that goes beyond simple reward-and-error processing. One suggestion is that activity in the region is more concerned with behavioral update caused by the outcome than caused by the reward prediction error per se (Rushworth and Behrens, 2008).

Further similarities can be found in subcortical structures. PPEs, like RPEs, are coded positively in the ventral striatum and negatively in the habenular complex. Although it is not yet clear whether the reported PPE activity recruits the dopaminergic mechanisms famous for coding RPEs, this latter finding makes it a likely possibility. Cells in the monkey lateral habenula not only code RPEs negatively, but they also causally inhibit the firing of dopamine cells in the ventral tegmental area (Matsumoto and Hikosaka, 2007). The data presented in Ribas-Fernandes et al. (2011) therefore raise the possibility that prediction error responses at different levels of a hierarchical learning problem recruit the same neuronal mechanisms.

Previous theories have considered the role of dopamine in learning from rewarding events. It is now likely that these same mechanisms can control the learning of complex internal goals and subgoals. As we move to more complex models of learning, the potential for common prediction error mechanisms places strong constraints on the types of models that should be considered. However, this idea immediately raises a new problem. How


does the brain know which level of the hierarchy has generated the error? Theoretically, RPEs and PPEs can be generated by the same event, even in opposite directions. Should the value of the action or the value of the subroutine be updated? This question is left unaddressed in the current study, but an intriguing possibility is that the hierarchical organization in the prefrontal cortex can solve this problem in concert with the striatum. Striatal circuits may gate error signals to the appropriate prefrontal cells (Badre and Frank, 2011).

By arranging actions and combinations of actions into a hierarchy, and by introducing intermediate subgoals, HRL can explain complex behaviors that cannot be explained by more traditional learning theories. Not only is learning dramatically simplified, but also subroutines can be transferred between learning problems. Egg-whisking skills perfected during soufflé baking may prove useful for tomorrow night's lemon mousse. More prosaically, the complex sequence of muscle commands required, for example, to move a limb may be combined into a single subroutine (or action!) and used in a wide variety of situations. However, humans also exhibit behavioral flexibility that cannot be explained by HRL strategies. For example, if an apple falls from a tree on a windy day, the next day we might shake the tree and expect another to fall, even if we have never shaken a tree before. If the soufflé is burnt, it is more likely due to too much time in the oven than to too much chocolate in the ganache. This type of learning relies on a causal understanding (or model) of the world and our interactions with it and is also a major recent focus in behavioral neuroscience (Daw et al., 2011). It is hoped that by studying such strategies both separately and in combination, modern neuroscientists will make big strides toward understanding the determinants of human behavior.

REFERENCES

Badre, D., and Frank, M.J. (2011). Cereb. Cortex, in press. Published online June 21, 2011.

Botvinick, M.M., Niv, Y., and Barto, A.C. (2009). Cognition 113, 262-280.

Burke, K.A., Franz, T.M., Miller, D.N., and Schoenbaum, G. (2008). Nature 454, 340-344.

D'Ardenne, K., McClure, S.M., Nystrom, L.E., and Cohen, J.D. (2008). Science 319, 1264-1267.

Daw, N.D., Gershman, S.J., Seymour, B., Dayan, P., and Dolan, R.J. (2011). Neuron 69, 1204-1215.

Hayden, B.Y., Pearson, J.M., and Platt, M.L. (2009). Science 324, 948-950.

Holroyd, C.B., and Krigolson, O.E. (2007). Psychophysiology 44, 913-917.

Koechlin, E., Ody, C., and Kouneiher, F. (2003). Science 302, 1181-1185.

Matsumoto, M., and Hikosaka, O. (2007). Nature 447, 1111-1115.

Matsumoto, M., Matsumoto, K., Abe, H., and Tanaka, K. (2007). Nat. Neurosci. 10, 647-656.

O'Doherty, J.P. (2004). Curr. Opin. Neurobiol. 14, 769-776.

Ribas-Fernandes, J.J.F., Solway, A., Diuk, C., McGuire, J.T., Barto, A.G., Niv, Y., and Botvinick, M.M. (2011). Neuron 71, this issue, 370-379.

Rushworth, M.F., and Behrens, T.E. (2008). Nat. Neurosci. 11, 389-397.

Schultz, W. (2007). Annu. Rev. Neurosci. 30, 259-288.

Tsai, H.C., Zhang, F., Adamantidis, A., Stuber, G.D., Bonci, A., de Lecea, L., and Deisseroth, K. (2009). Science 324, 1080-1084.
