Professional Documents

Culture Documents

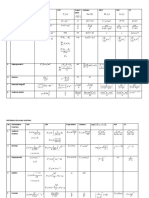

EE 6340: Information Theory Quiz II - Apr/10/2014: I TH I TH 1 2 3 4

Uploaded by

Dominic MendozaOriginal Title

Copyright

Available Formats

Share this document

Did you find this document useful?

Is this content inappropriate?

Report this DocumentCopyright:

Available Formats

EE 6340: Information Theory Quiz II - Apr/10/2014: I TH I TH 1 2 3 4

Uploaded by

Dominic MendozaCopyright:

Available Formats

EE 6340: Information Theory

Quiz II - Apr/10/2014

Remarks

• You are allowed to bring one formula sheet (hand-written).

• If anything is not clear, make/state your assumptions and proceed.

Problems

1. (5 pts) Let a source $X$ have four outcomes $\{1, 2, 3, 4\}$, with $p_i$ the probability of the $i$-th outcome. Suppose a binary Huffman code is constructed for $X$, and let $\ell_i$ denote the length of the codeword for the $i$-th outcome. It is given that $p_1 > p_2 = p_3 = p_4$.

(a) Show that if $p_1 > 0.4$ then $\ell_1 = 1$.

(b) Show (by example) that if $p_1 = 0.4$ then a Huffman code exists with $\ell_1 > 1$.
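As a hedged illustration of part (b), and not a substitute for the requested argument, the sketch below runs the standard Huffman merging procedure on the probabilities (0.4, 0.2, 0.2, 0.2) under two tie-breaking rules. The function name `huffman_lengths` and the `prefer_merged` switch are hypothetical names introduced here; the quiz does not prescribe any tie-breaking rule.

```python
import heapq
from fractions import Fraction


def huffman_lengths(probs, prefer_merged):
    """Return binary Huffman codeword lengths for `probs`.

    `prefer_merged` is a hypothetical tie-breaking switch: among nodes of
    equal probability, merged nodes are popped first when it is True and
    original symbols are popped first when it is False.
    """
    lengths = [0] * len(probs)
    leaf_rank, merged_rank = (1, 0) if prefer_merged else (0, 1)
    # Heap entries: (probability, tie rank, unique counter, symbols below node).
    heap = [(p, leaf_rank, i, [i]) for i, p in enumerate(probs)]
    heapq.heapify(heap)
    counter = len(probs)
    while len(heap) > 1:
        p_a, _, _, syms_a = heapq.heappop(heap)
        p_b, _, _, syms_b = heapq.heappop(heap)
        for s in syms_a + syms_b:
            lengths[s] += 1  # every symbol under the new node gains one bit
        heapq.heappush(heap, (p_a + p_b, merged_rank, counter, syms_a + syms_b))
        counter += 1
    return lengths


# Exact fractions avoid floating-point ties being broken by rounding.
probs = [Fraction(2, 5), Fraction(1, 5), Fraction(1, 5), Fraction(1, 5)]
for prefer_merged in (False, True):
    lengths = huffman_lengths(probs, prefer_merged)
    average = sum(p * l for p, l in zip(probs, lengths))
    print(prefer_merged, lengths, average)
```

With these inputs the first rule yields lengths [2, 2, 2, 2] and the second yields [1, 3, 3, 2]. Both have expected length 2, so both are valid Huffman codes and only the tie-breaking differs.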

2. (5 pts) Let $X$ be a random variable with $K$ outcomes. Let $H_3(X)$ denote the entropy of $X$ in ternary units. An instantaneous ternary code is found for this source with expected code length $L = H_3(X)$. Show that $K$ is odd.
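A small numerical sketch may help build intuition for Problem 2. It illustrates the claim and does not prove it. It assumes the equality case $p_i = 3^{-\ell_i}$ and builds the length profile of a full ternary code tree by splitting leaves. The helper names `split_leaf` and `ternary_entropy` are hypothetical and introduced only for this example.

```python
from fractions import Fraction
import math


def split_leaf(lengths, i):
    """Replace leaf i (depth d) of a full ternary tree by three leaves at depth d + 1."""
    d = lengths[i]
    return lengths[:i] + [d + 1] * 3 + lengths[i + 1:]


def ternary_entropy(probs):
    """Entropy in ternary units (logarithm to base 3)."""
    return -sum(float(p) * math.log(float(p), 3) for p in probs if p > 0)


lengths = [1, 1, 1]               # root with three children, K = 3
lengths = split_leaf(lengths, 2)  # K = 5: lengths [1, 1, 2, 2, 2]
probs = [Fraction(1, 3 ** l) for l in lengths]  # assumed: p_i = 3^(-l_i)

kraft_sum = sum(Fraction(1, 3 ** l) for l in lengths)
avg_len = sum(p * l for p, l in zip(probs, lengths))
print(len(lengths), kraft_sum, avg_len, ternary_entropy(probs))
```

Each split removes one leaf and adds three, so the number of codewords changes by two. In this example the Kraft sum is exactly 1 and the expected length 4/3 matches the ternary entropy up to floating-point rounding.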

3. (10 pts) Consider the 3-input 3-output discrete memoryless channel illustrated below.

(a) What is the channel capacity for p = 1?

(b) What is the channel capacity for p = 0.5?

(c) Show that, for any value of p, channel capacity C ≥ 1 (bit per channel use).

(d) Find the channel capacity for a general value of p.

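Figure (channel transition diagram, input X on the left, output Y on the right):

X = 0 → Y = 0 with probability 1−p; X = 0 → Y = 1 with probability p

X = 1 → Y = 0 with probability p; X = 1 → Y = 1 with probability 1−p

X = 2 → Y = 2 with probability 1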
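Hand-derived answers to Problem 3 can be checked numerically. The sketch below uses the Blahut-Arimoto iteration, a generic numerical method that the quiz itself does not ask for. It assumes one reading of the diagram: inputs 0 and 1 form a binary symmetric channel with crossover probability p, and input 2 is received without error. The names `channel_matrix` and `blahut_arimoto` are hypothetical.

```python
import math


def channel_matrix(p):
    """W[x][y] = P(Y = y | X = x) under the assumed reading of the diagram."""
    return [[1 - p, p, 0.0],
            [p, 1 - p, 0.0],
            [0.0, 0.0, 1.0]]


def blahut_arimoto(W, tol=1e-12, max_iter=10_000):
    """Estimate the capacity (bits per channel use) of a DMC with rows W[x]."""
    n_in, n_out = len(W), len(W[0])
    r = [1.0 / n_in] * n_in  # start from the uniform input distribution
    lower = 0.0
    for _ in range(max_iter):
        # Output distribution induced by the current input distribution.
        q = [sum(r[x] * W[x][y] for x in range(n_in)) for y in range(n_out)]
        # c[x] = exp(D(W(.|x) || q)), skipping zero-probability transitions.
        c = [math.exp(sum(W[x][y] * math.log(W[x][y] / q[y])
                          for y in range(n_out) if W[x][y] > 0))
             for x in range(n_in)]
        total = sum(r[x] * c[x] for x in range(n_in))
        # Standard lower and upper bounds on the capacity, in nats.
        lower, upper = math.log(total), math.log(max(c))
        r = [r[x] * c[x] / total for x in range(n_in)]
        if upper - lower < tol:
            break
    return lower / math.log(2)  # convert nats to bits


for p in (1.0, 0.5, 0.1):
    print(p, blahut_arimoto(channel_matrix(p)))
```

The printed values for p = 1 and p = 0.5 can be compared against parts (a) and (b), and sweeping p over [0, 1] gives a quick sanity check of the bound in part (c).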
