Standard Deviation and Variance

Uploaded by Jannela yernaidu

The standard deviation is the most commonly used measure of variability. It is based on the distance between each observation and the mean. For example, suppose we have the following set of numbers: 10, 20, 30, 40, 50, 60, 70, 80, 90, and 100. The mean is 55 ((10 + 20 + 30 + … + 100) / 10). How can the variability around the mean best be defined? Simply adding up all the distances from the mean is inappropriate, as this gives the deviations -45 (= 10 – 55), -35, -25, -15, -5, 5, 15, 25, 35, and 45. The sum of such deviations is always 0, which of course says nothing about the variability. It is more appropriate to turn all distances into absolute distances (that is, multiplying the negative numbers by -1). The sum then amounts to 250 (45 + 35 + 25 + 15 + 5 + 5 + 15 + 25 + 35 + 45). This sum, divided by the number of observations, yields the mean absolute distance: 250 / 10 = 25. However, this absolute measure is not often used because it does not relate well to inferential statistics (see chapter 3).
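The arithmetic above can be checked with a few lines of Python. This is only an illustrative sketch; the variable names (`data`, `deviations`, `mean_abs_distance`) are chosen here and do not come from the original text.

```python
# The ten observations from the example.
data = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]

mean = sum(data) / len(data)                  # 55.0

# Signed distances from the mean: -45, -35, ..., 35, 45.
deviations = [x - mean for x in data]
sum_of_deviations = sum(deviations)           # 0.0, so uninformative

# Absolute distances: drop the sign before summing.
sum_of_abs = sum(abs(d) for d in deviations)  # 250.0
mean_abs_distance = sum_of_abs / len(data)    # 25.0

print(mean, sum_of_deviations, sum_of_abs, mean_abs_distance)
```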

Another strategy is to sum the squared distances (a

negative score turns positive when squared). This results

in a sum of 8250 (= -452 + -352 + -252 + -152 + -52 + 52

+ 152 + 252 + 352 + 452 = 2025 + 1225 + 625 + 225 + 25

+ 25 + 225 + 625 + 1225 + 2025). By dividing this sum

by the number of observations (10), the average squared

distance to the mean equals 825. In statistics, this

number is known as the variance. The variance can be

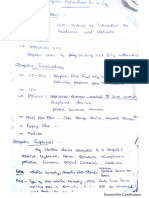

compared to the area of a square (see Figure 2.20)

Sides = 28.72

Area = 28.72 * 28.72 = 825

Figure 2.20 Variance Compared to the Area of a Square
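As a rough check of the sum of squares and the variance described above, the same steps can be written out in Python. The names used (`squared_distances`, `variance`) are illustrative assumptions, and the division is by n, as in the text.

```python
data = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]
n = len(data)
mean = sum(data) / n

# Squared distance of each observation to the mean: 2025, 1225, ..., 2025.
squared_distances = [(x - mean) ** 2 for x in data]

sum_of_squares = sum(squared_distances)   # 8250.0
variance = sum_of_squares / n             # 825.0 (divided by n)

print(sum_of_squares, variance)
```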

In statistics, variability is preferably expressed as a distance rather than a squared distance (i.e., a square). Therefore the square root of 825 (≈ 28.72) is taken (this value equals the length of the sides in Figure 2.20), and the resulting measure is called the standard deviation (see note i). Roughly, the standard deviation can be interpreted as the average distance from the mean, although mathematically this is not correct (see note ii).
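A short sketch of this last step, assuming the variance of 825 from the example: the square root is taken with `math.sqrt`, and the result is compared with `statistics.pstdev` from Python's standard library, which also divides by n.

```python
import math
import statistics

data = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]

variance = 825.0
std_dev = math.sqrt(variance)          # length of the side of the square

# Cross-check against the standard library's population standard deviation.
std_dev_check = statistics.pstdev(data)

print(round(std_dev, 2))               # 28.72
```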

i

In our example on p. 38 we divided by the total number of observations (n):

$$\sigma^2 = \frac{\sum_{i=1}^{n} (x_i - \mu)^2}{n}$$

The standard deviation is obtained by taking the square root: $\sigma = \sqrt{\sigma^2}$. These formulas are correct when dealing with a population. In a random sample the best estimates of the variance and the standard deviation in the population are obtained with n − 1 as the divisor:

$$s^2 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})^2}{n - 1}$$

The standard deviation (s) in the random sample is $s = \sqrt{s^2}$.
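To make the difference between the two divisors concrete, the sketch below applies both pairs of formulas to the ten numbers from the example, using Python's standard `statistics` module. Treating the same ten numbers first as a population and then as a sample is an assumption made here purely for illustration.

```python
import statistics

data = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]

# Population formulas: divide the sum of squares (8250) by n = 10.
sigma_squared = statistics.pvariance(data)   # 825
sigma = statistics.pstdev(data)              # about 28.72

# Sample formulas: divide the same sum of squares by n - 1 = 9.
s_squared = statistics.variance(data)        # about 916.67
s = statistics.stdev(data)                   # about 30.28

print(sigma_squared, round(sigma, 2), round(s_squared, 2), round(s, 2))
```

With only ten observations the choice of divisor changes the result noticeably; the gap shrinks as n grows.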

With n – 1 as the divisor, the variance within a random sample (s²) is an unbiased estimate of the variance within the population (σ²). Division by n would result in a systematic underestimation of σ². The reason for this bias is interdependency: the distances are measured from the sample mean, which is itself calculated from the same observations (dividing the total sum by n yields the mean). In statistics this interdependency is expressed as the degrees of freedom (df). In this case df equals n – 1. This simply means that n – 1 observations and the mean suffice to calculate the exact value of the last observation. Statistical packages, including SPSS, calculate s² and s.
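Both claims in this note can be illustrated with a small sketch. The first part recovers the last observation from the other n – 1 observations and the mean. The second part is a simulation that is not in the original text: it assumes the ten example numbers form the whole population (σ² = 825), draws many random samples of size 5 with replacement, and averages the two variance estimates. The function name, sample size, number of trials, and seed are all arbitrary choices made here.

```python
import random

population = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]
n = len(population)
mean = sum(population) / n

# Degrees of freedom: n - 1 observations plus the mean fix the last one.
last_observation = n * mean - sum(population[:-1])
print(last_observation)  # 100.0


def average_variance_estimates(sample_size, trials, seed=0):
    """Average the n-divisor and (n - 1)-divisor estimates over many samples."""
    rng = random.Random(seed)
    total_n = 0.0
    total_n_minus_1 = 0.0
    for _ in range(trials):
        sample = rng.choices(population, k=sample_size)  # with replacement
        sample_mean = sum(sample) / sample_size
        ss = sum((x - sample_mean) ** 2 for x in sample)
        total_n += ss / sample_size
        total_n_minus_1 += ss / (sample_size - 1)
    return total_n / trials, total_n_minus_1 / trials


avg_n, avg_n_minus_1 = average_variance_estimates(sample_size=5, trials=20000)
print(avg_n, avg_n_minus_1)
```

Under these assumptions the average obtained with n – 1 should land close to 825, while the average obtained with n should be roughly one fifth lower.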

ii

The variance is the average squared distance to the mean. However, it is erroneous to think that the standard deviation (the square root of the variance) equals the average distance to the mean. The square root of a sum of squared numbers is not equal to the sum of those numbers. Example: √(2² + 3²) = √(4 + 9) ≈ 3.6, but 2 + 3 = 5. Nevertheless, we believe that a concept like the standard deviation is better understood if one defines it roughly as ‘the average distance to the mean’.
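The point of this note can be verified numerically. The sketch below first reproduces the small example with 2 and 3, then compares the standard deviation of the ten numbers from the main text with their mean absolute distance to the mean; the variable names are illustrative.

```python
import math

# Square root of a sum of squares versus the plain sum.
root_of_squares = math.sqrt(2 ** 2 + 3 ** 2)   # sqrt(13), about 3.6
plain_sum = 2 + 3                              # 5

# Same contrast for the ten numbers from the main example.
data = [10, 20, 30, 40, 50, 60, 70, 80, 90, 100]
n = len(data)
mean = sum(data) / n
std_dev = math.sqrt(sum((x - mean) ** 2 for x in data) / n)   # about 28.72
mean_abs_distance = sum(abs(x - mean) for x in data) / n      # 25.0

print(round(root_of_squares, 1), plain_sum)
print(round(std_dev, 2), mean_abs_distance)
```

For these data the standard deviation (about 28.72) is somewhat larger than the true average distance to the mean (25), which is why the "average distance" reading is only a rough interpretation.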
