CS 405

COMPUTER SYSTEM ARCHITECTURE

KTUStudents.in

SUFYAN P

Assistant Professor

sufyan@meaec.edu.in

Computer Science and engineering

MEA Engineering College, Perinthalmanna

For more study materials: WWW.KTUSTUDENTS.IN

TEXT BOOK:

K. Hwang and Naresh Jotwani, Advanced Computer Architecture: Parallelism, Scalability, Programmability, TMH, 2010.

Introduction to advanced computer architecture

❖ Computer Organization:

• It refers to the operational units and their interconnections that realize the architectural specifications.

• It describes the function and design of the various units of a digital computer that store and process information.

❖ Computer hardware:

• Consists of electronic circuits, displays, magnetic and optical storage media, electromechanical equipment and communication facilities.

❖ Computer Architecture:

• It is concerned with the structure and behavior of the computer.

• It includes the information formats, the instruction set and techniques for addressing memory.

Syllabus

• Basic concepts of parallel computer models

• SIMD computers

• Multiprocessors and multicomputers

• Cache Coherence Protocols

• Multicomputers

• Pipelining computers and Multithreading

MODULE-1


CONTENTS

• Parallel computer models

o Evolution of Computer Architecture

o System Attributes to performance

• Amdahl's law

• Multiprocessors and Multicomputers

• Multivector and SIMD computers

• Architectural development tracks

• Conditions of parallelism

Evolution of computer architecture

INTRODUCTION

• Study of computer architecture involves both

o Hardware organization

o Programming

• Evolution of computer architecture started with the Von Neumann architecture

o Built as a sequential machine

o Executing scalar data

• Major advancements came from the following techniques

o Look-ahead technique

o Parallelism & pipelining

o Flynn’s classification

o Parallel / vector computers

Look-ahead Technique

• Introduced to enable instruction prefetching

• Used to overlap I/E operations

o I/E ➔ instruction fetch and execute

• Enables functional parallelism

o Different functions are distributed and performed concurrently by processes or threads across different processors


Flynn’s classification

• Classification is based on

o Instruction streams

o Data streams

• The classes are:

o SISD (single instruction stream over a single data stream)

• E.g.: conventional sequential machines

o SIMD (single instruction stream over multiple data streams)

• E.g.: vector computers

o MIMD (multiple instruction streams over multiple data streams)

• E.g.: parallel computers

o MISD (multiple instruction streams over a single data stream)

• E.g.: special purpose computers

Pipelining

• Pipelining is a technique where multiple instructions are overlapped during execution.

• The pipeline is divided into stages, and these stages are connected with one another to form a pipe-like structure.

• Instructions enter from one end and exit from the other end.

• Pipelining increases the overall instruction throughput.
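As a rough illustration of why overlapping raises throughput, the sketch below counts clock cycles for an idealized linear pipeline. It assumes one cycle per stage and no stalls or hazards; the function names and the 5-stage, 100-instruction workload are hypothetical and not taken from the slides.

```python
def sequential_cycles(num_instructions: int, num_stages: int) -> int:
    """Cycles when each instruction finishes all stages before the next starts."""
    return num_instructions * num_stages


def pipelined_cycles(num_instructions: int, num_stages: int) -> int:
    """Cycles on an ideal pipeline: fill the pipe once, then one result per cycle."""
    if num_instructions == 0:
        return 0
    return num_stages + (num_instructions - 1)


# Hypothetical workload: 100 instructions on a 5-stage pipeline.
n, k = 100, 5
speedup = sequential_cycles(n, k) / pipelined_cycles(n, k)
print(sequential_cycles(n, k), pipelined_cycles(n, k), round(speedup, 2))
```

Under these assumptions the speedup approaches the number of stages as the instruction count grows.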

Parallel computers

• Executions are carried out simultaneously

• 2 classes

o Shared memory multiprocessors


o Message passing multicomputers

• Distinction lies in

o Memory sharing

o Interprocessor communication


Shared memory multiprocessor

• Processors in the multiprocessor system use a common memory

• They communicate using shared variables

Message passing multicomputer

• Each computer node in the multicomputer system has a local memory

• Interprocessor communication is done via message passing

Vector processor

• It is a processor whose instructions operate on vector data

• 2 families of vector processors

o Memory to memory

o Register to register

• Memory to memory architecture

o Supports pipelined flow of vector operands directly from memory to the pipelines & then back to memory

• Register to register architecture

o Uses registers as the interface between memory & the pipelines

System attributes to performance

• Ideal performance of a system demands perfect

matching between

o machine capability

o program behavior

• Machine capability can be enhanced via


o Better h/w technology

o Innovative architectural features

o Efficient resource management

• Factors affecting program behavior

o Algorithm design

o Data structures

o Language efficiency

o Programmer skill

o Compiler technology


Performance factors

• Cycle time τ

• Clock rate f

o f = 1/τ

• CPI (cycles per instruction)

• Instruction count Ic

• Processor cycle p

• Memory cycle m

• Ratio between memory cycle & processor cycle k

• Cycle time

o Time taken to complete one clock cycle

• Clock rate

o Inverse of cycle time

• Instruction count

o No: of machine instructions to be executed in a program

• CPI (cycles per instruction)

o No: of cycles taken to execute one instruction

CPU time (T)

• CPU time is the time needed to execute a program

• It depends on the following factors

o Ic

o CPI

o Cycle time

• T = Ic * CPI * τ ………(1)

• Instruction execution involves a cycle of events like

o Instruction fetch ➔ memory access

o Decode ➔ carried out by CPU

o Operand fetch ➔ memory access

o Execution ➔ carried out by CPU

o Store result ➔ memory access
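Eq (1) can be tried out with a few lines of Python. This is only a sketch: the function name `cpu_time` and the sample values (200,000 instructions, a CPI of 2, a 50 MHz clock) are made up for illustration.

```python
def cpu_time(instruction_count: int, cpi: float, cycle_time: float) -> float:
    """Eq (1): T = Ic * CPI * tau, with tau given in seconds."""
    return instruction_count * cpi * cycle_time


# Hypothetical machine: 50 MHz clock, so tau = 1 / f = 20 ns.
f = 50e6
tau = 1 / f
T = cpu_time(200_000, 2.0, tau)
print(f"T = {T * 1e3:.3f} ms")
```

Halving any one of the three factors halves T, which is why the later slides look at what influences each factor.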

CPI (cycles per instruction)

• It can be divided into 2 component terms

o Processor cycles p

o Memory cycles m

• Eq (1) can be rewritten as

o T = Ic * (p + m * k) * τ ………(2)

• p ➔ no: of processor cycles needed for instruction decode & execution

• m ➔ no: of memory references needed

• k ➔ ratio between memory cycle and processor cycle

• τ ➔ processor cycle time
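A small sketch of Eq (2) shows how the memory term enters the CPI. The function names and the numbers (p = 2, m = 1, k = 4, τ = 25 ns, 100,000 instructions) are hypothetical values chosen only to make the arithmetic easy to follow.

```python
def effective_cpi(p: float, m: float, k: float) -> float:
    """CPI split into processor cycles and memory cycles: CPI = p + m * k."""
    return p + m * k


def cpu_time(instruction_count: int, p: float, m: float, k: float, tau: float) -> float:
    """Eq (2): T = Ic * (p + m * k) * tau, with tau given in seconds."""
    return instruction_count * effective_cpi(p, m, k) * tau


# Hypothetical values: 2 processor cycles and 1 memory reference per
# instruction, each memory reference costing k = 4 processor cycles.
cpi = effective_cpi(p=2, m=1, k=4)
T = cpu_time(100_000, p=2, m=1, k=4, tau=25e-9)
print(cpi, T)
```

With these numbers the memory term (m * k = 4) contributes twice as many cycles as the processor term, so lowering k has more effect than lowering p.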

• Memory cycle

o Time needed to complete one memory reference

o It is k times the processor cycle τ

o k ➔ depends on

• Speed of cache

• Memory technology

• Processor-memory interconnection scheme

• C ➔ total no: of clock cycles needed to execute a program (Ic instructions)

• CPI ➔ no: of clock cycles needed to execute a single instruction

• CPI = C / Ic ………(3)

• Eq (1) can be rewritten as follows

• T = Ic * CPI * τ ➔ T = Ic * (C / Ic) * τ

➔ T = C * τ ………(4)

➔ T = C / f ………(5)

System attributes

• The 5 performance factors (Ic, p, m, k, τ) are influenced by 4 system attributes

o Instruction set architecture

o Compiler technology

o CPU implementation & control

o Cache and memory hierarchy

• The instruction-set architecture affects the program length (Ic) and the processor cycles needed (p).

• The compiler technology affects the values of Ic, p, and the memory reference count (m).

MIPS rate (million instructions per second)

• MIPS rate is based on the following factors

o Clock rate f

o Instruction count Ic

o CPI of given machine

• MIPS rate = Ic / (T * 10^6) ………(6)

• Using Eq (1), the above equation can be rewritten as follows

• MIPS rate = Ic / (Ic * CPI * τ * 10^6) = f / (CPI * 10^6) ………(7)

• Substituting CPI = C / Ic: MIPS rate = f / ((C / Ic) * 10^6) = (Ic * f) / (C * 10^6) ………(8)
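The three forms of the MIPS rate should agree for any consistent set of inputs. The sketch below checks this numerically; the function names and the sample program (1,000,000 instructions, 2,500,000 cycles, 100 MHz clock) are assumptions made for illustration.

```python
def mips_from_time(instruction_count: int, cpu_time: float) -> float:
    """Eq (6): MIPS = Ic / (T * 10^6)."""
    return instruction_count / (cpu_time * 1e6)


def mips_from_cpi(clock_rate: float, cpi: float) -> float:
    """Eq (7): MIPS = f / (CPI * 10^6)."""
    return clock_rate / (cpi * 1e6)


def mips_from_cycles(instruction_count: int, clock_rate: float, total_cycles: int) -> float:
    """Eq (8): MIPS = (Ic * f) / (C * 10^6)."""
    return instruction_count * clock_rate / (total_cycles * 1e6)


# Hypothetical program: 1,000,000 instructions, 2,500,000 cycles, 100 MHz clock.
ic, c, f = 1_000_000, 2_500_000, 100e6
cpi = c / ic   # CPI = C / Ic
t = c / f      # T = C / f
rates = (
    mips_from_time(ic, t),
    mips_from_cpi(f, cpi),
    mips_from_cycles(ic, f, c),
)
print(rates)
```

All three values come out at about 40 MIPS, up to floating point rounding.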

Throughput rate

• CPU throughput Wp

• It is the measure of how many programs can be executed per second, based on the MIPS rate & average program length

• Wp = f / (Ic * CPI) ………(9)
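A minimal sketch of Eq (9), assuming a hypothetical 40 MHz machine running programs that average 100,000 instructions at a CPI of 2 (none of these figures come from the slides):

```python
def cpu_throughput(clock_rate: float, instruction_count: int, cpi: float) -> float:
    """Eq (9): Wp = f / (Ic * CPI), in programs per second."""
    return clock_rate / (instruction_count * cpi)


# Hypothetical machine and workload.
f, ic, cpi = 40e6, 100_000, 2.0
wp = cpu_throughput(f, ic, cpi)

# Equivalent form: Wp = (MIPS rate * 10^6) / Ic.
mips = f / (cpi * 1e6)
wp_from_mips = mips * 1e6 / ic
print(wp, wp_from_mips)
```

Both expressions give 200 programs per second for this example.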

Problem 1


solution


• Total no: of cycles required to execute the complete program

➔ 45000 + 64000 + 30000 + 16000

➔ 155000 cycles

• C = 155000 cycles

• Effective CPI = C / Ic

➔ 155000 / 100000

➔ CPI = 1.55

• MIPS rate = f / (CPI * 10^6)

= (40 * 10^6) / (1.55 * 10^6)

= 25.8

• Given f = 40 MHz ➔ τ = 1/f = 1/40 µs = 0.025 µs

• T = Ic * CPI * τ

• = 100000 * 1.55 * 0.025 µs

• = 3875 µs

• = 3.875 ms
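The arithmetic of the worked solution can be re-checked in code. The sketch uses only the figures that appear in the solution above (the four cycle counts, Ic = 100000, f = 40 MHz); the variable names are my own, and the CPU time is computed as T = C / f, which is equivalent to Ic * CPI * τ.

```python
# Figures taken from the worked solution above.
cycles_per_class = [45_000, 64_000, 30_000, 16_000]
instruction_count = 100_000
clock_rate = 40e6  # 40 MHz

total_cycles = sum(cycles_per_class)       # C
cpi = total_cycles / instruction_count     # CPI = C / Ic
mips = clock_rate / (cpi * 1e6)            # MIPS = f / (CPI * 10^6)
cpu_time = total_cycles / clock_rate       # T = C / f, in seconds

print(total_cycles, cpi, round(mips, 1), round(cpu_time * 1e3, 3))
```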

Problem 2


Floating point operations per second

• Many compute-intensive applications in science and engineering make heavy use of floating point operations

• For those applications, performance is measured using FLOPS

• FLOPS ➔ no: of floating point operations per second

Implicit parallelism

o In this approach, conventional languages like C, C++ and Fortran are used to write the source program

o The sequentially coded source program is translated into parallel object code

o This is done by the compiler

o The compiler must be able to detect parallelism

Explicit parallelism

• More effort is needed by the programmer

• Source program is developed using parallel dialects of C, C++ or Fortran

• Parallelism is explicitly specified in the source program

• Compiler need not detect parallelism

• Reduces the burden on the compiler

MULTIPROCESSORS & MULTICOMPUTERS

Introduction

• Parallel computers are divided into 2

o Shared memory multiprocessors

o Message passing multicomputers


• Their difference is based on memory

o One has shared common memory

o Other has unshared distributed memory


Shared memory multiprocessor

• 3 models

o UMA model (uniform memory access)

o NUMA model (non-uniform memory access)


o COMA model (cache only memory architecture)

• These models differ in how the memory &

peripheral resources are shared or distributed


UMA model

• Physical memory is uniformly shared by all the processors

• All processors have equal access time to all memory words

• Peripherals are also shared

• Due to this high degree of resource sharing, multiprocessors are also called tightly coupled systems

• Communication & synchronization between processors are done via shared variables

• System interconnection is done using

o Bus

o Crossbar switch

o Multistage network


UMA model

• UMA is sometimes called CC-UMA (Cache Coherent UMA).

• Cache coherent means that if one processor updates a location in shared memory, all the other processors know about the update

Advantages

• Suitable for general purpose & time sharing applications by multiple users

• Can speed up the execution of a single large program in time critical applications

Symmetric Vs asymmetric multiprocessor system

• Symmetric multiprocessor system

o All processors have equal access to all peripheral devices

o All processors are equally capable of running executive programs such as the OS kernel and I/O routines

• Asymmetric multiprocessor system

o Only one or a subset of the processors is executive-capable

o The master processor (MP) can execute the OS and I/O routines

o The remaining processors have no I/O capability

o The remaining processors are called attached processors (AP)

o APs execute user code under the supervision of the master processor

NUMA model

• It is a shared memory system in which the access time varies with the location of the memory word

• There are two NUMA models

o Shared local memory model

o Hierarchical cluster model

Shared local memory model

• Shared memory is physically distributed to all processors

• These are called local memories

o The collection of all local memories forms a global address space

o This is accessible by all processors

• Access variations

o Access to the local memory attached to the local processor is faster

o Access to remote memory attached to other processors takes longer

• This is due to the delay in the interconnection n/w

Hierarchical cluster model

• Processors are divided into several clusters

• Each cluster is itself a UMA or NUMA multiprocessor

• Clusters are connected to global shared memory modules (GSM)

o All clusters have equal access to global memory

• All processors belonging to the same cluster uniformly access the cluster shared memory modules (CSM)

• Access time to CSM is shorter than to GSM

• Access rights to inter-cluster memories can be specified in various ways

COMA model

• It is a multiprocessor using only cache memory

• Special case of NUMA

• Distributed main memories are converted to caches

• No memory hierarchy at each processor node

• All caches form a global address space

• Remote cache access is assisted by distributed cache directories

Representative multiprocessors


Message passing multicomputer

• Also called a distributed-memory multicomputer

• This system consists of multiple computers known as nodes

• Nodes are interconnected by a message passing n/w

• Each node is an autonomous computer consisting of

o Processor

o Local memory

o Attached disks or I/O peripherals


Message passing multicomputer

• The message passing n/w provides point-to-point static connections among nodes

• All local memories are private & are accessible only by local processors

• Therefore they are also called no-remote-memory-access (NORMA) machines

• Inter-node communication is carried out by message passing
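The NORMA discipline can be imitated in a few lines: each node works only on its own data and everything else travels as a message. This is a sketch under loud assumptions: the node functions are hypothetical, `queue.Queue` stands in for the message passing n/w, and Python threads really share one address space, so the privacy of `local_memory` here is a convention rather than something the hardware enforces.

```python
import queue
import threading


def producer_node(outbox: queue.Queue) -> None:
    """Node 0: computes on its own private data and sends only the result."""
    local_memory = [1, 2, 3, 4]              # private to this node
    outbox.put(("partial_sum", sum(local_memory)))


def consumer_node(inbox: queue.Queue, results: list) -> None:
    """Node 1: combines its private data with the message received from node 0."""
    local_memory = [10, 20]                  # private to this node
    tag, value = inbox.get()                 # blocks until the message arrives
    # `results` is only used to hand the answer back to the main thread.
    results.append((tag, value + sum(local_memory)))


channel = queue.Queue()                      # stands in for the message passing n/w
results = []
nodes = [
    threading.Thread(target=producer_node, args=(channel,)),
    threading.Thread(target=consumer_node, args=(channel, results)),
]
for node in nodes:
    node.start()
for node in nodes:
    node.join()
print(results)
```

Neither node ever reads the other's `local_memory`; the only data that crosses between them is the tagged message placed on the channel.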

Advantages

• Scalability

• Fault tolerance

• Suitable for certain applications


Representative multicomputer


MULTIVECTOR AND SIMD COMPUTERS

Introduction

• In this section we introduce supercomputers and parallel processors for vector processing and data parallelism

• Supercomputers are classified as

o Vector supercomputers

• Use powerful processors equipped with vector hardware

o SIMD supercomputers

• Provide massive data parallelism

Supercomputers


Vector supercomputers

• A vector computer is built on top of a scalar processor

o I.e. a vector processor is attached to the scalar processor

• The host computer loads the program & data into the main memory

• The scalar control unit decodes all the instructions

• If the decoded instruction is a scalar operation or a program control operation,

o It is directly executed by the scalar processor

o Execution is done using the scalar functional pipelines

• If the decoded instruction is a vector operation,

o It is sent to the vector control unit

• Vector control unit

o It supervises the flow of vector data between the main memory & the vector functional pipelines

o It coordinates the vector data flow

Vector processor models

• Register to register architecture

o Vector registers are used to hold the following

• Vector operands

• Intermediate vector results

• Final vector results

o Vector functional pipelines receive operands from these registers & put the results back into these registers

o All vector registers are programmable

o Each vector register has a component counter

• It keeps track of the component registers used in successive pipeline cycles

Vector processor models

• Memory to memory architecture

• A vector stream unit is used instead of vector registers

• Vector operands & results are directly retrieved from and stored into the main memory

• Eg: Cyber 205

Examples of vector supercomputers


SIMD Supercomputers

• Computers with multiple processing elements (PE)

• They perform the same operation on multiple data points simultaneously

• The operational model of an SIMD computer is specified by a 5-tuple

• M = (N, C, I, M, R)

• N ➔ no: of processing elements (PEs) in the machine

• C ➔ set of instructions directly executed by the control unit (CU)

• I ➔ set of instructions broadcast by the CU to all PEs for parallel execution

o These include arithmetic, logic and data routing operations executed by each PE over the data within that PE

• M ➔ set of masking schemes

o Each mask partitions the set of PEs into enabled & disabled subsets

• R ➔ set of data routing functions

o Used in the interconnection n/w for inter-PE communications
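The roles of I, M and R can be sketched with a toy model of N = 4 PEs. Everything here is an assumption made for illustration: the function names, the sample mask, the doubling instruction and the shift-by-one routing function are hypothetical, and the list comprehension only simulates sequentially what real PEs would do in lockstep.

```python
from typing import Callable, List


def simd_step(
    pe_data: List[int],
    mask: List[bool],
    instruction: Callable[[int], int],
) -> List[int]:
    """Broadcast one instruction to all PEs; only enabled PEs apply it."""
    return [
        instruction(value) if enabled else value
        for value, enabled in zip(pe_data, mask)
    ]


def shift_route(pe_data: List[int]) -> List[int]:
    """A simple routing function: PE i sends its value to PE (i + 1) mod N."""
    return pe_data[-1:] + pe_data[:-1]


pe_data = [1, 2, 3, 4]                                # N = 4 PEs, one value each
mask = [True, False, True, False]                     # one masking scheme from M
doubled = simd_step(pe_data, mask, lambda x: x * 2)   # an instruction from I
routed = shift_route(doubled)                         # a routing function from R
print(doubled, routed)
```

Only the enabled PEs (0 and 2) change their data; the disabled PEs keep their old values, which is exactly the partition that a mask defines.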

Examples of SIMD supercomputer


Amdahl’s law[1]

• It is named after computer scientist Gene Amdahl (a computer architect from IBM and Amdahl Corporation). It is also known as Amdahl’s argument.

• It is a formula which gives the theoretical speedup in latency of the execution of a task at a fixed workload that can be expected of a system whose resources are improved.

• In other words, it is a formula used to find the maximum improvement possible by improving just a particular part of a system.

• It is often used in parallel computing to predict the theoretical speedup when using multiple processors.

Amdahl’s law[2]

• Speed-up

• Speedup is defined as the ratio of the performance for the entire task using the enhancement to the performance for the entire task without using the enhancement

• OR

• Speedup can be defined as the ratio of the execution time for the entire task without using the enhancement to the execution time for the entire task using the enhancement.

Amdahl’s law[3]

• If Pe is the performance for the entire task using the enhancement when possible,

• Pw is the performance for the entire task without using the enhancement,

• Ew is the execution time for the entire task without using the enhancement and

• Ee is the execution time for the entire task using the enhancement when possible, then

• Speedup = Pe / Pw

or

Speedup = Ew / Ee

Amdahl’s law[4]

• Amdahl’s law uses two factors to find the speedup from some enhancement:

• Fraction enhanced

• Speedup enhanced

Amdahl’s law[5]

• Fraction enhanced:

• The fraction of the computation time in the original computer that can be converted to take advantage of the enhancement.

• For example, if 10 seconds of the execution time of a program that takes 40 seconds in total can use an enhancement, the fraction is 10/40. This value is the Fraction enhanced.

• Fraction enhanced is always less than or equal to 1. (≤1)

Amdahl’s law[6]

• Speedup enhanced:

• The improvement gained by the enhanced execution mode; that is, how much faster the task would run if the enhanced mode were used for the entire program.

• For example, if the enhanced mode takes, say, 3 seconds for a portion of the program, while the original mode takes 6 seconds for the same portion, the improvement is 6/3. This value is the Speedup enhanced.

• Speedup enhanced is always greater than 1. (>1)

Amdahl’s law[7]

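The two factors combine into the usual statement of Amdahl's law: overall speedup = 1 / ((1 - Fraction enhanced) + Fraction enhanced / Speedup enhanced). The sketch below assumes that standard form; the function name is my own, and the inputs reuse the sample figures from the two slide examples (10/40 and 6/3), which were given separately and are combined here only for illustration.

```python
def amdahl_speedup(fraction_enhanced: float, speedup_enhanced: float) -> float:
    """Overall speedup = 1 / ((1 - F) + F / S)."""
    if not 0.0 <= fraction_enhanced <= 1.0:
        raise ValueError("fraction_enhanced must lie in [0, 1]")
    if speedup_enhanced <= 0:
        raise ValueError("speedup_enhanced must be positive")
    return 1.0 / ((1.0 - fraction_enhanced) + fraction_enhanced / speedup_enhanced)


# Sample figures from the slides: F = 10/40, S = 6/3.
overall = amdahl_speedup(10 / 40, 6 / 3)

# Upper bound when the enhanced part becomes infinitely fast: 1 / (1 - F).
limit = 1.0 / (1.0 - 10 / 40)
print(round(overall, 3), round(limit, 3))
```

Even though the enhanced mode is twice as fast, the whole task speeds up by only about 1.14, and no enhancement of that 25% portion can push the overall speedup beyond about 1.33.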


CONDITIONS OF PARALLELISM


Introduction

• To execute several program segments in parallel, each segment has to be independent of the other segments

• Dependence is the main challenge of parallelism

• A dependence graph shows the relations between program statements

o Nodes of the dependence graph ➔ program statements

o Directed edges with labels ➔ relations among the statements

Types of dependences

• Data dependence


• Control dependence

• Resource dependence


DATA DEPENDENCE

• The ordering relationship between statements is indicated by data dependence

• Types

o Flow dependence

o Anti-dependence

o Output dependence

o I/O dependence

o Unknown dependence

Flow dependence

• A statement S2 is flow dependent on statement S1 if an execution path exists from S1 to S2, and if at least one output of S1 is fed as an input to S2

• Denoted as S1 ➔ S2

o Eg: consider the following instructions

• S1: LOAD R1,A

• S2: ADD R2,R1

• S2 is flow dependent on S1

o Because the o/p of S1 is fed as an i/p of S2

o I.e. variable A is loaded into register R1 by S1, and R1 is then read by S2

Anti-dependence

• Statement S2 is anti-dependent on statement S1 if S2 follows S1 in program order and if the o/p of S2 overlaps the input to S1

• Denoted using a direct arrow crossed with a bar, drawn from S1 to S2

o Eg: consider the following statements

o S2: ADD R2,R1

o S3: MOVE R1,R3

• S3 is anti-dependent on S2 since the output of S3 overlaps the input to S2

• I.e. they conflict in the register content of R1

Output dependence

• Two statements are output dependent if they produce (write) the same output variable

• Denoted using a direct arrow marked with a small circle, drawn from S1 to S2

• Eg:

• S1: LOAD R1,A

• S3: MOVE R1,R3

• S3 is output dependent on S1 because they both modify the same register R1
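The three register-level dependences above can be detected by comparing the input (read) and output (write) sets of two statements. The sketch below is a simplified check, not a full dependence analysis: the `Statement` class and `data_dependences` function are hypothetical names, it ignores whether another statement redefines the variable in between, and it assumes that ADD R2,R1 computes R2 ← R1 + R2 and that MOVE R1,R3 copies R3 into R1.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Statement:
    name: str
    reads: FrozenSet[str]    # input set
    writes: FrozenSet[str]   # output set


def data_dependences(first: Statement, second: Statement) -> List[str]:
    """Dependences of `second` on `first`, where `first` is earlier in program order."""
    found = []
    if first.writes & second.reads:
        found.append("flow")      # second reads what first wrote
    if first.reads & second.writes:
        found.append("anti")      # second overwrites what first read
    if first.writes & second.writes:
        found.append("output")    # both write the same variable
    return found


# The three instructions used in the examples above (operand roles assumed).
s1 = Statement("S1: LOAD R1,A", reads=frozenset({"A"}), writes=frozenset({"R1"}))
s2 = Statement("S2: ADD R2,R1", reads=frozenset({"R1", "R2"}), writes=frozenset({"R2"}))
s3 = Statement("S3: MOVE R1,R3", reads=frozenset({"R3"}), writes=frozenset({"R1"}))

print(data_dependences(s1, s2))
print(data_dependences(s2, s3))
print(data_dependences(s1, s3))
```

The three calls report flow, anti and output dependence respectively, matching the slide examples. Two statements for which the function returns an empty list have no register-level data dependence and are candidates for parallel execution.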

I/O dependence

• I/O dependence occurs if the same file is referenced by both I/O statements

• Read and write are the I/O statements

• Eg:

• S1: READ(4),A(i)

• S2: PROCESS

• S3: WRITE(4),B(I)

• S4: CLOSE(4)

• S1 and S3 are I/O dependent since both access the same file

Control dependence

• Implies that the order of execution of statements cannot be determined before runtime

• Conditional statements will not be resolved until runtime

• Conditional branching may eliminate or introduce data dependences

• Control dependence often prohibits parallelism from being exploited

• Solution

o Compiler techniques

o Hardware branch prediction techniques

Resource dependence

• It is concerned with conflicts in using shared resources among parallel events

• Shared resources are

o Integer units

o Floating point units

o Registers

o Memory areas

• When the conflicting resource is an ALU ➔ ALU dependence

• If the conflict involves workplace storage ➔ storage dependence

Hardware and software parallelism

• To implement parallelism we require

o Hardware support

o Software support

• Joint efforts from hardware designers & software programmers are required to exploit parallelism

Hardware parallelism

• This is a type of parallelism defined by the machine architecture & hardware multiplicity

• It is a function of cost & performance tradeoffs

• Indicates the peak performance of the processor resources

• One way to characterize parallelism in a processor

o No: of instruction issues per machine cycle

• If a processor issues k instructions per cycle ➔ k-issue processor

• If a processor issues 1 instruction per cycle ➔ one-issue processor

o Eg: conventional pipelined processor

• i960CA ➔ three-issue machine

Software Parallelism

• This type of parallelism is revealed in the program profile or the program flow graph

o The flow graph displays the simultaneously executable operations

• Software parallelism is a function of

o Algorithm

o Programming style

o Program design

• Types of software parallelism

o Control parallelism

o Data parallelism

Control Parallelism

• Allows 2 or more operations to be performed simultaneously

• Control parallelism is achieved using

o Pipelining

o Multiple functional units

• Limitations

o Length of the pipeline

o Multiplicity of functional units

• Pipelining & functional parallelism are handled by h/w

• Programmers need not take any special effort to invoke them

Data Parallelism

• The same operation is performed over many data elements by many processors simultaneously

• Offers the highest potential for concurrency

• Practiced in SIMD & MIMD modes

• Data parallel code is easier to write & debug than control parallel code

Role of compilers

• Compiler techniques are used to improve performance

• Early compilers which exploit parallelism are:

o CDC STACKLIB

o Cray CFT

• Features included in existing compilers to improve parallelism are:

o Loop transformation

o s/w pipelining

• To exploit parallelism to the fullest,

o Design the compiler & h/w jointly at the same time


- Parallelism in Computer ArchitectureDocument27 pagesParallelism in Computer ArchitectureKumarNo ratings yet

- Computational Grids: Reasons, Applications and ChallengesDocument40 pagesComputational Grids: Reasons, Applications and ChallengesdaiurqzNo ratings yet

- Complete Lessonplan Aca 12 UnkiDocument19 pagesComplete Lessonplan Aca 12 UnkiSantosh DewarNo ratings yet

- Object-Oriented Technology and Computing Systems Re-EngineeringFrom EverandObject-Oriented Technology and Computing Systems Re-EngineeringNo ratings yet

- Design and Test Strategies for 2D/3D Integration for NoC-based Multicore ArchitecturesFrom EverandDesign and Test Strategies for 2D/3D Integration for NoC-based Multicore ArchitecturesNo ratings yet

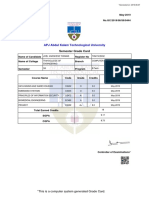

- Semester Grade CardDocument2 pagesSemester Grade Cardjoel varghese thomasNo ratings yet

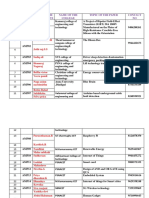

- My Grade CardDocument1 pageMy Grade Cardjoel varghese thomasNo ratings yet

- Myhc 27659 PDFDocument1 pageMyhc 27659 PDFghiniiNo ratings yet

- NAD Student Registration Process NAD IDDocument13 pagesNAD Student Registration Process NAD IDSteffinNelsonNo ratings yet

- TkmceDocument3 pagesTkmcejoel varghese thomasNo ratings yet

- Literature ReviewDocument4 pagesLiterature Reviewjoel varghese thomasNo ratings yet

- ACE College BTech Results 2015Document534 pagesACE College BTech Results 2015Sujith VargheseNo ratings yet
