IAENG International Journal of Computer Science, 43:3, IJCS_43_3_11

______________________________________________________________________________________

Improving KNN Method Based on Reduced Relational Grade for Microarray Missing Values Imputation

Yun He, De-chang Pi

Manuscript received September 02, 2015; revised December 14, 2015. (Advance online publication: 27 August 2016) This work was supported in part by the National Natural Science Foundation of China (U1433116) and the Foundation of Graduate Innovation Center in NUAA (kfjj20151602).

Yun He is with the College of Computer Science and Technology, Nanjing University of Aeronautics and Astronautics, Nanjing, 210016 China (e-mail: 1561850387@qq.com).

De-Chang Pi is with the College of Computer Science and Technology, Nanjing University of Aeronautics and Astronautics, Nanjing, 210016 China (e-mail: dc.pi@163.com).

Abstract: Microarray gene expression data generally suffer from missing values, which adversely affect downstream analysis. We propose a new similarity metric called reduced relational grade and, based on it, an improved KNN method named the RKNN imputation algorithm for iteratively estimating microarray missing values. Reduced relational grade is an improvement of gray relational grade: it achieves the same performance while greatly reducing the time complexity. RKNN imputes missing data iteratively by using the reduced relational grade as the similarity metric and by expanding the set of candidate genes for nearest neighbors with imputed genes, which improves the effectiveness and performance of the imputation algorithm. We selected data sets of different kinds (time series, non-time series and mixed) and experimentally evaluated the proposed method. The results demonstrate that the reduced relational grade is effective and that RKNN outperforms common imputation algorithms.

Index Terms: Gene expression data, reduced relational grade, imputation, iteration

I. INTRODUCTION

AN important part of the human genome project is to analyze and utilize microarray gene expression data, which record the abundance of mRNA gene transcripts in cells and contain significant control information about gene function. Microarray analysis of gene expression data has been widely used in numerous fields, including the investigation of drug effects, the identification of critical genes for diagnosis or therapy, and cancer classification.

DNA microarray technology [1] is one of the most useful tools for monitoring gene expression levels, as it can simultaneously analyze the mRNA levels of thousands of genes in particular cells or tissues on a gene chip. In a DNA microarray experiment, a large number of DNA probes are fixed on given spots and then hybridized with samples of fluorescence-labeled DNA, cDNA or RNA, so the gene sequence information can be obtained by detecting the strength of the hybridization signal.

Even though microarray technology is efficient, accurate and low-cost, it still suffers from missing values due to a variety of internal or external factors in experiments. Missing values can account for 10%, and in some cases up to 90% of genes have one or more missing values [2]. Issues such as image corruption, hybridization failures, insufficient resolution, or dust and scratches on the slide can all cause missing gene values.

Many data analysis methods, such as principal component analysis (PCA), singular value decomposition (SVD) and hierarchical clustering, can only be applied to complete datasets without missing values. Besides, it has been found that missing values impede microarray data analysis and law discovery [4]. Repeating the experiments is regarded as one way to solve the missing data problem, but most experiments are complex, costly and time-consuming. Deleting incomplete genes before analysis is a simple approach to obtain a complete dataset. Unfortunately, the deletion strategy discards incomplete genes, so it leaves too little of the original dataset, especially for multivariate data such as microarray gene expression. It generates serious bias and inaccurate conclusions when the missing ratio is large or the distribution of missing values is non-random [5]. Missing value imputation [6]-[7] is a low-cost and efficient approach to recover all missing data without repeated experiments [8]. Substituting missing values with the global or class-conditional mean/mode has been employed to handle missing values. Furthermore, imputation before analysis can significantly improve the performance of some machine learning algorithms that suffer from missing values, such as C4.5: reference [9] shows that KNN imputation can enhance the prediction accuracy of C4.5 over small software project datasets.

We propose an iterative imputation algorithm based on a novel distance metric for predicting missing gene expression values, called iterative imputation based on reduced relational grade (RKNN). RKNN measures the similarity between a gene with missing values and its nearest neighbors with the reduced relational grade, which improves on the gray relational grade. Besides, imputing missing values iteratively achieves high data utilization because incomplete genes (with missing values) are also used. We experimentally evaluate the proposed algorithm on different kinds of publicly available microarray datasets, and the results demonstrate that the reduced relational grade achieves performance similar to the gray one when capturing ‘nearness’ but greatly reduces the time complexity. Moreover, the RKNN algorithm outperforms the


conventional KNN method.

The rest of this paper is organized as follows. Section II briefly reviews related work on imputation methods. Section III introduces the concept of gray relational grade. The reduced relational grade and the RKNN algorithm are proposed in Section IV, and their experimental analysis is presented in Section V. Section VI concludes the paper.

II. RELATED WORK

Throughout this paper, the original gene expression matrix (with missing values) is denoted by $X$, which contains $n$ genes and $m$ attributes ($n \gg m$), where the $i$-th row represents gene $x_i$ and $x_i = [x_{i1}, x_{i2}, \ldots, x_{im}]$. $x_{ij}$ denotes the expression level of gene $x_i$ in sample $j$. All complete genes from $X$ constitute a complete data matrix $X_{\mathrm{complete}}$ (without missing values). A gene with missing values is called a target gene, and the set of genes with available information for imputing missing values in a target gene is referred to as its candidate genes.

K nearest-neighbors (KNN) imputation finds the K nearest neighbors in the complete data set for a target gene that has a missing value in attribute $j$, and then fills in the target gene with a weighted average of the values in attribute $j$ from the K closest genes [10]. The idea of KNN is that objects close to each other are potentially similar. In a gene expression dataset, similar genes in similar experiments will have similar expression, and KNN imputation for gene expression datasets is based on this property.

Reference [10] presented a comparative study of three kinds of imputation methods, namely a Singular Value Decomposition-based method, KNN and row average, on gene microarray data with different missing ratios. Experimental results showed that KNN imputation has the best robustness and accuracy. Reference [11] comprehensively analyzed the performance of 23 kinds of imputation methods and demonstrated that KNN imputation has excellent estimation accuracy. So far, many studies have improved KNN imputation, mainly in two aspects: the order of imputation and the distance metric. Among them, the sequential KNN (SKNN) imputation method [12] sorts the target genes (with missing values) according to their missing ratio and imputes the genes with the smallest missing rate first. Once all missing values in a target gene are imputed, the target gene is considered a complete one. Shell Neighbors imputation [13] fills in an incomplete instance by using only its left and right nearest neighbors with respect to each attribute, and the size of the set of nearest neighbors is determined by cross-validation. Existing KNN imputation is based on Minkowski distance, which is a simple superimposed distance over the attributes of two genes that does not consider the whole data set. Reference [14] showed that gray relational grade is more appropriate for capturing the proximity between two instances than Minkowski distance or others.

Single imputation, which provides a single estimate for each missing datum, is a common strategy. Nevertheless, single imputation cannot provide effective standard errors and confidence intervals because it ignores the uncertainty of the imputed dataset [14]-[15]. Filling in missing values with iterative imputation is the alternative to single imputation. Reference [16] presented a nonparametric iterative imputation algorithm and confirmed that it outperforms normal single imputation. Iterative imputation based on gray relational grade (GKNN), proposed in [14], uses gray relational grade as its similarity metric to select the K nearest neighbors. GKNN obtains good results when dealing with missing values in heterogeneous data (continuous and discrete data). However, GKNN is more suitable for small datasets, because the cost of calculating the gray relational grade grows quickly as the data scale expands. In this paper we propose the RKNN imputation algorithm based on GKNN, in which the reduced relational grade is designed as the similarity metric; it significantly decreases the time complexity while keeping the good imputation performance of the conventional relational grade.

III. GRAY RELATIONAL GRADE

Gray System Theory (GST) was developed by Deng in 1982 [17]. The theory is good at handling complex systems to get reliable results. Gray Relational Analysis (GRA), a method of GST, seeks the numerical relationship among different subsystems to measure their similarity. GRA is used to quantify the trend relationship of two systems or of two elements in a system. Generally, if the development tendencies of two systems are consistent, the relational grade is large; otherwise, it is small.

The gray relational coefficient (GRC) describes the similarity between a target gene and a candidate gene on attribute $q$ in a given dataset. Let $x_i$ and $x_j$ represent the target gene and the candidate gene respectively. The GRC is defined as follows:

$$\mathrm{GRC}(x_{iq}, x_{jq}) = \frac{\min_t \min_k |x_{ik} - x_{tk}| + \rho \max_t \max_k |x_{ik} - x_{tk}|}{|x_{iq} - x_{jq}| + \rho \max_t \max_k |x_{ik} - x_{tk}|} \tag{1}$$

where $\rho$ is the distinguishing coefficient, $\rho \in [0, 1]$, generally $\rho = 0.5$.

The gray relational grade (GRG) averages the gray relational coefficients over all attributes and uses the average as the similarity metric of two genes:

$$\mathrm{GRG}(x_i, x_j) = \frac{1}{m} \sum_{k=1}^{m} \mathrm{GRC}(x_{ik}, x_{jk}) \tag{2}$$

GRG measures the relationship between two genes from a global perspective, which overcomes the deficiency of Minkowski distance. When selecting the K nearest neighbors for a target gene, the larger the gray relational grade, the higher the similarity and the smaller the difference between the genes; conversely, the smaller the grade, the lower the similarity and the larger the difference.

IV. RKNN ALGORITHM

A. Reduced relational grade

A high-dimensional gene expression data matrix describes the expression levels of thousands of genes in different


experimental conditions. According to (1), we must search the whole candidate dataset once whenever we calculate a GRC for a target gene. For a gene data matrix, this approach costs too much time. In this paper, we propose a new relational coefficient called the Reduced Relational Coefficient (RRC), which is a reduction of GRC. RRC measures the nearness between a target gene $x_i$ and a candidate gene $x_j$ at a specific attribute $q$:

$$\mathrm{RRC}(x_{iq}, x_{jq}) = \frac{\rho \,\mathrm{Max}_k\{|x_{ik} - \min_k|, |x_{ik} - \max_k|\}}{|x_{iq} - x_{jq}| + \rho \,\mathrm{Max}_k\{|x_{ik} - \min_k|, |x_{ik} - \max_k|\}} \tag{3}$$

where the function $\mathrm{Max}\{\cdot,\cdot\}$ takes the larger of two values, and $\min_k$ and $\max_k$ refer to the minimum and maximum values of attribute $k$ respectively, which are easily obtained while the data are read in or normalized.

Over many experiments, we found that $\min_t \min_k |x_{ik} - x_{tk}|$ always tends to zero and that $\max_t \max_k |x_{ik} - x_{tk}|$ tends to $\mathrm{Max}_k\{|x_{ik} - \min_k|, |x_{ik} - \max_k|\}$. In short, equation (3) is a simplification of (1): the values of $\min_t \min_k |x_{ik} - x_{tk}|$ and $\max_t \max_k |x_{ik} - x_{tk}|$, which need a huge amount of computation, are approximately replaced by their extremes, so RRC greatly reduces the time complexity.

In (3), $\mathrm{RRC}(x_{iq}, x_{jq})$ takes values in $[0, 1]$. The greater the value of $\mathrm{RRC}(x_{iq}, x_{jq})$, the larger the similarity between $x_{iq}$ and $x_{jq}$. If $x_{iq} = x_{jq}$, then $\mathrm{RRC}(x_{iq}, x_{jq}) = 1$. On the contrary, if $x_{iq}$ and $x_{jq}$ have completely different values on attribute $q$, the value of $\mathrm{RRC}(x_{iq}, x_{jq})$ is minimal. The reduced relational grade (RRG) between the target gene $x_i$ and the candidate gene $x_j$ is defined as follows:

$$\mathrm{RRG}(x_i, x_j) = \frac{1}{m} \sum_{k=1}^{m} \mathrm{RRC}(x_{ik}, x_{jk}) \tag{4}$$

Like GRG, RRG is an average over attributes, and the greater the RRG, the larger the similarity between the genes.

Assume that the size of the candidate gene dataset is $N \times m$. For any target gene, calculating its GRG with all genes in the candidate dataset has time complexity $O(N \cdot N \cdot m)$, while calculating the RRG reduces the time complexity to $O(N \cdot m)$. The improvement is substantial, considering that a gene expression dataset has $N \gg m$.

B. Imputation

For a given target gene $x_i$ (whose value $x_{it}$ is missing), RKNN calculates $\mathrm{RRG}(x_i, x_j)$ between $x_i$ and each candidate gene $x_j$, then selects the K most similar genes as its K nearest neighbors, and finally imputes $x_{it}$ with the weighted average of its K neighbor genes at attribute $t$:

$$\hat{y}_{it} = \sum_{k=1}^{K} w_{ik} x_{kt} \tag{5}$$

where $w_{ik}$ is the weight of the $k$-th neighbor gene $x_k$ with respect to $x_i$:

$$w_{ik} = \frac{\mathrm{RRG}(x_i, x_k)}{\sum_{l=1}^{K} \mathrm{RRG}(x_i, x_l)} \tag{6}$$

C. Data normalization

Generally, the similarity between two genes is dominated by attributes with larger magnitudes. To avoid the bias caused by unit differences and to make data processing convenient, data should be normalized before calculating the reduced relational grade in the RKNN imputation algorithm. In this paper, we select Min-Max normalization, which maps the original data into $[0, 1]$. Let $\max(t)$ and $\min(t)$ represent the maximum and minimum values on attribute $t$ respectively, and let $x_{it}$ be the expression value of gene $x_i$ on attribute $t$. Data are normalized as follows:

$$x'_{it} = \frac{x_{it} - \min(t)}{\max(t) - \min(t)} \tag{7}$$

D. RKNN algorithm design

RKNN uses RRG as the similarity metric to select K neighbors and iteratively imputes missing values with the weighted average until the termination condition is satisfied.

Algorithm: RKNNimpute
Input: gene expression dataset $X$ with missing values
Output: complete gene expression dataset
Step 1: Initialization
    FOR each target gene $x_i$ in $X$
        replace all missing values in $x_i$ with row averages
    END FOR   // obtaining a complete matrix $X_{\mathrm{complete}}$
    normalize $X_{\mathrm{complete}}$, get $X_{\mathrm{complete}}^{(0)}$
Step 2: Imputation
    $h = 0$
    REPEAT
        $h = h + 1$   // the $h$-th iterative imputation ($h = 1, 2, 3, \ldots$)
        FOR each target gene $x_i$ in $X$
            construct the candidate gene dataset based on $X_{\mathrm{complete}}^{(h-1)}$
            FOR each candidate gene $x_j$
                compute $\mathrm{RRG}(x_i, x_j)$
            END FOR
            select the K nearest genes
            impute all missing values in $x_i$ using equations (5) and (6)
        END FOR   // obtaining $X_{\mathrm{complete}}^{(h)}$
        FOR each imputation value $\hat{y}_j^{(h)} \in X_{\mathrm{complete}}^{(h)}$, $\hat{y}_j^{(h-1)} \in X_{\mathrm{complete}}^{(h-1)}$
            compute $\delta^{(h)} = \frac{1}{N} \sum_{j=1}^{N} (\hat{y}_j^{(h)} - \hat{y}_j^{(h-1)})^2$
        END FOR
    UNTIL ($\delta^{(h)} < \varepsilon$ or $h$ reaches $N_m$, the maximum number of iterations)

Herein, the convergence accuracy $\varepsilon$ is generally $10^{-3}$ and the maximum number of iterations is $N_m = 10$.

The accuracy of an imputation algorithm is evaluated by the root mean square error (RMSE):

$$\mathrm{RMSE} = \sqrt{\frac{\sum_{i=1}^{N} (\hat{y}_i - y_i)^2}{N}} \tag{8}$$

where $y_i$ is the actual value, $\hat{y}_i$ is the imputed value, and $N$ is the total number of missing values. The smaller the RMSE, the better the imputation accuracy, meaning that the estimated values are close to the exact ones.
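The procedure of Section IV (row-average initialization, Min-Max normalization, RRG-based neighbor selection, weighted-average imputation and the convergence test) can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the use of `None` for missing entries, the presence of a distinguishing coefficient `rho` in the RRC (carried over from the GRC), the choice of all other genes as candidates, and returning the result on the normalized scale are all assumptions made here. It also assumes at least two genes and at least one observed value per gene.

```python
def min_max_normalize(X):
    """Scale each column of X into [0, 1], as in equation (7)."""
    lo = [min(col) for col in zip(*X)]
    hi = [max(col) for col in zip(*X)]
    return [[(v - a) / (b - a) if b > a else 0.0
             for v, a, b in zip(row, lo, hi)] for row in X]


def rrg(xi, xj, lo, hi, rho=0.5):
    """Reduced relational grade of equations (3) and (4) for two complete rows."""
    # Largest distance from the target's values to the column extremes.
    big = max(max(abs(v - a), abs(v - b)) for v, a, b in zip(xi, lo, hi))
    if big == 0.0:  # degenerate case: every column is constant
        return 1.0
    return sum(rho * big / (abs(p - q) + rho * big)
               for p, q in zip(xi, xj)) / len(xi)


def rknn_impute(X, K=10, eps=1e-3, max_iter=10, rho=0.5):
    """Fill the None entries of X; the result is on the Min-Max normalized scale."""
    missing = [(i, t) for i, row in enumerate(X)
               for t, v in enumerate(row) if v is None]
    # Step 1: row-average initialization, then normalization.
    filled = []
    for row in X:
        obs = [v for v in row if v is not None]  # assumed non-empty
        filled.append([sum(obs) / len(obs) if v is None else v for v in row])
    cur = min_max_normalize(filled)
    lo = [min(col) for col in zip(*cur)]
    hi = [max(col) for col in zip(*cur)]
    gaps = {}
    for i, t in missing:
        gaps.setdefault(i, []).append(t)
    # Step 2: iterative imputation.
    for _ in range(max_iter):
        nxt = [row[:] for row in cur]
        for i, columns in gaps.items():
            # Candidates are all other genes from the previous iteration.
            scored = sorted(((rrg(cur[i], cur[j], lo, hi, rho), j)
                             for j in range(len(cur)) if j != i),
                            reverse=True)[:K]
            total = sum(s for s, _ in scored)
            for t in columns:  # equations (5) and (6)
                nxt[i][t] = sum(s * cur[j][t] for s, j in scored) / total
        # Mean squared change of the imputed values between iterations.
        delta = sum((nxt[i][t] - cur[i][t]) ** 2 for i, t in missing)
        delta /= max(len(missing), 1)
        cur = nxt
        if delta < eps:
            break
    return cur
```

For example, `rknn_impute([[1.0, 2.0, 3.0], [1.1, 2.1, None], [5.0, 6.0, 7.0], [5.1, None, 7.1]], K=2)` returns a complete 4 x 3 matrix in which the value imputed for the second gene lies closer to the first gene's value than to the third's, because the first gene receives the larger RRG weight.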


V. EXPERIMENTS AND ANALYSIS the type of data and missing ratio, but in theory, there is no

exact formula. Reference [18] designed a procedure for

A. Data selecting K automatically, and demonstrated that K can be set

Gene expression data from microarray technology is a to any value in the range 10-15. Reference [10] addressed this

matrix, which presents of expression level of various genes question in KNN method and reported the best results for K is

(rows) under different experimental conditions (columns). In in the range 10-20. It was fond experimentally that when K is

this study, we used five public available microarray data sets valued in [10, 15], the fluctuation of K can hardly affect the

in three different types obtained from the public genetic performance of algorithms. Therefore, we take K=10 in

databases: http://www.ncbi.nlm.nih.gov/geo/. subsequent experiments.

Two data sets (data sets NTS1 and NTS2) from the study in

C. Experimental evaluation on RRG

yeast Saccharomyces cerevisiae consist of non-time series

microarray data. The NTS1 is a comparison of cDNA coming In order to assess the performance of RRG, GRG was used

from mex67-5 temperature-sensitive mutant and that from as a reference. Whether RRG can improve the system

Mex67 wildtype strain both at 37℃, while NTS2 compares property when compared with GRG was indirectly showed by

cDNA from yra1-1 temperature-sensitive mutant with that the comparison results of RKNN and GKNN, since the only

distinction of these two algorithms lies in different similarity

from Yra1 wildtype strain both at 37℃ too. Both NS1 and

metric methods.

NS2 have six samples representing six experiments.

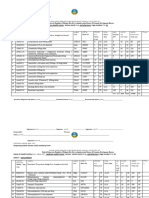

Comparison results are presented in Fig. 1, where Fig. 1(a)

The third and the fourth data sets (data sets TS1 and TS2)

display the RMSE on TS1 dataset with missing ratio 5%, 10%,

are time series data. TS1 tested the transcriptional response of

15%, 20%, respectively. Similarly, Fig. 1(b) and Fig. 1(c) are

S.cerevisiae to aeration after anaerobic growth. The six

the results on datasets NTS1 and MIX, separately.

attributes of TS1 stands for how long it has been aerated. TS2

The results show that the RMSE of the second iteration

contains the data from a cdc15-2-based sychronisation, which

dramatically decreases when compared with the first iteration

is composed of 25 attributes implying different culture time.

imputation both in RKNN and GKNN algorithms at different

The fifth data set belongs to a study of gene expression in

missing ratio. So iterative procedure can refine the imputation

Salmonella enterica after treating with 2mM hydrogen

value. From Fig 1, the two curves, which describe the

peroxide. It is termed by MIX, and contains both time and

performance of RKNN and GKNN, basically coincide in each

non-time course data.

subfigure. That indicates the two imputation algorithms based

TABLE I on RRG and GRG approximately have the same imputation

DIMENSION AND TYPES OF THE GENE EXPRESSION DATASETS accuracy, which means that considering neighbors selection

Data Original Data Complete Data Missing

and weight calculation, the same results as GRG are achieved

Type by applying RRG as the similarity metric.

set row column row column Rate

non-time

NTS1 7684 6 7106 6 1.46% TS1 5% TS1 10%

series 0.049 0.0496

GKNN GKNN

non-time RKNN 0.0494 RKNN

NTS2 7684 6 7589 6 0.04%

series 0.0485

time 0.0492

TS1 6495 6 3491 6 12.07%

series

0.049

time 0.048

TS2 8832 25 4078 25 16.10%

RMSE

RMSE

series 0.0488

mixed

MIX 5184 6 5151 6 0.11 0.0475

series 0.0486

0.0484

0.047

All of these five data sets suffer missing problems, 0.0482

especially TS2. Firstly, these data sets need to be

0.0465 0.048

pre-processed by removing genes with missing values to 0 5

Iteration

10 0 5

Iteration

10

obtain complete data sets. Table I shows the dimensions of the TS1 15% TS1 20%

original data matrices before and after pre-processing 0.056

GKNN

0.059

GKNN

(complete data). 0.0555 RKNN

0.058

RKNN

Before experimenting, missing values at different ratio 0.055

were introduced into these five complete data sets randomly, 0.0545 0.057

and then they are analyzed by imputation algorithm. 0.054

RMSE

RMSE

0.056

0.0535

B. Parameter K

0.053 0.055

KNN method or its variations have one thing in common: 0.0525

An appropriate K must be selected. The value of K can affect 0.054

0.052

the prediction of KNN method or its variations. If K is too

0.0515 0.053

large, the similarity of some neighbors will be insufficient, 0 5

Iteration

10 0 5

Iteration

10

and too much neighbors may result in imputation performance (a) Experimental results on TS1

reduction; if K is too small, it will strengthen a few neighbors

and the negative impact of noise data will increase

simultaneously. The value of K is empirically found related to
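For reference, the GRG baseline of equations (1) and (2), which GKNN uses where RKNN uses RRG, can be sketched as below. This is an illustrative sketch only: the function names and the list-of-lists data layout are hypothetical, rows are assumed complete (already imputed or initialized), and the two global extremes are computed in one pass over the candidate set for each target gene.

```python
def grg_scores(target, candidates, rho=0.5):
    """Gray relational grade of `target` against each candidate row (equations (1), (2))."""
    diffs = [[abs(a - b) for a, b in zip(target, row)] for row in candidates]
    d_min = min(min(row) for row in diffs)  # min over genes t and attributes k
    d_max = max(max(row) for row in diffs)  # max over genes t and attributes k
    if d_max == 0.0:  # every candidate equals the target
        return [1.0] * len(candidates)
    return [sum((d_min + rho * d_max) / (d + rho * d_max) for d in row) / len(row)
            for row in diffs]


def k_nearest(target, candidates, K=10, rho=0.5):
    """Indices of the K candidates with the largest GRG."""
    scores = grg_scores(target, candidates, rho)
    return sorted(range(len(candidates)), key=lambda j: scores[j], reverse=True)[:K]
```

With `target = [0.1, 0.2]` and candidates `[[0.1, 0.25], [0.9, 0.8], [0.0, 1.0]]`, the first candidate receives the highest grade and the second the lowest, so `k_nearest(..., K=2)` selects the first and third rows. Replacing the similarity function while keeping the rest of the imputation loop unchanged is what makes the RKNN versus GKNN comparison an indirect comparison of RRG and GRG.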


[Fig. 1(b). Experimental results on NTS1: RMSE versus iteration (0 to 10) for GKNN and RKNN, in four panels for missing ratios 5%, 10%, 15% and 20%.]

[Fig. 1(c). Experimental results on MIX: RMSE versus iteration (0 to 10) for GKNN and RKNN, in four panels for missing ratios 5%, 10%, 15% and 20%.]

Fig. 1. Experimental results for ten iterations on TS1, NTS1 and MIX datasets for RKNN and GKNN algorithms.

Table II presents the time consumed by the comparison experiments on dataset TS1; similarly, Table III and Table IV display the time consumed by RKNN and GKNN on dataset NTS1 and dataset MIX, respectively. Compared with GRG, the RRG proposed in this paper clearly decreases computational complexity significantly and reduces runtime effectively as well.

TABLE II
TIME CONSUMPTION OF RKNN AND GKNN FOR TEN ITERATIONS ON TS1 (ms)

Missing ratio   5%         10%        15%        20%
RKNN            2978       5388       6876       8096
GKNN            4111439    7176826    8904707    11435077

TABLE III
TIME CONSUMPTION OF RKNN AND GKNN FOR TEN ITERATIONS ON NTS1 (ms)

Missing ratio   5%         10%        15%        20%
RKNN            11582      21032      28565      31979
GKNN            33457400   60015902   79448905   96778006

TABLE IV
TIME CONSUMPTION OF RKNN AND GKNN FOR TEN ITERATIONS ON MIX (ms)

Missing ratio   5%         10%        15%        20%
RKNN            6185       10925      13945      18185
GKNN            14534205   23306022   29330607   36188027

Overall, RRG has the same performance as GRG in imputation accuracy. Moreover, RRG greatly reduces the time complexity.

D. Experimental evaluation on RKNN

In order to evaluate the proposed RKNN algorithm on microarray data sets, two algorithms were selected for comparison in our experiments. One is sequential KNN imputation (SKNN); the other is iterative KNN imputation (IKNN) [17], which turns the normal KNN method into an iterative imputation based on the iterative principle.

RKNN, SKNN and IKNN are applied to the five datasets TS1, TS2, NTS1, NTS2 and MIX at missing ratios of 5%, 10%, 15%, 20% and 25%. The prediction accuracy of these three imputation algorithms, measured by RMSE, is shown in Fig. 2.

[Fig. 2(d). Comparative results on TS1: RMSE versus missing ratio (5% to 25%) for RKNN, SKNN and IKNN.]
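The evaluation protocol used in these experiments (hide entries of a complete matrix at a chosen missing ratio, impute them, and score the estimates with the RMSE of equation (8)) can be sketched as follows. This is only an illustrative harness under assumptions: all function names and the seeding scheme are hypothetical, `row_mean_impute` is a simple stand-in for whichever imputer is being compared, and the paper does not state details such as how random positions were drawn.

```python
import math
import random


def mask_entries(X, ratio, seed=0):
    """Copy X and hide round(ratio * cells) randomly chosen entries as None."""
    rng = random.Random(seed)
    cells = [(i, t) for i in range(len(X)) for t in range(len(X[0]))]
    hidden = rng.sample(cells, round(ratio * len(cells)))
    masked = [row[:] for row in X]
    for i, t in hidden:
        masked[i][t] = None
    return masked, hidden


def row_mean_impute(X):
    """Stand-in imputer: fill each gap with the mean of its row's observed values."""
    observed = [v for row in X for v in row if v is not None]
    overall = sum(observed) / len(observed)  # fallback for fully hidden rows
    out = []
    for row in X:
        obs = [v for v in row if v is not None]
        fill = sum(obs) / len(obs) if obs else overall
        out.append([fill if v is None else v for v in row])
    return out


def rmse(estimates, truths):
    """Root mean square error of equation (8); assumes a non-empty input."""
    return math.sqrt(sum((e - y) ** 2 for e, y in zip(estimates, truths)) / len(truths))


def evaluate(X, imputer, ratios=(0.05, 0.10, 0.15, 0.20, 0.25), seed=0):
    """RMSE of `imputer` on the hidden entries of X for each missing ratio."""
    results = {}
    for ratio in ratios:
        masked, hidden = mask_entries(X, ratio, seed)
        completed = imputer(masked)
        results[ratio] = rmse([completed[i][t] for i, t in hidden],
                              [X[i][t] for i, t in hidden])
    return results
```

Calling `evaluate(X, row_mean_impute)` on a complete matrix `X` returns one RMSE per missing ratio. Passing a different imputer with the same signature, while keeping the seed fixed, compares algorithms on identical hidden positions.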


[Fig. 2(e). Comparative results on TS2: RMSE versus missing ratio (5% to 25%) for RKNN, SKNN and IKNN.]

[Fig. 2(f). Comparative results on NTS1: RMSE versus missing ratio (5% to 25%) for RKNN, SKNN and IKNN.]

[Fig. 2(g). Comparative results on NTS2: RMSE versus missing ratio (5% to 25%) for RKNN, SKNN and IKNN.]

[Fig. 2(h). Comparative results on MIX: RMSE versus missing ratio (5% to 25%) for RKNN, SKNN and IKNN.]

Fig. 2. Experimental results on datasets TS1, TS2, NTS1, NTS2 and MIX for three algorithms.

From Fig. 2, we find that the accuracy of the algorithms generally decreases as the missing ratio increases. Fig. 2(d), Fig. 2(e) and Fig. 2(h) present the results on the time series datasets TS1 and TS2 and on the mixed dataset MIX, respectively. The imputation accuracies of RKNN, SKNN and IKNN are close to each other on these three datasets, but RKNN still has the smallest estimation error. The advantage of RKNN is very obvious on the non-time series datasets NTS1 and NTS2, displayed in Fig. 2(f) and Fig. 2(g). The performance of the algorithms depends on the type of dataset, and RKNN is more appropriate for non-time series datasets. Hence, compared with the IKNN and SKNN algorithms, our method RKNN has the best performance.

VI. CONCLUSIONS

In this work, we proposed a new similarity metric named reduced relational grade (RRG), which is an improvement of GRG. The performance of RRG was indirectly assessed and compared with GRG over three datasets of different types at different missing ratios. In terms of estimation accuracy, RRG and GRG give similar results, but RRG significantly decreases the time complexity. Therefore, RRG is a more efficient method than GRG for capturing ‘nearness’ between two instances. Based on RRG, we further proposed an improved KNN method for estimating missing values in microarray gene expression data, named RKNN imputation. RKNN is able to utilize data efficiently and imputes missing values iteratively. We experimentally evaluated the performance of RKNN against the IKNN and SKNN algorithms on five datasets at different missing ratios. The results show that RKNN works well for imputing missing values. It should also be noted that the convergence accuracy and the maximum number of iterations can affect the performance of RKNN imputation, so how to determine them efficiently and reasonably will be the subject of further research.

REFERENCES

[1] Hoheisel J D. “Microarray technology: beyond transcript profiling and genotype analysis,” Nature Reviews Genetics, vol. 7, no. 3, pp. 200-210, 2006.
[2] Brevern A G D, Hazout S, Malpertuy A. “Influence of microarrays experiments missing values on the stability of gene groups by hierarchical clustering,” BMC Bioinformatics, vol. 5, no. 1, pp. 114-119, 2004.
[3] Yang Y H, Buckley M J, Dudoit S, et al. “Comparison of methods for image analysis on cDNA microarray data,” Journal of Computational and Graphical Statistics, vol. 11, no. 1, pp. 108-136, 2002.
[4] Junger W L, de Leon A P. “Imputation of missing data in time series for air pollutants,” Atmospheric Environment, vol. 102, pp. 96-104, Feb 2015.
[5] García-Laencina P J, Sancho-Gómez J L, Figueiras-Vidal A R, et al. “K nearest neighbours with mutual information for simultaneous classification and missing data imputation,” Neurocomputing, vol. 72, no. 7-9, pp. 1483-1493, 2009.
[6] Fukuta K, Okada Y. “LEAF: Leave-one-out Forward Selection method for information gene discovery in DNA microarray data,” IAENG International Journal of Computer Science, vol. 38, no. 2, pp. 160-167, 2011.
[7] Okada Y, Okubo K, Horton P, et al. “Exhaustive search method of gene expression modules and its application to human tissue data,” IAENG International Journal of Computer Science, vol. 34, no. 1, pp. 119-126, 2007.


[8] Moorthy K, Saberi Mohamad M, Deris S. “A Review on Missing Value Imputation Algorithms for Microarray Gene Expression Data,” Current Bioinformatics, vol. 9, no. 1, pp. 18-22, 2014.
[9] Song Q, Shepperd M, Chen X, et al. “Can k-NN imputation improve the performance of C4.5 with small software project data sets? A comparative evaluation,” The Journal of Systems and Software, vol. 81, no. 12, pp. 2361-2370, 2008.
[10] Troyanskaya O, Cantor M, Sherlock G, et al. “Missing value estimation methods for DNA microarrays,” Bioinformatics, vol. 17, no. 6, pp. 520-525, 2001.
[11] Liew A W C, Law N F, Yan H. “Missing value imputation for gene expression data: computational technique to recover missing data from available information,” Briefings in Bioinformatics, vol. 12, no. 5, pp. 498-513, 2010.
[12] Meng F, Cai C, Yan H. “A Bicluster-Based Bayesian Principal Component Analysis Method for Microarray Missing Value Estimation,” Biomedical and Health Informatics, vol. 18, no. 3, pp. 862-871, 2014.
[13] Zhang S. “Shell-neighbor method and its application in missing data,” Applied Intelligence, vol. 35, no. 1, pp. 123-133, 2011.
[14] Zhang S. “Nearest neighbor selection for iteratively KNN imputation,” The Journal of Systems and Software, vol. 85, no. 11, pp. 2541-2552, 2012.
[15] Riggi S, Riggi D, Riggi F. “Handling missing data for the identification of charged particles in a multilayer detector: A comparison between different imputation methods,” Nuclear Instruments and Methods in Physics Research A, vol. 780, pp. 81-90, Apr 2015.
[16] Zhang S, Jin Z, Zhu X. “NIIA: Nonparametric Iterative Imputation Algorithm,” Berlin: Springer-Verlag, 2008.
[17] Song Q, Shepperd M. “Predicting software project effort: A grey relational analysis based method,” Expert Systems with Applications, vol. 38, no. 6, pp. 7302-7316, 2011.
[18] Brás L P, Menezes J C. “Improving cluster-based missing value estimation of DNA microarray data,” Biomolecular Engineering, vol. 24, no. 2, pp. 273-282, 2007.


- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- AgilePM Template - Terms of Reference (ToR)Document5 pagesAgilePM Template - Terms of Reference (ToR)Abdul KhaliqNo ratings yet

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- Acsl 16-17 Contest 1 Notes - Recursive Functions Cns WDTPD 1Document10 pagesAcsl 16-17 Contest 1 Notes - Recursive Functions Cns WDTPD 1api-328824013No ratings yet

- Predicting Star Ratings Based On Annotated Reviews of Mobile AppsDocument8 pagesPredicting Star Ratings Based On Annotated Reviews of Mobile AppsAbdul KhaliqNo ratings yet

- Master Thesis - Niels DenissenDocument127 pagesMaster Thesis - Niels DenissenAbdul KhaliqNo ratings yet

- It Governance Mechanisms in Higher Education: SciencedirectDocument6 pagesIt Governance Mechanisms in Higher Education: SciencedirectAbdul KhaliqNo ratings yet

- How To Properly Size A Steam TrapDocument4 pagesHow To Properly Size A Steam TrapJessicalba Lou100% (1)

- Test Guideline No. 442CDocument40 pagesTest Guideline No. 442CAdriana HusniNo ratings yet

- Manea 2013 - Lean ProductionDocument8 pagesManea 2013 - Lean ProductionwoainiNo ratings yet

- Oscar WaoDocument8 pagesOscar Waoapi-235934457No ratings yet

- 1 - Introduction For PschologyDocument6 pages1 - Introduction For PschologyWeji ShNo ratings yet

- X-Sample Paper (UTS-09 by O.P. GUPTA)Document8 pagesX-Sample Paper (UTS-09 by O.P. GUPTA)Uma SundarNo ratings yet

- Orbis2 November Orbuzz 2019Document12 pagesOrbis2 November Orbuzz 2019Basavaraja NNo ratings yet

- Passwords Ma Rzo 07Document21 pagesPasswords Ma Rzo 07Nusrat Jahan MuniaNo ratings yet

- ASTM C59-C59M - 00 (Reapproved 2011)Document2 pagesASTM C59-C59M - 00 (Reapproved 2011)Black GokuNo ratings yet

- Fullerton Online Teacher Induction Program: New Teacher Email Subject Area Grade LevelDocument6 pagesFullerton Online Teacher Induction Program: New Teacher Email Subject Area Grade Levelapi-398397642No ratings yet

- Bhu1101 Lecture 3 NotesDocument5 pagesBhu1101 Lecture 3 NotesDouglasNo ratings yet

- Modeling Palm Bunch Ash Enhanced Bioremediation of Crude-Oil Contaminated SoilDocument5 pagesModeling Palm Bunch Ash Enhanced Bioremediation of Crude-Oil Contaminated SoilInternational Journal of Science and Engineering InvestigationsNo ratings yet

- Prepared By: Jonalyn G. Monares Checked By: Ma. Romila D. Uy Teacher 1 English Head Teacher IiiDocument39 pagesPrepared By: Jonalyn G. Monares Checked By: Ma. Romila D. Uy Teacher 1 English Head Teacher IiiJanethRonard BaculioNo ratings yet

- Focus Group DiscussionDocument9 pagesFocus Group DiscussionPaulo MoralesNo ratings yet

- Masters of DeceptionDocument1 pageMasters of DeceptionDoug GrandtNo ratings yet

- Roto NT - Catalogue For Timber ProfilesDocument284 pagesRoto NT - Catalogue For Timber ProfilesThomas WilliamsNo ratings yet

- Lesson 2: Happiness and Ultimate Purpose: τέλος (Telos)Document2 pagesLesson 2: Happiness and Ultimate Purpose: τέλος (Telos)CJ Ibale100% (1)

- DPU User ManualDocument70 pagesDPU User Manualyury_dankoNo ratings yet

- Kanban Card 15Document28 pagesKanban Card 15Byron Montejo100% (1)

- 10 Research Definition With RefferenceDocument5 pages10 Research Definition With RefferenceZuhaib Sagar100% (3)

- Dispensary APTsDocument12 pagesDispensary APTsTewodros TafereNo ratings yet

- Power System Stability Course Notes PART-1: Dr. A.Professor Mohammed Tawfeeq Al-ZuhairiDocument8 pagesPower System Stability Course Notes PART-1: Dr. A.Professor Mohammed Tawfeeq Al-Zuhairidishore312100% (1)

- Files PDFDocument1 pageFiles PDFSouravNo ratings yet

- Common Design Patterns For AndroidDocument9 pagesCommon Design Patterns For Androidaamir3g2912No ratings yet

- Only $5: Amazo MDocument55 pagesOnly $5: Amazo MTa TaNo ratings yet

- John Deere 6090hf475 Installation DrawingDocument1 pageJohn Deere 6090hf475 Installation Drawinganna100% (56)

- stm32 Education Step2Document11 pagesstm32 Education Step2Nanang Roni WibowoNo ratings yet

- Interpreting Electronic Sound Technology in The Contemporary Javanese SoundscapeDocument3 pagesInterpreting Electronic Sound Technology in The Contemporary Javanese SoundscapeJey JuniorNo ratings yet

- 4G 2G 2F 3G UphillDocument2 pages4G 2G 2F 3G Uphillamit singhNo ratings yet