Quantum Analogue Computing

By Vivien M. Kendon¹, Kae Nemoto² and William J. Munro²,¹

¹ School of Physics and Astronomy, University of Leeds, Leeds LS2 9JT, UK

² National Institute of Informatics, 2-1-2 Hitotsubashi, Chiyoda-ku, Tokyo 101-8430, Japan

arXiv:1001.2215v1 [quant-ph] 13 Jan 2010

We briefly review what a quantum computer is, what it promises to do for us, and

why it is so hard to build one. Among the first applications anticipated to bear

fruit is quantum simulation of quantum systems. While most quantum computation

is an extension of classical digital computation, quantum simulation differs

fundamentally in how the data is encoded in the quantum computer. To perform

a quantum simulation, the Hilbert space of the system to be simulated is mapped

directly onto the Hilbert space of the (logical) qubits in the quantum computer.

This type of direct correspondence is how data is encoded in a classical analogue

computer. There is no binary encoding, and increasing precision becomes exponen-

tially costly: an extra bit of precision doubles the size of the computer. This has

important consequences for both the precision and error correction requirements of

quantum simulation, and significant open questions remain about its practicality.

It also means that the quantum version of analogue computers, continuous variable

quantum computers (CVQC), becomes an equally efficient architecture for quantum

simulation. Lessons from past use of classical analogue computers can help us to

build better quantum simulators in future.

Keywords: quantum information, quantum computation, quantum simulation

1. Introduction

It is a quarter of a century since the seminal work of Feynman (1982) and Deutsch

(1985) introduced the concept of quantum computation, and fifteen years since Shor

(1997) produced an efficient quantum algorithm for factoring large numbers, thereby

raising the game for cryptographic schemes by suggesting a quantum computer

could break them more easily. Yet working quantum computers are still just toys

in a test tube performing calculations a child can do in their head. This is not for

want of trying. Spurred on by the seminal work of Shor (1995), Laflamme et al.

(1996) and Steane (1996), who showed how quantum error correction can be used to

protect quantum systems from decoherence for long enough to run a computation,

the growth in research has been phenomenal on both theoretical and experimental

aspects of the challenge to construct a quantum computer. Steady progress has been

made over the intervening years, and there are a plethora of promising architectures

on the drawing board and the laboratory bench. There is currently no clear front

runner in the pack. We are at a stage of development equivalent to that of classical

computers before the advent of silicon chips (a mere fifty years ago). We know a

lot about what a quantum computer should be able to do, and what components

it needs, but we haven’t identified the best materials to build it with, nor what it

will be most useful for calculating.

Article submitted to Royal Society TEX Paper

[Figure 1 schematic: a qubit register is prepared with the input data plus workspace qubits; H gates produce a superposition of all possible inputs; quantum gates (such as CNOT) carry out the computation; measurements (M) give the answer. The axis is labelled time.]

Figure 1. Digital quantum computation (gate model).

2. Quantum computing

Feynman and Deutsch both (independently) perceived that a superposition of mul-

tiple quantum trajectories looks like a classical parallel computer, which calculates

the result of many different input values in the time it takes for one processor to

do one input value. Except the quantum system doesn’t need a stack of CPUs, the

parallel processing comes ‘for free’ with quantum dynamics, potentially providing

a vast economy of scale over classical computation. Of course, there is no ‘free

lunch’. Despite doing all the calculations in superposition, we can only access one

of the results when we measure the final state of the quantum computer. Figure 1

illustrates schematically how a simple gate model quantum computer works.
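As a minimal sketch of the first and last stages in figure 1, the following Python fragment applies a Hadamard gate to each qubit of a small register and then samples one measurement outcome. The function names and the list-of-amplitudes representation are illustrative assumptions, not part of any particular quantum software package. Because the classical state vector has 2^n entries, this is only practical for a handful of qubits.

```python
import math
import random

def apply_hadamard(state, qubit):
    """Apply a Hadamard gate to one qubit of a state vector (list of amplitudes)."""
    new = state[:]
    bit = 1 << qubit
    s = 1 / math.sqrt(2)
    for i in range(len(state)):
        if i & bit == 0:
            a, b = state[i], state[i | bit]
            new[i] = s * (a + b)
            new[i | bit] = s * (a - b)
    return new

def uniform_superposition(n_qubits):
    """Start in |00...0> and apply H to every qubit."""
    state = [0.0] * (2 ** n_qubits)
    state[0] = 1.0
    for q in range(n_qubits):
        state = apply_hadamard(state, q)
    return state

def measure(state, rng=random):
    """Sample a single basis state with probability |amplitude|^2."""
    probs = [abs(a) ** 2 for a in state]
    return rng.choices(range(len(state)), weights=probs)[0]

state = uniform_superposition(3)
print(state)           # eight equal amplitudes
print(measure(state))  # one outcome per run, in range 0..7
```

Each call to `measure` returns only one of the eight possible inputs, which is the access restriction described above.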

Programming for a quantum computer is thus trickier than classical program-

ming: you have to include a step to select the answer you want (at least with high

probability) out of all the possible results. Quantum programming techniques can

be grouped into a few distinct types: promise problems, like Deutsch and Jozsa

(1992); hidden subgroup problems, like Shor (1997) for factoring; search algorithms

like Grover (1996); and quantum versions of random walks (see Ambainis (2004) for

a review, and Kendon (2006) for a gentle introduction). Some techniques are best

understood as a different architecture rather than a different programming method.

Minimisation problems, such as finding ground state energies, or satisfiability prob-

lems (kSAT) are best tackled using adiabatic quantum computation (Farhi et al.

2000). Adiabatic quantum computation has been proved to be equivalent to the

standard gate model of quantum computing (Aharonov et al. 2007), meaning that

we will be able to run adiabatic quantum algorithms on any quantum computer.

Many of these potential applications will require very large quantum comput-

ers before they gain an advantage over classical computers. For example, the best

classical factoring to date (Bahr et al. 2005) can handle a 200 digit number (RSA-

200), which is about 665 bits. For an n-bit input number, a basic implementation

of Shor’s quantum factoring algorithm needs 2n qubits in the QFT register plus

5n qubits for modular exponentiation = 7n logical qubits, so a 665 bit number

needs 4655 logical qubits. For a quantum computer of this size, error correction will

be essential. How much this increases the number of physical qubits depends on

the error rates. The closer to the threshold rates of $10^{-3}$ to $10^{-4}$, the more error

correction is necessary (Devitt et al. 2009). These threshold error rates are smaller

than any experiment has yet achieved. For low error rates, maybe 20–200 physical

qubits per logical qubit are sufficient, while for high error rates (close to the threshold)

it blows up quickly to around $10^5$ per logical qubit. This suggests we may need Teraqubit

quantum computers to break factoring. While the scaling favours quantum,

the crossover point is high.
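The qubit counts quoted above can be reproduced with a few lines of arithmetic. This is a sketch only: the function names are hypothetical, the 7n formula and the overhead factors are the ones stated in the text, and any further overheads (such as larger key sizes) are not modelled.

```python
def shor_logical_qubits(n_bits):
    """Logical qubits for the basic implementation quoted in the text:
    2n for the QFT register plus 5n for modular exponentiation."""
    return 2 * n_bits + 5 * n_bits

def physical_qubits(logical, overhead_per_logical):
    """Physical qubits for a given error-correction overhead per logical qubit."""
    return logical * overhead_per_logical

logical = shor_logical_qubits(665)
print(logical)
for overhead in (20, 200, 10**5):
    print(overhead, physical_qubits(logical, overhead))
```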

The picture is rather more promising for using a quantum computer to simulate

a quantum system, Feynman’s original inspiration for quantum computation. For

example, a system consisting of $N$ 2-state quantum particles has $2^N$ possible states,

and in general, it could be in superposition of all of them. Classical simulation of

such a system requires one complex number per state, which amounts to $2^{N+1} \times$ size-of-double.

With 1 Gbyte of memory, a system of $N = 26$ of the 2-state quantum

particles can be accommodated. The record set by De Raedt et al. (2007) is $N = 36$

in 1 Terabyte of memory. Each additional particle doubles the amount of memory

required. Simulating more than 40 or so qubits in a general superposition state

is beyond current classical capabilities. If the system is restricted to only part of

its full Hilbert space, not all superpositions are needed, and with appropriately

designed methods to take this into account, larger classical simulations are possible

(Verstraete et al. 2004). This suggests that quantum computers with upwards of 40

logical qubits could perhaps do something useful for us we can’t accomplish with

classical simulation, in stark contrast to the Teraqubits required for factoring.
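The memory figures above follow directly from the state-vector size. A minimal sketch, assuming 8-byte doubles and binary units (1 Gbyte taken as 2^30 bytes); the function names are illustrative:

```python
BYTES_PER_DOUBLE = 8  # assumption: IEEE double precision

def state_vector_bytes(n_particles):
    """Memory for 2**N complex amplitudes, each stored as two doubles."""
    return 2 ** (n_particles + 1) * BYTES_PER_DOUBLE

def max_particles(memory_bytes):
    """Largest N whose full state vector fits in the given memory."""
    n = 0
    while state_vector_bytes(n + 1) <= memory_bytes:
        n += 1
    return n

print(max_particles(2 ** 30))  # 1 Gbyte
print(max_particles(2 ** 40))  # 1 Terabyte
```

Under these assumptions the two calls return 26 and 36, matching the numbers in the text, and each additional particle doubles `state_vector_bytes`.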

While a classical simulation allows the full quantum state to be tracked through-

out, data extraction from quantum simulations is not nearly so easy. Each run pro-

vides only a single snapshot of the outcome of a measurement on the superposition

state of the system. But methods have been designed for extracting key parame-

ters, such as energy gaps (Wu et al. 2002), or correlation functions (Somma et al.

2002) from quantum simulations. Open problems in the theory of nanoscale super-

conducting materials are one example where quantum simulation could make an

impact on the development of the field.

Quantum simulation has been demonstrated (Somaroo et al. 1999), for small

systems using NMR quantum computers. So what is stopping us from building a

quantum computer with 40 to 100 qubits tomorrow? Quite simply, this is extremely

demanding technology to design and build. It requires single quantum particles to be

localised and controlled for long enough to apply hundreds or thousands of precise

manipulations to them, singly or in pairs, and then measured individually with high

fidelity. And throughout the process, unwanted interactions from nearby material

or light must be prevented, or minimised and any remaining effects reversed using

error correction techniques. Current state of the art for a quantum simulator is

≲ 10 qubits. Scaling up to 50 with a few hundred manipulations is believable with

the steady rate of development the experimentalists have been delivering. And it

is believable that at around 50 qubits, a quantum simulator could perform a short

computation without needing significant amounts of error correction. Going beyond

this without error correction is stretching credibility based on what we know today.

But error correction designed for digital quantum computers doesn’t work well

enough for a quantum simulator. We will elaborate on this point in section 4, after

discussing simulation in general, to set the problem in context.

3. Simulation

Number   Unary      Binary
0                   0
1        •          1
2        ••         10
3        •••        11
4        ••••       100
···      ···        ···
N        N × •      $\log_2 N$ bits

Figure 2. Binary counting needs exponentially less memory than unary.

Computer simulations play an important role in most areas of science. Numerical

methods kick in wherever analytic calculations become too complicated or

intractable, which can happen even for simple systems, like three bodies moving

under their mutual gravitational interactions. We use computer simulation to test

our models of the real world, by calculating in detail what they predict, then com-

paring this with our experimental observations. We don’t expect perfect agreement,

both experiments and computations have limited precision, and we may know the

model is only an approximation (to simplify the computation). If our calculations

and observations agree as well as we anticipate, this is evidence our models are

good, and hence we understand at some level how the system works. We may then

use our computer simulations to predict things we haven’t yet observed, or provide

more details of processes that are hard to observe by experiment. We may even

simulate systems that can’t, or don’t, exist in nature, to better understand why

nature is the way it is.

That computation of any sort works in a useful way is not trivial. An accurate

calculation of the trajectory of a space probe that takes five years to reach its

destination is crucial: we cannot just launch many test probes and see where they

end up in order to work out which trajectory we want. One key difference between

a classical physical system and a computer simulation of it is that we represent

it using binary numbers (0 and 1) in a register in the computer. This is like the

difference between counting on your fingers and writing down the number 8. Eight

has just one symbol in place of your eight fingers. Eight people have 64 fingers

between them and that only took two symbols to write down. This makes for a

huge saving in the amount of memory a computer needs compared to the size of

the physical system, see figure 2. Binary numbers mean counting using base two,

whereas we usually use base ten. The saving in memory is huge whichever base we

use, but the inner workings of the computer are easier to arrange using binary.
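The saving illustrated in figure 2 can be checked with a short sketch. The function names are illustrative; the unary count is one symbol per unit, and the binary count is the number of binary digits needed to write the number.

```python
def unary_symbols(n):
    """Unary encoding: one symbol (one 'finger') per unit."""
    return n

def binary_bits(n):
    """Binary encoding: number of binary digits needed to write n."""
    return max(1, n.bit_length())

for n in (4, 64, 10**6):
    print(n, unary_symbols(n), binary_bits(n), format(n, "b"))
```

For a million, unary needs a million symbols while binary needs 20 bits.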

A second important reason why computer simulations work depends on the com-

plexity of the model compared with the complexity of the system being studied.

Stars and planets are highly complex systems, but we don’t need to model most

of the details to predict to very high precision how the planet will orbit the star.

Gravitation is a supremely simple theory compared with systems it can correctly

describe the behaviour of. This is part of the way our world appears to be, described

as the “unreasonable effectiveness of mathematics” by Wigner (1960). More discus-

sion of simulating complex classical systems, and how to apply quantum computers

to this task, can be found in Harris and Kendon (2010); here we are specifically

interested in simulating quantum systems.

4. Efficiency and precision

For practical purposes, an efficient computation is one that gives answers in rela-

tively short human time scales (seconds, minutes, hours or days) at a cost we can

afford to pay. Computer scientists make quantitative comparisons between differ-

ent algorithms by associating a cost with each step of the algorithm and with the

amount of memory required to hold the data. This abstracts the idea that physical

computers have a limited size (memory) and maximum rate of elementary calcula-

tion steps per unit time. In most cases, ‘efficient’ means the algorithms use resources

that scale as a simple polynomial (like $N^2$) of the size $N$ of the problem.

If we could efficiently simulate a quantum algorithm on a classical computer, we

would immediately have a classical algorithm that is sufficiently good to render the

quantum algorithm superfluous. To prove an algorithmic speed up for a quantum

algorithm, we have to prove that no classical algorithm of any type can do as well:

such proofs are in general very difficult. Shor’s algorithm (Shor 1997) is only the

‘best known’ algorithm for factoring, there is no proof something classical and faster

can’t be found in future.

Just as we can’t usually make a perfect measurement that determines exactly

how long or heavy something is, we also generally have to represent numbers in a

computer to a fixed precision. As the computation proceeds, the errors due to the

fixed precision slowly grow. For very long computations, they can overwhelm the

answer and render it useless. The accuracy we require for our answer thus places an-

other demand on how much memory and time we need to perform our computation.

Starting out with a higher precision to represent our numbers allows high precision

for the answer. If we represent our numbers as floating point with an exponent, e.g.,

$0.1011101101 \times 2^{4}$, then for an error of size $\varepsilon$, the number of bits (binary digits) of

precision is $\log_2(1/\varepsilon)$. For the example just given, which has ten bits of precision,

$\varepsilon = 2^{-10}$. Efficient algorithms require resources (time and memory) that scale as

$\log_2(1/\varepsilon)$. Algorithms running on digital computers, that store their numbers in

this binary format, usually have this logarithmic scaling for precision. However, for

computers that store information in a unary encoding, such as quantum simulators,

the resources scale as 1/ε, so the same increase in precision costs exponentially more

than it does for their binary encoded counterparts.
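The contrast between the two scalings can be made concrete with a small sketch. The function names are illustrative, and "resources" is simply counted as bits for the binary case and distinguishable levels for the unary case.

```python
import math

def binary_resources(eps):
    """Bits of precision needed for error eps: log2(1/eps)."""
    return math.ceil(math.log2(1 / eps))

def unary_resources(eps):
    """Distinguishable levels needed in a unary (analogue) encoding: 1/eps."""
    return math.ceil(1 / eps)

for bits in (10, 11, 20):
    eps = 2.0 ** -bits
    print(bits, binary_resources(eps), unary_resources(eps))
```

One extra bit of precision adds one unit to the binary cost but doubles the unary cost.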

Approximating real numbers to a fixed precision introduces errors into calcu-

lations that grow larger as the computation proceeds. If the computation requires

many steps, it may be necessary to use a higher precision to avoid the errors swamp-

ing the final answer. As well as errors arising from the limited precision, the oper-

ation of the computer itself may be imperfect and introduce random errors. In our

present day classical computers, such errors are so unlikely to happen we can forget

about them. In contrast, error correction for digital quantum computation will be

essential beyond about 100 qubits and 100 computational steps.

5. Quantum simulation of quantum systems

[Figure 3 schematic: the four GPAC operation boxes, labelled adder, multiplier, integrator and constant, with outputs such as x+y, a·x and ∫x dy.]

Figure 3. GPAC operation set.

The main reason why classical simulations of quantum systems are not efficient is

because the memory required scales exponentially in the system size, due to the

exponential number of quantum superpositions. This can be solved by using the

efficient storage achieved by quantum computers. Mapping the Hilbert space of the

system directly onto the Hilbert space of the quantum computer gives us efficient

memory use. We also require that a quantum simulator can run using an efficient

number of time steps. Lloyd (1996) showed that the Hamiltonian evolution of the

quantum system can be decomposed into a sequence of standard Hamiltonians

by using the Trotter approximation. Each standard Hamiltonian is applied for a

short time to build up the desired evolution. The number of steps required scales

polynomially in the accuracy, so this part of the algorithm is also efficient.
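A minimal numerical sketch of the Trotter approximation, for a single qubit with the illustrative Hamiltonian H = X + Z (our choice for this example, not one taken from Lloyd (1996)). The evolution exp(-iHt) is approximated by n alternating short steps of exp(-iXt/n) and exp(-iZt/n), and the error is compared with the exact result. All function names are hypothetical.

```python
import math

I2 = [[1, 0], [0, 1]]
X = [[0, 1], [1, 0]]
Z = [[1, 0], [0, -1]]

def matmul(a, b):
    """Product of two 2x2 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def exp_pauli(p, theta):
    """exp(-i*theta*P) for any P with P*P = I: cos(theta) I - i sin(theta) P."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c * I2[i][j] - 1j * s * p[i][j] for j in range(2)] for i in range(2)]

def exact(t):
    """Exact exp(-i (X+Z) t), using X + Z = sqrt(2) P with P*P = I."""
    r = math.sqrt(2)
    p = [[(X[i][j] + Z[i][j]) / r for j in range(2)] for i in range(2)]
    return exp_pauli(p, r * t)

def trotter(t, n):
    """First-order Trotter product: (exp(-i X t/n) exp(-i Z t/n))^n."""
    step = matmul(exp_pauli(X, t / n), exp_pauli(Z, t / n))
    u = I2
    for _ in range(n):
        u = matmul(u, step)
    return u

def trotter_error(t, n):
    """Largest entrywise difference between exact and Trotterised evolution."""
    a, b = exact(t), trotter(t, n)
    return max(abs(a[i][j] - b[i][j]) for i in range(2) for j in range(2))

for n in (10, 100, 1000):
    print(n, trotter_error(1.0, n))
```

In this example the error falls roughly in proportion to 1/n, so the number of steps grows only polynomially with the accuracy required.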

However, any small errors or imperfections in the operation of the quantum

simulator will affect the result in a linear rather than logarithmic way, because the

system is mapped directly onto the quantum simulator, rather than being binary

encoded into numbers in qubit registers. Consequently, we will need exponentially

more resources to correct for these errors. This raises the prospect of crippling error

correction requirements for practical quantum simulators (Brown et al. 2006).

Quantum simulation has much in common with analogue computation in the

way the data is encoded. It shares the problem that an extra bit of precision requires

a doubling of the resources. However, this suggests two avenues for the development

of quantum simulation that have not yet been well-explored. Firstly, looking back

to the era of analogue computation can teach us lessons for how to make quan-

tum simulation practically useful, despite the unfavorable scaling of the resources.

Secondly, we can consider quantum versions of analogue computation for quantum

simulation architectures. We discuss both these ideas in the next sections.

6. Analogue computing

Despite the exponential saving in memory gained through binary encoding, the

earliest computers didn’t use it. From the invention of the astrolabe for plotting

the heavens in around 200 BC, through the slide rule, and mechanical differential

analyser (Thomson 1876), these computational devices represented the quantity

they were computing as the size of some part of the apparatus. If you wanted a more

accurate answer, you had to build a larger device: for another bit of precision, it

would need to be twice the size. Yet, for the problems these machines were designed

to solve, they were very effective in practice.

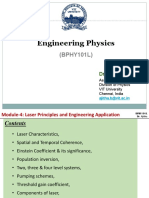

Shannon (1941) provided a theoretical model, the General Purpose Analogue

Computer (GPAC), which is a mathematical description of the differential analyser.

The GPAC consists of a set of nonlinear boxes, connected by their inputs and

outputs. Four basic operations are sufficient, shown in figure 3. There are a few

rules for how the boxes can be connected. Each output of a box goes to at most one

input; inputs cannot be interconnected, i.e., there is no “splitter” to allow the same

input to go to more than one box; similarly, outputs cannot be interconnected. It

has been proved by Pour-El (1978), and Lipshitz and Rubel (1981) that the set of

functions generated this way is the set of differentially algebraic functions. In other

words, we can solve any ordinary differential equation with this type of device,

given appropriate boundary conditions as part of the input. This abstract notion

of computation can be realised in many different physical systems, such as water in

pipes or electronic circuits. Such systems were quite common in university science

departments before digital computers became widespread.
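As an illustration of how integrator and constant boxes combine to solve a differential equation, the following sketch digitally emulates a two-integrator loop for x'' = -x with x(0) = 1, x'(0) = 0. This is a discretised stand-in for the continuous analogue device: the step size, the function names and the update order are our assumptions, and no attempt is made to enforce the GPAC connection rules.

```python
import math

def integrator(state, rate, dt):
    """One small time step of an integrator box: accumulate rate * dt."""
    return state + rate * dt

def constant_multiplier(a, x):
    """Constant box: multiply the input by a fixed value a."""
    return a * x

def harmonic_oscillator(t_end, dt=1e-4):
    """Emulate two coupled integrators solving x'' = -x."""
    x, v = 1.0, 0.0  # boundary conditions supplied as part of the input
    for _ in range(round(t_end / dt)):
        v = integrator(v, constant_multiplier(-1.0, x), dt)  # v' = -x
        x = integrator(x, v, dt)                             # x' = v
    return x

print(harmonic_oscillator(1.0), math.cos(1.0))
```

The emulated output tracks cos(t), the exact solution, to within the discretisation error.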

There have been a number of suggestions for how to expand the model by adding

more operations to the available set e.g., Rubel (1993), Graca (2004), Mills (2008).

These are mathematical extensions and it isn’t clear whether they can be physically

constructed, raising the question of just exactly what we can in practice compute

given the way the physical world works. Rubel (1981) observed that there are simple

differential equations that are universal in the sense that they can approximate

any other differential equation to arbitrary accuracy. In practice, these probably

aren’t useful for computation: attempts to build a circuit that implements such a

differential equation turn out to be completely unstable (Mills 2009). While Rubel

(1989) proved that a classical digital computer can efficiently simulate an analogue

computer, the reverse question of whether an analogue computer can simulate a

digital computer efficiently is open.

Analogue computers might still provide a useful function in our digital world:

for certain calculations they are extremely fast. When a specific task is required

repeatedly (e.g., video rendering in real time), a dedicated analogue “chip” might

outperform a digital circuit.

7. Continuous variable quantum computing

There is a fully quantum version of analogue computing, usually known as continu-

ous variable quantum computing (CVQC) and first described by Lloyd and Braunstein

(1999). The information is encoded in the eigenstates of a continuous-spectrum

operator such as position x̂ or momentum p̂. Computations are carried out by

manipulating the physical states. Position and momentum are a conjugate pair of

operators, which are orthogonal in the sense that [x̂, p̂] = i up to a real normalisation

constant. They act on the Hilbert space $L^2(\mathbb{R})$, the space of square-integrable

functions over $\mathbb{R}$ (square-integrable is important as it corresponds to being normalisable).

To perform a quantum computation, we create an initial state, evolve the

state in a prescribed way by applying an appropriate Hamiltonian to the state, and

then perform a measurement from which we can extract the results.

Universal computation can be done in CVQC using a small set of elementary

operations. Any Hamiltonian can be written as a Hermitian polynomial in the

position and momentum operators x̂ and p̂, and we can generate any Hermitian

polynomial in x̂ and p̂ by using the following set of operators (Lloyd and Braunstein

1999):

1. simple linear operations, $\{\pm\hat{x}, \pm\hat{p}\}$, and quadratic, e.g., $\hat{x}^2 + \hat{p}^2$,

2. a non-linear operation that is at least cubic, i.e., $\hat{x}^3$ or $\hat{p}^3$; the Kerr Hamiltonian

$H_{\mathrm{Kerr}} = (\hat{x}^2 + \hat{p}^2)^2$ may be easier experimentally,

3. an interaction to couple two modes together, e.g., the beam splitter operation.
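To see why an operation of at least cubic order is needed, it may help to look at how commutators change the order of a polynomial. Taking $[\hat{x}, \hat{p}] = i$ (the normalisation is an assumption here), a short calculation gives:

```latex
\begin{align}
[\hat{x}^2, \hat{p}^2] &= 2i\,(\hat{x}\hat{p} + \hat{p}\hat{x}), \\
[\hat{x}^3, \hat{p}^2] &= 3i\,(\hat{x}^2\hat{p} + \hat{p}\hat{x}^2), \\
[\hat{x}^3, \hat{p}^3] &= 3i\,(\hat{x}^2\hat{p}^2 + \hat{p}\hat{x}^2\hat{p} + \hat{p}^2\hat{x}^2).
\end{align}
```

Commutators of quadratic terms stay quadratic, whereas the commutator of two cubic terms is of fourth order. Repeated commutation with a cubic term can therefore build up polynomials of increasing order, which linear and quadratic operations alone cannot do.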

It is easy to see how this works when the continuous quantity is the position, x

(say). After evolving the state with some Hamiltonian, measuring the new position

gives us the answer (or one possible answer out of a superposition or distribution).

But the Heisenberg uncertainty principle means that if we know the position ex-

actly, the momentum is totally undetermined. This asymmetry in the uncertainty

is known as squeezing, and using a single quadrature like this requires infinitely

squeezed states, which are unphysical. Experimentally, we can’t even get a good

approximation of an infinitely squeezed state, so for practical purposes, we need to

choose a different way to encode our information, and the obvious choice is to use

Gaussian states, which have the uncertainty spread between the two quadratures x

and p. The nonlinear operation in our CVQC will evolve the state into something

that is no longer a Gaussian state: if it didn’t, the whole computation would be

efficiently classically simulatable (Bartlett et al. 2002). This corresponds to having

a superposition or distribution of different answers: when we make a measurement,

we will interpret the outcome as a Gaussian state from which we can extract the

result of our computation.

Implementing CVQC in a real system may require some modification to the set

of elementary gates, to adapt it to what is experimentally practical, for an exam-

ple, see Wagner et al. (2010). While continuous variables are a practical choice for

many quantum communications tasks (see Braunstein and van Loock (2005) for a

review), very little has been developed for CVQC. In particular, it shares the un-

favorable scaling of resources with precision that classical analogue and quantum

simulation have, and standard error correction results for digital quantum compu-

tation don’t apply (Niset et al. 2009).

8. CVQC for quantum simulation

For quantum simulation, where the digital advantage of binary encoding is already

lost, CVQC deserves a critical second look. Many quantum systems are naturally

continuous: for these a CVQC quantum simulation would be especially appropriate.

Experimental systems suitable for CVQC can be developed from many of the ar-

chitectures currently proposed for digital quantum computing: whenever qubits are

coupled using some sort of field mode, this can be inverted by regarding the field

mode as the continuous variable and the qubits as the control system. Wagner et al.

(2010) describes how the micromaser can be used this way, and the photonic mod-

ule of Devitt et al. (2007) is also promising. Squeezing is one of the elementary

operations we need in CVQC when encoding with Gaussian states, and we can

use the degree of squeezing to estimate how large our system needs to be to beat

classical simulation. Suzuki et al. (2006) have achieved 7 dB of squeezing in optical

experiments. For one mode, 7 dB of squeezing corresponds to 2–3 bits of precision

i.e., around 5 distinguishable outcomes when the state is measured. With arbi-

trary perfect coupling, going beyond what we can simulate classically corresponds

to more than 17 modes coupled together, since the combination takes us above 40

equivalent (qu)bits. Experimentally, nine modes have been combined in CV error

correction by Aoki et al. (2009), though this stays within Gaussian states and is

thus still efficiently classically simulatable. This is a very rough estimate, because

evolving the system accurately in a classical simulation would in practice require

higher precision to produce the required final precision for the output. On the other

hand, we can’t achieve arbitrary coupled states with current technology. But it does

give a feel for the potential for CVQC quantum simulation.
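The rough estimate above can be reproduced as follows. This sketch assumes that the number of distinguishable outcomes per mode equals the squeezing ratio 10^(dB/10), an assumption chosen because it reproduces the figures quoted in the text; the function names and the 40-bit target are illustrative.

```python
import math

def distinguishable_outcomes(squeezing_db):
    """Assumed: outcomes per mode equal the squeezing ratio 10^(dB/10)."""
    return 10 ** (squeezing_db / 10)

def bits_per_mode(squeezing_db):
    """Equivalent bits of precision per mode."""
    return math.log2(distinguishable_outcomes(squeezing_db))

def modes_needed(squeezing_db, target_bits=40):
    """Smallest number of coupled modes reaching the target in equivalent bits."""
    return math.ceil(target_bits / bits_per_mode(squeezing_db))

print(distinguishable_outcomes(7))
print(bits_per_mode(7))
print(modes_needed(7))
```

With 7 dB this gives about 5 outcomes and between 2 and 3 bits per mode, so 17 modes fall just short of 40 equivalent bits and an 18th is needed.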

9. The future of quantum simulation

The goal of building a quantum computer large enough to solve problems beyond

the reach of the classical computational power available to us is coming closer. The

first useful application for quantum computers is likely to be simulation of quantum

systems. Small quantum simulations have already been demonstrated, and useful

quantum simulations – that solve problems inaccessible to classical computation

– require fewer than a hundred qubits and may be viable without error correction.

While the theoretical underpinnings of digital computation are well developed, there

are significant gaps in our understanding of analogue computing theory, and these

are in turn slowing the progress of CVQC and quantum simulation. Investment in

developing the missing theory is as crucial for the future development of quantum

technology as experimental progress in quantum coherence and quantum control.

We thank Katherine Brown, Simon Devitt, Mark Everitt, Martin Jones, and Rob Wagner

for useful and stimulating discussions. Thanks to Mark Everitt and Rob Wagner for drafts

of figure 3. VK is funded by a Royal Society University Research Fellowship. WM and KN

acknowledge funding from the EU project HIP. This work was supported by a UK Royal

Society International Joint Project Grant.

References

Aharonov, D., van Dam, W., Kempe, J., Landau, Z., Lloyd, S., Regev, O., 2007. Adiabatic

quantum computation is equivalent to standard quantum computation. SIAM Journal

on Computing 37, 166, arXiv:quant-ph/0405098.

Ambainis, A., 2004. Quantum walk algorithms for element distinctness. In: 45th Annual

IEEE Symposium on Foundations of Computer Science, Oct 17-19, 2004. IEEE Com-

puter Society Press, Los Alamitos, CA, pp. 22–31.

Aoki, T., Takahashi, G., Kajiya, T., ichi Yoshikawa, J., Braunstein, S. L., van Loock, P.,

Furusawa, A., 2009. Quantum error correction beyond qubits. Nature Physics 5, 541.

Bahr, F., Boehm, M., Franke, J., Kleinjung, T., 2005. Factored RSA200 on 9th May 2005.

URL http://www.loria.fr/~zimmerma/records/factor.html

Bartlett, S., Sanders, B., Braunstein, S. L., Nemoto, K., 2002. Efficient classical simulation

of continuous variable quantum information processes. Phys. Rev. Lett. 88, 097904,

arXiv:quant-ph/0109047.

Braunstein, S. L., van Loock, P., 2005. Quantum information with continuous variables.

Rev. Mod. Phys. 77, 513–578, arXiv:quant-ph/0410100v1.

Brown, K. R., Clark, R. J., Chuang, I. L., 2006. Limitations of quantum simulation

examined by simulating a pairing hamiltonian using nuclear magnetic resonance.

Phys. Rev. Lett. 97, 050504, arXiv:quant-ph/0601021.

De Raedt, K., Michielsen, K., De Raedt, H., Trieu, B., Arnold, G., Richter, M., Lip-

pert, T., Watanabe, H., Ito, N., 2007. Massive parallel quantum computer simulator.

Comp. Phys. Comm. 176, 127–136, arXiv:quant-ph/0608239v1.

Deutsch, D., 1985. Quantum-theory, the Church-Turing principle and the universal quan-

tum computer. Proc. R. Soc. Lond. A 400 (1818), 97–117.

Deutsch, D., Jozsa, R., 1992. Rapid solutions of problems by quantum computation.

Proc. Roy. Soc. Lon. A 439, 553.

Devitt, S. J., Greentree, A. D., Ionicioiu, R., O’Brien, J. L., Munro, W. J., Hollenberg,

L. C. L., 2007. Photonic module: An on-demand resource for photonic entanglement.

Phys. Rev. A 76, 052312.

Devitt, S. J., Nemoto, K., Munro, W. J., 2009. The idiots guide to quantum error correc-

tion. arXiv:0905.2794v2 [quant-ph].

Farhi, E., Goldstone, J., Gutmann, S., Sipser, M., 2000. Quantum computation by adia-

batic evolution, arXiv:quant-ph/0001106.

Feynman, R. P., 1982. Simulating physics with computers. Int. J. Theor. Phys. 21, 467.

Graca, D. S., 2004. Some recent developments on Shannon’s GPAC. Math. Log. Quart.

50 (4–5), 473–485.

Grover, L. K., 1996. A fast quantum mechanical algorithm for database search. In:

Proc. 28th Annual ACM STOC. ACM, NY, p. 212, arXiv:quant-ph/9605043.

Harris, S., Kendon, V., 2010. Quantum-assisted biological modelling. Also submitted to

PTRS visions issue.

Kendon, V., 2006. A random walk approach to quantum algorithms.

Phil. Trans. Roy. Soc. A 364, 3407–3422.

Laflamme, R., Miquel, C., Paz, J. P., Zurek, W. H., 1996. Perfect quantum error correcting

code. Phys. Rev. Lett. 77, 198–201.

Lipshitz, L., Rubel, L. A., 1981. A differentially algebraic replacement theorem, and analog

computability. Proc. Amer. Math. Soc. 99, 367–372.

Lloyd, S., 1996. Universal quantum simulators. Science 273, 1073–1078.

Lloyd, S., Braunstein, S. L., 1999. Quantum computation over continuous variables.

Phys. Rev. Lett. 82, 1784, arXiv:quant-ph/9810082v1.

Mills, J. W., 2008. The nature of the extended analog computer. Physica D: Nonlinear

Phenomena 237 (9), 1235–1256.

Mills, J. W., 2009. Private communication.

Niset, J., Fiurasek, J., Cerf, N. J., 2009. A no-go theorem for Gaussian quantum error correction. Phys. Rev. Lett. 102, 120501.

Pour-El, M., 1978. Abstract computability and its relation to the general purpose analog

computer, etc. Trans. Amer. Math. Soc. 199, 1–28.

Rubel, L. A., 1981. A universal differential equation. Bull. Amer. Math. Soc. 4, 345–349.

Rubel, L. A., 1989. Digital simulation of analogue computation and Church’s thesis.

J. Symbolic Logic 54, 1011–1017.

Rubel, L. A., 1993. The extended analog computer. Adv. in Appl. Maths. 14 (1), 39–50.

Shannon, C., 1941. Mathematical theory of the differential analyzer.

J. Math. Phys. Mass. Inst. Tech. 20, 337–354.

Shor, P. W., 1995. Scheme for reducing decoherence in quantum computer memory.

Phys. Rev. A 52, R2493.

Shor, P. W., 1997. Polynomial-time algorithms for prime factorization and discrete logarithms on a quantum computer. SIAM J. Comput. 26, 1484.

Somaroo, S. S., Tseng, C. H., Havel, T. F., Laflamme, R., Cory, D. G., 1999. Quantum

simulations on a quantum computer. Phys. Rev. Lett. 82, 5381–5384.

Somma, R., Ortiz, G., Gubernatis, J. E., Knill, E., Laflamme, R., April 2002. Simulating

physical phenomena by quantum networks. Phys. Rev. A 65 (4), 042323.

Steane, A., 1996. Multiple particle interference and quantum error correction.

Proc. Roy. Soc. Lond. A 452, 2551, arXiv:quant-ph/9601029.

Suzuki, S., Yonezawa, H., Kannari, F., Sasaki, M., Furusawa, A., 2006. 7 dB quadrature

squeezing at 860 nm with periodically-poled KTiOPO4. Appl. Phys. Lett. 89, 061116.

Thomson, W., 1876. Mechanical integration of the general linear differential equation of any order with variable coefficients. Proc. Roy. Soc. 24, 271–275.

Verstraete, F., Porras, D., Cirac, J. I., 2004. Density matrix renormalization group and

periodic boundary conditions: A quantum information perspective. Phys. Rev. Lett. 93,

227205.

Wagner, R. C., Everitt, M. S., Jones, M. L., Kendon, V. M., 2010. Universal continuous

variable quantum computation in the micromaser. In preparation.

Wigner, E., 1960. The unreasonable effectiveness of mathematics in the natural sciences.

Communications on Pure and Applied Maths. 13 (1), 1–14.

Wu, L. A., Byrd, M. S., Lidar, D. A., July 2002. Polynomial-time simulation of pairing

models on a quantum computer. Phys. Rev. Lett. 89 (5), 057904.
