Chap 2 PDF

Uploaded by Manish Shrestha


Agents in Artificial Intelligence

Artificial intelligence can be defined as the study of rational agents. A rational agent could be anything that makes decisions, such as a person, a firm, a machine, or software. It carries out the action with the best expected outcome after considering past and current percepts (the agent’s perceptual inputs at a given instant).

An AI system is composed of an agent and its environment. Agents act in their environment, which may contain other agents. An agent is anything that can be viewed as:

perceiving its environment through sensors, and

acting upon that environment through actuators.

Note: Every agent can perceive its own actions (but not always their effects).

To understand the structure of intelligent agents, we should be familiar with the architecture and the agent program. The architecture is the machinery that the agent executes on: a device with sensors and actuators, for example a robotic car, a camera, or a PC. The agent program is an implementation of the agent function. The agent function is a mapping from the percept sequence (the history of everything the agent has perceived to date) to an action.

Agent = Architecture + Agent Program
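To make the difference between the agent function and the agent program concrete, here is a minimal Python sketch, assuming a hypothetical two-square vacuum world (squares A and B) with percepts of the form (location, status). The table plays the role of the agent function, `make_table_driven_agent` returns an agent program that implements it, and whatever code feeds in percepts stands in for the architecture. All names and table entries are illustrative, not taken from the text.

```python
from typing import Callable, Dict, List, Tuple

Percept = Tuple[str, str]  # (location, status), e.g. ("A", "Dirty")
Action = str


def make_table_driven_agent(
    table: Dict[Tuple[Percept, ...], Action]
) -> Callable[[Percept], Action]:
    """Return an agent program that implements an agent function given as a table.

    The table maps a complete percept sequence (a tuple of percepts) to an action.
    """
    percepts: List[Percept] = []  # percept sequence perceived so far

    def program(percept: Percept) -> Action:
        percepts.append(percept)
        # Look up the whole percept history; act with "NoOp" if no entry exists.
        return table.get(tuple(percepts), "NoOp")

    return program


# Hypothetical agent function for a two-square vacuum world.
TABLE: Dict[Tuple[Percept, ...], Action] = {
    (("A", "Clean"),): "Right",
    (("A", "Dirty"),): "Suck",
    (("A", "Dirty"), ("A", "Clean")): "Right",
}

agent = make_table_driven_agent(TABLE)
print(agent(("A", "Dirty")))  # Suck
print(agent(("A", "Clean")))  # Right
```

A table indexed by the full percept sequence grows very quickly with the length of the history, which is why practical agent programs compute their actions instead of looking them up.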

Rationality

Rationality is the state of being reasonable, sensible, and having a good sense of judgment.

Rationality is concerned with choosing actions whose expected results are best, given what the agent has perceived. Performing actions with the aim of obtaining useful information is an important part of rationality.

What is an Ideal Rational Agent?

An ideal rational agent is one that is capable of taking the actions expected to maximize its performance measure, on the basis of:

its percept sequence, and

its built-in knowledge base.

The rationality of an agent depends on the following:

The performance measure, which determines the degree of success.

The agent’s percept sequence so far.

The agent’s prior knowledge about the environment.

The actions that the agent can carry out.

A rational agent always performs the right action, where the right action is the one expected to make the agent most successful given its percept sequence. The problem the agent solves is characterized by its Performance measure, Environment, Actuators, and Sensors (PEAS).

Examples of Agents

A software agent has keystrokes, file contents, and received network packets acting as sensors, and screen displays, files, and sent network packets acting as actuators.

A human agent has eyes, ears, and other organs acting as sensors, and hands, legs, mouth, and other body parts acting as actuators.

A robotic agent has cameras and infrared range finders acting as sensors, and various motors acting as actuators.

TYPES OF AGENTS

Simple Reflex Agents

They choose actions based only on the current percept.

They are rational only if the correct decision can be made on the basis of the current percept alone.

They therefore work reliably only when the environment is fully observable.

Condition-Action Rule: a rule that maps a state (condition) to an action.
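A simple reflex agent can be sketched as an ordered list of condition-action rules applied to the current percept. This minimal Python version assumes the same hypothetical two-square vacuum world (percepts are (location, status) pairs); the rule set and function name are illustrative.

```python
from typing import Callable, List, Tuple

Percept = Tuple[str, str]  # (location, status), e.g. ("A", "Dirty")
Rule = Tuple[Callable[[Percept], bool], str]  # (condition, action)

# Hypothetical condition-action rules for a two-square vacuum world,
# checked in order: the first rule whose condition matches fires.
RULES: List[Rule] = [
    (lambda p: p[1] == "Dirty", "Suck"),
    (lambda p: p[0] == "A", "Right"),
    (lambda p: p[0] == "B", "Left"),
]


def simple_reflex_agent(percept: Percept) -> str:
    """Pick an action from the current percept only; no percept history is kept."""
    for condition, action in RULES:
        if condition(percept):
            return action
    return "NoOp"  # no rule matched


print(simple_reflex_agent(("A", "Dirty")))  # Suck
print(simple_reflex_agent(("B", "Clean")))  # Left
```

Because the agent has no memory, once both squares are clean it keeps moving back and forth between them: it cannot tell that it has already cleaned everything.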

Model Based Reflex Agents

They use a model of the world to choose their actions, and they maintain an internal state.

Model: knowledge about “how things happen in the world”.

Internal State: a representation of the unobserved aspects of the current state, based on the percept history.

Updating the state requires information about:

how the world evolves, and

how the agent’s actions affect the world.
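The two kinds of knowledge listed above can be seen in a small model-based version of the vacuum agent. This is a sketch under stated assumptions: the world is the hypothetical two-square vacuum world, dirt is assumed never to reappear (the model of how the world evolves), and "Suck" is assumed to leave the current square clean (the model of how actions affect the world). The class name is illustrative.

```python
from typing import Dict, Optional, Tuple

Percept = Tuple[str, str]  # (location, status), e.g. ("A", "Dirty")


class ModelBasedVacuumAgent:
    """Reflex agent with an internal state: its belief about every square's status."""

    def __init__(self) -> None:
        # Internal state: last known status of each square (None = not yet observed).
        self.state: Dict[str, Optional[str]] = {"A": None, "B": None}
        self.last_action: Optional[str] = None
        self.last_location: Optional[str] = None

    def update_state(self, percept: Percept) -> None:
        location, status = percept
        # How the agent's actions affect the world (assumed model):
        # "Suck" leaves the square it was performed on clean.
        if self.last_action == "Suck" and self.last_location is not None:
            self.state[self.last_location] = "Clean"
        # How the world evolves (assumed model): dirt does not reappear, so squares
        # that are not currently visible keep their last known status.
        # The current percept overrides any belief about the visible square.
        self.state[location] = status
        self.last_location = location

    def __call__(self, percept: Percept) -> str:
        self.update_state(percept)
        location, status = percept
        if status == "Dirty":
            action = "Suck"
        elif all(s == "Clean" for s in self.state.values()):
            action = "NoOp"  # internal state says everything is clean: stop moving
        elif location == "A":
            action = "Right"
        else:
            action = "Left"
        self.last_action = action
        return action


agent = ModelBasedVacuumAgent()
for percept in [("A", "Dirty"), ("A", "Clean"), ("B", "Clean")]:
    print(agent(percept))  # Suck, then Right, then NoOp
```

Unlike the simple reflex agent, this one stops once its internal state records both squares as clean.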

Goal Based Agents

They choose their actions in order to achieve goals. The goal-based approach is more flexible than a reflex agent because the knowledge supporting a decision is explicitly modeled, which allows it to be modified.

Goal: a description of desirable situations.
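A goal-based agent needs some way to find actions that lead to a goal. The sketch below assumes breadth-first search over a hypothetical road map; search is one common choice, not something the text prescribes, and `plan_to_goal` and `ROADS` are illustrative names. The goal is passed in as a predicate, so changing the goal changes the plan without touching the rest of the program, which is the flexibility described above.

```python
from collections import deque
from typing import Callable, Deque, Dict, List, Optional, Tuple

State = str
Action = str


def plan_to_goal(
    start: State,
    is_goal: Callable[[State], bool],
    successors: Callable[[State], List[Tuple[Action, State]]],
) -> Optional[List[Action]]:
    """Breadth-first search for a shortest action sequence that reaches a goal state."""
    frontier: Deque[State] = deque([start])
    # parent[s] = (previous state, action taken); the start state has no parent.
    parent: Dict[State, Optional[Tuple[State, Action]]] = {start: None}
    while frontier:
        state = frontier.popleft()
        if is_goal(state):
            plan: List[Action] = []
            step = parent[state]
            while step is not None:
                prev, action = step
                plan.append(action)
                step = parent[prev]
            return plan[::-1]  # actions were collected from goal back to start
        for action, nxt in successors(state):
            if nxt not in parent:
                parent[nxt] = (state, action)
                frontier.append(nxt)
    return None  # goal unreachable


# Hypothetical road map: state -> list of (action, next state).
ROADS: Dict[State, List[Tuple[Action, State]]] = {
    "Home": [("drive", "Junction")],
    "Junction": [("turn_left", "School"), ("turn_right", "Office")],
    "School": [],
    "Office": [],
}

print(plan_to_goal("Home", lambda s: s == "Office", lambda s: ROADS[s]))  # ['drive', 'turn_right']
print(plan_to_goal("Home", lambda s: s == "School", lambda s: ROADS[s]))  # ['drive', 'turn_left']
```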

Utility Based Agents

They choose actions based on a preference (utility) for each state.

Goals are inadequate when:

there are conflicting goals, of which only a few can be achieved; or

goals have some uncertainty of being achieved, and the agent needs to weigh the likelihood of success against the importance of each goal.
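Weighing likelihood of success against importance is usually done with expected utility: multiply each outcome's utility by its probability and sum. A minimal sketch follows; the route names, probabilities, and utilities are hypothetical numbers chosen only to show the calculation.

```python
from typing import Dict, List, Tuple

Outcomes = List[Tuple[float, float]]  # (probability, utility) pairs for one action


def expected_utility(outcomes: Outcomes) -> float:
    """Weigh each outcome's utility by its likelihood and sum the results."""
    return sum(p * u for p, u in outcomes)


def utility_based_choice(options: Dict[str, Outcomes]) -> str:
    """Return the action with the highest expected utility."""
    return max(options, key=lambda action: expected_utility(options[action]))


# Hypothetical numbers: the fast route is better when it works,
# but it fails half the time.
OPTIONS: Dict[str, Outcomes] = {
    "fast_route": [(0.5, 10.0), (0.5, -6.0)],  # expected utility 2.0
    "safe_route": [(1.0, 3.0)],                # expected utility 3.0
}
print(utility_based_choice(OPTIONS))  # safe_route
```

A purely goal-based agent with the goal "arrive" could not distinguish these options, since both can achieve it; the utilities capture how good each outcome is and the probabilities capture how likely it is.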

Types of Environment in AI

We can classify environments to predict how difficult the AI task will be.

Fully Observable

An environment is fully observable when the agent can determine the complete state of the environment each time it needs to make a decision. For example, a game of checkers can be classed as fully observable, because the agent can observe the full state of the game (how many pieces the opponent has, how many pieces we have, etc.).

Partially Observable

In contrast to fully observable environments, here agents may need a memory of past observations and decisions to make the best choice within their environment. An example is a poker game: the agent does not know which cards the opponent holds and has to make the best decision based on the cards the opponent has played.
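In a partially observable game such as poker, the agent's memory is the only way to use information it saw earlier. A minimal sketch, assuming a standard 52-card deck and that hidden cards are dealt uniformly at random from the cards the agent has not seen; the rules of any particular poker variant are ignored, and `CardMemory` and its methods are hypothetical names.

```python
from typing import Iterable, Set

RANKS = "23456789TJQKA"
SUITS = "cdhs"
FULL_DECK: Set[str] = {rank + suit for rank in RANKS for suit in SUITS}  # 52 cards


class CardMemory:
    """Tracks every card the agent has seen; hidden cards are never observed directly."""

    def __init__(self, my_hand: Iterable[str]) -> None:
        self.seen: Set[str] = set(my_hand)

    def observe_played(self, cards: Iterable[str]) -> None:
        # Remember cards the opponent reveals, so later decisions can use them.
        self.seen.update(cards)

    def unseen(self) -> Set[str]:
        # Cards that could still be in the opponent's hand or in the deck.
        return FULL_DECK - self.seen

    def chance_opponent_holds(self, card: str, hidden_cards: int) -> float:
        # Assumes the opponent's hidden cards were dealt uniformly at random
        # from the cards this agent has not seen.
        if card in self.seen:
            return 0.0
        return hidden_cards / len(self.unseen())


memory = CardMemory(["Ah", "Kd"])
memory.observe_played(["2c", "7s"])
print(len(memory.unseen()))  # 48
```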

Deterministic

In deterministic environments, the agent’s actions uniquely determine the outcome. For example, if we move a pawn from A2 to A3 while playing chess, the move always works; there is no uncertainty in its outcome.

Stochastic

Unlike deterministic environments, stochastic environments involve a certain amount of randomness. In our poker example, when a card is dealt, which card will be drawn is a matter of chance.
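One way to make the difference concrete is to describe each action by the probability distribution over its outcomes: a deterministic action has a single outcome with probability 1, while a stochastic one spreads probability over several. The sketch below assumes a simplified pawn move (one square forward for White, with no legality checks) and a deal from a hypothetical three-card remainder of a deck; the function names are illustrative.

```python
from fractions import Fraction
from typing import Dict, List


def pawn_move_outcomes(square: str) -> Dict[str, Fraction]:
    """Deterministic: moving a pawn one square forward has exactly one outcome."""
    file, rank = square[0], int(square[1:])
    return {f"{file}{rank + 1}": Fraction(1)}


def deal_outcomes(remaining_deck: List[str]) -> Dict[str, Fraction]:
    """Stochastic: dealing a card can produce any remaining card with equal chance."""
    n = len(remaining_deck)
    return {card: Fraction(1, n) for card in remaining_deck}


def is_deterministic(outcomes: Dict[str, Fraction]) -> bool:
    """An action is deterministic when a single outcome has probability 1."""
    return len(outcomes) == 1 and next(iter(outcomes.values())) == 1


print(pawn_move_outcomes("A2"))  # {'A3': Fraction(1, 1)}
print(is_deterministic(deal_outcomes(["Ah", "Kd", "7s"])))  # False
```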

Discrete

In discrete environments, there is a finite number of action choices and a finite number of things the agent can sense. Using our checkers example again, there is a finite number of board positions and a finite number of things we can do within the checkers environment.

Continuous

In continuous environments, the quantities the agent senses or acts on can take any value within a continuous range rather than one of a finite set. To apply this to a medical context, a patient’s temperature and blood pressure are continuous variables, and they can be sensed by medical agents designed to capture vital signs from patients and then recommend diagnostic action to healthcare professionals.

Benign

In benign environments, the environment has no objective of its own that would contradict yours. For example, rain might ruin your plans to play cricket (great game, I promise), but it doesn’t rain because Thor (God of Thunder) doesn’t want you to play cricket; it rains because of factors unrelated to your objective.

Adversarial

Adversarial environments, on the other hand, are out to get you. This is commonplace in games: in video games, bosses and enemies are out to destroy your plans of getting that high score, and in chess, an AI opponent would be out to checkmate you.
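The text notes that environments can be classified to predict how difficult a task will be. A minimal sketch of that idea records each dimension as a boolean; the `EnvironmentProfile` class, its field names, and the notion of listing "hard" properties are assumptions for illustration. Checkers and poker are classified as in the examples above, and since both are two-player games, both are marked adversarial.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class EnvironmentProfile:
    """Classification of a task environment along the dimensions described above."""
    name: str
    fully_observable: bool
    deterministic: bool
    discrete: bool
    adversarial: bool

    def hard_properties(self) -> List[str]:
        """List the properties that tend to make the AI task harder."""
        flags: List[str] = []
        if not self.fully_observable:
            flags.append("partially observable")
        if not self.deterministic:
            flags.append("stochastic")
        if not self.discrete:
            flags.append("continuous")
        if self.adversarial:
            flags.append("adversarial")
        return flags


CHECKERS = EnvironmentProfile("checkers", fully_observable=True, deterministic=True,
                              discrete=True, adversarial=True)
POKER = EnvironmentProfile("poker", fully_observable=False, deterministic=False,
                           discrete=True, adversarial=True)

print(CHECKERS.hard_properties())  # ['adversarial']
print(POKER.hard_properties())     # ['partially observable', 'stochastic', 'adversarial']
```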

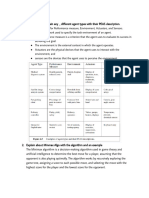

PEAS

Beyond the agent types described above, many agents are being designed and built today; they differ from each other in some aspects and have other aspects in common. To group similar agents together, a system known as PEAS was developed.

PEAS stands for Performance, Environment, Actuators, and Sensors. Agents can be grouped together or differentiated from each other based on these properties. Each agent has the following properties defined for it.

Performance:

The measure of how well the agent does its job: the criteria used to judge all the results that the agent produces after processing.

Environment:

All the surroundings and conditions of an agent fall in this category; it consists of everything within which the agent works.

Actuators:

The devices, hardware or software, through which the agent performs actions on its environment to produce a result are the actuators of the agent.

Sensors:

The devices through which the agent observes and perceives its environment are the sensors of the agent.

EXAMPLE:

Let us take the example of a self-driving car. As the name suggests, it is a car that drives on its own, taking all the necessary decisions while driving without any help from the user (customer); in other words, it requires no driver. The PEAS description for this agent is as follows:

Performance: The performance factors for a self-driving car are speed, safety while driving (of both the car and the user), the time taken to drive to a particular location, the comfort of the user, etc.

Environment: The road on which the car is being driven, other cars on the road, pedestrians, crossings, road signs, traffic signals, etc., all act as its environment.

Actuators: All the devices through which the car is controlled are its actuators, for example the steering, accelerator, brakes, horn, music system, etc.

Sensors: All the devices through which the car gets an estimate of its surroundings and draws perceptions from them are its sensors, for example the camera, speedometer, GPS, odometer, sonar, etc.
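A PEAS description is essentially a record with four fields, so it maps naturally onto a data class. The sketch below, using a hypothetical `PEAS` class and `shared` helper, encodes the self-driving car example and compares it with the robotic agent from the examples of agents, for which only sensors and actuators are given. Comparing fields this way is one simple interpretation of grouping or differentiating agents by their PEAS properties.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PEAS:
    """PEAS description of an agent: Performance, Environment, Actuators, Sensors."""
    agent: str
    performance: List[str] = field(default_factory=list)
    environment: List[str] = field(default_factory=list)
    actuators: List[str] = field(default_factory=list)
    sensors: List[str] = field(default_factory=list)


def shared(a: PEAS, b: PEAS, prop: str) -> List[str]:
    """Entries two agents have in common for one PEAS property (e.g. "sensors")."""
    return sorted(set(getattr(a, prop)) & set(getattr(b, prop)))


self_driving_car = PEAS(
    agent="self-driving car",
    performance=["speed", "safety", "travel time", "comfort"],
    environment=["road", "other cars", "pedestrians", "crossings",
                 "road signs", "traffic signals"],
    actuators=["steering", "accelerator", "brakes", "horn", "music system"],
    sensors=["camera", "speedometer", "GPS", "odometer", "sonar"],
)
# Only actuators and sensors are given in the text for the robotic agent.
robot = PEAS(agent="robotic agent", actuators=["motors"],
             sensors=["camera", "infrared range finder"])

print(shared(self_driving_car, robot, "sensors"))  # ['camera']
```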
