
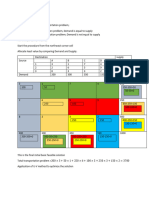

Gaussian Mixture Model by Using EM

Shubing Wang∗

April 17, 2004

1. 2-component Gaussian Mixture Model. We consider the case in which all five parameters

$$\Theta = (p, \mu_1, \sigma_1, \mu_2, \sigma_2)^T$$

are unknown. Using the EM algorithm:

• Estimation Step: define $\tilde p_i = P(Y_i \sim f_1 \mid \Theta)$; then

$$\tilde p_i = \frac{p\, f_1(Y_i \mid \Theta)}{p\, f_1(Y_i \mid \Theta) + (1-p)\, f_2(Y_i \mid \Theta)}.$$

So

$$Q(\Theta, \Theta') = \sum_i \Big\{ \tilde p_i \Big[\log p' - \log\sqrt{2\pi} - \log\sigma_1' - \frac{(Y_i - \mu_1')^2}{2\sigma_1'^2}\Big] + (1-\tilde p_i)\Big[\log(1-p') - \log\sqrt{2\pi} - \log\sigma_2' - \frac{(Y_i - \mu_2')^2}{2\sigma_2'^2}\Big] \Big\}.$$

• Maximization Step:

$$p' = \frac{\sum_i \tilde p_i}{n}, \qquad \mu_1' = \frac{\sum_i \tilde p_i Y_i}{\sum_i \tilde p_i}, \qquad \sigma_1' = \sqrt{\frac{\sum_i \tilde p_i (Y_i - \mu_1')^2}{\sum_i \tilde p_i}},$$

$$\mu_2' = \frac{\sum_i (1-\tilde p_i) Y_i}{\sum_i (1-\tilde p_i)}, \qquad \sigma_2' = \sqrt{\frac{\sum_i (1-\tilde p_i)(Y_i - \mu_2')^2}{\sum_i (1-\tilde p_i)}}.$$

[Figure 1: Left: mid-sagittal brain image (panel title: "The MRI image of bone slice"). Right: histogram of image intensity (panel title: "The histogram of the reduced intensity list").]

[Figure 2: Comparison of the original data and the fitted Gaussian Mixture Model.]
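The two steps above can be sketched in plain Python as follows. This is a minimal illustration, assuming f1 and f2 are normal densities, a user-supplied initial guess, and a fixed iteration count in place of a convergence test; the function names are hypothetical and not taken from the note.

```python
import math
from typing import List, Tuple


def normal_pdf(y: float, mu: float, sigma: float) -> float:
    """Density of N(mu, sigma^2) evaluated at y."""
    z = (y - mu) / sigma
    return math.exp(-0.5 * z * z) / (math.sqrt(2.0 * math.pi) * sigma)


def em_two_component(
    ys: List[float],
    p: float, mu1: float, sigma1: float, mu2: float, sigma2: float,
    n_iter: int = 100,
) -> Tuple[float, float, float, float, float]:
    """Run n_iter EM iterations from the initial guess.

    Returns the updated (p, mu1, sigma1, mu2, sigma2).
    """
    n = len(ys)
    for _ in range(n_iter):
        # Estimation step: pt[i] = P(Y_i ~ f1 | current parameters)
        pt = []
        for y in ys:
            a = p * normal_pdf(y, mu1, sigma1)
            b = (1.0 - p) * normal_pdf(y, mu2, sigma2)
            # If both densities underflow to 0, split the point evenly.
            pt.append(a / (a + b) if a + b > 0.0 else 0.5)
        # Maximization step: closed-form maximizers of Q
        w1 = sum(pt)   # sum_i pt_i
        w2 = n - w1    # sum_i (1 - pt_i)
        p = w1 / n
        mu1 = sum(w * y for w, y in zip(pt, ys)) / w1
        mu2 = sum((1.0 - w) * y for w, y in zip(pt, ys)) / w2
        # The variance updates use the freshly updated means, as in the formulas.
        sigma1 = math.sqrt(sum(w * (y - mu1) ** 2 for w, y in zip(pt, ys)) / w1)
        sigma2 = math.sqrt(sum((1.0 - w) * (y - mu2) ** 2 for w, y in zip(pt, ys)) / w2)
    return p, mu1, sigma1, mu2, sigma2
```

For example, on ys = [-1.0, -0.5, 0.0, 0.5, 1.0, 9.0, 9.5, 10.0, 10.5, 11.0] with the initial guess p = 0.5, µ1 = 1, σ1 = 1, µ2 = 8, σ2 = 1, the iteration settles at p ≈ 0.5, µ1 ≈ 0, µ2 ≈ 10 and σ1 ≈ σ2 ≈ 0.707. Nothing here stops a component's σ from collapsing toward 0 if it captures a single point, so a practical version would add a floor on σ.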

2. M-component Gaussian Mixture Model. It can be derived similarly by using EM, with the Maximization step:

$$p_j' = \frac{\sum_i \tilde p_{ji}}{n}, \quad 1 \le j \le M,$$

$$\mu_j' = \frac{\sum_i \tilde p_{ji} Y_i}{\sum_i \tilde p_{ji}}, \qquad \sigma_j' = \sqrt{\frac{\sum_i \tilde p_{ji} (Y_i - \mu_j')^2}{\sum_i \tilde p_{ji}}}, \quad 1 \le j < M,$$

$$\mu_M' = \frac{\sum_i \big(1 - \sum_{j=1}^{M-1} \tilde p_{ji}\big) Y_i}{\sum_i \big(1 - \sum_{j=1}^{M-1} \tilde p_{ji}\big)}, \qquad \sigma_M' = \sqrt{\frac{\sum_i \big(1 - \sum_{j=1}^{M-1} \tilde p_{ji}\big)(Y_i - \mu_M')^2}{\sum_i \big(1 - \sum_{j=1}^{M-1} \tilde p_{ji}\big)}},$$

where $\tilde p_{ji} = P(Y_i \sim f_j \mid \Theta) = p_j f_j(Y_i \mid \Theta) / \sum_{k=1}^{M} p_k f_k(Y_i \mid \Theta)$ is computed in the Estimation step, as in the 2-component case.

3. Segmentation by Using Gaussian Mixture Model. We start with the data shown in Figure 1. We should always compute the histogram as well, since the initial guess is crucial when running the EM algorithm: a good initial guess clearly improves the performance of the program, and the histogram provides clues for a good initial guess. One obtains the 2-component Gaussian Mixture Model shown in Figure 2; the resulting segmentation is shown in Figure 3.

Intuitively, a 3-component Gaussian Mixture Model will give a better result. Figure 4 and Figure 5 show the result of segmentation using the 3-component Gaussian Mixture Model.
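The M-component updates can be sketched the same way. The note writes out only the Maximization step; this sketch assumes the standard Estimation step p̃_ji = p_j f_j(Y_i | Θ) / Σ_k p_k f_k(Y_i | Θ) with normal f_j, and it computes component M's weights directly as p̃_Mi, which equals 1 − Σ_{j<M} p̃_ji, so the updates coincide with the formulas above. The stopping rule (log-likelihood gain below `tol`) and all names are illustrative assumptions.

```python
import math
from typing import List, Tuple


def normal_pdf(y: float, mu: float, sigma: float) -> float:
    """Density of N(mu, sigma^2) evaluated at y."""
    z = (y - mu) / sigma
    return math.exp(-0.5 * z * z) / (math.sqrt(2.0 * math.pi) * sigma)


def em_m_component(
    ys: List[float],
    ps: List[float], mus: List[float], sigmas: List[float],
    max_iter: int = 500, tol: float = 1e-10,
) -> Tuple[List[float], List[float], List[float]]:
    """EM for a 1-D M-component Gaussian mixture started from (ps, mus, sigmas).

    Stops when the log-likelihood improves by less than tol.
    """
    n, m = len(ys), len(ps)
    ps, mus, sigmas = list(ps), list(mus), list(sigmas)
    prev_ll = -math.inf
    for _ in range(max_iter):
        # Estimation step: post[i][j] = p_j f_j(Y_i) / sum_k p_k f_k(Y_i)
        post = []
        ll = 0.0
        for y in ys:
            dens = [ps[j] * normal_pdf(y, mus[j], sigmas[j]) for j in range(m)]
            total = sum(dens)
            ll += math.log(total)  # log-likelihood under the current parameters
            post.append([d / total for d in dens])
        # Maximization step: weighted proportion, mean and standard deviation
        for j in range(m):
            w = [post[i][j] for i in range(n)]
            sw = sum(w)
            ps[j] = sw / n
            mus[j] = sum(wi * y for wi, y in zip(w, ys)) / sw
            sigmas[j] = math.sqrt(sum(wi * (y - mus[j]) ** 2 for wi, y in zip(w, ys)) / sw)
        if ll - prev_ll < tol:
            break
        prev_ll = ll
    return ps, mus, sigmas
```

With three well-separated clusters around 0, 10 and 20 and an initial guess of means 1, 8 and 22, the estimates settle near those centres with equal proportions of 1/3. A poor initial guess (for instance, two means inside the same cluster) can instead converge to a worse local maximum, which is why Section 3 stresses the initial guess.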

∗ Email: wang6@wisc.edu

[Figure 3: The two components of the 2-component Gaussian Mixture Model.]

[Figure 4: The fitted 3-component Gaussian Mixture Model (panel title: "The Gaussian Mixture Model and the Original Data").]

[Figure 5: The 3 segments of the 3-component Gaussian Mixture Model.]
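The note does not state how the initial guess is read off the histogram or how the segment images are produced (their colour scale runs from 0 to 1, which suggests per-pixel posterior probabilities). The sketch below assumes two common choices: initial means taken at the tallest local maxima of an intensity histogram (256 bins over [0, 256), i.e. 8-bit intensities), and hard segmentation that assigns each pixel to the component with the largest posterior probability. The helper names `histogram_peaks`, `posteriors` and `segment` are hypothetical.

```python
import math
from typing import List, Sequence


def normal_pdf(y: float, mu: float, sigma: float) -> float:
    """Density of N(mu, sigma^2) evaluated at y."""
    z = (y - mu) / sigma
    return math.exp(-0.5 * z * z) / (math.sqrt(2.0 * math.pi) * sigma)


def histogram_peaks(values: Sequence[float], m: int, n_bins: int = 256,
                    lo: float = 0.0, hi: float = 256.0) -> List[float]:
    """Hypothetical initial-guess heuristic: centres of the m tallest histogram peaks.

    Values outside [lo, hi) are ignored; fewer than m centres are returned
    if the histogram has fewer than m local maxima.
    """
    width = (hi - lo) / n_bins
    counts = [0] * n_bins
    for v in values:
        k = math.floor((v - lo) / width)
        if 0 <= k < n_bins:
            counts[k] += 1
    # A bin is a peak if it is non-empty, >= its left neighbour and > its right one.
    peaks = [
        k for k in range(n_bins)
        if counts[k] > 0
        and (k == 0 or counts[k] >= counts[k - 1])
        and (k == n_bins - 1 or counts[k] > counts[k + 1])
    ]
    peaks.sort(key=lambda k: counts[k], reverse=True)
    return sorted(lo + (k + 0.5) * width for k in peaks[:m])


def posteriors(y: float, ps: Sequence[float], mus: Sequence[float],
               sigmas: Sequence[float]) -> List[float]:
    """P(y ~ f_j | Theta) for every component j (uniform if all densities underflow)."""
    dens = [p * normal_pdf(y, mu, s) for p, mu, s in zip(ps, mus, sigmas)]
    total = sum(dens)
    if total == 0.0:
        return [1.0 / len(dens)] * len(dens)
    return [d / total for d in dens]


def segment(image: List[List[float]], ps: Sequence[float],
            mus: Sequence[float], sigmas: Sequence[float]) -> List[List[int]]:
    """Label each pixel with the index of its most probable component."""
    labels = []
    for row in image:
        out = []
        for y in row:
            post = posteriors(y, ps, mus, sigmas)
            out.append(max(range(len(post)), key=post.__getitem__))
        labels.append(out)
    return labels
```

In this sketch the peak centres would seed the means of an EM run, and the fitted parameters would then be passed to `segment`. A soft map for a single component j, possibly closer to the 0 to 1 panels of Figures 3 and 5, is `[[posteriors(y, ps, mus, sigmas)[j] for y in row] for row in image]`.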
