Proceedings of the 32nd Hawaii International Conference on System Sciences - 1999

A Hierarchical Analysis Approach for High Performance Computing and Communication Applications

Salim Hariri
ATM HPDC Laboratory, ECE Department, University of Arizona
Hariri@ece.arizona.edu

Pramod Varshney, Luying Zhou, Haibo Xu, Shihab Ghaya
Dept. of Electrical Engineering and Computer Science, Syracuse University, Syracuse, NY 13244
{varshney,lzhou,haibo,ghaya}@cat.syr.edu

Abstract

The design, analysis and development of parallel and distributed applications on High Performance Computing and Communication (HPCC) systems are still very challenging tasks. Therefore, there is a great need for an integrated multilevel analysis methodology to assist in designing and analyzing the performance of both existing and proposed new HPCC systems. Currently, there are no comprehensive analysis methods that address such diverse needs. This paper presents a three-level hierarchical modeling approach for analyzing the end-to-end performance of an application running on an HPCC system. The overall system is partitioned into application level, protocol level and network level. Functions at each level are modeled using queueing networks. This approach enables the designer to study system performance for different types of networks and protocols and different design strategies. In this paper we use video-on-demand as an application example to show how our approach can be used to analyze the performance of such an application.

1. Introduction

The evolution and proliferation of heterogeneous computing systems such as workstations, high performance servers, specialized parallel computers, and supercomputers, interconnected by high-speed communication networks, have led to an increased interest in studying High Performance Computing and Communication (HPCC) systems and the parallel and distributed applications running on HPCC resources. There has also been an increased interest in developing computer-aided engineering tools to assist in the modeling, analysis, and design of HPCC systems [1-4].

The three general approaches used for network modeling, analysis, and design are measurement techniques (monitoring), analytical techniques, and simulation techniques. We concentrate on analytical techniques in this paper. Analytical techniques use mathematical models to represent the functions of different layers of an HPCC system. Modeling includes queueing network models, Markov chains, and stochastic Petri nets. Kurose [7] provides a detailed overview of tools developed by 1988. Also, there are several existing network modeling and simulation tools such as QNA [1], Netmod [2], Netmodeler [3], OPNET, COMNET and a few others. Most of the tools do not address end-to-end performance analysis that takes into account the effect of application, protocol, and network on the performance. Queueing network models concerning standard data and communication networks can be found in numerous references [8-11]. However, there are no comprehensive queueing network models that are tractable and efficient for analyzing HPCC systems and their applications.

The main objective of the research presented in this paper is to remedy this problem and to develop an efficient hierarchical approach to analyze the performance of HPCC systems and their applications. In this paper we present a generic three-level hierarchical approach for analyzing the end-to-end performance of an application running on an HPCC system. The overall system is partitioned into application level, protocol level and network level. Functions at each level are modeled using queueing networks. Norton equivalence for queueing networks [13] and equivalent-queue representations of complex sections of a network are employed to simplify the analysis process. This approach enables the designer to study system performance for different types of networks and protocols and different design strategies. In this paper we use video-on-demand as an application example to show how our analysis approach can be used for the performance analysis of an HPCC system and its applications. This paper is organized as follows: Section 2 describes the three-level hierarchical approach and the performance evaluation methodology. Section 3 presents a video-on-demand case study, and Section 5 is the conclusion.

0-7695-0001-3/99 $10.00 (c) 1999 IEEE 1

2. Hierarchical Modeling of HPCC Systems

Our analysis approach is based on decomposing the overall system environment into three levels and subsequently modeling each level in isolation, while the contributions of the other levels are captured using their equivalent queueing models. Applying this decomposition makes the analysis more efficient: it is possible to omit all the unnecessary details while concentrating on accurately modeling all the essential functions associated with the desired level of analysis.

When product form solutions exist and local balance is satisfied, one can employ Norton equivalence [13] in the analysis of queueing networks. We use Norton equivalence to single out a particular level, or even a subsection of it, from the overall end-to-end queueing network for analysis and replace the remainder of the system with its equivalent simplified contribution, either in the form of a composite Poisson source or a composite queue. Consider the analysis of the protocol level distributed at both ends of the communication channel. For this, we first perform a detailed analysis of the network level and obtain the delay faced by a traffic unit that traverses the network (for example, a message packet). Next, we approximate the network with an equivalent queueing node; this node has its service time equal to the delay obtained from the network analysis mentioned above, so its service rate is the reciprocal of that delay. This approximation of the network's contribution helps us to concentrate on the details of the protocol level without the complications of the network level. Now, we can isolate the protocol level (with a connecting network node) for detailed study, replacing the contribution of the application layer with a composite Poisson source that supplies the traffic in terms of packets. We can extend this method to further isolate and concentrate on a portion of the protocol level, for instance flow control.

The advantages of this hierarchical analysis methodology are manifold. First and foremost is the simplification in analysis brought forth by decomposition. Isolating a level and concentrating on its details provides for a thorough analysis. Alternate functional implementations can be tried out within the smaller unit and what-if scenarios can be explored. This gives a quick picture of variations in the system performance for different design strategies. System parameters of interest are now available at a finer level and their cumulative contribution provides the end-to-end parameters; this can also help to identify potential bottlenecks. Queueing networks provide a uniform framework to represent all levels of the system abstraction. Finally, this methodology provides a stepwise and fairly accurate framework to develop a performance tool that can assist in the design and analysis of HPCC systems and their applications.

2.1 Network Level Analysis

The main objective of the analysis at this level is to identify the parameters and performance issues of interest at the network level. At this level, one is interested in determining the packet transfer time through the network, the network utilization, and what-if scenarios when different network technologies are used (e.g., ATM, Gigabit Ethernet). In what follows, we describe the performance issues associated with network level analysis.

2.1.1 Packet Transfer Time.

The packet transfer time through the network represents the delay imposed by the network on system response time. For example, in a switch-based network, packet transfer time can be evaluated in terms of four components: transmission delay, propagation delay, switch delay and queueing delay.

The first three components are deterministic, while the last one is stochastic in nature. A lot of work on this aspect of network analysis has been reported in the literature [13-16]. We adapt these results to our hierarchical analysis approach and use them when appropriate to analyze the queueing models.

2.1.2 Network Utilization.

The possibility of full utilization in the network under a given traffic load is an important criterion in network design. For varying traffic patterns, we use the network level analysis to determine the corresponding utilization factor for alternate network designs. This type of analysis describes the load handling capability of the network. The network utilization analysis together with cost-performance considerations can influence the network design choice. In the video-on-demand example, we demonstrate this type of analysis under different traffic intensities (number of customers).

2.1.3 Equivalent Network-Level Queuing Model.

To illustrate the concept of equivalent nodes, we use the distributed system example shown in Figure 1. A set of servers and client machines are connected together by three networks. Server S is connected to both an Ethernet and an ATM switch, and Client C is connected to an FDDI network. Suppose we need to analyze the performance between Server S and Client C, as in a database access or video file transfer. To simplify the analysis, we apply Norton's theorem to obtain equivalent queueing nodes.

This can be achieved by first approximating each network by a queueing node, such that the service time of

each queue is set equal to the packet transfer time through the network considering appropriate background network load [17]. The resulting approximate queueing network connecting Server S and Client C is shown in Figure 2. p1 and p2 are the branch probabilities of the traffic from Server S. To find the traffic λ3 entering the network level, we use the open equivalent queueing node approach. This input traffic is set equal to the throughput of the shorted link connecting the output of the server and the input of the client. The throughput is calculated based on the upper-level traffic conditions.

[Figure 1. Distributed network application. Server S is attached to an Ethernet and an ATM switch; Client C is attached to an FDDI network.]

In Figure 2, µ1, µ2 and µ3 are the packet transfer rates through the respective networks and are obtained as follows:

[Figure 2. Queueing network model. The traffic source (λ3) branches with probability p1 to the ATM queue (µ1, λ1) and with probability p2 to the Ethernet queue (µ2, λ2); the FDDI queue (µ3) leads to the client.]

Calculating µ1: Transfer Rate over ATM network

Assume that the ATM switch has an N×N space-division architecture with nonblocking and output-buffering features, and that the service time (switch time for one cell) is ∆T for one slot. Assume that the traffic on the N links is identical. Let p denote the probability that a slot on input link i contains a cell. The probability of a cell arriving at an incoming link destined for a particular outgoing link is assumed to be equal to p/N [13]. The cell waiting time at the ATM switch is approximated as

$$D_{cell} = \frac{N-1}{N} \times \frac{p}{2(1-p)} \times \Delta T \qquad (2.1)$$

The packet transmission time through the ATM network, Dp, and the equivalent transfer rate, µ1, are given as

$$D_p = D_t + D_{pr} + D_{cell} \qquad (2.2)$$

$$\mu_1 = 1/D_p \qquad (2.3)$$

where Dt is the packet transmission delay,

$$D_t = \frac{L}{48} \times \Delta T \qquad (2.4)$$

where L is the packet length in bytes (an ATM cell carries 48 bytes of payload) and ∆T is the switch time for one cell, and Dpr, the propagation delay, is given by

$$D_{pr} = 5 \times H \qquad (2.5)$$

where H is the link length. The propagation delay is assumed to be 5 µs per km of cable.

Calculating µ2: Transfer Rate over Ethernet

The average packet waiting time is given by

$$E(w) = 0.5\lambda \times \frac{E(b^2) + (4e+2)E(b)\tau_p + 5\tau_p^2 + 4e(2e-1)\tau_p^2}{1 - \lambda\left(E(b) + \tau_p + 2e\tau_p\right)} + 2\tau_p e - \frac{\left(1 - e^{-2\lambda\tau_p}\right)\left(\frac{1}{\lambda} + \frac{\tau_p}{e} - 3\tau_p\right)}{F_p^*(\lambda)\,e^{-\lambda\tau_p - 1} - 1 + e^{-2\lambda\tau_p}} \qquad (2.6)$$

where λ is the total packet arrival rate to the network, E(b) and E(b²) are the first and second moments of the packet service time, τp is the maximum end-to-end propagation delay, and Fp* is the Laplace transform of the probability density function of the packet service time [14].

The packet transmission time through the Ethernet is given as

$$D_{pe} = E(w) + D_{tp} \qquad (2.7)$$

and the transfer rate, µ2, is

$$\mu_2 = 1/D_{pe} \qquad (2.8)$$

where Dtp is the mean packet transmission and propagation time and is given by

$$D_{tp} = \frac{L}{C} + \frac{\tau_p}{2} \qquad (2.9)$$

where C is the link speed, L is the packet length, and τp is the end-to-end propagation delay.

Calculating µ3: Transfer Rate over FDDI network

The maximum station access delay is given by [15]

$$D_{max} = (M - 1) \times TTRT + 2R \qquad (2.10)$$

where M is the number of stations, TTRT is the target token rotation time, and R is the ring latency.

The maximum packet transmission time and transfer rate through the FDDI network are given by

$$D_{mp} = D_{max} + D_{tp} \qquad (2.11)$$

$$\mu_3 = 1/D_{mp} \qquad (2.12)$$

where Dtp is the packet transmission and propagation time,

$$D_{tp} = \frac{L}{C} + \frac{1}{2} \times 5 \times H \qquad (2.13)$$

where C is the link speed, L is the packet length and H is the link length.

2.2 Protocol Level Analysis

In the protocol level analysis, we analyze in detail the communication protocol running on the HPCC system. The protocol could be a standard protocol like TCP/IP or IP/ATM, or a synthesized protocol, where the designer specifies the implementation of each protocol mechanism or function. Some of the main issues of interest in protocol level analysis are throughput, protocol processing overhead and latency, reliability, and the suitability of a certain flow control or error control mechanism for a particular application.

In the discussion below, we describe a general protocol model, wherein we represent any protocol as a set of functions and not in terms of distinctive software layers as in the OSI model. These functions include connection management, flow control, error control, and acknowledgements. Connection management could encompass functions like data stream segmentation and reassembly, framing, addressing and routing, signaling, priority, and preemption. The flow-control mechanism could be window based, rate based, or credit based. The error control scheme could be stop-and-wait, selective retransmission, go-back-N, etc. What-if scenarios under alternate design implementations could be analyzed, and this would aid the designer in choosing the protocol functions that are appropriate for a given application. A generalized protocol model could be represented by a flow diagram as shown in the example given in Figure 3.

In Figure 3 the data flow is assumed to be duplex, and Regions A and B are shown in a symmetric manner to represent protocols at both ends of the system. The contents of the boxes are determined by the actual protocol. Let us consider a specific case. Let Region A represent a server and let Region B represent a client. We assume a controller that coordinates the protocol level functions. The packets initially pass through the connection management unit, where functions like addressing and routing are performed. The acknowledgment unit represents the initiation of feedback from the receiver end, and this could trigger the appropriate flow-control and error-control functions. The packets are finally consumed at the application level at the receiver end (sink). This can be represented as an open queueing network with some closed chains due to feedback.

[Figure 3. Example of a flow diagram representing protocol level functions. Regions A and B each contain an application, a controller, connection management, flow control, error control, acknowledgment, and a network interface; the two regions are joined by the network.]

In the protocol level analysis, one might need to analyze the impact of using different flow and error control mechanisms on protocol performance, as discussed below:

Flow control: The two main mechanisms are window based and rate based flow control schemes.

(1) Window Based Flow Control: This scheme changes an open queueing network into a closed one [24], and thus the window flow control queueing model is the Norton equivalent queue of the closed queueing network.

(2) Rate Based Flow Control: An important scheme that provides rate based flow control is the leaky bucket scheme. In this scheme, packets cannot be transmitted before they obtain a permit, so the flow of permits controls the flow of packets. The average delay for a packet to obtain a permit is given by [18]

$$a_k = \frac{e^{-\lambda/r}(\lambda/r)^k}{k!} \qquad (2.14)$$

$$p_0 = \frac{r - \lambda}{r a_0}, \qquad p_1 = \frac{(1 - a_0 - a_1)\,p_0}{a_0} \qquad (2.15)$$

The packets initially pass through the connection

$$p_i = -\frac{1}{a_0}\sum_{j=0}^{i-2} a_{i-j}\,p_j + \frac{1 - a_1}{a_0}\,p_{i-1}, \quad \text{for } i \ge 2 \qquad (2.16)$$

$$T = \frac{1}{r}\sum_{j=W+1}^{\infty} p_j\,(j - W) \qquad (2.17)$$

where a_k is the probability of k packet arrivals during one permit interval of length 1/r, the p's are the steady state probabilities in the Markov model used to model the availability of permits, r is the rate at which the permits are allowed into the system, and W is the maximum number of permits. Once the permit is obtained, the packet joins a queue for transmission; the queueing delay at this queue is determined by the distribution of the packet sizes, and standard queueing models are used to calculate the queueing delays.

Error Control: There are different error control schemes such as stop-and-wait, go-back-N, selective repeat, etc. The performance models of some error control schemes are given below in Table 2.1 [19]:

Table 2.1. Performance models of error control schemes

Error Control Scheme   Maximum Throughput      Expected Number of Retransmissions
Stop & Wait            (1-p)/tT                1/(1-p)
Selective Repeat       (1-p)T*l/(l+l')         1/(1-p)
Go back N              (1-p)/[T+(tT-T)p]       [1+(tT/T-1)p]/(1-p)

In this table, p is the probability of receiving a frame in error, T is the time required to transmit a frame, and tT is the minimum time between successive frames. l is the length of the packet (data) field in the frame and l' is the total number of bits in the control fields.

We can use the Norton equivalence for open queueing networks to analyze the protocol performance. The network can be approximated with an equivalent node whose service time is equal to the delay faced by a single message unit passing through the network, as shown in Figure 4. The application that generates the message packets is modeled by a composite Poisson source.

[Figure 4. Model for hierarchical analysis involving protocol and network levels. A composite Poisson source feeds the protocol level, an equivalent node for the network, and the protocol level at the far end, with a feedback path.]

2.3 Application Level Analysis

At this level, we perform analysis of the HPCC application under consideration or a combination of HPCC applications (workloads). We develop analytical models to characterize an HPCC computation on its chosen computing platforms, taking into consideration factors such as workload, problem size, and memory requirements. At this level one may be interested in factors like processor scheduling, parallel or distributed processing, storage strategy, type of storage such as disk or tape (for archives), type of data distribution on disks such as striping and duplicating, use of caches, etc. Analysis is then performed to obtain the end-to-end performance metrics of interest to the application level analysis, such as application response time, application utilization on each computing platform involved in its execution, and application execution cost. In Section 3, we show the performance analysis at the application level in more detail.

3. Case Study: A Video-on-Demand System

In this section, we illustrate how to apply our hierarchical analysis approach to analyze the performance of a Video-on-Demand (VoD) system. Figure 5 shows a 2-tiered architecture for providing video-on-demand services.

A VoD system is divided into several identical customer groups. Each customer group is served by a Local Video Server (LVS) connected through a local area network. Several such LVSs within a geographical area are connected to a Central Video Server (CVS) by a backbone network. Each LVS has a local copy of all the popular videos on its disk storage. The CVS keeps a copy of the popular videos on its disk storage and archives the non-popular ones on a magnetic tape storage system. A client request arrives first at the respective LVS. If the request is for a popular movie and the LVS is not congested, then the request is handled at the LVS itself. However, if the LVS is congested or if the request is for a non-popular video, then it will be directed to the CVS. Since there are only two tiers in the system under study, the request is either served at the CVS or blocked. In the latter scenario, the client will be notified.

[Figure 5. Layout of a VoD system. A Central Video Server (CVS) with disk and archive storage is connected by a backbone network to several Local Video Servers (LVS) with disk storage; customers reach each LVS through a local access network.]
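The two-tier request handling described above can be sketched in a few lines. This is only an illustrative sketch: the `Server` class, the `route_request` function, and the rule that a server counts as congested once its active streams reach a fixed capacity are assumptions made for the example, not details given in the paper.

```python
from dataclasses import dataclass


@dataclass
class Server:
    """Hypothetical video server with a fixed admission limit (assumption)."""
    capacity: int      # maximum number of simultaneous streams
    active: int = 0    # streams currently being served

    def congested(self) -> bool:
        # Assumed congestion rule: no free stream slot is left.
        return self.active >= self.capacity

    def admit(self) -> None:
        self.active += 1


def route_request(popular: bool, lvs: Server, cvs: Server) -> str:
    """Decide where one client request is served: 'LVS', 'CVS', or 'BLOCKED'."""
    # Popular titles are served locally when the LVS has room.
    if popular and not lvs.congested():
        lvs.admit()
        return "LVS"
    # Non-popular titles, and popular ones blocked at the LVS, go to the CVS.
    if not cvs.congested():
        cvs.admit()
        return "CVS"
    # Only two tiers exist, so a request refused by the CVS is blocked.
    return "BLOCKED"


if __name__ == "__main__":
    lvs, cvs = Server(capacity=1), Server(capacity=1)
    for is_popular in (True, True, False):
        print(route_request(is_popular, lvs, cvs))
```

In the analysis that follows, the capacity of each server is not a free parameter as it is here; it is derived from the disk retrieval throughput and the guaranteed playback rate.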

The goal of our analysis is to demonstrate how this hierarchical approach can be used to analyze the performance of different configurations of network or computing technologies.

3.1 Application Level Analysis

The objective of the application level analysis is to determine the parameters and issues of interest with respect to an application such as VoD. This first involves determining the features of the servers, such as disk storage, presence of archives, scheduling of file retrievals, queueing of requests, QoS guarantees, and the client-side buffer for minimum build-up. The arrival of video requests from customers is assumed to be a Poisson process. The analysis here involves determining the load handling capacity at the LVS and the CVS. The load handling capacity indicates the maximum number of customers that the system can support and the blocking probability for customer requests (admission control).

[Figure 6(a). Closed queueing network representation of frame retrieval at the LVS, with scheduler (µsched), disk (µdisk) and protocol (µProtocol) queues.]

[Figure 6(b). Reduced network of Figure 6(a), used to calculate the equivalent Poisson source into the protocol layer; its output is the throughput T(n).]

The number of customers that can be handled at the LVS depends primarily on the retrieval capability of the disk subsystem. Once customers are admitted, we assume a round robin scheduling strategy, where each customer is allotted a service time for frame retrieval. A service round is completed when all the admitted customers have been served once. The service rate is proportional to the retrieval capability. For a disk, the retrieval capacity is given by kRd Megabits/sec (Mbps), where Rd is the retrieval rate from a disk head, and k is a factor to account for retrieval latencies. Also, we assume that there are d disks in a disk array.

3.1.1 Application level analysis at the local server

Assuming the average size of a frame to be F Mbits, we convert the retrieval rate to frames per second. We assume that in a service round only 1 frame is retrieved for a customer. During the QoS negotiation phase, we agree to guarantee a minimum playback rate to the customers, equal to Rpl (we assume 24 frames/sec). We model the frame retrieval from disks as a closed queueing network (see Figure 6(a)). To get the equivalent application queueing model into the protocol layer, the protocol layer equivalent queue is shorted (Figure 6(b)). So, for a state-dependent throughput of T(nL), the number of customers that can be admitted will be

$$n_L = T(n_L)/R_{pl} \qquad (4.1)$$

This is obtained by iteration until we arrive at the maximum supportable number of customers. The throughput can be calculated using the Mean Value Analysis algorithm [20].

Given a set of system parameters for the LVS: a mean frame size of 28000 bytes/frame (we use the value obtained from the statistics of a Star Wars MPEG1 trace [21]), a disk retrieval rate of 66.96 frames/s (per disk), and a scheduling rate of 60000/s, we obtain the capacity of the LVS as shown in Figure 7(a).

[Figure 7(a). Throughput vs. number of users. Axes: throughput (frames) against number of users; curves for 5, 10 and 15 disks and for the required frames.]

For 15 disks, the throughput is T(nL) = 1004 frames/s, and the maximum number of requests the LVS can handle is nL = 41. This number is used to estimate the traffic through the local network. Thus for a given server, with a given number of disks, we can find the limit on the number of customers who can be guaranteed a quality of service.

Since the server is now simultaneously handling nL requests, we can consider the LVS to be composed of nL virtual servers and thus model the LVS as an M/M/nL/nL system [22], where queueing of requests is not allowed. This assumes the service times to be exponentially distributed with a mean service time of Tm, which is equal to the duration of the movie, taking into account the interaction periods with the client. We assume Tm = 3600 s (µ = 1/Tm). From this M/M/nL/nL model, we can compute the blocking probability PB(L) for a request whose arrival rate is λp (popular video requests). This is given by the following equation:

$$P_B^{(L)} = \frac{\rho^{n_L}}{n_L!}\,p_0 \qquad (4.2)$$

where ρ = λp/µ and

$$p_0 = \left[\sum_{c=0}^{n_L} \frac{\rho^c}{c!}\right]^{-1} \qquad (4.3)$$
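As a numerical illustration of the admission limit (4.1) and the blocking probability (4.2)-(4.3), the following is a minimal sketch. The function names and the arrival rate in the usage section are hypothetical; the throughput of 1004 frames/s, the playback rate of 24 frames/s, and Tm = 3600 s are the values quoted in the text. The sketch takes the converged throughput as given and does not reproduce the Mean Value Analysis iteration that yields it.

```python
import math


def max_admitted_customers(throughput_fps: float, playback_fps: float = 24.0) -> int:
    """Whole number of streams a given retrieval throughput sustains, as in (4.1)."""
    return math.floor(throughput_fps / playback_fps)


def blocking_probability(n_servers: int, rho: float) -> float:
    """Blocking probability of an M/M/n/n system, equations (4.2)-(4.3)."""
    term = 1.0           # rho**0 / 0!
    total = term         # running sum of rho**c / c!, i.e. 1 / p0
    for c in range(1, n_servers + 1):
        term *= rho / c  # builds rho**c / c! without large factorials
        total += term
    return term / total  # (rho**n / n!) * p0


if __name__ == "__main__":
    n_l = max_admitted_customers(1004.0)   # 15-disk LVS throughput from the text
    t_m = 3600.0                           # mean service time in seconds
    lambda_p = 30.0 / 3600.0               # hypothetical: 30 popular requests per hour
    rho = lambda_p * t_m                   # rho = lambda_p / mu with mu = 1 / t_m
    print(n_l, blocking_probability(n_l, rho))
```

Accumulating the terms ρ^c/c! incrementally keeps the computation in ordinary floating point even for several dozen virtual servers, which is why the factorials are not evaluated directly.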

The plot of the request blocking probability at the LVS against N, the maximum number of supported customers, is shown in Figure 7(b).

[Figure 7(b). Request blocking probability at LVS. Curves for N=8, N=16, N=23 and N=31.]

3.1.2. Application level analysis at the central server

Figure 8 shows the model of the video retrieval process at the CVS. All blocked requests as well as requests for non-popular movies are handled at the CVS. The arrival rate of requests to the CVS from an LVS would then be (λpPB(L) + λnp). Assuming there are L LVSs managed by one CVS, the total arrival rate at the CVS would be Lλnp for the non-popular movies and LλpPB(L) for the popular ones. This is shown in Figure 8 as λ = L(PB(L)λp + λnp). The acceptance rate for popular movies is given by λp* = L(1-PB(C))PB(L)λp and the acceptance rate for non-popular movies by λnp* = L(1-PB(C))λnp. These requests for popular and non-popular movies are handled separately at the CVS; the former by the disk storage subsystem (service rate µd) and the latter by the archival subsystem. The branch probability to the archive storage is Pa = λnp*/(λp*+λnp*) and the branch probability to the disks is Pd = λp*/(λp*+λnp*). That is, we assume the relative access probabilities to the disk and archive to be in the proportion of the relative accepted request rates for popular and non-popular movies, respectively. The non-popular movies are first accessed from the archives (which could be a magnetic-tape storage system) and are then laid out on the disk caches using efficient algorithms for striping and placement [23]. Now, the scheduler generates disk reads to retrieve the video files.

[Figure 8. Video file retrieval process at the CVS. Requests arrive at rate λ; a fraction Pbλ is blocked and (1-Pb)λ is accepted by the stream manager, then branches (pnp) to the archive access delay or (pp) to the scheduler and disks.]

As in the case of the LVSs, the blocking probability for requests is derived from the M/M/n/n model, for which we need to determine the number of customers accepted by the CVS with guaranteed QoS. The closed queueing network model for video retrieval in the CVS is similar to that for the LVS, as shown in Figure 8.

Given the system parameters for the CVS: disk retrieval rate = 66.96 frames/s (per disk), scheduling rate = 60000/s, number of LVSs L = 10, the maximum number of customers that can be supported for a number of disks ranging from 30 to 60 is shown in Table 4.2 along with the corresponding frame throughput.

Table 4.2 CVS parameters and capacity

# of Disk-heads                        30     40     50     60
Total Throughput (frames/s)            1108   1470   1832   2203
Max N (number of customers accepted)   46     61     76     92

Thus, from these computations, with the system capacity nc, and modeling the server as an M/M/nc/nc system, we derive the blocking probabilities of the admission control phase as shown in Figure 7(c).

[Figure 7(c). Request Blocking Probability at CVS.]

3.2 Protocol Level Analysis

In the VoD system, the average frame delay is an important parameter that will not only limit how many users can be admitted into the system, but also determine how large the buffer in the set-top box should be. In this case study, we evaluate two kinds of flow control schemes: window flow control and leaky bucket flow control. In order to evaluate and compare these two schemes, we assume that the packet size (L) and the average network delay (µ) are the same in both.

[Figure 9(a). Flow control closed network, built from network equivalent queues.]

Window flow control: In window-based flow control, an open queueing network is modeled as a closed queueing network [24]. This closed network is a cyclic queueing

network composed of two network layer equivalent queues as shown in Figure 9(a). The number of packets in the closed network is the window size. By applying the Mean Value Analysis algorithm, we can get the throughput (S) of this queueing network. Then, we can determine the average packet delay to be equal to 1/S.

Since each packet from the application layer will require a protocol processing delay equal to an average period 1/S, we can substitute the protocol level queueing network with an equivalent queueing node with a service rate S. The throughput S depends on the window size and the service rate of the equivalent queue corresponding to the network (see Figure 9(b)). For window sizes of 8 and 16, the relation between the average frame delay and the number of users admitted to the service is shown in Figure 10. In this figure, we assume that the average frame length is 28000 bytes, the packet length at the protocol level is 1000 bits, and the network bandwidth is 155 Mbps.

Figure 9(b). Flow control equivalent queue (diagram labels: Poisson source from application level; equivalent server, service rate = S).

Figure 10. Average frame delay vs. number of users

Leaky bucket flow control scheme: In this analysis we assume that permits arrive at a rate of one per 1/r seconds, where r is the transmission rate of the network equivalent queue measured in packets transmitted per second (see Figure 11(a)). By using equations (2.14) to (2.17), we can calculate the average time (T) a packet will wait for a permit to join the transmission queue. Since every packet first needs to wait for a permit and then wait to be transmitted, we can model this scheme with two M/M/1 queues in series as shown in Figure 11(b). The first one is the equivalent waiting queue (with service rate 1/T) while the second one is the transmission queue with service rate r.

Figure 11(a). Leaky bucket flow control scheme (diagram labels: packets waiting for permit, permit bucket, transmission queue).

Figure 11(b). Equivalent queue of leaky bucket flow control (diagram labels: equivalent waiting queue, transmission queue).

For a specific number of users in the VoD system, we can first calculate the number of packets that will be generated by the application level, then we calculate the average number of packets in these two series queues. Further, we can get the average delay a frame will experience during the processing of the protocol functions. The relation between average frame delay and the number of users admitted to the VoD system is plotted when the bucket size is 8 and 16.

From Figure 10, we know that if the window size of window flow control equals the bucket size of the leaky bucket scheme, both flow control schemes provide similar frame delay for a small number of users in the VoD system. However, when the number of users increases, the leaky bucket scheme provides much lower frame delay. Furthermore, when the window size of the window flow control increases, the average frame delay decreases and approaches the curve of the leaky bucket flow control scheme.

3.3. Network Level Analysis

In this level of analysis, we concentrate on the design and the performance analysis of different network technologies such as Switched Ethernet, ATM, FDDI, etc. The performance metrics of interest at this level include network transfer delay, cell loss probability, throughput, error rates, etc. We need to perform the network level analysis for the central and local networks. For this VoD system, we analyze the network transmission delay and the average packet delay. We also determine the amount of traffic that can be handled by various networks, and suggest the ideal network technology for the given application.

3.3.1. Analysis of the Local Access Network

Based on the analysis performed at the application level and protocol level, we obtain the traffic, and can


thus proceed with the network level analysis. We evaluate the packet transfer time when the clients (set-top boxes and/or computers) are connected by a 100 Mbps Fast Ethernet switch, a 25 Mbps ATM switch, and a 155 Mbps ATM switch.

To calculate the packet transfer delay for each local access network, the following queueing network can be used to model the structure of the local access network. Each link in the network is modeled as an M/M/1 queue, hence the packet delay for the local access network is the sum of the delay from the client to the switch and the delay from the switch to the video server.

Figure 12. Queueing model of local access network (diagram labels: User 1, User 2, ..., User n; set-top to switch; switch to video server).

The packet delay for each network type is shown in Figure 13. From this analysis, it is clear that if we use a 155 Mbps link to connect the set-top device and the video server, the local access network can support up to 30 users accessing the video server.

Figure 13. Average frame delay vs. number of users (curves: Ethernet, 25 Mbps ATM, 155 Mbps ATM, time between frames; x-axis: number of users; y-axis: average frame delay).

3.3.2. Analysis of the CVS Backbone Network

To calculate the average frame delay from the central server to the client, the following queueing model can be used to model the two-tier structure of the VoD system. The frame delay consists of the delay of the local access link, the delay of the backbone connection and the delay in the connection to the central server as shown in Figure 14.

Figure 14. Queueing model of the backbone network (diagram labels: User 1, User 2, ..., User n; local access link; backbone connection; backbone connection to the central server).

We assume that the client is connected to the local access network by a 155 Mbps ATM connection and that 30 users are allowed to access the video server simultaneously. In this analysis, we study two types of ATM backbone networks with ATM connections operating at 155 and 622 Mbps. Figure 15 shows the relation between the average video frame delay and the number of local access networks. Based on our assumptions and calculations, if a 622 Mbps ATM network is used to interconnect the local access networks to the central video server, 38 local access networks can be connected to the central video server.

Figure 15. Average frame delay vs. number of LVS (curves: 155 Mbps ATM, 622 Mbps ATM, time between frames; x-axis: number of users; y-axis: average frame delay).

5. Conclusion

In this paper, we presented a three-level hierarchical analysis approach to analyze the performance of a High Performance Computing and Communication system and its applications. We used queueing network models to represent the system in each of its three levels of abstraction. Norton Equivalence for queueing networks and equivalent queueing-node models were used to isolate and analyze each of the three levels of analysis. The analysis at each layer can be carried out in detail. Our approach


enables users to perform sensitivity analysis and evaluate the performance of different designs. We also described a simple 2-tiered VoD system and analyzed its performance at the application and network levels. A proof-of-concept design and analysis tool, Net-HPCC, has been implemented along the lines of our approach at the ATM HPDC laboratory at The University of Arizona. Further details about the Net-HPCC toolkit can be found at http://www.ece.arizona.edu/~hpdc.

References

[1] W. Whitt, “The Queueing Network Analyzer”, Bell System Technical Journal, Vol. 62, No. 9, Nov. 1983, pp. 2779-2815.
[2] D. W. Bachmann, M. E. Segal, M. Srinivasan, and T. J. Teorey, “Netmod: A Design Tool for Large Scale Heterogeneous Campus Networks”, IEEE Journal on Selected Areas in Communications, Vol. 9, No. 1, Jan. 1991.
[3] Rindos, W. Metz, R. Draper, A. Zaghloul, “Netmodeler: An Object Oriented Performance Analysis Tool”, IBM, RTP, NC, USA.
[4] W. C. Lau and S. Q. Li, “Traffic Analysis in Large-Scale High-Speed Integrated Networks: Validation of Nodal Decomposition Approach”, Proc. IEEE INFOCOM’93, pp. 1320-1329, 1993.
[5] L. Kleinrock, Communication Nets; Stochastic Message Flow and Delay, New York: McGraw-Hill, 1964. Reprinted by Dover Publications, 1972.
[6] L. Kleinrock, “On the Modeling and Analysis of Computer Networks”, Proc. of the IEEE, Vol. 81, No. 8, August 1993.
[7] J. F. Kurose and H. T. Mouftah, “Computer-Aided Modeling, Analysis, and Design of Communication Networks”, IEEE Journal on Selected Areas in Communications, Vol. 6, No. 1, January 1988.
[8] L. Kleinrock, Queueing Systems, Vol. 2: Computer Applications, Wiley, New York, 1976.
[9] M. Schwartz, Telecommunication Networks: Protocols, Modeling and Analysis, Reading, MA: Addison-Wesley, 1987.
[10] R. W. Wolff, Stochastic Modeling and the Theory of Queues, International Series in Industrial and Systems Engineering, Prentice Hall, Englewood Cliffs, New Jersey, 1989.
[11] T. G. Robertazzi, Computer Networks and Systems, Springer Verlag, New York, 1994.
[12] K. M. Chandy, U. Herzog and L. Woo, “Parametric Analysis of Queuing Networks”, IBM J. Res. Develop., January 1975, pp. 36-42.
[13] M. J. Karol et al., “Input versus Output Queueing on a Space-Division Packet Switch”, IEEE Trans. Comm., Vol. 35, No. 12, pp. 1347-1356, 1987.
[14] E. Drakopoulos, M. J. Merges, “Performance Analysis of Client-Server Storage Systems”, IEEE Trans. on Computers, Vol. 41, No. 11, November 1992.
[15] S. Mirchandani, R. Khanna, FDDI Technology and Application, 1993.
[16] S. Lam, “Carrier Sense Multiple Access Protocol for Local Area Networks”, Computer Networks, Vol. 4, 1980, pp. 21-32.
[17] L. C. Mitchell, D. A. Lide, “End-To-End Performance Modeling of Local Area Networks”, IEEE Journal on Selected Areas in Communications, Vol. SAC-4, No. 6, September 1986.
[18] D. Bertsekas and R. Gallager, Data Networks, Prentice Hall, 1991.
[19] M. Schwartz, Telecommunication Networks: Protocols, Modeling and Analysis, Addison Wesley.
[20] M. Reiser, “Mean-Value Analysis and Convolution Method for Queue-Dependent Servers in Closed Queueing Networks”, Performance Evaluation, ACM, Vol. 1, 1981.
[21] M. Garrett, “starwars.frames”, available via anonymous ftp from thumper.bellcore.com.
[22] V. O. K. Li, W. Liao, X. Qiu, E. W. M. Wong, “Performance Model of Interactive Video-on-Demand Systems”, IEEE Journal on Selected Areas in Communications, Vol. 14, No. 6, August 1996.
[23] Y. H. Chang, D. Coggins, D. Pitt, D. Skellern, M. Thapar, C. Venkataraman, “An Open-Systems Approach to Video on Demand”, IEEE Communications Magazine, May 1994.
[24] M. Reiser, “A Queueing Network Analysis of Computer Communication Networks with Window Flow Control”, IEEE Trans. on Comm., Vol. COM-27, August 1979.
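As a worked illustration of the window flow control analysis in the protocol level discussion (a cyclic closed network of two equivalent queues, with the window size as the number of circulating packets), the Mean Value Analysis recursion can be sketched as follows. This is a minimal sketch, not the Net-HPCC implementation: it assumes single-server exponential queues that each packet visits once per cycle, and the function name `mva_cyclic_throughput` and the service rates in the usage example are hypothetical.

```python
def mva_cyclic_throughput(service_rates, window_size):
    """Exact Mean Value Analysis for a cyclic closed queueing network.

    Assumptions (not taken from the paper): every queue is a single-server
    exponential queue and each packet visits every queue once per cycle.
    service_rates: service rate of each queue, in packets per second.
    window_size:   number of packets circulating in the closed network.
    Returns the network throughput S in packets per second.
    """
    if window_size < 1:
        raise ValueError("window_size must be at least 1")
    if any(mu <= 0 for mu in service_rates):
        raise ValueError("service rates must be positive")

    mean_queue_len = [0.0] * len(service_rates)  # Q_k(0) = 0
    throughput = 0.0
    for n in range(1, window_size + 1):
        # Arrival theorem: an arriving packet sees the network with n-1 packets.
        response = [(1.0 + q) / mu
                    for q, mu in zip(mean_queue_len, service_rates)]
        throughput = n / sum(response)            # cycle throughput with n packets
        mean_queue_len = [throughput * r for r in response]  # Little's law
    return throughput


if __name__ == "__main__":
    # Hypothetical rates: 155 Mbps / 1000-bit packets = 155000 packets/s per queue.
    rates = [155000.0, 155000.0]
    for window in (8, 16):
        s = mva_cyclic_throughput(rates, window)
        print(f"window={window}: S={s:.1f} packets/s, 1/S={1.0 / s:.3e} s")
```

Under these assumptions the throughput S grows with the window size and never exceeds the slowest queue's service rate, which is consistent with the observation that a larger window lowers the average frame delay.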

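The network level analysis models each link as an M/M/1 queue and adds the per-link delays, and the leaky bucket scheme is modeled in the same way as two M/M/1 queues in series. The sketch below shows that calculation using the standard M/M/1 mean delay 1/(µ - λ). The function names and all numeric values in the usage example are hypothetical and chosen only for illustration; they are not the parameters used to produce the paper's figures.

```python
def mm1_delay(arrival_rate, service_rate):
    """Mean time in system (waiting plus service) of an M/M/1 queue, in seconds."""
    if arrival_rate < 0:
        raise ValueError("arrival_rate must be non-negative")
    if service_rate <= arrival_rate:
        raise ValueError("queue is unstable: service_rate must exceed arrival_rate")
    return 1.0 / (service_rate - arrival_rate)


def series_delay(links):
    """Total mean delay over M/M/1 queues in series.

    links: iterable of (arrival_rate, service_rate) pairs, one pair per queue,
    with both rates in packets per second.
    """
    return sum(mm1_delay(lam, mu) for lam, mu in links)


if __name__ == "__main__":
    # Hypothetical local access network: n users, each sending per_user_rate
    # packets/s over a dedicated set-top-to-switch link, with all users
    # sharing the switch-to-server link.
    n_users = 30
    per_user_rate = 4000.0    # packets/s, assumed for illustration
    link_rate = 155000.0      # 155 Mbps with 1000-bit packets
    delay = series_delay([
        (per_user_rate, link_rate),             # set-top to switch
        (n_users * per_user_rate, link_rate),   # switch to video server
    ])
    print(f"mean packet delay: {delay:.3e} s")
```

Sweeping `n_users` in such a sketch gives a delay curve that rises sharply as the shared link approaches saturation, which is the qualitative shape the analysis relies on when it bounds the number of supported users.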

You might also like

- Plugin Science 1Document18 pagesPlugin Science 1Ask Bulls BearNo ratings yet

- A Survey of Network Simulation Tools: Current Status and Future DevelopmentsDocument6 pagesA Survey of Network Simulation Tools: Current Status and Future DevelopmentsSebastián Ignacio Lorca DelgadoNo ratings yet

- Clustering Traffic FullDocument63 pagesClustering Traffic Fullprabhujaya97893No ratings yet

- Pce Based Path Computation Algorithm Selection Framework For The Next Generation SdonDocument13 pagesPce Based Path Computation Algorithm Selection Framework For The Next Generation SdonBrian Smith GarcíaNo ratings yet

- MGRP CiscoDocument8 pagesMGRP CiscoSuryadana KusumaNo ratings yet

- Wireless Communications - 2004 - Anantharaman - TCP Performance Over Mobile Ad Hoc Networks a Quantitative StudyDocument20 pagesWireless Communications - 2004 - Anantharaman - TCP Performance Over Mobile Ad Hoc Networks a Quantitative Studyafe123.comNo ratings yet

- The Track & TraceDocument11 pagesThe Track & TraceReddy ManojNo ratings yet

- An Evaluation Study of AnthocnetDocument37 pagesAn Evaluation Study of AnthocnetExpresso JoeNo ratings yet

- D R - E P G D: Esigning UN Time Nvironments To Have Redefined Lobal YnamicsDocument16 pagesD R - E P G D: Esigning UN Time Nvironments To Have Redefined Lobal YnamicsAIRCC - IJCNCNo ratings yet

- Network Administrator Assistance System Based On FDocument7 pagesNetwork Administrator Assistance System Based On FMohamed Ali Hadj MbarekNo ratings yet

- Techniques For Simulation of Realistic Infrastructure Wireless Network TrafficDocument10 pagesTechniques For Simulation of Realistic Infrastructure Wireless Network TrafficA.K.M.TOUHIDUR RAHMANNo ratings yet

- A Queueing Model For Evaluating The Transfer Latency of Peer To Peer SystemsDocument12 pagesA Queueing Model For Evaluating The Transfer Latency of Peer To Peer SystemsSiva Vijay MassNo ratings yet

- Java IEEE AbstractsDocument24 pagesJava IEEE AbstractsMinister ReddyNo ratings yet

- Automation of Network Protocol Analysis: International Journal of Computer Trends and Technology-volume3Issue3 - 2012Document4 pagesAutomation of Network Protocol Analysis: International Journal of Computer Trends and Technology-volume3Issue3 - 2012surendiran123No ratings yet

- Research Statement Highlights Momentum-Based Distributed Stochastic Network ControlDocument4 pagesResearch Statement Highlights Momentum-Based Distributed Stochastic Network ControlMaryam SabouriNo ratings yet

- Fggy KKL SkyDocument14 pagesFggy KKL SkyFahd SaifNo ratings yet

- Paper ArchitecturalSurveyDocument9 pagesPaper ArchitecturalSurveyMuhammmadFaisalZiaNo ratings yet

- Analysis of Performance Evaluation Techniques for Wireless Sensor NetworksDocument12 pagesAnalysis of Performance Evaluation Techniques for Wireless Sensor Networksenaam1977No ratings yet

- Computer Network Performance Evaluation Based On Different Data Packet Size Using Omnet++ Simulation EnvironmentDocument5 pagesComputer Network Performance Evaluation Based On Different Data Packet Size Using Omnet++ Simulation Environmentmetaphysics18No ratings yet

- Y Performance Evaluation For SDN Deployment An Approach Based On Stochastic Network CalculusDocument9 pagesY Performance Evaluation For SDN Deployment An Approach Based On Stochastic Network CalculusTuan Nguyen NgocNo ratings yet

- One Sketch To Rule Them All: Rethinking Network Flow Monitoring With UnivmonDocument14 pagesOne Sketch To Rule Them All: Rethinking Network Flow Monitoring With Univmonthexavier666No ratings yet

- Energy Management in Wireless Sensor NetworksFrom EverandEnergy Management in Wireless Sensor NetworksRating: 4 out of 5 stars4/5 (1)

- Analysis of Behavior of MAC Protocol and Simulation of Different MAC Protocol and Proposal Protocol For Wireless Sensor NetworkDocument7 pagesAnalysis of Behavior of MAC Protocol and Simulation of Different MAC Protocol and Proposal Protocol For Wireless Sensor NetworkAnkit MayurNo ratings yet

- System Level Analysis For ITS G5 and LTE V2X Performance ComparisonDocument10 pagesSystem Level Analysis For ITS G5 and LTE V2X Performance Comparisonمصعب جاسمNo ratings yet

- Analytical Modeling of Bidirectional Multi-Channel IEEE 802.11 MAC ProtocolsDocument19 pagesAnalytical Modeling of Bidirectional Multi-Channel IEEE 802.11 MAC ProtocolsAbhishek BansalNo ratings yet

- 2021-COMNET-5G Network Slices Resource Orchestration NSALHABDocument25 pages2021-COMNET-5G Network Slices Resource Orchestration NSALHABNguyễn Tiến NamNo ratings yet

- Modeling and Simulation of Computer Networks and Systems: Methodologies and ApplicationsFrom EverandModeling and Simulation of Computer Networks and Systems: Methodologies and ApplicationsFaouzi ZaraiNo ratings yet

- Implementing On-Line Sketch-Based Change Detection On A Netfpga PlatformDocument6 pagesImplementing On-Line Sketch-Based Change Detection On A Netfpga PlatformHargyo Tri NugrohoNo ratings yet

- Critical Path ArchitectDocument11 pagesCritical Path ArchitectBaluvu JagadishNo ratings yet

- Abid 2013Document7 pagesAbid 2013Brahim AttiaNo ratings yet

- A Formal Approach To Distributed System TestsDocument10 pagesA Formal Approach To Distributed System Testskalayu yemaneNo ratings yet

- Internet of ThingsDocument20 pagesInternet of ThingsKonark JainNo ratings yet

- Tutorial On High-Level Synthesis: and WeDocument7 pagesTutorial On High-Level Synthesis: and WeislamsamirNo ratings yet

- Network Statistical AnalysisDocument19 pagesNetwork Statistical AnalysisvibhutepmNo ratings yet

- Measurement of Commercial Peer-To-Peer Live Video Streaming: Shahzad Ali, Anket Mathur and Hui ZhangDocument12 pagesMeasurement of Commercial Peer-To-Peer Live Video Streaming: Shahzad Ali, Anket Mathur and Hui ZhangLuisJorgeOjedaOrbenesNo ratings yet

- Shift Register Petri NetDocument7 pagesShift Register Petri NetEduNo ratings yet

- STC Micro09Document12 pagesSTC Micro09Snigdha DasNo ratings yet

- Peer To PeerDocument8 pagesPeer To PeerIsaac NabilNo ratings yet

- QoS-enabled ANFIS Dead Reckoning AlgorithmDocument11 pagesQoS-enabled ANFIS Dead Reckoning AlgorithmacevalloNo ratings yet

- Yield-Oriented Evaluation Methodology of Network-on-Chip Routing ImplementationsDocument6 pagesYield-Oriented Evaluation Methodology of Network-on-Chip Routing Implementationsxcmk5773369nargesNo ratings yet

- Simulation-Based Performance Evaluation of Routing Protocols For Mobile Ad Hoc NetworksDocument11 pagesSimulation-Based Performance Evaluation of Routing Protocols For Mobile Ad Hoc NetworksMeenu HansNo ratings yet

- High Performance Computing in Power System Applications.: September 1996Document24 pagesHigh Performance Computing in Power System Applications.: September 1996Ahmed adelNo ratings yet

- 07 - Network Traffic Classification Using K-Means ClusteringDocument6 pages07 - Network Traffic Classification Using K-Means ClusteringfuturerestNo ratings yet

- Experimental Evaluation of LANMAR, A Scalable Ad-Hoc Routing ProtocolDocument6 pagesExperimental Evaluation of LANMAR, A Scalable Ad-Hoc Routing ProtocolveereshkumarmkolliNo ratings yet

- Network Administrator Assistance System Based On Fuzzy C-Means AnalysisDocument2 pagesNetwork Administrator Assistance System Based On Fuzzy C-Means AnalysisAli ShaikNo ratings yet

- 3 AfbDocument10 pages3 AfbWalter EscurraNo ratings yet

- Wireless Networks Throughput Enhancement Using Artificial IntelligenceDocument5 pagesWireless Networks Throughput Enhancement Using Artificial Intelligenceabs sidNo ratings yet

- A Comparison of Algorithms For Controller Replacement in Software Defined NetworkingDocument4 pagesA Comparison of Algorithms For Controller Replacement in Software Defined NetworkingAnoop BennyNo ratings yet

- 10.1.1.139.6493 Co-SimulationDocument14 pages10.1.1.139.6493 Co-SimulationОлександр ДорошенкоNo ratings yet

- Increasing Network Lifetime by Energy-Efficient Routing Scheme For OLSR ProtocolDocument5 pagesIncreasing Network Lifetime by Energy-Efficient Routing Scheme For OLSR ProtocolManju ManjuNo ratings yet

- Vlsi Handbook ChapDocument34 pagesVlsi Handbook ChapJnaneswar BhoomannaNo ratings yet

- Performance Evaluation of Parallel Sorting Algorithms On IMAN1 SupercomputerDocument17 pagesPerformance Evaluation of Parallel Sorting Algorithms On IMAN1 SupercomputerBrayanNo ratings yet

- Hardware Performance Simulations of Round 2 Advanced Encryption Standard AlgorithmsDocument55 pagesHardware Performance Simulations of Round 2 Advanced Encryption Standard AlgorithmsMihai Alexandru OlaruNo ratings yet

- TCP Congestion Control Survey MechanismsDocument8 pagesTCP Congestion Control Survey MechanismsChiranjib PatraNo ratings yet

- Pal A Charla 1997Document13 pagesPal A Charla 1997Gokul SubramaniNo ratings yet

- A Modular Architecture FullDocument27 pagesA Modular Architecture Fulljohn mortsNo ratings yet

- Ijet V3i4p13Document6 pagesIjet V3i4p13International Journal of Engineering and TechniquesNo ratings yet

- Computers in Industry: Gonca Tuncel, Gu Lgu N AlpanDocument10 pagesComputers in Industry: Gonca Tuncel, Gu Lgu N AlpanJUAN ALEJANDRO TASCÓN RUEDANo ratings yet

- An Application of Genetic Fuzzy System in Active Queue Management For TCP/IP Multiple Congestion NetworksDocument6 pagesAn Application of Genetic Fuzzy System in Active Queue Management For TCP/IP Multiple Congestion NetworkserpublicationNo ratings yet

- CWRC Project: Implementation Phase OneDocument13 pagesCWRC Project: Implementation Phase Onepoppy tooNo ratings yet

- Trusted Identity As A Service: White Paper 2019Document14 pagesTrusted Identity As A Service: White Paper 2019poppy tooNo ratings yet

- Assignment 1: A, B, C, U, VDocument1 pageAssignment 1: A, B, C, U, Vpoppy tooNo ratings yet

- Mobile Identity Authentication: A Secure Technology Alliance Mobile Council White PaperDocument39 pagesMobile Identity Authentication: A Secure Technology Alliance Mobile Council White Paperpoppy tooNo ratings yet

- Rcs Business CommunicationDocument18 pagesRcs Business Communicationpoppy tooNo ratings yet

- CCK/F/FSM/03: Application For Frequency Assignment and Licence in The Fixed and Mobile Radio Communication ServiceDocument3 pagesCCK/F/FSM/03: Application For Frequency Assignment and Licence in The Fixed and Mobile Radio Communication Servicepoppy tooNo ratings yet

- X3550M2 1U Rack Mountable ServerDocument2 pagesX3550M2 1U Rack Mountable Serverpoppy tooNo ratings yet

- x3550m2 HW Installation User GuideDocument138 pagesx3550m2 HW Installation User Guidepoppy tooNo ratings yet

- Communications Act PDFDocument53 pagesCommunications Act PDFlandry NowaruhangaNo ratings yet

- VNL Cascading Star Architecture for Rural GSM and BroadbandDocument1 pageVNL Cascading Star Architecture for Rural GSM and Broadbandpoppy tooNo ratings yet

- CCK grants provisional approval for Vihaan Networks equipmentDocument3 pagesCCK grants provisional approval for Vihaan Networks equipmentpoppy tooNo ratings yet

- Type Approval Distribution of Communications Equipment 2009Document12 pagesType Approval Distribution of Communications Equipment 2009poppy tooNo ratings yet

- CCK/F/FSM/03: Application For Frequency Assignment and Licence in The Fixed and Mobile Radio Communication ServiceDocument3 pagesCCK/F/FSM/03: Application For Frequency Assignment and Licence in The Fixed and Mobile Radio Communication Servicepoppy tooNo ratings yet

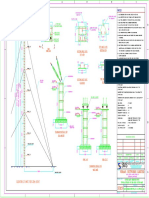

- Drawing 1 EriccsonDocument11 pagesDrawing 1 Ericcsonpoppy tooNo ratings yet

- VNL-Essar Field Trial: Nairobi-KenyaDocument13 pagesVNL-Essar Field Trial: Nairobi-Kenyapoppy tooNo ratings yet

- Kenya YU 2decDocument4 pagesKenya YU 2decpoppy tooNo ratings yet

- YU Kenya AgrDocument11 pagesYU Kenya Agrpoppy tooNo ratings yet

- Carrier Gride AP25dbi - PA1 PDFDocument4 pagesCarrier Gride AP25dbi - PA1 PDFpoppy tooNo ratings yet

- Carrier Gride AP25dbi - PA1 PDFDocument4 pagesCarrier Gride AP25dbi - PA1 PDFpoppy tooNo ratings yet

- Fop Faq: PDF Created by Apache FOPDocument20 pagesFop Faq: PDF Created by Apache FOPpoppy tooNo ratings yet

- VNL SLA-1-reviewDocument23 pagesVNL SLA-1-reviewpoppy tooNo ratings yet

- 20 GMT Medium 170 PDFDocument1 page20 GMT Medium 170 PDFpoppy tooNo ratings yet

- Idu 4e1 Master Box Rev 01Document1 pageIdu 4e1 Master Box Rev 01poppy tooNo ratings yet

- Dual Polarized Base Station AntennasDocument3 pagesDual Polarized Base Station Antennaspuput_asNo ratings yet

- Carrier Gride AP25dbi - PA1 PDFDocument4 pagesCarrier Gride AP25dbi - PA1 PDFpoppy tooNo ratings yet

- Safaricom Cluster: Proposed Plan (Phase 1)Document5 pagesSafaricom Cluster: Proposed Plan (Phase 1)poppy tooNo ratings yet

- Agreement Pilot Project VNL - UTLDocument39 pagesAgreement Pilot Project VNL - UTLpoppy tooNo ratings yet

- V P O A CDMA800 VP/360/10.5/0 Type VNL33: Ertically Olarised Mnidirectional NtennaDocument1 pageV P O A CDMA800 VP/360/10.5/0 Type VNL33: Ertically Olarised Mnidirectional Ntennapoppy tooNo ratings yet

- SMS Design Consultants Pvt. LtdDocument1 pageSMS Design Consultants Pvt. Ltdpoppy tooNo ratings yet

- Software Project Model For: Library Management SystemDocument7 pagesSoftware Project Model For: Library Management SystemMaria AsifNo ratings yet

- Using The Agilent Instrument Control Framework To Control The Agilent 1290 Infinity LC Through Waters Empower SoftwareDocument8 pagesUsing The Agilent Instrument Control Framework To Control The Agilent 1290 Infinity LC Through Waters Empower SoftwareMiguel Angel Rubiol GarciaNo ratings yet

- Pantech DownloaderDocument3 pagesPantech DownloaderRita HoweNo ratings yet

- Software E1 Quiz1Document13 pagesSoftware E1 Quiz1Jordan Nieva AndioNo ratings yet

- Behringer Xd80usb Behringer Xd80usb Manual de UsuarioDocument9 pagesBehringer Xd80usb Behringer Xd80usb Manual de UsuarioLuis Alfonso Sánchez MercadoNo ratings yet

- Cyber Security BusinessDocument33 pagesCyber Security BusinessEnkayNo ratings yet

- Tic Tac ToeDocument22 pagesTic Tac ToeLipikaNo ratings yet

- Configure Nexus 7000 Switches in Hands-On LabDocument2 pagesConfigure Nexus 7000 Switches in Hands-On LabDema PermanaNo ratings yet

- Object-Oriented Programming Concepts & Programming (OOCP) 2620002Document3 pagesObject-Oriented Programming Concepts & Programming (OOCP) 2620002mcabca5094No ratings yet

- A Seminar ReportDocument37 pagesA Seminar Reportanime loverNo ratings yet

- (4.0) 3609.9705.22 - DB5400 - 6179.2985.10 - VCMS - and - RRMC - Server - Specification - v0100Document16 pages(4.0) 3609.9705.22 - DB5400 - 6179.2985.10 - VCMS - and - RRMC - Server - Specification - v0100Nguyen Xuan NhuNo ratings yet

- 58 Cool Linux Hacks!Document15 pages58 Cool Linux Hacks!Weverton CarvalhoNo ratings yet

- Modern Data Strategy 1664949335Document39 pagesModern Data Strategy 1664949335Dao NguyenNo ratings yet

- VSM Recruiting-The Lean WayDocument12 pagesVSM Recruiting-The Lean Wayrinto.epNo ratings yet

- Hack I.T. - Security Through Pen - NeznamyDocument1,873 pagesHack I.T. - Security Through Pen - Neznamykevin mccormacNo ratings yet

- Application Notes BDL3245E/BDL4245E/BDL4645E: Remote Control Protocol For RJ-45 InterfaceDocument16 pagesApplication Notes BDL3245E/BDL4245E/BDL4645E: Remote Control Protocol For RJ-45 Interfacezebigbos22No ratings yet

- Alphabetical List Hierarchical ListDocument135 pagesAlphabetical List Hierarchical ListbooksothersNo ratings yet

- List of 7400 Series Integrated Circuits - Wikipedia, The Free EncyclopediaDocument11 pagesList of 7400 Series Integrated Circuits - Wikipedia, The Free EncyclopediaHermes Heli Retiz AlvarezNo ratings yet

- Improving Domino and DB2 Performance: Document Version 1Document24 pagesImproving Domino and DB2 Performance: Document Version 1grangenioNo ratings yet

- IOReturnDocument3 pagesIOReturnBrayan VillaNo ratings yet

- Erpi Admin 11123510Document416 pagesErpi Admin 11123510prakash9565No ratings yet

- Manual ZTE ZXCTN-6200-V11 20090825 EN V1Document73 pagesManual ZTE ZXCTN-6200-V11 20090825 EN V12011273434No ratings yet

- Resume of JbarshayDocument2 pagesResume of Jbarshayapi-27570233No ratings yet

- Jaya Pandey: Customer Support, Service Quality & Vendor Management - South AsiaDocument3 pagesJaya Pandey: Customer Support, Service Quality & Vendor Management - South AsiaJaya PandeyNo ratings yet

- Evan Patrick: Education ExperienceDocument1 pageEvan Patrick: Education ExperienceMargarita Urieta AsensioNo ratings yet

- Sonet/Sdh: 20.1 Review QuestionsDocument4 pagesSonet/Sdh: 20.1 Review QuestionsOso PolNo ratings yet

- CS2031 Digital Logic Design OBE AdnanDocument4 pagesCS2031 Digital Logic Design OBE Adnanseemialvi6No ratings yet

- csn10107 Lab06Document13 pagescsn10107 Lab06Liza MaulidiaNo ratings yet

- GNS3 Routing LabDocument7 pagesGNS3 Routing LabTea KostadinovaNo ratings yet

- 2011 PopCap Mobile Phone Games PresentationDocument94 pages2011 PopCap Mobile Phone Games Presentation徐德航No ratings yet