
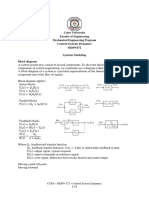

Ts1 to Ts4: Distributed Testing/Inference Scheme Process

Uploaded by ZOOM1 i4


Figure: WeScatterNet's large-scale distributed prequential test-then-train scenario in a classification task.
(A) Distributed testing/inference scheme (processes Ts1 to Ts4): the k-th data stream batch B_k, arriving without labels, is partitioned into P data groups across N_0 computing nodes; each base classifier F_1, ..., F_M of the Ensemble Network (EN) infers on every data group, the P inference outputs are concatenated, and the final ensemble output combines the predictions of the M base classifiers through their voting weights β.
(B) Distributed training scheme (processes Tr1 to Tr4): B_k is annotated and enriched by the DA3 module, the resulting (annotated + augmented + labeled) data B_k' is partitioned using the Spark platform, a local model is trained on each data group, and the P local models are fused into a new base classifier F_new. If drift occurs, F_new is stacked as a new member of EN; otherwise, F_new replaces the winning base classifier F_win, i.e. the one with the highest voting weight.
(C) Prequential test-then-train scenario of WeScatterNet, which makes use of the distributed training and testing schemes: voting-weight update and normalization, global drift detection using the one sigma rule, and ensemble pruning (if any base classifiers are removed, EN is updated), followed by training on B_k and testing on the next batch B_{k+1}. C.1: node-level inference partition with a penalty and reward mechanism (each sample assessed with the one sigma rule for classifier weight update). C.2: node-level base learner learning (evolving) scheme with local drift handling (rule growing and pruning) and FWRLS (consequent parameter estimation).
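The figure describes the inference and ensemble-update logic only at a high level: B_k is split into P data groups, every member F_1, ..., F_M of the Ensemble Network infers on each group, the P outputs are concatenated, the ensemble output is decided by voting weights β, the winning classifier F_win is the member with the highest voting weight, and a freshly trained F_new is stacked as a new member when drift occurs or otherwise replaces F_win. The following is a minimal sketch of that flow under stated assumptions, not the authors' implementation. All names (`partition`, `EnsembleNetwork`, `distributed_inference`) are hypothetical; local threads stand in for the Spark computing nodes; base classifiers are plain functions; F_new is assumed to inherit F_win's voting weight on replacement; and the penalty-and-reward (one sigma rule), pruning, drift detection and model fusion steps are omitted because the figure does not specify them.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable, List, Sequence

# Hypothetical interface: a base classifier maps one sample to a class label.
Classifier = Callable[[float], int]


def partition(batch: Sequence[float], p: int) -> List[Sequence[float]]:
    """Split a batch B_k into at most p contiguous data groups B_k^1..B_k^P."""
    size = max(1, -(-len(batch) // p))  # ceiling division, at least 1
    return [batch[i:i + size] for i in range(0, len(batch), size)]


@dataclass
class EnsembleNetwork:
    members: List[Classifier]  # base classifiers F_1..F_M
    weights: List[float]       # voting weights beta_1..beta_M

    def predict(self, x: float) -> int:
        """Weighted vote: each member adds its voting weight to the label it predicts."""
        scores: dict = {}
        for f, beta in zip(self.members, self.weights):
            label = f(x)
            scores[label] = scores.get(label, 0.0) + beta
        return max(scores, key=scores.get)

    def winner_index(self) -> int:
        """The winning base classifier F_win is the member with the highest voting weight."""
        return max(range(len(self.weights)), key=self.weights.__getitem__)

    def normalize(self) -> None:
        """Rescale the voting weights so they sum to 1."""
        total = sum(self.weights)
        self.weights = [w / total for w in self.weights]

    def update(self, f_new: Classifier, drift: bool, beta_new: float) -> None:
        """Drift: stack F_new as a new member. No drift: F_new replaces F_win.

        Assumption: on replacement, F_new inherits F_win's voting weight
        (beta_new is only used when a member is added).
        """
        if drift:
            self.members.append(f_new)
            self.weights.append(beta_new)
        else:
            self.members[self.winner_index()] = f_new
        self.normalize()


def distributed_inference(en: EnsembleNetwork, batch: Sequence[float], p: int) -> List[int]:
    """Run the ensemble on each data group in parallel, then concatenate the P outputs."""
    groups = partition(batch, p)
    # Threads stand in for computing nodes; executor.map returns results in group order.
    with ThreadPoolExecutor(max_workers=p) as pool:
        outputs = list(pool.map(lambda g: [en.predict(x) for x in g], groups))
    return [y for group_out in outputs for y in group_out]


if __name__ == "__main__":
    en = EnsembleNetwork(members=[lambda x: int(x >= 5), lambda x: 1], weights=[0.7, 0.3])
    print(distributed_inference(en, [3, 7, 1, 9], p=2))  # [0, 1, 0, 1]
    en.update(lambda x: 0, drift=True, beta_new=0.5)  # drift: stack a third member
    print(len(en.members))  # 3
```

Contiguous chunks are used so that concatenating the P group outputs restores the original sample order; in an actual Spark deployment the partitioning and per-group inference would run on the cluster rather than on local threads.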
