AN INTRODUCTORY NOTE ON MACHINE LEARNING.

A V Narasimhadhan

Department of Electronics and Communications Engineering, National Institute of Technology, Surathkal, India.

Email ID: dhansiva@nitk.edu.in

Getting a machine to perform classification, recognition, or regression is a difficult task, because the machine sees only numerical values, and those values may be similar for different objects. More precisely, in terms of features, there is a lot of variability among patterns of a single class, and there is a nonzero probability that feature vectors of patterns from different classes are arbitrarily close [1].

Because they are simple to implement, machine learning started with linear models, which are generally efficient. However, linear models are not always sufficient to fit the data [2]-[5], and nonlinear models can often fit it better. Support vector machines (SVMs), decision trees, and neural networks provide good parameterized classes of nonlinear functions for fitting data. Among these approaches, neural networks gained momentum because they have the advantage of the backpropagation algorithm [6]-[8], unlike SVMs, decision trees, and many other approaches. Moreover, high-end computing devices are now available to implement nonlinear models. Fitting is generally divided into classification and regression, depending on the usage. In classification, the output of the model takes one of two, or finitely many, values, whereas in regression the output of the model takes values on the real line; for instance, localization, filtering, denoising, and synthesis come under regression. The perceptron algorithm, the least squares method, Fisher linear discriminant analysis, and, most popularly, logistic regression are used to learn linear classifiers [9]. SVMs and neural networks, on the other hand, can realize both linear and nonlinear classifiers. All of these classifiers are approximations of the Bayes classifier [10], meaning that the error obtained with the Bayes classifier is no greater than the error obtained with any other classifier. Essentially, the Bayes classifier sets a lower bound on the error. However, the Bayes classifier is rarely used in practice, because it requires estimating the densities from the few samples available. Among the linear classifiers, logistic regression is closest in spirit to the Bayes classifier, and in some situations the two produce the same error [2]. The nonlinear SVM classifier was popular for more than a decade before the revival of the convolutional neural network. The idea of this nonlinear classifier is that the features are nonlinearly transformed into a higher-dimensional space in which the classes are linearly separable, so that a linear classifier can be applied there [1].

There are many types of neural networks, of which convolutional neural networks (CNNs) are the most popular. The first convolutional neural network, LeNet [11], appeared in 1998, but because of implementation issues it attracted little interest. With the advent of fast computing devices, convolutional neural networks came to light in 2012 with the introduction of AlexNet [12]. The main advantage of convolutional neural networks is that they remove the need to find, by hand, the features that are input to linear or nonlinear models; importantly, these networks learn through many layers, which is why they are called deep nets. Many modifications of AlexNet have been proposed that change the number of network layers. It has been observed that increasing the number of layers can reduce the accuracy of classification or regression. In more detail, a larger number of layers gives a more complicated nonlinear function to minimize. Since the optimizers use gradient descent methods, this may cause vanishing gradients, and training may end up in a poor local minimum [13]-[14]. The computation graph of the neural network allows the use of the backpropagation algorithm, which is recursive. However, research is ongoing into efficient optimizers other than gradient methods that retain the advantages of the backpropagation algorithm. In addition, research is ongoing into weight initialization, activation functions, and batch normalization techniques. Weight initialization plays an important role in training a neural network. One popular scheme is Xavier initialization, which maintains a sufficient dynamic range at each layer. The activation function also plays a crucial role in avoiding vanishing and exploding gradients. In 2016, a new architecture named the residual network (ResNet) was proposed, built on the idea that a deeper network should learn a mapping at least as good as (or equivalent to) that of the preceding network [15]-[18]. No architecture has been able to displace ResNet, as almost all later architectures are merely modifications of ResNets [19]-[20].

One of the common problems faced in machine learning is overfitting, which arises when there is insufficient data to train the machine. The remedies are either to increase the amount of data or to use a regularization term. Adding regularization is nothing but giving prior information to the machine. In the statistical sense, learning with no prior is called classical inference, and learning with a prior is called Bayesian inference [21]-[24]. In 2017, Geoffrey Hinton’s lab proposed CapsuleNet [25], which differs from all the other architectures available. Importantly, CapsuleNet does not use a pooling layer, because pooling passes only the dominant features to the next layer, with the result that the higher layers no longer have the spatial information. The advantage of this network is that it works effectively even with a limited number of samples. More precisely, parts of the network act as a regularization term [26]-[29].

The discussion so far concerns cases where the data comes with labels, that is, training data. Learning from such samples is called supervised learning. However, in most cases the data is obtained without labels, so the classifiers have to be trained without them. This type of learning is called unsupervised learning. Its goal is to uncover the underlying hidden structure of the data. Unsupervised learning includes clustering, dimensionality reduction, density estimation, etc. Some of the most commonly used algorithms in unsupervised learning are clustering, neural networks, and approaches for learning latent variable models. Before the evolution of neural networks, the popular algorithms for this kind of learning were clustering, and approaches such as expectation maximization and blind separation techniques. Blind separation techniques, namely principal component analysis, independent component analysis, non-negative matrix factorization, and singular value decomposition, are still sought after [1]-[2].

In recent years, attention has been moving from unsupervised learning to reinforcement learning. However, unsupervised learning is still a hot topic in machine learning. As noted in the previous paragraph, unsupervised learning includes density estimation. In most cases, to estimate the density, we assume a form for the density and estimate its parameters from the available data using maximum likelihood (ML) or Bayesian methods. Nonparametric methods are, of course, also popular for estimating densities. In recent years, Generative Adversarial Nets (GANs) [30] have been used to estimate the density; they rest on concepts different from those of conventional parametric and nonparametric methods. Apart from GANs, the autoregressive models PixelCNN [31] and PixelRNN [32] and variational autoencoders [33] are used to generate new samples from given samples. However, GANs are more popular since the generated samples follow the distribution of the input samples, which is not the case with PixelCNN, PixelRNN, and variational autoencoders.

Reinforcement learning (RL) lies between supervised and unsupervised learning. It is a technique that interacts with a real-time environment, unlike deep learning and other machine learning techniques, which work on pre-collected data [34]. It is analogous to how dogs and other animals are trained. Collecting data is not always easy; in such cases we can let our agent interact with the environment directly and learn from it. The basic terminology of RL consists of four terms: state, action, reward, and next state. The state describes the environment to the agent; it can be as simple as a vector or an image. An action is something the agent can perform while interacting with the environment, for example move forward, move backward, move left, or move right. The reward tells the agent whether its learning is going in the expected manner. Say we want to train a robot to walk: we cannot collect data for, or program, a robot that walks perfectly in every situation. Here we can use RL with the input image as the state and the movements of the limbs as the actions; if the robot walks as required, without falling, we give a positive reward, and otherwise a negative reward. The goal of any RL agent is to maximize its reward [34]-[37].

I. REFERENCES

[1] C. M. Bishop, “Pattern Recognition and Machine Learning”, Springer, 2006.

[2] C. M. Bishop, “Neural Networks for Pattern Recognition”, Oxford University Press, Indian Edition, 2003.

[3] R. O. Duda and P. E. Hart, “Pattern Classification and Scene Analysis”, Wiley, 1973.

[4] R. O. Duda, P. E. Hart, and D. G. Stork, “Pattern Classification”, Wiley, 2002.

[5] Vladimir Cherkassky and Filip Mulier, “Learning from Data: Concepts, Theory, and Methods”, Wiley, New York, 1998.

[6] Y. S. Abu-Mostafa, “Learning from hints in neural networks”, Journal of Complexity, 1990.

[7] C. M. Bishop, “Neural Networks for Pattern Recognition”, Oxford University Press, 1995.

[8] M. H. Hassoun, “Fundamentals of Artificial Neural Networks”, MIT Press, New York, 1995.

[9] I. Guyon and D. G. Stork, “Linear discriminant and support vector classifiers”, Advances in Large Margin Classifiers, MIT Press, Cambridge, MA, 1999.

[10] T. M. Cover and P. E. Hart, “Nearest neighbor pattern classification”, IEEE Transactions on Information Theory, 1967.

[11] Y. LeCun, L. Bottou, G. B. Orr, and K.-R. Müller, “Efficient backprop”, In Neural Networks: Tricks of the Trade, pages 9-50, Springer, 1998.

[12] A. Krizhevsky, I. Sutskever, and G. E. Hinton, “ImageNet classification with deep convolutional neural networks”, In NIPS, pp. 1106-1114, 2012.

[13] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization”, arXiv preprint arXiv:1412.6980, 2014.

[14] R. K. Srivastava, K. Greff, and J. Schmidhuber, “Training very deep networks”, In NIPS, 2015.

[15] K. He, X. Zhang, S. Ren, and J. Sun, “Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification”, In ICCV, 2015.

[16] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition”, In CVPR, 2016.

[17] K. He, X. Zhang, S. Ren, and J. Sun, “Identity mappings in deep residual networks”, In ECCV, 2016.

[18] B. M. Wilamowski and H. Yu, “Neural network learning without backpropagation”, IEEE Transactions on Neural Networks, 21(11):1793-1803, 2010.

[19] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition”, In ICLR, 2015.

[20] C. Szegedy, W. Liu, Y. Jia, P. Sermanet, S. Reed, D. Anguelov, D. Erhan, V. Vanhoucke, and A. Rabinovich, “Going deeper with convolutions”, In CVPR, 2015.

[21] Harold Jeffreys, “Theory of Probability”, Oxford University Press, Oxford, UK, 1961 reprint edition, 1939.

[22] Frederick Jelinek, “Statistical Methods for Speech Recognition”, MIT Press, Cambridge, MA, 1997.

[23] I. T. Jolliffe, “Principal Component Analysis”, Springer Verlag, New York, 1986.

[24] C. W. Therrien, “Decision, Estimation and Classification: An Introduction to Pattern Recognition and Related Topics”, Wiley, New York, 1989.

[25] Sara Sabour, Nicholas Frosst, and Geoffrey E. Hinton, “Dynamic Routing Between Capsules”, arxiv.org, 2017.

[26] Arjun Punjabi, Jonas Schmid, and Aggelos K. Katsaggelos, “Examining the Benefits of Capsule Neural Networks”, arxiv.org, 2020.

[27] Rinat Mukhometzianov and Juan Carrillo, “CapsNet comparative performance evaluation for image classification”, arxiv.org, 2018.

[28] F. Deng, S. Pu, X. Chen, Y. Shi, T. Yuan, and S. Pu, “Hyperspectral image classification with capsule network using limited training samples”, Sensors, vol. 18, no. 9, p. 3153, 2018.

[29] R. LaLonde and U. Bagci, “Capsules for object segmentation”, arXiv preprint arXiv:1804.04241, 2018.

[30] Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014.

[31] A. van den Oord, N. Kalchbrenner, O. Vinyals, L. Espeholt, A. Graves, and K. Kavukcuoglu, “Conditional image generation with PixelCNN decoders”, NIPS 2016.

[32] A. Oord, N. Kalchbrenner, and K. Kavukcuoglu, “Pixel Recurrent Neural Networks”, arxiv.org, 2016.

[33] Diederik P. Kingma and Max Welling, “Auto-Encoding Variational Bayes”, ICLR 2014.

[34] Volodymyr Mnih, Koray Kavukcuoglu, David Silver, Alex Graves, Ioannis Antonoglou, Daan Wierstra, and Martin Riedmiller, “Playing Atari with Deep Reinforcement Learning”, arxiv.org, 2013.

[35] Volodymyr Mnih, Nicolas Heess, Alex Graves, and Koray Kavukcuoglu, “Recurrent Models of Visual Attention”, arxiv.org, 2014.

[36] Hado van Hasselt, Arthur Guez, and David Silver, “Deep Reinforcement Learning with Double Q-learning”, arxiv.org, 2015.

[37] A. Rupam Mahmood, Dmytro Korenkevych, Gautham Vasan, William Ma, and James Bergstra, “Benchmarking Reinforcement Learning Algorithms on Real-World Robots”, arxiv.org, 2018.
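
As an illustration of the idea behind the nonlinear SVM discussed in this note (transform the features nonlinearly into a higher dimension where the classes become linearly separable, then apply a linear classifier), the following is a minimal sketch in Python. It is not an SVM: the perceptron algorithm mentioned in the note stands in as the linear classifier. The toy data, the map x -> (x, x^2), and all function names are assumptions made only for this illustration.

```python
# Toy illustration (all names and data are hypothetical, not from the note).
# Class +1 lies far from the origin, class -1 near it, so no single
# threshold on x separates them; after lifting to (x, x^2) a line does.

def lift(x):
    """Nonlinear map from a scalar feature to the 2-D vector (x, x^2)."""
    return (x, x * x)

def score(w, b, feats):
    """Linear score w . feats + b."""
    return sum(wi * fi for wi, fi in zip(w, feats)) + b

def train_perceptron(samples, labels, epochs=1000):
    """Perceptron rule; labels are +1 or -1. Stops after an error-free pass."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for feats, y in zip(samples, labels):
            if y * score(w, b, feats) <= 0:   # misclassified or on the boundary
                w = [wi + y * fi for wi, fi in zip(w, feats)]
                b += y
                mistakes += 1
        if mistakes == 0:
            break
    return w, b

def accuracy(w, b, samples, labels):
    """Fraction of samples whose predicted sign matches the label."""
    hits = sum(1 for feats, y in zip(samples, labels)
               if (1 if score(w, b, feats) > 0 else -1) == y)
    return hits / len(labels)

xs = [-3.0, -2.0, -0.5, 0.0, 0.5, 2.0, 3.0]
ys = [1, 1, -1, -1, -1, 1, 1]

raw = [(x,) for x in xs]          # original 1-D features
lifted = [lift(x) for x in xs]    # features after the nonlinear map

w_raw, b_raw = train_perceptron(raw, ys)
w_lift, b_lift = train_perceptron(lifted, ys)

acc_raw = accuracy(w_raw, b_raw, raw, ys)           # stays below 1.0
acc_lifted = accuracy(w_lift, b_lift, lifted, ys)   # reaches 1.0
print("accuracy on raw features:   ", acc_raw)
print("accuracy on lifted features:", acc_lifted)
```

On the raw features no linear classifier can label every point correctly, because the +1 class sits on both sides of the -1 class. On the lifted features the data is linearly separable, so the perceptron is guaranteed to converge to an error-free classifier. An SVM with a nonlinear kernel applies the same idea without computing the lifted features explicitly.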

You might also like

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeFrom EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeRating: 4 out of 5 stars4/5 (5814)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreFrom EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreRating: 4 out of 5 stars4/5 (1092)

- Never Split the Difference: Negotiating As If Your Life Depended On ItFrom EverandNever Split the Difference: Negotiating As If Your Life Depended On ItRating: 4.5 out of 5 stars4.5/5 (845)

- Grit: The Power of Passion and PerseveranceFrom EverandGrit: The Power of Passion and PerseveranceRating: 4 out of 5 stars4/5 (590)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceFrom EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceRating: 4 out of 5 stars4/5 (897)

- Shoe Dog: A Memoir by the Creator of NikeFrom EverandShoe Dog: A Memoir by the Creator of NikeRating: 4.5 out of 5 stars4.5/5 (540)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersFrom EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersRating: 4.5 out of 5 stars4.5/5 (348)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureFrom EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureRating: 4.5 out of 5 stars4.5/5 (474)

- Her Body and Other Parties: StoriesFrom EverandHer Body and Other Parties: StoriesRating: 4 out of 5 stars4/5 (822)

- The Emperor of All Maladies: A Biography of CancerFrom EverandThe Emperor of All Maladies: A Biography of CancerRating: 4.5 out of 5 stars4.5/5 (271)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)From EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Rating: 4.5 out of 5 stars4.5/5 (122)

- The Little Book of Hygge: Danish Secrets to Happy LivingFrom EverandThe Little Book of Hygge: Danish Secrets to Happy LivingRating: 3.5 out of 5 stars3.5/5 (401)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyFrom EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyRating: 3.5 out of 5 stars3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)From EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Rating: 4 out of 5 stars4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaFrom EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaRating: 4.5 out of 5 stars4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryFrom EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryRating: 3.5 out of 5 stars3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnFrom EverandTeam of Rivals: The Political Genius of Abraham LincolnRating: 4.5 out of 5 stars4.5/5 (234)

- On Fire: The (Burning) Case for a Green New DealFrom EverandOn Fire: The (Burning) Case for a Green New DealRating: 4 out of 5 stars4/5 (74)

- The Unwinding: An Inner History of the New AmericaFrom EverandThe Unwinding: An Inner History of the New AmericaRating: 4 out of 5 stars4/5 (45)

- Sap Barcode DevelopmentDocument12 pagesSap Barcode DevelopmentAbhilashNo ratings yet

- Atcd Unit 1Document58 pagesAtcd Unit 1Sudhir Kumar SinghNo ratings yet

- Computer Network Prelim To MidtermDocument33 pagesComputer Network Prelim To MidtermMary JOy BOrjaNo ratings yet

- Telecom I NationDocument3 pagesTelecom I Nationمحمد علیNo ratings yet

- Implementation Issue For Super Instructions in GforthDocument9 pagesImplementation Issue For Super Instructions in GforthvijaymathewNo ratings yet

- Image Classification Using Naïve Bayes Classifier: Dong-Chul ParkDocument5 pagesImage Classification Using Naïve Bayes Classifier: Dong-Chul ParkMuksin AlfalahNo ratings yet

- TestDocument26 pagesTestAyoub LahdoudNo ratings yet

- Fieldvue DVC5000 Series Digital Valve ControllerDocument10 pagesFieldvue DVC5000 Series Digital Valve Controllerhocine1No ratings yet

- FEFLOW - 7.3 - Internet - Licensing HOW TO - FINALDocument16 pagesFEFLOW - 7.3 - Internet - Licensing HOW TO - FINALCarolina SayagoNo ratings yet

- Calculate Client Security Hash - Walkthrough Hints PDFDocument18 pagesCalculate Client Security Hash - Walkthrough Hints PDFYessy TrottaNo ratings yet

- VpsDocument3 pagesVpsISG AixNo ratings yet

- IFN556 2023 Sem2 Assessment2Document5 pagesIFN556 2023 Sem2 Assessment2jayNo ratings yet

- Project ReportDocument31 pagesProject ReportbilalNo ratings yet

- Analysing Malicious CodeDocument196 pagesAnalysing Malicious Codeuchiha madaraNo ratings yet

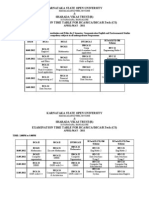

- Downloads Time Table April 2012 BCA MCA IMCA (1) FinalDocument10 pagesDownloads Time Table April 2012 BCA MCA IMCA (1) Finallovedixit1No ratings yet

- Best Ways To Create Password Reset Disk For WindowsDocument17 pagesBest Ways To Create Password Reset Disk For WindowsMarquez LumiuNo ratings yet

- JasminDocument117 pagesJasminfbd123No ratings yet

- 15CS565 Module4Document61 pages15CS565 Module4Ravi ShankarNo ratings yet

- Study Outline For Data StructureDocument73 pagesStudy Outline For Data StructuremusaNo ratings yet

- Angular - Angular Coding Style GuideDocument45 pagesAngular - Angular Coding Style GuideEdgard Joel Bejarano AcevedoNo ratings yet

- Income&expenseDocument32 pagesIncome&expensevokaNo ratings yet

- (Sem4.1) Key LoggerDocument6 pages(Sem4.1) Key LoggerElit HakimiNo ratings yet

- Case Study: Distributed Scrum Project For Dutch RailwaysDocument7 pagesCase Study: Distributed Scrum Project For Dutch RailwaysDark LordNo ratings yet

- Srs Student ManagementdocxDocument16 pagesSrs Student ManagementdocxLevko DovganNo ratings yet

- Ceragon NetMaster User Guide R15A00 Rev A.01Document614 pagesCeragon NetMaster User Guide R15A00 Rev A.01Telworks RSNo ratings yet

- VHDL Code For Full Adder PDFDocument6 pagesVHDL Code For Full Adder PDFvenkata satishNo ratings yet

- Got2000 o Mes eDocument210 pagesGot2000 o Mes eFlávio Henrique VicenteNo ratings yet

- Online Examination System Android App: Diploma in Information TechnologyDocument16 pagesOnline Examination System Android App: Diploma in Information TechnologyVed Engineering CHANo ratings yet

- Cbnetv2: A Composite Backbone Network Architecture For Object DetectionDocument11 pagesCbnetv2: A Composite Backbone Network Architecture For Object DetectionMih VicentNo ratings yet

- WWW - Referat.ro Computer - Docec656Document2 pagesWWW - Referat.ro Computer - Docec656Claudiu IlincaNo ratings yet