AI Fundamentals: Constraint Satisfaction Problems

Maria Simi

Constraint satisfaction

LESSON 3 – SEARCHING FOR SOLUTIONS

Searching for solutions

Most problems cannot be solved by constraint propagation alone. In this case we must

search for solutions or combine constraint propagation with search.

A classical formulation of CSP as search problem is the following:

1. States are partial assignments

2. Initial state: the empty assignment

3. Goal state: a complete assignment satisfying all constraints

4. Action: assign to an unassigned variable xi a value in Di

Branching factor = |D1| × |D2| × … × |Dn| ?

Assume d is the maximum domain cardinality

Top level n × d, then (n − 1) × d, …, 1 × d → n! × d^n leaves

We can do better …

02/10/17 AI FUNDAMENTALS - M. SIMI 3

CSP as search problems

We can exploit commutativity. A problem is commutative if the order of

application of any given set of actions has no effect on the outcome. In this case

the order of variable assignment does not change the result.

1. We can consider a single variable for assignment at each step, so the

branching factor is d and the number of leaves is d^n

2. We can also exploit depth limited search: backtracking search with depth

limit n, the number of variables.
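To get a feeling for the saving, the two leaf counts can be computed directly. A minimal sketch, assuming the Australia map-coloring instance (n = 7 regions, d = 3 colors); the function names are illustrative only.

```python
from math import factorial

def leaves_naive(n: int, d: int) -> int:
    """Leaves when any unassigned variable can be picked at each level: n! * d^n."""
    return factorial(n) * d ** n

def leaves_commutative(n: int, d: int) -> int:
    """Leaves when a single variable is considered at each level: d^n."""
    return d ** n

n, d = 7, 3  # assumption: map coloring of Australia, 7 regions and 3 colors
print(leaves_naive(n, d))        # 11022480
print(leaves_commutative(n, d))  # 2187
```

Exploiting commutativity removes the n! factor entirely.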

Search strategies:

Generate and Test. We generate a full solution and then we test it. Not the best.

Anticipated control. After each assignment we check the constraints; if some

constraint is violated, we backtrack to previous choices (undoing the assignment).


Backtracking search for map coloring

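[Figure: part of the backtracking search tree for map coloring. The root branches into WA=red, WA=green, WA=blue; under WA=red the branches are NT=green and NT=blue; under WA=red, NT=green the branches are Q=red and Q=blue.]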


Backtracking search algorithm
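A minimal sketch of backtracking search with anticipated control, assuming the Australia map-coloring problem with a static variable order and a fixed value order. The names NEIGHBORS, COLORS, consistent and backtrack are illustrative; this is not the pseudocode of the original slide.

```python
# Assumption: constraint graph of the Australia map-coloring problem.
NEIGHBORS = {
    "WA": ["NT", "SA"],
    "NT": ["WA", "SA", "Q"],
    "SA": ["WA", "NT", "Q", "NSW", "V"],
    "Q": ["NT", "SA", "NSW"],
    "NSW": ["Q", "SA", "V"],
    "V": ["SA", "NSW"],
    "T": [],
}
COLORS = ["red", "green", "blue"]

def consistent(var, value, assignment):
    """True if no already-assigned neighbor of var has the same color."""
    return all(assignment.get(n) != value for n in NEIGHBORS[var])

def backtrack(assignment):
    """Depth-first search over partial assignments; one variable per level."""
    if len(assignment) == len(NEIGHBORS):
        return assignment  # complete and consistent
    var = next(v for v in NEIGHBORS if v not in assignment)  # static order
    for value in COLORS:
        if consistent(var, value, assignment):
            assignment[var] = value
            result = backtrack(assignment)
            if result is not None:
                return result
            del assignment[var]  # undo the assignment and try the next value
    return None  # every value failed: backtrack to the previous choice

solution = backtrack({})
print(solution)
```

The variable and value choices are the two hooks where heuristics can be plugged in.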


Heuristics and search strategies for CSP

1. SELECT-UNASSIGNED-VARIABLE: Which variable should be assigned next?

2. ORDER-DOMAIN-VALUES: in which order should the values be tried?

3. INFERENCE: what inferences should be performed at each step in the search?

Techniques for constraint propagation (local consistency enforcement) can

be used.

4. BACKTRACKING: where to back up to? When the search arrives at an

assignment that violates a constraint, can the search avoid repeating this

failure? Forms of intelligent backtracking.


Choosing the next variable to assign

Dynamic ordering is better.

1. Minimum-Remaining-Values (MRV) heuristic: Choosing the variable with the

fewest “legal” remaining values in its domain.

Also called the most constrained variable or fail-first heuristic, because it helps in

discovering inconsistencies earlier.

2. Degree heuristic: select the variable that is involved in the largest number of

constraints on other unassigned variables. To be used when the size of the

domains is the same. The degree heuristic can be useful as a tie-breaker in

connection with MRV.

Example: which variable to choose at the beginning in the map coloring

problem?
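A possible way to combine the two heuristics, sketched under the assumption that the current domains are kept in a dictionary: MRV is the primary key and the degree heuristic breaks ties. The function name and the data layout are illustrative.

```python
# Assumption: Australia map-coloring graph, domains stored as sets.
NEIGHBORS = {
    "WA": ["NT", "SA"],
    "NT": ["WA", "SA", "Q"],
    "SA": ["WA", "NT", "Q", "NSW", "V"],
    "Q": ["NT", "SA", "NSW"],
    "NSW": ["Q", "SA", "V"],
    "V": ["SA", "NSW"],
    "T": [],
}

def select_unassigned_variable(domains, neighbors, assignment):
    """MRV first; ties broken by the largest degree on unassigned neighbors."""
    def key(v):
        degree = sum(1 for n in neighbors[v] if n not in assignment)
        return (len(domains[v]), -degree)
    return min((v for v in domains if v not in assignment), key=key)

domains = {v: {"red", "green", "blue"} for v in NEIGHBORS}
first = select_unassigned_variable(domains, NEIGHBORS, {})
print(first)  # SA: all domains have size 3, and SA has the highest degree (5)
```

At the beginning all the domains have the same size, so the degree heuristic decides.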


Choosing a value to assign

Once a variable has been selected, the algorithm must decide on the order in

which to assign values to it.

1. Least-constraining-value: prefers the value that rules out the fewest choices

for the neighboring variables in the constraint graph. The heuristic is trying to

leave the maximum flexibility for subsequent variable assignments.

Note:

In the choice of variable, a fail-first strategy helps in reducing the amount of

search by pruning larger parts of the tree earlier. In the choice of value, a fail-last

strategy works best in CSPs where the goal is to find any solution; not effective if

we are looking for all solutions or no solution exists.
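A minimal sketch of least-constraining-value ordering for map coloring, assuming inequality constraints and domains already pruned by forward checking. The scenario (WA=red, NT=green, choosing a value for Q) and all names are illustrative.

```python
# Assumption: only the part of the graph needed for the example.
NEIGHBORS = {
    "WA": ["NT", "SA"],
    "NT": ["WA", "SA", "Q"],
    "SA": ["WA", "NT", "Q", "NSW", "V"],
    "Q": ["NT", "SA", "NSW"],
    "NSW": ["Q", "SA", "V"],
    "V": ["SA", "NSW"],
    "T": [],
}

def order_domain_values(var, domains, neighbors, assignment):
    """Sort values by how many choices they rule out in unassigned neighbors."""
    def ruled_out(value):
        return sum(1 for n in neighbors[var]
                   if n not in assignment and value in domains[n])
    return sorted(domains[var], key=ruled_out)

assignment = {"WA": "red", "NT": "green"}
domains = {
    "WA": {"red"}, "NT": {"green"}, "SA": {"blue"},
    "Q": {"red", "blue"},
    "NSW": {"red", "green", "blue"},
    "V": {"red", "green", "blue"},
    "T": {"red", "green", "blue"},
}
print(order_domain_values("Q", domains, NEIGHBORS, assignment))  # ['red', 'blue']
```

Blue is tried last because it would remove the only value left for SA.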


Interleaving search and inference

• One of the simplest forms of inference/constraint propagation is Forward

Checking (FC)

• Whenever a variable X is assigned, the forward-checking process establishes

arc consistency of X for the arcs connecting neighbor nodes:

for each unassigned variable Y that is connected to X by a constraint, delete from Y ’s

domain any value that is inconsistent with the value assigned to X.

• Forward checking is a form of efficient constraint propagation and is weaker

than other forms of inference.
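Forward checking can be sketched in a few lines for the special case of inequality constraints (map coloring). This is an illustration under that assumption; forward_check and the data layout are hypothetical names, and the function returns a pruned copy of the domains, or None when a domain becomes empty.

```python
import copy

# Assumption: Australia map-coloring graph.
NEIGHBORS = {
    "WA": ["NT", "SA"],
    "NT": ["WA", "SA", "Q"],
    "SA": ["WA", "NT", "Q", "NSW", "V"],
    "Q": ["NT", "SA", "NSW"],
    "NSW": ["Q", "SA", "V"],
    "V": ["SA", "NSW"],
    "T": [],
}

def forward_check(var, value, domains, neighbors, assignment):
    """Assign var=value and delete value from each unassigned neighbor's domain."""
    new_domains = copy.deepcopy(domains)
    new_domains[var] = {value}
    for n in neighbors[var]:
        if n not in assignment:
            new_domains[n].discard(value)
            if not new_domains[n]:
                return None  # domain wipe-out: this assignment cannot be extended
    return new_domains

start = {v: {"r", "g", "b"} for v in NEIGHBORS}
d1 = forward_check("WA", "r", start, NEIGHBORS, {})
d2 = forward_check("Q", "g", d1, NEIGHBORS, {"WA": "r"})
d3 = forward_check("V", "b", d2, NEIGHBORS, {"WA": "r", "Q": "g"})
print(d2["NT"], d2["SA"], d3)  # {'b'} {'b'} None
```

After WA = r and Q = g, both NT and SA are left with {b}, but forward checking does not notice that they are adjacent; the failure only shows up when V = b empties the domain of SA.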


FC applied to map coloring

[Figure: forward checking on the Australia map-coloring graph after the assignments WA = r, Q = g, V = b. The domains shrink step by step: NT to {b}, NSW to {r}, SA to { }, while T keeps {r g b}.]


The same example showing the progress


Maintaining Arc Consistency (MAC)

The inference procedure calls AC-3, but instead of a queue of all arcs in the CSP, we start with only the arcs (Xj, Xi) for all Xj that are unassigned variables that are neighbors of Xi.

[Figure: the Australia map-coloring graph after WA = r and Q = g, with the domains of the remaining variables.]
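A compact sketch of MAC for map coloring, assuming inequality constraints only. The functions revise and mac are illustrative names; mac mutates the domains in place and returns False on a domain wipe-out.

```python
from collections import deque

# Assumption: Australia map-coloring graph.
NEIGHBORS = {
    "WA": ["NT", "SA"],
    "NT": ["WA", "SA", "Q"],
    "SA": ["WA", "NT", "Q", "NSW", "V"],
    "Q": ["NT", "SA", "NSW"],
    "NSW": ["Q", "SA", "V"],
    "V": ["SA", "NSW"],
    "T": [],
}

def revise(domains, xj, xi):
    """Drop values of xj that have no different value available in xi."""
    removed = {a for a in domains[xj] if all(a == b for b in domains[xi])}
    domains[xj] -= removed
    return bool(removed)

def mac(domains, neighbors, xi, assignment):
    """AC-3 started from the arcs (Xj, Xi), Xj an unassigned neighbor of Xi."""
    queue = deque((xj, xi) for xj in neighbors[xi] if xj not in assignment)
    while queue:
        xj, xk = queue.popleft()
        if revise(domains, xj, xk):
            if not domains[xj]:
                return False
            # the domain of xj changed: recheck the arcs pointing to xj
            queue.extend((xm, xj) for xm in neighbors[xj]
                         if xm != xk and xm not in assignment)
    return True

domains = {v: {"r", "g", "b"} for v in NEIGHBORS}
assignment = {"WA": "r"}
domains["WA"] = {"r"}
ok_after_wa = mac(domains, NEIGHBORS, "WA", assignment)
assignment["Q"] = "g"
domains["Q"] = {"g"}
ok_after_q = mac(domains, NEIGHBORS, "Q", assignment)
print(ok_after_wa, ok_after_q)  # True False
```

After WA = r and Q = g, both NT and SA are reduced to {b}; since they are adjacent, propagation empties one of them and the inconsistency is found before any further assignment.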


Chronological backtracking

§ With normal/chronological backtracking with ordering Q, NSW, V, T, SA, WA, NT.

§ Suppose we have assigned {Q=red, NSW=green, V=blue, T=red} and we consider a value for SA.

§ We fail repeatedly; in fact no value is good in this situation.

§ Chronological backtracking tries all the values for Tasmania, the last variable assigned, but we continue to fail. Thrashing behavior.

§ Tasmania has nothing to do with SA, nor with the other variables.

[Figure: the Australia constraint graph, annotated with the domain {r, b, g}.]

Intelligent backtracking: looking backward

The trick is to consider alternative values only for the assigned variables which are responsible for the violation of constraints: the conflict set. In this case {Q, NSW, V}.

The backjumping method backtracks to the most recent assignment in the conflict set; in this case, backjumping would jump over Tasmania and try a new value for V.

This strategy is not useful if we do FC or MAC, as inconsistency is detected earlier.

[Figure: the Australia constraint graph, annotated with the domain {r, b, g}.]

Conflict directed backjumping

Assume ordering WA, NSW, T, NT, Q, V, SA.

WA = red, NSW = red and T = red.

NT, Q, V, SA together do not have solutions. Where to backjump if we discover inconsistency at NT?

NSW is the last responsible. The conflict set of NT, {WA}, does not help.

Rule for computing a more informative conflict set:

If every possible value for Xj fails, backjump to the most recent variable Xi in conf(Xj), and update its conflict set:

conf(Xi) ← conf(Xi) ∪ conf(Xj) − {Xi}

[Figure: the Australia constraint graph.]

Computing conflict sets

1. When SA fails, its conflict set is {WA, NSW, NT, Q, V}. We backjump to V. V’s conflict set is extended with that of SA and becomes: {WA, NSW, NT, Q}.

2. When V fails, we backjump to Q. Q’s conflict set is extended with that of V and becomes: {WA, NSW, NT}.

3. When Q fails, we backjump to NT and extend its conflict set {WA} to {WA, NSW}.

4. When NT also fails, we can backjump to NSW and update its conflict set to {WA}.

Conflict sets along the ordering (initial → updated):

WA=red: conf = {}

NSW=red: conf = {} → {WA}

T=red: conf = {}

NT: conf = {WA} → {WA, NSW}

Q: conf = {NSW, NT} → {WA, NSW, NT}

V: conf = {NSW} → {WA, NSW, NT, Q}

SA: conf = {WA, NSW, NT, Q, V}
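The update rule conf(Xi) ← conf(Xi) ∪ conf(Xj) − {Xi} can be replayed on this example. A minimal sketch, assuming the initial conflict sets listed above and that each variable reached by a backjump fails in turn; ORDER, conf and backjump are illustrative names.

```python
ORDER = ["WA", "NSW", "T", "NT", "Q", "V", "SA"]  # assignment order

# Assumption: initial conflict sets as given in the example.
conf = {
    "WA": set(), "NSW": set(), "T": set(),
    "NT": {"WA"}, "Q": {"NSW", "NT"}, "V": {"NSW"},
    "SA": {"WA", "NSW", "NT", "Q", "V"},
}

def backjump(xj):
    """Jump to the most recent variable in conf(Xj) and merge the conflict sets."""
    xi = max(conf[xj], key=ORDER.index)
    conf[xi] = (conf[xi] | conf[xj]) - {xi}
    return xi

trace = []
failed = "SA"
for _ in range(4):  # the four steps of the example
    failed = backjump(failed)
    trace.append((failed, sorted(conf[failed], key=ORDER.index)))

for target, conflict_set in trace:
    print(target, conflict_set)
# V ['WA', 'NSW', 'NT', 'Q']
# Q ['WA', 'NSW', 'NT']
# NT ['WA', 'NSW']
# NSW ['WA']
```

Tasmania never enters any conflict set, so it is always jumped over.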


Constraint learning

When the search arrives at a contradiction, we know that some subset of the

conflict set is responsible for the problem.

Constraint learning is the idea of finding a minimum set of variables from the

conflict set that causes the problem.

This set of variables, along with their corresponding values, is called a no-good.

We record the no-good, either by adding a new constraint to the CSP or by keeping a separate cache of no-goods. This way, when we encounter a no-good state again, we do not need to repeat the computation.

The state {WA = red, NT = green, Q = blue} is a no-good: it leaves no legal value for SA. We avoid repeating that assignment.


Local search methods

§ They require a complete state formulation of the problem: all the elements of

the solution in the current state. For CSP a complete assignment.

§ They keep in memory only the current state and try to improve it, iteratively.

§ They do not guarantee that a solution is found even if it exists (they are not

complete). They cannot be used to prove that a solution does not exist.

To be used when:

§ The search space is too large for a systematic search and we need to be very

efficient in time and space.

§ We need to provide a solution but it is not important to produce the set of

actions leading to it (the solution path).

§ We know in advance that solutions exist.


Local search methods for CSP

Local methods use a complete-state formulation: we start with a complete

random assignment and we try to fix it until all the constraints are satisfied.

Local methods prove quite effective in large scale real problems where the

solutions are densely distributed in the space such as, for example the n-Queens

problem.

N-Queens as a CSP:

Qi: position of i-th queen in the i-th column of the board

Di: {1 … n}

Constraints are “non-attack” constraints among pairs of queens.

Initial state: a random configuration

Action: change the value of one queen, i.e. move a queen within its column.


A basic algorithm for local search [AIFCA]

function Local_search(V, Dom, C) returns a complete & consistent assignment
  Inputs:
    V: a set of variables
    Dom: a function such that Dom(X) is the domain of variable X
    C: set of constraints to be satisfied
  Local: A, an array of values indexed by variables in V, the assignment
  repeat until termination
    for each variable X in V do   # random initialization or random restart
      A[X] := a random value in Dom(X)
    while not stop_walk() & A is not a satisfying assignment do   # local search
      Select a variable Y and a value w ∈ Dom(Y)   # consider successors
      Set A[Y] := w
    if A is a satisfying assignment then
      return A

stop_walk() implements some stopping criterion.
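A possible Python rendering of this scheme, as a sketch under stated assumptions: the stopping criterion is a fixed step budget, successors are chosen purely at random, and the example problem is the Australia map coloring. The parameters max_tries and max_steps and the helper satisfied are not part of the original algorithm.

```python
import random

# Assumption: Australia map-coloring graph as the example CSP.
NEIGHBORS = {
    "WA": ["NT", "SA"],
    "NT": ["WA", "SA", "Q"],
    "SA": ["WA", "NT", "Q", "NSW", "V"],
    "Q": ["NT", "SA", "NSW"],
    "NSW": ["Q", "SA", "V"],
    "V": ["SA", "NSW"],
    "T": [],
}
COLORS = ["red", "green", "blue"]

def satisfied(a):
    """All constraints hold: adjacent regions have different colors."""
    return all(a[x] != a[y] for x in NEIGHBORS for y in NEIGHBORS[x])

def local_search(variables, dom, is_solution, max_tries=100, max_steps=1000,
                 rng=random):
    for _ in range(max_tries):                          # repeat until termination
        a = {x: rng.choice(dom(x)) for x in variables}  # random (re)start
        for _ in range(max_steps):                      # stop_walk: step budget
            if is_solution(a):
                return a
            y = rng.choice(variables)                   # select a variable ...
            a[y] = rng.choice(dom(y))                   # ... and a value
    return None  # budget exhausted; says nothing about unsatisfiability

solution = local_search(list(NEIGHBORS), lambda x: COLORS, satisfied,
                        rng=random.Random(0))
print(solution)
```

Setting max_steps to 1 approximates random sampling, while a single try with a very large step budget approximates a pure random walk.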


Specializations of the algorithm

§ Extreme cases:

ü Random sampling: no walking is done to improve solution (stop_walk is always

true), just generating random assignments and testing them

ü Random walk: no restarting is done (stop_walk is always false)

§ Family of algorithms:

ü Iterative best improvement: choose the successor that most improves the current state according to an evaluation function f (hill-climbing, greedy ascent/descent). In CSP, f = number of violated constraints or conflicts. We stop when f = 0 or when we cycle.

ü Randomness can be used to escape local minima:

- Random restart is a global random move

- Random walk is a local random move.

ü Stochastic local search algorithms combine Iterative best improvement with

randomness


Variants of stochastic local search

Algorithms differ in how much work they require to guarantee the best

improvement step. Which successor to select?

§ Most improving choice

selects a variable–value pair that makes the best improvement. If there are many

such pairs, one is chosen at random. Needs to evaluate all of them.

§ Two stage choice

1. Select the variable that participates in most conflicts

2. Select the value that minimizes conflicts or a random value

§ Any choice

1. Choose a conflicting variable at random/choose a conflict and a variable within it

2. Select the value that minimizes conflicts or a random value


Min-conflict heuristics

All the local search techniques are candidates for application to CSPs, and some

have proved especially effective.

Min-conflict heuristics is widely used and also quite simple:

• Select a variable at random among conflicting variables

• Select the value that results in the minimum number of conflicts with other

variables.


Min-conflicts algorithm
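A minimal sketch of min-conflicts applied to n-Queens, with one queen per column as in the CSP formulation used earlier. The names conflicts and min_conflicts, the max_steps budget and the random tie-breaking are assumptions of this sketch, not the pseudocode of the original slide.

```python
import random

def conflicts(queens, col, row):
    """Number of queens in other columns attacking the square (col, row)."""
    return sum(
        1 for c, r in enumerate(queens)
        if c != col and (r == row or abs(r - row) == abs(c - col))
    )

def min_conflicts(n, max_steps=10_000, rng=random):
    queens = [rng.randrange(n) for _ in range(n)]  # complete random assignment
    for _ in range(max_steps):
        conflicted = [c for c in range(n) if conflicts(queens, c, queens[c]) > 0]
        if not conflicted:
            return queens  # no violated constraint: solution found
        col = rng.choice(conflicted)  # random conflicting variable
        counts = [conflicts(queens, col, row) for row in range(n)]
        best = min(counts)
        # value with the minimum number of conflicts, ties broken at random
        queens[col] = rng.choice([row for row in range(n) if counts[row] == best])
    return None  # step budget exhausted

print(min_conflicts(8, rng=random.Random(0)))
```

The result is a list where position i holds the row of the queen in column i, or None if the step budget runs out.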


Min-conflicts in action

The run time of min-conflicts is roughly independent of problem size.

It solves even the million-queens problem in an average of 50 steps.

Local search: improvements

The landscape of a CSP under the min-conflicts heuristic usually has a series of

plateaux. Possible improvements are:

§ Tabu search: local search has no memory; the idea is keeping a small list of the last t

steps and forbidding the algorithm to change the value of a variable whose value was

changed recently; this is meant to prevent cycling among variable assignments.

§ Constraint weighting:

can help concentrate the search on the important constraints. We assign a numeric

weight to each constraint, which is incremented each time the constraint is violated.

The goal is to minimize the weights of the violated constraints.


Local search: alternatives (see AIMA)

§ Simulated annealing

a technique for allowing downhill moves at the beginning of the algorithm

and slowly freezing this possibility as the algorithm progresses

§ Population based methods (inspired by biological evolution):

ü Local beam search: proceed with the k-best successors (k = the beam

width) according to the evaluation function.

ü Stochastic local beam search: selects k of the individuals at random with a

probability that depends on the evaluation function; the individuals with

a better evaluation are more likely to be chosen.

ü Genetic algorithms …

Local search methods and online search

Another advantage of local search methods is that they can be used in an online

setting when the problem changes dynamically.

Consider complex flight schedules: a problem due to bad weather at one airport can render the schedule infeasible and require a rescheduling.

Local search methods are more effective here, as they try to repair the schedule with minimum variations, instead of producing from scratch a new schedule, which might be very different from the previous one.

Evaluating Randomized Algorithms

§ Randomized algorithms are difficult to evaluate since they give a different

result and a different run time each time they are run. They must be run

several times.

§ Taking the average run time or median run time is ill defined. When the

algorithm runs forever what do we do?

§ One way to evaluate (or compare) algorithms for a particular problem instance

is to visualize the run-time distribution, which shows the number of runs

solved by the algorithm as a function of the number of steps (or run time)

employed.


Run time distribution [AIFCA]


Increasing the probabilities of success

§ A randomized algorithm that succeeds some of the time can be extended to an

algorithm that succeeds more often by running it multiple times, using a random

restart.

§ An algorithm that succeeds with probability p, that is run n times or until a solution is

found, will find a solution with probability

1 − (1 − p)^n

Note: (1 − p)^n is the probability of failing n times. Each attempt is independent.

§ Examples:

An algorithm with p=0.5 of success, tried 5 times, will find a solution around 96.9%

of the time; tried 10 times it will find a solution 99.9% of the time.

An algorithm with p=0.1, running it 10 times will succeed 65% of the time, and

running it 44 times will give a 99% success rate.
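The figures in these examples can be checked with a few lines of code. A small sketch, assuming independent runs; the function names are illustrative.

```python
import math

def success_probability(p: float, n: int) -> float:
    """Probability that at least one of n independent runs succeeds."""
    return 1 - (1 - p) ** n

def runs_needed(p: float, target: float) -> int:
    """Smallest n such that 1 - (1 - p)^n >= target."""
    return math.ceil(math.log(1 - target) / math.log(1 - p))

print(f"{success_probability(0.5, 5):.3f}")   # 0.969
print(f"{success_probability(0.5, 10):.3f}")  # 0.999
print(f"{success_probability(0.1, 10):.2f}")  # 0.65
print(runs_needed(0.1, 0.99))                 # 44
```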


Constraint Satisfaction

LESSON 3: THE STRUCTURE OF PROBLEMS

Independent sub-problems

The structure of the problem, as represented by the constraint graph, can be

used to find solutions quickly. We will examine problems with a specific

structure and strategies for improving the process of finding a solution.

The first obvious case is that of independent subproblems.

In the map coloring example, Tasmania is not connected to the mainland; coloring Tasmania and coloring the mainland are independent sub-problems: any solution for the mainland combined with any solution for Tasmania yields a solution for the whole map.

Each connected component of the constraint graph corresponds to a sub-problem CSPi.

If assignment Si is a solution of CSPi, then ⋃i Si is a solution of ⋃i CSPi.

Independent sub-problems: complexity

The saving in computational time is relevant

§ n # variables

§ c # variables for sub-problems

§ d size of the domain

§ n/c independent problems

§ O(d^c) complexity of solving one

§ O(d^c · n/c) linear on the number of variables n rather than O(d^n) exponential!

Dividing a Boolean CSP with 80 variables into four sub-problems reduces the worst-case solution time from the lifetime of the universe down to less than a second!!!

[Figure: the Australia constraint graph; T is disconnected from the mainland.]
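The Boolean example can be made concrete by counting assignments in the worst case. A small sketch; the function name is illustrative, and the timing claim itself depends on the machine and is not computed here.

```python
def worst_case_assignments(n, d, c=None):
    """d^n for the whole problem, or (n / c) * d^c with sub-problems of size c."""
    if c is None:
        return d ** n
    return (n // c) * d ** c

whole = worst_case_assignments(80, 2)      # 2^80, about 1.2e24
split = worst_case_assignments(80, 2, 20)  # 4 * 2^20
print(split)           # 4194304
print(whole // split)  # reduction factor, 2^58
```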

The structure of problems: trees

a) In a tree-structured constraint graph, any two nodes are connected by only one path; we can choose any variable as the root of the tree (A in fig. (b)).

b) Once a variable is chosen as the root, the tree induces a topological sort on the variables. Children of a node are listed after their parent.

Directed Arc Consistency (DAC)

A CSP is defined to be directed arc-consistent under an ordering of variables X1,

X2, … Xn if and only if every Xi is arc-consistent with each Xj for j > i.

We can make a tree-like graph directed arc-consistent in one pass over the n variables; each step must compare up to d possible domain values for two variables, for a total time of O(n d^2).

Tree-CSP-solver:

1. Proceeding from Xn to X2, make each arc Xi → Xj, with Xi the parent of Xj, directed arc-consistent by reducing the domain of Xi, if necessary. It can be done in one pass.

2. Proceeding from X1 to Xn, assign values to variables; no need for backtracking since each value for a parent has at least one legal value for the child.

Tree-CSP-Solver algorithm
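The two passes can be sketched as follows, assuming binary constraints given as a predicate allowed(parent_value, child_value) and a topological order with the root first. The example is the mainland of the map-coloring problem after fixing SA = red, which leaves the chain WA, NT, Q, NSW, V; all names are illustrative and this is not the pseudocode of the original slide.

```python
def tree_csp_solver(order, parent, domains, allowed):
    """order: variables, root first; parent[v]: parent of every non-root v."""
    domains = {v: list(vals) for v, vals in domains.items()}
    # Pass 1, from Xn down to X2: make each arc parent -> child consistent.
    for child in reversed(order[1:]):
        p = parent[child]
        domains[p] = [a for a in domains[p]
                      if any(allowed(a, b) for b in domains[child])]
        if not domains[p]:
            return None  # no solution
    # Pass 2, from X1 to Xn: assign values without backtracking.
    assignment = {}
    for v in order:
        if v == order[0]:
            candidates = domains[v]
        else:
            candidates = [b for b in domains[v]
                          if allowed(assignment[parent[v]], b)]
        if not candidates:
            return None  # can only happen when the root domain is empty
        assignment[v] = candidates[0]
    return assignment

# Assumption: SA = red has been fixed and red removed from its neighbors.
order = ["WA", "NT", "Q", "NSW", "V"]
parent = {"NT": "WA", "Q": "NT", "NSW": "Q", "V": "NSW"}
domains = {v: ["green", "blue"] for v in order}
solution = tree_csp_solver(order, parent, domains, lambda a, b: a != b)
print(solution)
# {'WA': 'green', 'NT': 'blue', 'Q': 'green', 'NSW': 'blue', 'V': 'green'}
```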

Reducing graphs to trees

[Figure: the Australia constraint graph with SA (left) and with SA removed (right).]

If we could delete South Australia, the graph would become a tree.

This can be done by fixing a value for SA (which one in this case does not matter)

and removing inconsistent values from the other variables.

Cutset conditioning

In general we must apply a domain splitting strategy, trying with different

assignments:

1. Choose a subset S of the CSP’s variables such that the constraint graph

becomes a tree after removal of S. S is called a cycle cutset.

2. For each possible consistent assignment to the variables in S :

a. remove from the domains of the remaining variables any values that are

inconsistent with the assignment for S

b. If the remaining CSP has a solution, return it together with the assignment for S.

Time complexity: O(d^c · (n − c) d^2)

where c is the size of the cycle cutset and d the size of the domain

We have to try each of the d^c combinations of values for the variables in S, and for each combination we must solve a tree problem of size (n − c).

Tree decomposition

The approach consists in a tree

decomposition of the constraint graph

into a set of connected sub-problems.

Each sub-problem is solved

independently, and the resulting

solutions are then combined in a clever

way

Properties of a tree decomposition

A tree decomposition must satisfy the following three requirements:

1. Every variable in the original problem appears in at least one of the sub-problems.

2. If two variables are connected by a constraint in the original problem, they must

appear together (along with the constraint) in at least one of the sub-problems.

3. If a variable appears in two sub-problems in the tree, it must appear in every

subproblem along the path connecting those sub-problems.

Conditions 1-2 ensure that all the variables and constraints are represented in the

decomposition.

Condition 3 reflects the constraint that any given variable must have the same value in

every sub-problem in which it appears; the links joining subproblems in the tree enforce

this constraint.

Solving a decomposed problem

§ We solve each sub-problem independently. If any problem has no solution, the

original problem has no solution.

§ Putting solutions together. We solve a meta-problem defined as follows:

§ Each sub-problem is a “mega-variable” whose domain is the set of all solutions for the sub-problem

Ex. Dom(X1) = {⟨WA=r, SA=b, NT=g⟩, ...} the 6 solutions to the first subproblem

§ The constraints ensure that the subproblem solutions assign the same values to the variables they share.

Ideally we should find, among the many possible ones, a tree decomposition with minimal tree width (the size of the largest subproblem − 1). This is NP-hard.

If w is the minimum tree width of the possible tree decompositions, the complexity is O(n d^(w+1)).

Symmetry

Symmetry is an important factor for reducing the complexity of CSP problems.

Value symmetry: the specific value does not really matter.

§ WA, NT, and SA must all have different colors, but there are 3! = 6 ways to satisfy that. If S is a solution to the map coloring with n colors, there are n! solutions formed by permuting the color names.

Symmetry-breaking constraint:

§ we might impose an arbitrary ordering constraint, NT < SA < WA, that requires the

three values to be in alphabetical order; we get only one solution out of the 6.
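The effect of the ordering constraint can be checked by enumeration. A small sketch restricted to the three mutually adjacent regions WA, NT and SA; the color names and their alphabetical comparison as strings are assumptions of the example.

```python
from itertools import product

COLORS = ["blue", "green", "red"]

# All assignments where WA, NT and SA have pairwise different colors.
solutions = [
    dict(zip(("WA", "NT", "SA"), combo))
    for combo in product(COLORS, repeat=3)
    if len(set(combo)) == 3
]

# Symmetry-breaking constraint NT < SA < WA (alphabetical order on color names).
ordered = [s for s in solutions if s["NT"] < s["SA"] < s["WA"]]

print(len(solutions))  # 6
print(ordered)         # [{'WA': 'red', 'NT': 'blue', 'SA': 'green'}]
```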

§ In practice, breaking value symmetry has proved to be important and

effective on a wide range of problems.

§ Symmetry is an important area of research in CSP.

Conclusions

ü We have seen how to search efficiently for solutions:

ü Algorithms for local stochastic search in large state spaces

ü How we can exploit the structure of the problem

ü CSP is a large field of study: many more things could be presented

Your turn

ü Review “population based methods” for CSP.

ü Apply search techniques to the solution of some problem.

ü Find a problem that can be solved with tree search and solve it with the tree

search algorithm

ü…

References

[AIMA] Stuart J. Russell and Peter Norvig. Artificial Intelligence: A Modern Approach (3rd edition). Pearson Education, 2010. (Ch. 6)

[AIFCA] David L. Poole and Alan K. Mackworth. Artificial Intelligence: Foundations of Computational Agents (2nd edition). Cambridge University Press, 2017. http://artint.info/2e/html/ArtInt2e.html (Ch. 4)

[Tsang] Edward Tsang. Foundations of Constraint Satisfaction. Computation in Cognitive Science. Elsevier Science, Kindle edition, 2014.

02/10/17 AI FUNDAMENTALS - M. SIMI 47

You might also like

- NPDA Problem Solution ManualDocument10 pagesNPDA Problem Solution ManualactayechanthuNo ratings yet

- Problemas de Satisfacción de RestriccionesDocument40 pagesProblemas de Satisfacción de RestriccionesCamilo IsazaNo ratings yet

- Constraint Satisfaction ProblemsDocument40 pagesConstraint Satisfaction Problemsapi-19801502No ratings yet

- Constraint Satisfaction ProblemsDocument43 pagesConstraint Satisfaction ProblemsNah Not todayNo ratings yet

- Sol 101204Document4 pagesSol 101204ciuciu.denis.2023No ratings yet

- Homework Four Solution - CSE 355Document10 pagesHomework Four Solution - CSE 355Don El SharawyNo ratings yet

- Greedy Strategy: Algorithm: Design & AnalysisDocument26 pagesGreedy Strategy: Algorithm: Design & AnalysisastercsNo ratings yet

- TQC SMDocument79 pagesTQC SMAaron ChanNo ratings yet

- GeorgiaTech CS-6515: Graduate Algorithms: EXAM3 Flashcards by Daniel Conner - BrainscapeDocument10 pagesGeorgiaTech CS-6515: Graduate Algorithms: EXAM3 Flashcards by Daniel Conner - BrainscapeJan_SuNo ratings yet

- Constraint Satisfaction Problems: Section 1 - 3Document36 pagesConstraint Satisfaction Problems: Section 1 - 3Anonymous 2C9l3iQNo ratings yet

- CSPDocument9 pagesCSPSrinivas VemuriNo ratings yet

- Constraint Satisfaction ProblemsDocument35 pagesConstraint Satisfaction Problemsরাফিদ আহমেদNo ratings yet

- 2G1505 Automata Theory: Oo OoDocument3 pages2G1505 Automata Theory: Oo Oociuciu.denis.2023No ratings yet

- Solution To CS 5104 Take-Home QuizDocument2 pagesSolution To CS 5104 Take-Home QuizkhawarbashirNo ratings yet

- 2D1371 Automata TheoryDocument4 pages2D1371 Automata Theoryciuciu.denis.2023No ratings yet

- Lecture 10 - Chomsky Normal FormDocument75 pagesLecture 10 - Chomsky Normal FormAnutaj NagpalNo ratings yet

- A General Introduction To Artificial IntelligenceDocument58 pagesA General Introduction To Artificial IntelligenceÁnh PhạmNo ratings yet

- Constraint Satisfaction Problems: Unit 3Document40 pagesConstraint Satisfaction Problems: Unit 3srilakshmisiriNo ratings yet

- 05 CSPDocument28 pages05 CSPNada ElokabyNo ratings yet

- Product ConvergenceDocument6 pagesProduct ConvergenceSalvador Sánchez PeralesNo ratings yet

- NP CompleteDocument10 pagesNP Complete李葵慶No ratings yet

- CSC2105: Algorithms Greedy Graph AlgorithmDocument39 pagesCSC2105: Algorithms Greedy Graph Algorithmтнє SufiNo ratings yet

- 2G1505 Automata TheoryDocument3 pages2G1505 Automata Theoryciuciu.denis.2023No ratings yet

- AI-Lecture 7 (Constraint Satisfaction Problems)Document60 pagesAI-Lecture 7 (Constraint Satisfaction Problems)Yna ForondaNo ratings yet

- Partially Supported by CNPQ E-Mail: Dalmazi@Grt0 /0 /0Document13 pagesPartially Supported by CNPQ E-Mail: Dalmazi@Grt0 /0 /0tareghNo ratings yet

- cs188 Fa22 Lec04Document50 pagescs188 Fa22 Lec04Yasmine Amina MoudjarNo ratings yet

- Tensegrıty and Math - Lectures On Rigidity - Connelly 2014 - Lec 1Document23 pagesTensegrıty and Math - Lectures On Rigidity - Connelly 2014 - Lec 1Leonid BlyumNo ratings yet

- P' To Node V: CT2-Answer Key Set-A-Batch-2Document9 pagesP' To Node V: CT2-Answer Key Set-A-Batch-2Ch Rd ctdNo ratings yet

- Johnsons Algorithm Brilliant Math Amp Science WikiDocument6 pagesJohnsons Algorithm Brilliant Math Amp Science WikiClaude EckelNo ratings yet

- Monkhorst Pack2Document2 pagesMonkhorst Pack2Juan Jose Nava SotoNo ratings yet

- Constraint Satisfaction ProblemsDocument49 pagesConstraint Satisfaction ProblemsPoonam KumariNo ratings yet

- Bölüm 10 Çalışma SorularıDocument26 pagesBölüm 10 Çalışma SorularıBuse Nur ÇelikNo ratings yet

- 1-4 Gradually Varied FlowDocument18 pages1-4 Gradually Varied FlowujjancricketNo ratings yet

- ACOPF-Based TNEP Using GWOADocument8 pagesACOPF-Based TNEP Using GWOADivya RajoriaNo ratings yet

- Asg 02Document3 pagesAsg 02Tomas TankosNo ratings yet

- Formal Chapter 4Document42 pagesFormal Chapter 4Ebisa KebedeNo ratings yet

- E463 PDFDocument13 pagesE463 PDFJuan Alex CayyahuaNo ratings yet

- EECE 338 - Course SummaryDocument16 pagesEECE 338 - Course Summary. .No ratings yet

- Automata Theory: Lecture 8, 9Document20 pagesAutomata Theory: Lecture 8, 9Badar Rasheed ButtNo ratings yet

- Horospherical Limit Points of S-Arithmetic Groups: Dave Witte Morris and Kevin WortmanDocument8 pagesHorospherical Limit Points of S-Arithmetic Groups: Dave Witte Morris and Kevin Wortmanmujo mujkicNo ratings yet

- Constraint Satisfaction Problems: Section 1 - 3Document44 pagesConstraint Satisfaction Problems: Section 1 - 3Tariq IqbalNo ratings yet

- Acfrogbp63ucyfdbq8ssvzkdpwlzecc3rwypbwtssidbjukf Irwdruo2woiqlov Ggrf1pzkuhflayjbetalxxtis0thg Ah0u2ktkrzimu0luh5ui96 Rq897mhgyyvawucxx26qnifvk5ronfDocument2 pagesAcfrogbp63ucyfdbq8ssvzkdpwlzecc3rwypbwtssidbjukf Irwdruo2woiqlov Ggrf1pzkuhflayjbetalxxtis0thg Ah0u2ktkrzimu0luh5ui96 Rq897mhgyyvawucxx26qnifvk5ronfFaith Niña Marie A. MAGSAELNo ratings yet

- Constraint Satisfaction Problems IIDocument35 pagesConstraint Satisfaction Problems IIHuyCườngNo ratings yet

- Chapter 9Document4 pagesChapter 9cicin8190No ratings yet

- Resolution in Solving Graph ProblemsDocument15 pagesResolution in Solving Graph ProblemsVkingNo ratings yet

- 16smbemm2-1-Apr 2020Document4 pages16smbemm2-1-Apr 2020esanjai510No ratings yet

- Bisimulation Up-To: Up To Bisimilarity (Mil83) - We Show That This Is Compatible Whenever The BehaviourDocument24 pagesBisimulation Up-To: Up To Bisimilarity (Mil83) - We Show That This Is Compatible Whenever The BehaviourVictor Hugo Males BravoNo ratings yet

- DSP Chapter-6Document24 pagesDSP Chapter-6AbhirajsinhNo ratings yet

- Analytic Resum BenchmarkingDocument5 pagesAnalytic Resum BenchmarkingFrancescoCoradeschiNo ratings yet

- Module 1: Assignment 1: January 4, 2022Document7 pagesModule 1: Assignment 1: January 4, 2022aditya bNo ratings yet

- ch08 AnsDocument20 pagesch08 Ansrifdah abadiyahNo ratings yet

- Tut-3 SolutionsDocument3 pagesTut-3 SolutionsChirag ahjdasNo ratings yet

- AI-Lecture 7 (Constraint Satisfaction Problem)Document80 pagesAI-Lecture 7 (Constraint Satisfaction Problem)Braga Gladys MaeNo ratings yet

- AI-Lecture 7 (Constraint Satisfaction Problem)Document80 pagesAI-Lecture 7 (Constraint Satisfaction Problem)Braga Gladys MaeNo ratings yet

- Trefethen ResistDocument13 pagesTrefethen ResistMohakNo ratings yet

- DD2371 Automata TheoryDocument5 pagesDD2371 Automata Theoryciuciu.denis.2023No ratings yet

- Phys 1214 Spring 2015 Midterm SolutionsDocument5 pagesPhys 1214 Spring 2015 Midterm SolutionsHimanshu SharmaNo ratings yet

- Power Graph and Exchange Property For Resolving Sets: U. Ali, G. Abbas, S. A. BokharyDocument9 pagesPower Graph and Exchange Property For Resolving Sets: U. Ali, G. Abbas, S. A. BokharykoyotovNo ratings yet

- Constraint SatDocument57 pagesConstraint SatJetlin C PNo ratings yet

- Reference BookDocument3 pagesReference BookPham ThanhNo ratings yet

- AI42001 Practice 2Document4 pagesAI42001 Practice 2rohan kalidindiNo ratings yet

- CS F211 DSA Handout 2024Document3 pagesCS F211 DSA Handout 2024f20212358No ratings yet

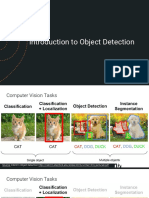

- Introduction To Object DetectionDocument24 pagesIntroduction To Object Detectioni notNo ratings yet

- Feature Extraction Gabor FiltersDocument2 pagesFeature Extraction Gabor FiltersZeeshan AslamNo ratings yet

- Image Processing/Communications-MATLAB: Code TitleDocument7 pagesImage Processing/Communications-MATLAB: Code Titlebabu surendraNo ratings yet

- Particle Swarm Optimization: Technique, System and ChallengesDocument9 pagesParticle Swarm Optimization: Technique, System and ChallengesMary MorseNo ratings yet

- NeetCode 150 - A List by Amoghmc - LeetCodeDocument1 pageNeetCode 150 - A List by Amoghmc - LeetCodepunit patelNo ratings yet

- Linear Programming QuizDocument1 pageLinear Programming QuizFarhana SabrinNo ratings yet

- Unit II: Computer ArithmeticDocument23 pagesUnit II: Computer ArithmeticMeenakshi PatelNo ratings yet

- Chapter2 Limitations of RNNDocument29 pagesChapter2 Limitations of RNNMohamedChiheb BenChaabaneNo ratings yet

- En Bucket SortDocument2 pagesEn Bucket SortJorman RinconNo ratings yet

- Algo PPT of Bits WilpDocument62 pagesAlgo PPT of Bits WilpAkash KumarNo ratings yet

- אלגוריתמים- הרצאה 7 - Single Source Shortest Path ProblemDocument5 pagesאלגוריתמים- הרצאה 7 - Single Source Shortest Path ProblemRonNo ratings yet

- Data Structures: Unit III - Part 4 Bca, NGMCDocument10 pagesData Structures: Unit III - Part 4 Bca, NGMCSanthosh kumar KNo ratings yet

- PCM, Differential Coding, DPCM, DM, ADPCM - Ze-Nian Li and Mark SDocument13 pagesPCM, Differential Coding, DPCM, DM, ADPCM - Ze-Nian Li and Mark SAkshay GireeshNo ratings yet

- Vu SolvedDocument60 pagesVu SolvedImranShaheenNo ratings yet

- DPCMDocument13 pagesDPCM20ELB355 MOHAMMAD ESAAMNo ratings yet

- List of Metaphor-Based MetaheuristicsDocument5 pagesList of Metaphor-Based Metaheuristicsredix pereiraNo ratings yet

- Expand Function in Legendre Polynomials On The Interval - 11 - Mathematics Stack ExchangeDocument3 pagesExpand Function in Legendre Polynomials On The Interval - 11 - Mathematics Stack ExchangeArup AbinashNo ratings yet

- CS251 Unit4 SlidesDocument127 pagesCS251 Unit4 SlidesChiranth M GowdaNo ratings yet

- ECE 3040 Lecture 6: Programming Examples: © Prof. Mohamad HassounDocument17 pagesECE 3040 Lecture 6: Programming Examples: © Prof. Mohamad HassounKen ZhengNo ratings yet

- JNTUA B Tech 2018 3 1 Sup R15 ECE 15A04502 Digital Communication SystemsDocument1 pageJNTUA B Tech 2018 3 1 Sup R15 ECE 15A04502 Digital Communication SystemsHarsha NerlapalleNo ratings yet

- 3 DTFTDocument20 pages3 DTFTBomber KillerNo ratings yet

- Tutorial Sheet of Computer Algorithms (BCA)Document3 pagesTutorial Sheet of Computer Algorithms (BCA)xiayoNo ratings yet

- Chapter 2Document22 pagesChapter 2bekemaNo ratings yet

- Prediction of Brain Stroke Using Machine LearningDocument8 pagesPrediction of Brain Stroke Using Machine Learningmanan1873.be22No ratings yet

- Section 3 Newton Divided-Difference Interpolating PolynomialsDocument43 pagesSection 3 Newton Divided-Difference Interpolating PolynomialsShawn GonzalesNo ratings yet

- HW 4a Solns PDFDocument7 pagesHW 4a Solns PDFAshwin ShreyasNo ratings yet

- A Brief Introduction To Sigma Delta ConversionDocument7 pagesA Brief Introduction To Sigma Delta ConversionraphaelcuecaNo ratings yet